Related Research Articles

Reinforcement learning (RL) is an interdisciplinary area of machine learning and optimal control concerned with how an intelligent agent should take actions in a dynamic environment in order to maximize a reward signal. Reinforcement learning is one of the three basic machine learning paradigms, alongside supervised learning and unsupervised learning.

A* is a graph traversal and pathfinding algorithm that is used in many fields of computer science due to its completeness, optimality, and optimal efficiency. Given a weighted graph, a source node and a goal node, the algorithm finds the shortest path from source to goal.
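As a rough illustration of the search described above, the following minimal sketch runs A* on a small weighted graph. The adjacency-dictionary format, the function name `a_star`, and the `heuristic` callback are assumptions made for this example. The returned path is only guaranteed to be shortest when the heuristic never overestimates the remaining cost.

```python
import heapq

def a_star(graph, source, goal, heuristic):
    """Return (path, cost) from source to goal, or (None, inf) if unreachable.

    graph: dict mapping node -> list of (neighbor, edge_weight) pairs (assumed format).
    heuristic: function node -> estimated remaining cost; must not overestimate.
    """
    open_heap = [(heuristic(source), 0.0, source)]  # entries are (f = g + h, g, node)
    best_cost = {source: 0.0}
    parent = {source: None}
    while open_heap:
        _, cost, node = heapq.heappop(open_heap)
        if cost > best_cost[node]:
            continue  # stale heap entry; a cheaper route to this node was found later
        if node == goal:
            path = []
            while node is not None:  # walk parent links back to the source
                path.append(node)
                node = parent[node]
            return path[::-1], cost
        for neighbor, weight in graph.get(node, []):
            new_cost = cost + weight
            if new_cost < best_cost.get(neighbor, float("inf")):
                best_cost[neighbor] = new_cost
                parent[neighbor] = node
                heapq.heappush(open_heap, (new_cost + heuristic(neighbor), new_cost, neighbor))
    return None, float("inf")
```

With a heuristic that always returns zero, this sketch reduces to Dijkstra's algorithm.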

Simultaneous localization and mapping (SLAM) is the computational problem of constructing or updating a map of an unknown environment while simultaneously keeping track of an agent's location within it. Although this initially appears to be a chicken-and-egg problem, several algorithms are known to solve it, at least approximately, in tractable time for certain environments. Popular approximate solution methods include the particle filter, extended Kalman filter, covariance intersection, and GraphSLAM. SLAM algorithms are based on concepts in computational geometry and computer vision, and are used in robot navigation, robotic mapping, and odometry for virtual reality or augmented reality.

Trajectory optimization is the process of designing a trajectory that minimizes some measure of performance while satisfying a set of constraints. Generally speaking, trajectory optimization is a technique for computing an open-loop solution to an optimal control problem. It is often used for systems where computing the full closed-loop solution is not required, impractical, or impossible. If a trajectory optimization problem can be solved at a rate given by the inverse of the Lipschitz constant, then it can be used iteratively to generate a closed-loop solution in the sense of Carathéodory. If only the first step of the trajectory is executed for an infinite-horizon problem, then this is known as Model Predictive Control (MPC).

Motion planning, also called path planning, is the computational problem of finding a sequence of valid configurations that moves an object from a source to a destination. The term is used in computational geometry, computer animation, robotics, and computer games.

Obstacle avoidance, in robotics, is a critical aspect of autonomous navigation and control systems. It is the capability of a robot or an autonomous system/machine to detect and circumvent obstacles in its path to reach a predefined destination. This technology plays a pivotal role in various fields, including industrial automation, self-driving cars, drones, and even space exploration. Obstacle avoidance enables robots to operate safely and efficiently in dynamic and complex environments, reducing the risk of collisions and damage.

A navigation function is usually a function of position, velocity, acceleration, and time that is used to plan robot trajectories through an environment. Generally, the goal of a navigation function is to create feasible, safe paths that avoid obstacles while allowing a robot to move from its starting configuration to its goal configuration.

The Euclidean shortest path problem is a problem in computational geometry: given a set of polyhedral obstacles in a Euclidean space, and two points, find the shortest path between the points that does not intersect any of the obstacles.

Neurorobotics is the combined study of neuroscience, robotics, and artificial intelligence. It is the science and technology of embodied autonomous neural systems. Neural systems include brain-inspired algorithms, computational models of biological neural networks, and actual biological systems. Such neural systems can be embodied in machines with mechanical or any other form of physical actuation. This includes robots and prosthetic or wearable systems but also, at smaller scales, micro-machines and, at larger scales, furniture and infrastructure.

A rapidly exploring random tree (RRT) is an algorithm designed to efficiently search nonconvex, high-dimensional spaces by randomly building a space-filling tree. The tree is constructed incrementally from samples drawn randomly from the search space and is inherently biased to grow towards large unsearched areas of the problem. RRTs were developed by Steven M. LaValle and James J. Kuffner Jr. They easily handle problems with obstacles and differential constraints and have been widely used in autonomous robotic motion planning.
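The incremental construction described above can be sketched for a 2D point robot among circular obstacles. This is a simplified illustration under stated assumptions, not LaValle and Kuffner's reference implementation: the function names, the circular-obstacle model, the goal-bias parameter, and the fixed step size are all choices made for this example, and differential constraints are ignored.

```python
import math
import random

def segment_hits_circle(p, q, center, radius):
    """Return True if the segment p-q comes within `radius` of `center`."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        t = 0.0
    else:
        # Parameter of the point on the segment closest to the circle center.
        t = ((center[0] - p[0]) * dx + (center[1] - p[1]) * dy) / length_sq
        t = max(0.0, min(1.0, t))
    nearest = (p[0] + t * dx, p[1] + t * dy)
    return math.dist(nearest, center) <= radius

def rrt(start, goal, obstacles, bounds, step=0.5, goal_bias=0.1, max_iters=5000, seed=0):
    """Grow a tree from start; return a list of points ending at goal, or None.

    obstacles: list of ((cx, cy), radius); bounds: ((xmin, xmax), (ymin, ymax)).
    """
    rng = random.Random(seed)
    (xmin, xmax), (ymin, ymax) = bounds
    parent = {start: None}  # tree stored as child -> parent links
    for _ in range(max_iters):
        # Occasionally sample the goal itself so the tree is pulled toward it.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(xmin, xmax), rng.uniform(ymin, ymax))
        nearest = min(parent, key=lambda node: math.dist(node, sample))
        distance = math.dist(nearest, sample)
        if distance == 0:
            continue
        if distance <= step:
            new = sample
        else:
            scale = step / distance  # move at most `step` toward the sample
            new = (nearest[0] + scale * (sample[0] - nearest[0]),
                   nearest[1] + scale * (sample[1] - nearest[1]))
        if new in parent:
            continue
        if any(segment_hits_circle(nearest, new, c, r) for c, r in obstacles):
            continue  # discard extensions that cross an obstacle
        parent[new] = nearest
        if new == goal:
            path = []
            node = goal
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
    return None
```

The linear scan for the nearest node keeps the sketch short; practical planners typically use a spatial index such as a k-d tree for that step.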

The probabilistic roadmap planner is a motion planning algorithm in robotics, which solves the problem of determining a path between a starting configuration of the robot and a goal configuration while avoiding collisions.

D* is any one of three related incremental search algorithms: the original D*, Focused D*, and D* Lite.

In robotics, Vector Field Histogram (VFH) is a real-time motion planning algorithm proposed by Johann Borenstein and Yoram Koren in 1991. The VFH utilizes a statistical representation of the robot's environment through the so-called histogram grid, and therefore places great emphasis on dealing with uncertainty from sensor and modeling errors. Unlike other obstacle avoidance algorithms, VFH takes into account the dynamics and shape of the robot, and returns steering commands specific to the platform. While considered a local path planner, i.e., not designed for global path optimality, the VFH has been shown to produce near-optimal paths.

Robotics is the interdisciplinary study and practice of the design, construction, operation, and use of robots.

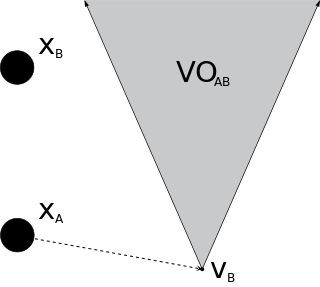

In robotics and motion planning, a velocity obstacle, commonly abbreviated VO, is the set of all velocities of a robot that will result in a collision with another robot at some moment in time, assuming that the other robot maintains its current velocity. If the robot chooses a velocity inside the velocity obstacle, then the two robots will eventually collide; if it chooses a velocity outside the velocity obstacle, such a collision is guaranteed not to occur.
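The membership test implied by this definition can be sketched for two disc-shaped robots in the plane. The disc model, the function name `in_velocity_obstacle`, and the `combined_radius` parameter (the sum of the two radii) are assumptions made for this example. A candidate velocity lies in the velocity obstacle when the relative motion brings the robots' centers closer than the combined radius at some future time.

```python
def in_velocity_obstacle(pos_a, vel_a, pos_b, vel_b, combined_radius):
    """Return True if robot A, moving at vel_a, will collide with robot B,
    assuming B keeps vel_b and both robots are discs (an assumed model)."""
    # Position of B relative to A, and velocity of A relative to B.
    px, py = pos_b[0] - pos_a[0], pos_b[1] - pos_a[1]
    vx, vy = vel_a[0] - vel_b[0], vel_a[1] - vel_b[1]
    dist_sq = px * px + py * py
    if dist_sq <= combined_radius ** 2:
        return True  # already overlapping
    closing = px * vx + py * vy
    if closing <= 0:
        return False  # relative motion is not toward B, so the gap never shrinks
    speed_sq = vx * vx + vy * vy
    # Squared distance between centers at the moment of closest approach.
    miss_sq = dist_sq - closing * closing / speed_sq
    return miss_sq < combined_radius ** 2
```

A planner could call such a test on each candidate velocity and keep only those for which it returns `False`.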

Any-angle path planning algorithms are pathfinding algorithms that search for a Euclidean shortest path between two points on a grid map while allowing the turns in the path to have any angle. The result is a path that cuts directly through open areas and has relatively few turns. More traditional pathfinding algorithms such as A* either lack in performance or produce jagged, indirect paths.

Oussama Khatib is a roboticist and a professor of computer science at Stanford University, and a Fellow of the IEEE. He is credited with seminal work in areas ranging from robot motion planning and control and human-friendly robot design to haptic interaction and human motion synthesis. The emphasis of his work has been on developing theories, algorithms, and technologies that control robot systems using models of their physical dynamics. These dynamic models are used to derive optimal controllers for complex robots that interact with the environment in real time.

The Robotics Collaborative Technology Alliance (R-CTA) was a research program initiated and sponsored by the US Army Research Laboratory. The purpose was to "bring together government, industrial, and academic institutions to address research and development required to enable the deployment of future military unmanned ground vehicle systems ranging in size from man-portables to ground combat vehicles." Collaborative Technology and Research Alliances was a term for partnerships between Army laboratories and centers, private industry and academia for performing research and technology development intended to benefit the US Army. The partnerships were funded by the US Army.

The problem of Multi-Agent Pathfinding (MAPF) is an instance of multi-agent planning and consists in the computation of collision-free paths for a group of agents from their location to an assigned target. It is an optimization problem, since the aim is to find those paths that optimize a given objective function, usually defined as the number of time steps until all agents reach their goal cells. MAPF is the multi-agent generalization of the pathfinding problem, and it is closely related to the shortest path problem in the context of graph theory.
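To make the objective and the collision-free requirement concrete, the following sketch evaluates a candidate MAPF solution. The representation is an assumption for this example: each path is a list of grid cells indexed by time step, and an agent is taken to wait at its final cell after arriving. The function names are hypothetical. Two common conflict types are checked: two agents occupying the same cell at the same step, and two agents swapping cells between consecutive steps.

```python
from itertools import combinations

def position_at(path, t):
    """Cell occupied at time step t; the agent waits at its last cell after arrival."""
    return path[min(t, len(path) - 1)]

def makespan(paths):
    """Number of time steps until every agent has reached its goal cell."""
    return max(len(path) for path in paths) - 1

def first_conflict(paths):
    """Return (kind, agent_i, agent_j, t) for the earliest conflict, or None."""
    for t in range(makespan(paths) + 1):
        for i, j in combinations(range(len(paths)), 2):
            a_now, b_now = position_at(paths[i], t), position_at(paths[j], t)
            if a_now == b_now:
                return ("vertex", i, j, t)  # same cell at the same step
            a_next, b_next = position_at(paths[i], t + 1), position_at(paths[j], t + 1)
            if a_next == b_now and b_next == a_now:
                return ("edge", i, j, t)  # agents swap cells between t and t + 1
    return None
```

A set of paths is a valid solution under these assumptions when `first_conflict` returns `None`, and `makespan` then gives the objective value described above.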

Linear-quadratic regulator rapidly exploring random tree (LQR-RRT) is a sampling-based algorithm for kinodynamic planning. A solver produces random actions that form a funnel in the state space. The generated tree is the action sequence that satisfies the cost function. The method requires that a prediction model, based on differential equations, is available to simulate the physical system. It is an extension of the rapidly exploring random tree, a widely used approach to motion planning.
