In number theory, an arithmetic, arithmetical, or number-theoretic function is generally any function f(n) whose domain is the positive integers and whose range is a subset of the complex numbers. Hardy & Wright include in their definition the requirement that an arithmetical function "expresses some arithmetical property of n". There is a larger class of number-theoretic functions that do not fit this definition, for example, the prime-counting functions. This article provides links to functions of both classes.
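As a small illustration of a number-theoretic function from the second class mentioned above, the sketch below evaluates the prime-counting function π(n), the number of primes not exceeding n. It uses a sieve of Eratosthenes. The name `prime_count` is hypothetical, and the sieve is only one convenient way to compute π(n).

```python
def prime_count(n: int) -> int:
    """Return pi(n), the number of primes p <= n, using a sieve of Eratosthenes."""
    if n < 2:
        return 0
    is_prime = [True] * (n + 1)
    is_prime[0] = is_prime[1] = False
    p = 2
    while p * p <= n:
        if is_prime[p]:
            # Every multiple of p from p*p upward is composite.
            for multiple in range(p * p, n + 1, p):
                is_prime[multiple] = False
        p += 1
    # True counts as 1, so the sum is the number of primes found.
    return sum(is_prime)


print([prime_count(n) for n in (1, 10, 100)])  # [0, 4, 25]
```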

In calculus, the chain rule is a formula that expresses the derivative of the composition of two differentiable functions f and g in terms of the derivatives of f and g. More precisely, if $h = f \circ g$ is the function such that $h(x) = f(g(x))$ for every x, then the chain rule is, in Lagrange's notation,

$$h'(x) = f'(g(x))\,g'(x).$$
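A quick numerical check of the chain rule can make it concrete. The sketch below uses the hypothetical example $f = \sin$ and $g(x) = x^2$. It compares $f'(g(x))\,g'(x)$ against a central-difference estimate of the derivative of the composition. The step size `h=1e-5` is an arbitrary choice.

```python
import math


def central_difference(func, x, h=1e-5):
    """Numerical derivative (func(x + h) - func(x - h)) / (2h)."""
    return (func(x + h) - func(x - h)) / (2 * h)


# Hypothetical example: f = sin, g(x) = x**2, composite(x) = f(g(x)).
def g(x):
    return x ** 2


def g_prime(x):
    return 2 * x


def composite(x):
    return math.sin(g(x))


def chain_rule_derivative(x):
    # f'(g(x)) * g'(x), with f' = cos
    return math.cos(g(x)) * g_prime(x)


x0 = 1.3
print(chain_rule_derivative(x0), central_difference(composite, x0))
```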

In numerical analysis, the condition number of a function measures how much the output value of the function can change for a small change in the input argument. This is used to measure how sensitive a function is to changes or errors in the input, and how much error in the output results from an error in the input. Very frequently, one is solving the inverse problem: given $f(x) = y$, one is solving for x, and thus the condition number of the (local) inverse must be used.
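For a differentiable scalar function, one standard measure is the relative condition number $\kappa(x) = \left| x f'(x) / f(x) \right|$. The paragraph above does not state this formula, so the sketch assumes it. The helper name and the two example functions are illustrative choices.

```python
import math


def relative_condition_number(f, df, x):
    """Relative condition number |x * f'(x) / f(x)| of a differentiable f at x (f(x) != 0)."""
    return abs(x * df(x) / f(x))


# sqrt is well-conditioned: kappa = 1/2 for every x > 0.
print(relative_condition_number(math.sqrt, lambda x: 0.5 / math.sqrt(x), 4.0))  # 0.5

# log is ill-conditioned near x = 1, where kappa = 1 / |log x| grows without bound.
print(relative_condition_number(math.log, lambda x: 1.0 / x, 1.001))
```

A large κ means a small relative error in x can be amplified into a much larger relative error in f(x).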

In computing, floating-point arithmetic (FP) is arithmetic that represents subsets of real numbers using an integer with a fixed precision, called the significand, scaled by an integer exponent of a fixed base. Numbers of this form are called floating-point numbers. For example, 12.345 is a floating-point number in base ten with five digits of precision:

$$12.345 = 12345 \times 10^{-3}$$
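Python's standard library exposes both decompositions directly, which makes the significand/exponent split easy to inspect. `Decimal.as_tuple()` yields the base-ten digits and exponent. `math.frexp` splits a binary double into a fraction in [0.5, 1) and a power of two. The variable names below are illustrative.

```python
import math
from decimal import Decimal

# Base ten: 12.345 as sign, significand digits and exponent.
sign, digits, exponent = Decimal("12.345").as_tuple()
significand = int("".join(map(str, digits)))
print(significand, exponent)  # 12345 -3
assert significand * Decimal(10) ** exponent == Decimal("12.345")

# Base two: the double nearest to 12.345 written as m * 2**e with 0.5 <= m < 1.
m, e = math.frexp(12.345)
print(m, e)
assert m * 2 ** e == 12.345
```

Note that the binary version only approximates 12.345, because that value has no finite base-two expansion.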

In mathematical analysis, the Dirac delta function, also known as the unit impulse, is a generalized function on the real numbers, whose value is zero everywhere except at zero, and whose integral over the entire real line is equal to one. Since no function has this property, modeling the delta "function" rigorously involves the use of limits or, as is common in mathematics, measure theory and the theory of distributions.
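The limit approach can be sketched numerically with a "nascent delta". Here it is a Gaussian of width ε with unit integral, one common choice among several and an assumption of this sketch. As ε shrinks, integrating $f(x)\,\delta_\varepsilon(x)$ tends to $f(0)$. The helper names and the integration window of ±10ε are hypothetical choices.

```python
import math


def gaussian_delta(x, eps):
    """Gaussian of width eps with unit integral; it concentrates at 0 as eps -> 0."""
    return math.exp(-x * x / (2 * eps * eps)) / (eps * math.sqrt(2 * math.pi))


def smeared_value(f, eps, n=10_000):
    """Midpoint-rule approximation of the integral of f(x) * gaussian_delta(x, eps)
    over [-10 eps, 10 eps], outside of which the Gaussian is negligible."""
    a, b = -10 * eps, 10 * eps
    dx = (b - a) / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * dx
        total += f(x) * gaussian_delta(x, eps)
    return total * dx


for eps in (0.5, 0.1, 0.01):
    # Tends to cos(0) = 1 as eps shrinks (for f = cos the exact value is exp(-eps**2 / 2)).
    print(eps, smeared_value(math.cos, eps))
```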

A finite difference is a mathematical expression of the form f(x + b) − f(x + a). If a finite difference is divided by b − a, one gets a difference quotient. The approximation of derivatives by finite differences plays a central role in finite difference methods for the numerical solution of differential equations, especially boundary value problems.
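The difference quotient above maps directly to code. Choosing (a, b) = (0, h), (−h, 0) or (−h, h) gives the forward, backward and central approximations to f'(x). The sketch below applies them to exp at 0, where the exact derivative is 1. The step `h = 1e-5` is an arbitrary illustrative value.

```python
import math


def difference_quotient(f, x, a, b):
    """(f(x + b) - f(x + a)) / (b - a): a finite difference divided by b - a."""
    return (f(x + b) - f(x + a)) / (b - a)


h = 1e-5
forward = difference_quotient(math.exp, 0.0, 0.0, h)    # a = 0,  b = h
backward = difference_quotient(math.exp, 0.0, -h, 0.0)  # a = -h, b = 0
central = difference_quotient(math.exp, 0.0, -h, h)     # a = -h, b = h

# exp'(0) = 1; the central choice has O(h**2) truncation error, the one-sided ones O(h).
print(forward - 1, backward - 1, central - 1)
```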

The Zeeman effect is the splitting of a spectral line into several components in the presence of a static magnetic field. It is named after the Dutch physicist Pieter Zeeman, who discovered it in 1896 and received a Nobel Prize for this discovery. It is analogous to the Stark effect, the splitting of a spectral line into several components in the presence of an electric field. As with the Stark effect, transitions between different components generally have different intensities, and some are entirely forbidden, as governed by the selection rules.

In mathematics, a Riemann sum is a certain kind of approximation of an integral by a finite sum. It is named after the nineteenth-century German mathematician Bernhard Riemann. One very common application is in numerical integration, i.e., approximating the area under the graph of a function or curve, where it is also known as the rectangle rule. It can also be used to approximate the length of curves and other quantities.
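A minimal sketch of the rectangle rule, assuming equal-width subintervals, is shown below. The only difference between the left, midpoint and right variants is where each rectangle's height is sampled. The test integrand x² on [0, 1] (exact integral 1/3) is an illustrative choice.

```python
def riemann_sum(f, a, b, n, rule="midpoint"):
    """Approximate the integral of f over [a, b] with n rectangles of equal width."""
    width = (b - a) / n
    # Fraction of the way across each subinterval at which f is sampled.
    offset = {"left": 0.0, "midpoint": 0.5, "right": 1.0}[rule]
    return sum(f(a + (i + offset) * width) for i in range(n)) * width


def square(x):
    return x * x  # exact integral over [0, 1] is 1/3


for rule in ("left", "midpoint", "right"):
    print(rule, riemann_sum(square, 0.0, 1.0, 1000, rule))
```

For an increasing integrand such as x², the left sum underestimates and the right sum overestimates the integral. The midpoint sum is typically far more accurate than either.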

In vector calculus, Green's theorem relates a line integral around a simple closed curve C to a double integral over the plane region D bounded by C. It is the two-dimensional special case of Stokes' theorem.

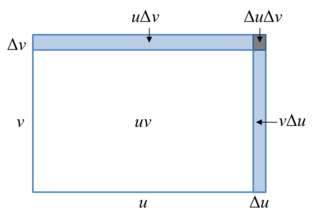

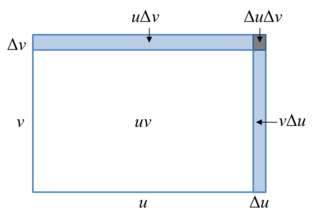
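For a counterclockwise curve C and a field with components L and M, Green's theorem states $\oint_C (L\,dx + M\,dy) = \iint_D \left(\frac{\partial M}{\partial x} - \frac{\partial L}{\partial y}\right) dA$. The sketch below checks this numerically on the unit disk. It uses the hypothetical field L = −y³, M = x³, for which both sides equal 3π/2. The midpoint rule and the grid sizes are arbitrary illustrative choices.

```python
import math


# Hypothetical example field with L = -y**3 and M = x**3.
def L(x, y):
    return -y ** 3


def M(x, y):
    return x ** 3


def curl_z(x, y):
    # dM/dx - dL/dy for the field above
    return 3 * x ** 2 + 3 * y ** 2


def line_integral_unit_circle(n=2000):
    """Midpoint rule for the integral of L dx + M dy around the unit circle,
    traversed counterclockwise via x = cos t, y = sin t."""
    dt = 2 * math.pi / n
    total = 0.0
    for i in range(n):
        t = (i + 0.5) * dt
        x, y = math.cos(t), math.sin(t)
        dx_dt, dy_dt = -math.sin(t), math.cos(t)
        total += (L(x, y) * dx_dt + M(x, y) * dy_dt) * dt
    return total


def double_integral_unit_disk(n_r=1000, n_theta=100):
    """Midpoint rule in polar coordinates; the extra factor r is the Jacobian."""
    dr, dtheta = 1.0 / n_r, 2 * math.pi / n_theta
    total = 0.0
    for i in range(n_r):
        r = (i + 0.5) * dr
        for j in range(n_theta):
            theta = (j + 0.5) * dtheta
            total += curl_z(r * math.cos(theta), r * math.sin(theta)) * r * dr * dtheta
    return total


# Both sides should approach 3 * pi / 2.
print(line_integral_unit_circle(), double_integral_unit_disk(), 3 * math.pi / 2)
```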

In calculus, the product rule is a formula used to find the derivatives of products of two or more functions. For two functions, it may be stated in Lagrange's notation as

$$(u \cdot v)' = u' \cdot v + u \cdot v'$$
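The two-function product rule can be checked numerically in the same spirit. The hypothetical choice below is u = sin and v = exp. The rule's u'v + uv' is compared with a central-difference derivative of the product, using an arbitrary step size.

```python
import math


def central_difference(func, x, h=1e-5):
    """Numerical derivative (func(x + h) - func(x - h)) / (2h)."""
    return (func(x + h) - func(x - h)) / (2 * h)


u, du = math.sin, math.cos  # u = sin, u' = cos
v, dv = math.exp, math.exp  # v = exp, v' = exp


def product(x):
    return u(x) * v(x)


x0 = 0.7
product_rule = du(x0) * v(x0) + u(x0) * dv(x0)  # u'v + uv'
print(product_rule, central_difference(product, x0))
```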

In physics, an operator is a function over a space of physical states onto another space of physical states. The simplest example of the utility of operators is the study of symmetry, which makes them useful tools in classical mechanics. Operators are even more important in quantum mechanics, where they form an intrinsic part of the formulation of the theory.

In the calculus of variations, a field of mathematical analysis, the functional derivative relates a change in a functional to a change in a function on which the functional depends.

Quantization, in mathematics and digital signal processing, is the process of mapping input values from a large set to output values in a (countable) smaller set, often with a finite number of elements. Rounding and truncation are typical examples of quantization processes. Quantization is involved to some degree in nearly all digital signal processing, as the process of representing a signal in digital form ordinarily involves rounding. Quantization also forms the core of essentially all lossy compression algorithms.
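The two quantization processes named above can be sketched as uniform quantizers with a hypothetical step size of 0.25. Rounding maps each sample to the nearest multiple of the step (ties rounded up here). Truncation is implemented as a floor toward negative infinity, which is one common convention and an assumption of this sketch. The test signal, a sampled sine, is illustrative only.

```python
import math


def quantize_round(x, step):
    """Map x to the nearest multiple of step (ties round up)."""
    return step * math.floor(x / step + 0.5)


def quantize_truncate(x, step):
    """Map x to the largest multiple of step not exceeding x (floor truncation)."""
    return step * math.floor(x / step)


step = 0.25
samples = [math.sin(0.1 * k) for k in range(100)]
rounded = [quantize_round(s, step) for s in samples]
truncated = [quantize_truncate(s, step) for s in samples]

# A continuum of inputs collapses onto a handful of output levels.
print(sorted(set(rounded)))
print(max(abs(s - q) for s, q in zip(samples, rounded)))  # at most step / 2
```

Rounding keeps the error within half a step. Truncation has error in [0, step), so it is always biased in one direction.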

Significant figures, also referred to as significant digits or sig figs, are the digits of a number written in positional notation that are both reliable and necessary to convey a particular quantity. When presenting the outcome of a measurement, if the number of digits exceeds what the measurement instrument can resolve, only the digits within the instrument's resolution are dependable and therefore considered significant.
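Reporting a value to a given number of significant figures amounts to rounding at a position fixed by the leading digit. The sketch below locates that digit with `math.log10` and then delegates to Python's built-in `round`. The helper name `round_sig` is hypothetical. Because `round` works on the stored binary value with ties-to-even, borderline cases may differ from hand rounding of the decimal literal.

```python
import math


def round_sig(x, sig):
    """Round x to `sig` significant figures."""
    if x == 0:
        return 0.0
    # Position of the leading digit: 10**exponent <= |x| < 10**(exponent + 1).
    exponent = math.floor(math.log10(abs(x)))
    return round(x, sig - 1 - exponent)


print(round_sig(12.345, 3), round_sig(0.0012345, 2), round_sig(98765.0, 2))  # 12.3 0.0012 99000.0
```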

A directional derivative is a concept in multivariable calculus that measures the rate at which a function changes in a particular direction at a given point.
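For a differentiable function and a unit vector u, the directional derivative equals the dot product ∇f · u. This fact is standard but not stated above. The sketch below compares that formula with a central-difference estimate along u. The function f(x, y) = x²y, the point (1, 2) and the direction (3/5, 4/5) are hypothetical examples. For them the exact value is 4 · 0.6 + 1 · 0.8 = 3.2.

```python
def f(x, y):
    return x * x * y


def grad_f(x, y):
    return (2 * x * y, x * x)


def directional_derivative_numeric(func, point, direction, h=1e-6):
    """Central-difference estimate of the rate of change of func along a unit direction."""
    (x, y), (ux, uy) = point, direction
    return (func(x + h * ux, y + h * uy) - func(x - h * ux, y - h * uy)) / (2 * h)


point = (1.0, 2.0)
u = (3 / 5, 4 / 5)  # unit vector, since (3/5)**2 + (4/5)**2 = 1
gx, gy = grad_f(*point)
via_gradient = gx * u[0] + gy * u[1]
print(via_gradient, directional_derivative_numeric(f, point, u))  # both approximately 3.2
```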

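In quantum mechanics, the canonical commutation relation is the fundamental relation between canonical conjugate quantities. For example,

$$[\hat{x}, \hat{p}_x] = i\hbar\,\mathbb{I}$$

between the position operator $\hat{x}$ and the momentum operator $\hat{p}_x$ in the x direction of a point particle in one dimension. Here $[\hat{x}, \hat{p}_x] = \hat{x}\hat{p}_x - \hat{p}_x\hat{x}$ is the commutator, i is the imaginary unit, ħ is the reduced Planck constant, and $\mathbb{I}$ is the identity operator.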
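In the position representation, $\hat{x}$ multiplies a wavefunction by x and $\hat{p}_x = -i\hbar\,d/dx$. Applying the commutator to a test function should therefore return iħ times that function. The sketch below checks this with a central-difference derivative. It assumes natural units with ħ = 1 and uses a hypothetical Gaussian test wavefunction.

```python
import math

HBAR = 1.0  # assumption: natural units with hbar = 1


def derivative(func, x, h=1e-5):
    """Central-difference derivative of func at x."""
    return (func(x + h) - func(x - h)) / (2 * h)


def momentum(func):
    """Position-representation momentum operator: (p psi)(x) = -i hbar psi'(x)."""
    return lambda x: -1j * HBAR * derivative(func, x)


def position(func):
    """Position operator: (x psi)(x) = x psi(x)."""
    return lambda x: x * func(x)


def commutator_xp(func):
    """([x, p] psi)(x) = x (p psi)(x) - p (x psi)(x)."""
    return lambda x: position(momentum(func))(x) - momentum(position(func))(x)


def psi(x):
    return math.exp(-x * x)  # hypothetical test wavefunction


x0 = 0.4
print(commutator_xp(psi)(x0), 1j * HBAR * psi(x0))  # should agree: [x, p] = i hbar
```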

In mathematics, the total derivative of a function f at a point is the best linear approximation near this point of the function with respect to its arguments. Unlike partial derivatives, the total derivative approximates the function with respect to all of its arguments, not just a single one. In many situations, this is the same as considering all partial derivatives simultaneously. The term "total derivative" is primarily used when f is a function of several variables, because when f is a function of a single variable, the total derivative is the same as the ordinary derivative of the function.
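For a map from R² to R², the total derivative at a point is represented by the Jacobian matrix of partial derivatives. "Best linear approximation" means that f(a + h) − f(a) − J h shrinks faster than h. The sketch below estimates J by central differences and inspects that remainder. The map f(x, y) = (xy, x + y²), the point and the step are hypothetical examples.

```python
def f(x, y):
    """Hypothetical example map from R^2 to R^2."""
    return (x * y, x + y * y)


def jacobian_numeric(func, point, h=1e-6):
    """Matrix of partial derivatives via central differences (rows: outputs, columns: inputs)."""
    columns = []
    for j in range(len(point)):
        plus = list(point)
        plus[j] += h
        minus = list(point)
        minus[j] -= h
        columns.append([(p - m) / (2 * h) for p, m in zip(func(*plus), func(*minus))])
    # Transpose so that rows index outputs and columns index inputs.
    return [list(row) for row in zip(*columns)]


a = (1.0, 2.0)
J = jacobian_numeric(f, a)  # exact: [[y, x], [1, 2y]] = [[2, 1], [1, 4]] at a
step = (1e-3, -2e-3)
linear = [fa + sum(J[i][j] * step[j] for j in range(2)) for i, fa in enumerate(f(*a))]
actual = f(a[0] + step[0], a[1] + step[1])
# The remainder is second order in the step: here (step_x * step_y, step_y**2).
remainder = [act - lin for act, lin in zip(actual, linear)]
print(J, remainder)
```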

Flory–Huggins solution theory is a lattice model of the thermodynamics of polymer solutions which takes account of the great dissimilarity in molecular sizes in adapting the usual expression for the entropy of mixing. The result is an equation for the Gibbs free energy change for mixing a polymer with a solvent. Although it makes simplifying assumptions, it generates useful results for interpreting experiments.
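The resulting expression is usually written $\Delta G_{\text{mix}} = RT\,[\,n_1 \ln\phi_1 + n_2 \ln\phi_2 + n_1 \phi_2 \chi\,]$. Here $n_1, n_2$ are the moles of solvent and polymer, $\phi_1, \phi_2$ their volume fractions on the lattice, and χ the interaction parameter. The sketch below evaluates it under one common textbook convention, which is an assumption: each polymer chain occupies x lattice sites and each solvent molecule one. The function and parameter names are hypothetical.

```python
import math

R = 8.314462618  # molar gas constant, J / (mol K)


def flory_huggins_gibbs(n_solvent, n_polymer, x, chi, temperature):
    """Gibbs free energy of mixing (J) in the Flory-Huggins lattice model,
    R T [n1 ln(phi1) + n2 ln(phi2) + n1 phi2 chi], where each polymer chain
    occupies x lattice sites and each solvent molecule occupies one."""
    sites = n_solvent + x * n_polymer  # moles of lattice sites
    phi_solvent = n_solvent / sites
    phi_polymer = x * n_polymer / sites
    return R * temperature * (
        n_solvent * math.log(phi_solvent)
        + n_polymer * math.log(phi_polymer)
        + n_solvent * phi_polymer * chi
    )


# Hypothetical mixture: 1 mol solvent with 0.01 mol of chains of 100 segments, athermal (chi = 0).
print(flory_huggins_gibbs(n_solvent=1.0, n_polymer=0.01, x=100, chi=0.0, temperature=298.15))
```

With χ = 0 only the entropic terms remain, so ΔG_mix is negative. A sufficiently positive χ can make mixing unfavourable.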

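In calculus, the differential represents the principal part of the change in a function y = f(x) with respect to changes in the independent variable. The differential dy is defined by

$$dy = f'(x)\,dx,$$

where f'(x) is the derivative of f with respect to x, and dx is an additional real variable (so that dy is a function of x and dx).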

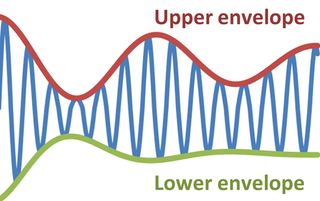

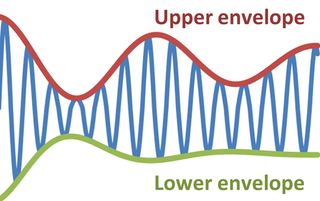
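The phrase "principal part of the change" can be made concrete by comparing dy with the actual change Δy = f(x + dx) − f(x). For the hypothetical example y = x³, the gap is exactly 3x·dx² + dx³, which vanishes faster than dx.

```python
def f(x):
    return x ** 3


def f_prime(x):
    return 3 * x ** 2


x, dx = 2.0, 0.01
dy = f_prime(x) * dx        # differential: linear (principal) part of the change
delta_y = f(x + dx) - f(x)  # actual change in y
# For y = x**3 the gap is 3*x*dx**2 + dx**3, here 6.01e-4.
print(dy, delta_y, delta_y - dy)
```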

In physics and engineering, the envelope of an oscillating signal is a smooth curve outlining its extremes. The envelope thus generalizes the concept of a constant amplitude into an instantaneous amplitude. The figure illustrates a modulated sine wave varying between an upper envelope and a lower envelope. The envelope function may be a function of time, space, angle, or indeed of any variable.
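One common way to compute the instantaneous amplitude is to take the magnitude of the analytic signal, built via the Hilbert transform. The paragraph does not prescribe a method, so this choice is an assumption. The sketch below builds the analytic signal with a plain O(N²) discrete Fourier transform to stay dependency-free, and is illustrative only. It then recovers the envelope 1 + 0.5 cos(2π·2t) of a hypothetical amplitude-modulated carrier with 20 cycles per window.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform, O(N^2)."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * math.pi * f * k / n) for k in range(n)) for f in range(n)]


def idft(spectrum):
    """Naive inverse discrete Fourier transform, O(N^2)."""
    n = len(spectrum)
    return [sum(spectrum[f] * cmath.exp(2j * math.pi * f * k / n) for f in range(n)) / n
            for k in range(n)]


def envelope(signal):
    """Magnitude of the analytic signal: double positive frequencies, drop negative ones."""
    n = len(signal)
    weights = [0.0] * n
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        for f in range(1, n // 2):
            weights[f] = 2.0
    else:
        for f in range(1, (n + 1) // 2):
            weights[f] = 2.0
    analytic = idft([s * w for s, w in zip(dft(signal), weights)])
    return [abs(z) for z in analytic]


n = 200
t = [k / n for k in range(n)]
expected = [1 + 0.5 * math.cos(2 * math.pi * 2 * tk) for tk in t]  # slow modulation
signal = [a * math.cos(2 * math.pi * 20 * tk) for a, tk in zip(expected, t)]
env = envelope(signal)
print(max(abs(e - a) for e, a in zip(env, expected)))
```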