In probability theory and statistics, the cumulative distribution function (CDF) of a real-valued random variable $X$, or just distribution function of $X$, evaluated at $x$, is the probability that $X$ will take a value less than or equal to $x$.
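
As a minimal sketch of this definition, the empirical CDF of a finite sample estimates the probability that the variable is at most a given value by the fraction of observations that are at most that value. The function name `empirical_cdf` and the sample data are illustrative assumptions, not part of the definition above.

```python
from bisect import bisect_right


def empirical_cdf(samples):
    """Return a function F with F(x) = fraction of samples <= x."""
    ordered = sorted(samples)
    n = len(ordered)

    def cdf(x):
        # bisect_right gives the number of sorted values that are <= x
        return bisect_right(ordered, x) / n

    return cdf


# Hypothetical sample, chosen only for illustration
F = empirical_cdf([1, 2, 2, 3, 5])
print(F(0), F(2), F(5))
```

The resulting function is non-decreasing, equals 0 below the smallest observation, and equals 1 from the largest observation onward, which mirrors the behaviour of a true CDF.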

In mathematics, the Laplace transform, named after Pierre-Simon Laplace, is an integral transform that converts a function of a real variable (usually $t$) to a function of a complex variable (usually $s$).
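
For concreteness, the transform can be written out as follows. This sketch assumes the one-sided (unilateral) form and uses the symbols $f$, $F$, and a real constant $a$ purely for illustration.

```latex
% One-sided Laplace transform of f (assumed convention)
F(s) = \mathcal{L}\{f\}(s) = \int_0^{\infty} f(t)\, e^{-st}\, dt

% Worked example with f(t) = e^{-at}, a real (illustrative choice)
\mathcal{L}\{e^{-at}\}(s)
  = \int_0^{\infty} e^{-(s+a)t}\, dt
  = \frac{1}{s+a},
  \qquad \operatorname{Re}(s) > -a
```

The condition on $\operatorname{Re}(s)$ is what makes the integral converge, so the transform is defined only on part of the complex plane.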

In mathematics, the concept of a measure is a generalization and formalization of geometrical measures and other common notions, such as magnitude, mass, and probability of events. These seemingly distinct concepts have many similarities and can often be treated together in a single mathematical context. Measures are foundational in probability theory and integration theory, and can be generalized to assume negative values, as with electrical charge. Far-reaching generalizations of measure are widely used in quantum physics and physics in general.

In mathematics, the branch of real analysis studies the behavior of real numbers, sequences and series of real numbers, and real functions. Some particular properties of real-valued sequences and functions that real analysis studies include convergence, limits, continuity, smoothness, differentiability and integrability.

In mathematics, the extended real number system is obtained from the real number system $\mathbb{R}$ by adding two infinity elements, $+\infty$ and $-\infty$, where the infinities are treated as actual numbers. It is useful in describing the algebra on infinities and the various limiting behaviors in calculus and mathematical analysis, especially in the theory of measure and integration. The extended real number system is denoted $\overline{\mathbb{R}}$ or $[-\infty, +\infty]$ or $\mathbb{R} \cup \{-\infty, +\infty\}$. It is the Dedekind–MacNeille completion of the real numbers.

In the mathematical field of real analysis, the monotone convergence theorem is any of a number of related theorems proving the good convergence behaviour of monotonic sequences, i.e. sequences that are non-increasing, or non-decreasing. In its simplest form, it says that a non-decreasing bounded-above sequence of real numbers converges to its smallest upper bound, its supremum. Likewise, a non-increasing bounded-below sequence converges to its largest lower bound, its infimum. In particular, infinite sums of non-negative numbers converge to the supremum of the partial sums if and only if the partial sums are bounded.
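
A small numerical sketch can illustrate the last statement. It assumes the geometric series with terms $1/2^k$ as an example (this choice, and the helper name `partial_sums`, are not from the text above): the partial sums are non-decreasing, bounded above by 1, and approach that bound.

```python
def partial_sums(terms):
    """Return the running totals of a sequence of numbers."""
    total = 0.0
    sums = []
    for t in terms:
        total += t
        sums.append(total)
    return sums


# Non-negative terms 1/2, 1/4, ..., 1/2**30 (illustrative choice)
terms = [1 / 2**k for k in range(1, 31)]
sums = partial_sums(terms)

# Each partial sum equals 1 - 1/2**n, so the gap to the supremum 1 shrinks
for n in (1, 5, 10, 30):
    print(n, sums[n - 1], 1.0 - sums[n - 1])
```

A finite computation like this only suggests the limit; the theorem is what guarantees that a bounded, non-decreasing sequence actually converges to its supremum.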

In signal processing, the power spectrum of a continuous-time signal describes the distribution of power into the frequency components composing that signal. According to Fourier analysis, any physical signal can be decomposed into a number of discrete frequencies, or a spectrum of frequencies over a continuous range. The statistical average of any sort of signal, as analyzed in terms of its frequency content, is called its spectrum.

In mathematics, the limit of a function is a fundamental concept in calculus and analysis concerning the behavior of that function near a particular input which may or may not be in the domain of the function.
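
To illustrate a limit at an input outside the domain, the following sketch evaluates $\sin(x)/x$ at inputs approaching 0, where the expression itself is undefined. The choice of function and the name `f` are assumptions made for illustration.

```python
import math


def f(x):
    """sin(x)/x, which is not defined at x = 0."""
    return math.sin(x) / x


# Evaluate at 0.1, 0.01, ..., 0.00001 and watch the values approach 1
inputs = [10.0**-k for k in range(1, 6)]
values = [f(x) for x in inputs]

for x, y in zip(inputs, values):
    print(x, y)
```

Calling `f(0.0)` raises `ZeroDivisionError`, yet the values near 0 settle toward 1, which is the limit of the function at that point.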

In mathematics, Fatou's lemma establishes an inequality relating the Lebesgue integral of the limit inferior of a sequence of functions to the limit inferior of integrals of these functions. The lemma is named after Pierre Fatou.
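
Written out, the inequality takes the following form. The notation is an assumption for illustration: $(f_n)$ is a sequence of non-negative measurable functions on a measure space $(X, \Sigma, \mu)$.

```latex
\int_X \liminf_{n\to\infty} f_n \, d\mu
  \;\le\;
\liminf_{n\to\infty} \int_X f_n \, d\mu

% The inequality can be strict. With Lebesgue measure on [0,1] and
% f_n = n \cdot \mathbf{1}_{(0,1/n)}, the left side is 0 and the right side is 1:
\int_0^1 \liminf_{n\to\infty} f_n \, dx = 0
  \;<\;
1 = \liminf_{n\to\infty} \int_0^1 f_n \, dx
```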

In mathematics, the Radon–Nikodym theorem is a result in measure theory that expresses the relationship between two measures defined on the same measurable space. A measure is a set function that assigns a consistent magnitude to the measurable subsets of a measurable space. Examples of a measure include area and volume, where the subsets are sets of points; or the probability of an event, which is a subset of possible outcomes within a wider probability space.

In measure theory, Lebesgue's dominated convergence theorem gives a mild sufficient condition under which limits and integrals of a sequence of functions can be interchanged. More technically, it says that if a sequence of functions is bounded in absolute value by an integrable function and is almost everywhere pointwise convergent to a function, then the sequence converges in $L^1$ to its pointwise limit, and in particular the integral of the limit is the limit of the integrals. Its power and utility are two of the primary theoretical advantages of Lebesgue integration over Riemann integration.
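
In symbols, the statement can be sketched as follows. The names $f_n$, $f$, $g$ and the measure space $(X, \Sigma, \mu)$ are notation assumed here for illustration.

```latex
|f_n(x)| \le g(x) \ \text{for all } n,
\qquad \int_X g \, d\mu < \infty,
\qquad f_n \to f \ \text{almost everywhere}

\;\Longrightarrow\;

\lim_{n\to\infty} \int_X |f_n - f| \, d\mu = 0
\quad\text{and}\quad
\lim_{n\to\infty} \int_X f_n \, d\mu = \int_X f \, d\mu
```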

In calculus, it is usually possible to compute the limit of the sum, difference, product, quotient or power of two functions by taking the corresponding combination of the separate limits of each respective function. For example,

In mathematics, the oscillation of a function or a sequence is a number that quantifies how much that sequence or function varies between its extreme values as it approaches infinity or a point. As is the case with limits, there are several definitions that put the intuitive concept into a form suitable for a mathematical treatment: oscillation of a sequence of real numbers, oscillation of a real-valued function at a point, and oscillation of a function on an interval.

In mathematics, mixing is an abstract concept originating from physics: the attempt to describe the irreversible thermodynamic process of mixing in the everyday world, e.g. mixing paint, mixing drinks, or industrial mixing.

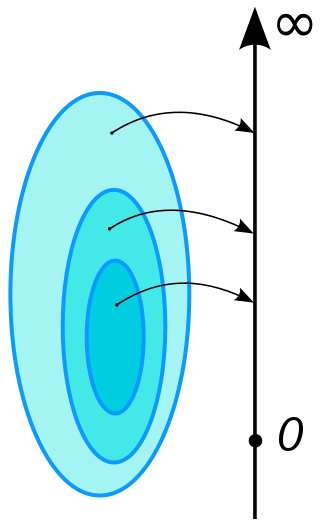

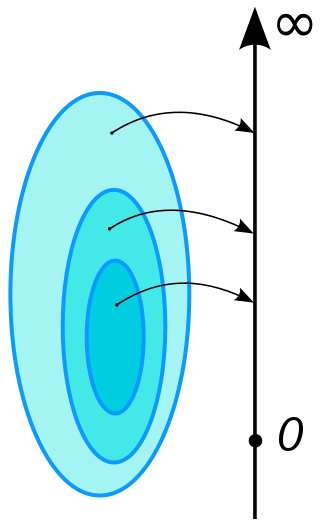

In statistics, a consistent estimator or asymptotically consistent estimator is an estimator—a rule for computing estimates of a parameter θ0—having the property that as the number of data points used increases indefinitely, the resulting sequence of estimates converges in probability to θ0. This means that the distributions of the estimates become more and more concentrated near the true value of the parameter being estimated, so that the probability of the estimator being arbitrarily close to θ0 converges to one.
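
A small simulation can illustrate this concentration. The sketch below assumes the parameter is the mean of a normal distribution and the estimator is the sample mean; the true value 2.0, the seed, and the name `sample_mean` are all illustrative assumptions.

```python
import random


def sample_mean(n, mu=2.0, sigma=1.0, seed=0):
    """Sample mean of n draws from a normal distribution N(mu, sigma**2)."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    return sum(rng.gauss(mu, sigma) for _ in range(n)) / n


# Estimates of the (assumed) true mean 2.0 from increasingly large samples
estimates = {n: sample_mean(n) for n in (10, 1_000, 100_000)}

for n, est in estimates.items():
    print(n, est, abs(est - 2.0))
```

A single run does not prove consistency, and the error need not shrink at every step; the property concerns the probability of a large error tending to zero as the sample size grows.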

In probability theory and mathematical physics, a random matrix is a matrix-valued random variable—that is, a matrix in which some or all of its entries are sampled randomly from a probability distribution. Random matrix theory (RMT) is the study of properties of random matrices, often as they become large. RMT provides techniques like mean-field theory, diagrammatic methods, the cavity method, or the replica method to compute quantities like traces, spectral densities, or scalar products between eigenvectors. Many physical phenomena, such as the spectrum of nuclei of heavy atoms, the thermal conductivity of a lattice, or the emergence of quantum chaos, can be modeled mathematically as problems concerning large, random matrices.

In mathematics, uniform integrability is an important concept in real analysis, functional analysis and measure theory, and plays a vital role in the theory of martingales.

In mathematical analysis, the Hardy–Littlewood Tauberian theorem is a Tauberian theorem relating the asymptotics of the partial sums of a series with the asymptotics of its Abel summation. In this form, the theorem asserts that if the sequence is such that there is an asymptotic equivalence

The uncertainty theory invented by Baoding Liu is a branch of mathematics based on normality, monotonicity, self-duality, countable subadditivity, and product measure axioms.

In mathematics, a limit is the value that a function approaches as the input approaches some value. Limits are essential to calculus and mathematical analysis, and are used to define continuity, derivatives, and integrals.