In mathematics, a piecewise linear or segmented function is a real-valued function of a real variable, whose graph is composed of straight-line segments.

In digital image processing and computer vision, image segmentation is the process of partitioning a digital image into multiple image segments, also known as image regions or image objects. The goal of segmentation is to simplify and/or change the representation of an image into something that is more meaningful and easier to analyze. Image segmentation is typically used to locate objects and boundaries in images. More precisely, image segmentation is the process of assigning a label to every pixel in an image such that pixels with the same label share certain characteristics.

In computer science, cycle detection or cycle finding is the algorithmic problem of finding a cycle in a sequence of iterated function values.

In mathematics and computing, the Levenberg–Marquardt algorithm, also known as the damped least-squares (DLS) method, is used to solve non-linear least squares problems. These minimization problems arise especially in least squares curve fitting. The LMA interpolates between the Gauss–Newton algorithm (GNA) and the method of gradient descent. The LMA is more robust than the GNA, which means that in many cases it finds a solution even if it starts very far off the final minimum. For well-behaved functions and reasonable starting parameters, the LMA tends to be slower than the GNA. LMA can also be viewed as Gauss–Newton using a trust region approach.

Interior-point methods are algorithms for solving linear and non-linear convex optimization problems. IPMs combine two advantages of previously-known algorithms:

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets. Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard.

In numerical analysis, the Crank–Nicolson method is a finite difference method used for numerically solving the heat equation and similar partial differential equations. It is a second-order method in time. It is implicit in time, can be written as an implicit Runge–Kutta method, and it is numerically stable. The method was developed by John Crank and Phyllis Nicolson in the 1940s.

Geometry processing is an area of research that uses concepts from applied mathematics, computer science and engineering to design efficient algorithms for the acquisition, reconstruction, analysis, manipulation, simulation and transmission of complex 3D models. As the name implies, many of the concepts, data structures, and algorithms are directly analogous to signal processing and image processing. For example, where image smoothing might convolve an intensity signal with a blur kernel formed using the Laplace operator, geometric smoothing might be achieved by convolving a surface geometry with a blur kernel formed using the Laplace-Beltrami operator.

In mathematical optimization theory, duality or the duality principle is the principle that optimization problems may be viewed from either of two perspectives, the primal problem or the dual problem. If the primal is a minimization problem then the dual is a maximization problem. Any feasible solution to the primal (minimization) problem is at least as large as any feasible solution to the dual (maximization) problem. Therefore, the solution to the primal is an upper bound to the solution of the dual, and the solution of the dual is a lower bound to the solution of the primal. This fact is called weak duality.

Numerical continuation is a method of computing approximate solutions of a system of parameterized nonlinear equations,

Compressed sensing is a signal processing technique for efficiently acquiring and reconstructing a signal, by finding solutions to underdetermined linear systems. This is based on the principle that, through optimization, the sparsity of a signal can be exploited to recover it from far fewer samples than required by the Nyquist–Shannon sampling theorem. There are two conditions under which recovery is possible. The first one is sparsity, which requires the signal to be sparse in some domain. The second one is incoherence, which is applied through the isometric property, which is sufficient for sparse signals. Compressed sensing has applications in, for example, MRI where the incoherence condition is typically satisfied.

Smoothing splines are function estimates, , obtained from a set of noisy observations of the target , in order to balance a measure of goodness of fit of to with a derivative based measure of the smoothness of . They provide a means for smoothing noisy data. The most familiar example is the cubic smoothing spline, but there are many other possibilities, including for the case where is a vector quantity.

The Bregman method is an iterative algorithm to solve certain convex optimization problems involving regularization. The original version is due to Lev M. Bregman, who published it in 1967.

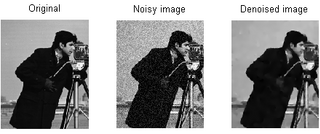

In signal processing, particularly image processing, total variation denoising, also known as total variation regularization or total variation filtering, is a noise removal process (filter). It is based on the principle that signals with excessive and possibly spurious detail have high total variation, that is, the integral of the image gradient magnitude is high. According to this principle, reducing the total variation of the signal—subject to it being a close match to the original signal—removes unwanted detail whilst preserving important details such as edges. The concept was pioneered by L. I. Rudin, S. Osher, and E. Fatemi in 1992 and so is today known as the ROF model.

In applied mathematics and statistics, basis pursuit denoising (BPDN) refers to a mathematical optimization problem of the form

Augmented Lagrangian methods are a certain class of algorithms for solving constrained optimization problems. They have similarities to penalty methods in that they replace a constrained optimization problem by a series of unconstrained problems and add a penalty term to the objective, but the augmented Lagrangian method adds yet another term designed to mimic a Lagrange multiplier. The augmented Lagrangian is related to, but not identical with, the method of Lagrange multipliers.

The in-crowd algorithm is a numerical method for solving basis pursuit denoising quickly; faster than any other algorithm for large, sparse problems. This algorithm is an active set method, which minimizes iteratively sub-problems of the global basis pursuit denoising:

The Harrow–Hassidim–Lloyd algorithm or HHL algorithm is a quantum algorithm for numerically solving a system of linear equations, designed by Aram Harrow, Avinatan Hassidim, and Seth Lloyd. The algorithm estimates the result of a scalar measurement on the solution vector to a given linear system of equations.

Sparse dictionary learning is a representation learning method which aims at finding a sparse representation of the input data in the form of a linear combination of basic elements as well as those basic elements themselves. These elements are called atoms and they compose a dictionary. Atoms in the dictionary are not required to be orthogonal, and they may be an over-complete spanning set. This problem setup also allows the dimensionality of the signals being represented to be higher than the one of the signals being observed. The above two properties lead to having seemingly redundant atoms that allow multiple representations of the same signal but also provide an improvement in sparsity and flexibility of the representation.

In mathematics, the Chambolle-Pock algorithm is an algorithm used to solve convex optimization problems. It was introduced by Antonin Chambolle and Thomas Pock in 2011 and has since become a widely used method in various fields, including image processing, computer vision, and signal processing.