This article needs additional citations for verification. (April 2012)

A technical failure is an unwanted error in a technology-based system.

Causes include fatigue and attenuation distortion.

In computer network communications, HTTP 404 (also called 404 Not Found, 404 error, page not found, or file not found) is a Hypertext Transfer Protocol (HTTP) standard response code. It indicates that the browser was able to communicate with a given server, but the server could not find what was requested. The code may also be used when a server does not wish to disclose whether it has the requested information.
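The distinction between "the server answered with 404" and "the server could not be reached at all" can be sketched in Python with the standard library. This is a minimal illustration, not a definitive client: the function name `check_url` and its `opener` parameter are hypothetical and exist only so the logic can be exercised without a real network.

```python
from http import HTTPStatus
from urllib.error import HTTPError, URLError
from urllib.request import urlopen


def check_url(url: str, opener=urlopen) -> str:
    """Classify the outcome of fetching `url`.

    `opener` is a hypothetical hook (defaults to urllib's urlopen) so the
    function can be tried with a stand-in instead of a live server.
    """
    try:
        with opener(url) as response:
            return f"ok ({response.status})"
    except HTTPError as err:
        # The server responded, so communication itself succeeded.
        if err.code == HTTPStatus.NOT_FOUND:
            return "not found (404)"
        return f"http error ({err.code})"
    except URLError:
        # No HTTP response was received: the server was not reachable.
        return "unreachable"
```

`HTTPError` is caught before `URLError` because it is a subclass of it; reversing the order would hide the 404 case behind the "unreachable" branch.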

Data integrity is the maintenance of, and the assurance of, data accuracy and consistency over its entire life cycle. It is a critical aspect of the design, implementation, and usage of any system that stores, processes, or retrieves data. The term is broad in scope and may have widely different meanings depending on the specific context, even under the same general umbrella of computing. It is at times used as a proxy term for data quality, while data validation is a prerequisite for data integrity.
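One narrow, common way to detect that stored data has changed is to compare a cryptographic digest taken when the data was written with one taken when it is read back. The sketch below assumes SHA-256 as the digest; the helper names `fingerprint` and `is_intact` are illustrative only, and a digest check covers accidental corruption rather than the full scope of data integrity described above.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_intact(data: bytes, expected_digest: str) -> bool:
    """Report whether `data` still matches the digest recorded earlier."""
    return fingerprint(data) == expected_digest


# Record a digest when the data is stored, then verify it on retrieval.
record = b"account=42;balance=100.00"
stored_digest = fingerprint(record)

unchanged = is_intact(record, stored_digest)
tampered = is_intact(b"account=42;balance=900.00", stored_digest)
```

Here `unchanged` is true and `tampered` is false, because any change to the bytes produces a different digest.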

An error is an inaccurate or incorrect action, thought, or judgement.

Accuracy and precision are two measures of observational error. Accuracy is how close a given set of measurements is to its true value. Precision is how close the measurements are to each other.
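The difference can be made concrete with a small Python sketch. It assumes one simple quantification of each idea: accuracy as the distance between the mean measurement and the true value, and precision as the sample standard deviation. The function names and the sample data are invented for illustration.

```python
from statistics import mean, stdev


def accuracy_error(measurements, true_value):
    """Distance of the mean measurement from the true value (smaller is more accurate)."""
    return abs(mean(measurements) - true_value)


def precision_spread(measurements):
    """Sample standard deviation of the measurements (smaller is more precise)."""
    return stdev(measurements)


TRUE_VALUE = 10.0

# Tightly clustered but far from the true value: precise, not accurate.
clustered_off_target = [12.0, 12.1, 11.9]

# Centered on the true value but widely scattered: accurate, not precise.
scattered_on_target = [9.0, 11.0, 10.0]

for name, data in [("clustered", clustered_off_target),
                   ("scattered", scattered_on_target)]:
    print(name, accuracy_error(data, TRUE_VALUE), precision_spread(data))
```

The clustered set has the smaller spread but the larger error, while the scattered set shows the opposite, so neither measure implies the other.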

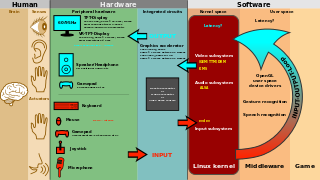

In the industrial design field of human–computer interaction, a user interface (UI) is the space where interactions between humans and machines occur. The goal of this interaction is to allow effective operation and control of the machine from the human end, while the machine simultaneously feeds back information that aids the operators' decision-making process. Examples of this broad concept of user interfaces include the interactive aspects of computer operating systems, hand tools, heavy machinery operator controls and process controls. The design considerations applicable when creating user interfaces are related to, or involve such disciplines as, ergonomics and psychology.

The Human Development Index (HDI) is a statistical composite index of life expectancy, education, and per capita income indicators, which is used to rank countries into four tiers of human development. A country scores a higher HDI when its lifespan is higher, its education level is higher, and its gross national income (GNI, PPP) per capita is higher. It was developed by Pakistani economist Mahbub ul-Haq and was further used to measure a country's development by the United Nations Development Programme (UNDP)'s Human Development Report Office.

A reverse Turing test is a Turing test in which failure suggests that the test-taker is human, while success suggests the test-taker is automated.

A superintelligence is a hypothetical agent that possesses intelligence surpassing that of the brightest and most gifted human minds. "Superintelligence" may also refer to a property of problem-solving systems whether or not these high-level intellectual competencies are embodied in agents that act in the world. A superintelligence may or may not be created by an intelligence explosion and associated with a technological singularity.

Business software is any software or set of computer programs used by business users to perform various business functions. These business applications are used to increase productivity, measure productivity, and perform other business functions accurately.

An error-tolerant design is one that does not unduly penalize user or human errors. It is the human equivalent of fault-tolerant design, which allows equipment to continue functioning in the presence of hardware faults; an example is a "limp-in" mode for an automobile electronics unit that is employed if a component such as the oxygen sensor fails.

In the field of human factors and ergonomics, human reliability is the probability that a human performs a task to a sufficient standard. Reliability of humans can be affected by many factors such as age, physical health, mental state, attitude, emotions, personal propensity for certain mistakes, and cognitive biases.

Poka-yoke is a Japanese term that means "mistake-proofing" or "error prevention". It is also sometimes referred to as a forcing function or a behavior-shaping constraint.

User interface (UI) design or user interface engineering is the design of user interfaces for machines and software, such as computers, home appliances, mobile devices, and other electronic devices, with the focus on maximizing usability and the user experience. In computer or software design, user interface (UI) design primarily focuses on information architecture. It is the process of building interfaces that clearly communicate to the user what's important. UI design refers to graphical user interfaces and other forms of interface design. The goal of user interface design is to make the user's interaction as simple and efficient as possible, in terms of accomplishing user goals. User-centered design is typically accomplished through the execution of modern design thinking which involves empathizing with the target audience, defining a problem statement, ideating potential solutions, prototyping wireframes, and testing prototypes in order to refine final interface mockups.

In aviation, pilot error generally refers to an action or decision made by a pilot that is a substantial contributing factor leading to an aviation accident. It also includes a pilot's failure to make a correct decision or take proper action. Errors are intentional actions that fail to achieve their intended outcomes. The Chicago Convention defines the term "accident" as "an occurrence associated with the operation of an aircraft [...] in which [...] a person is fatally or seriously injured [...] except when the injuries are [...] inflicted by other persons." Hence the definition of "pilot error" does not include deliberate crashing.

A user error is an error made by the human user of a complex system, usually a computer system, in interacting with it. Although the term is sometimes used by human–computer interaction practitioners, the more formal term human error is used in the context of human reliability.

An error message is the information displayed when an unforeseen problem occurs, usually on a computer or other device. Modern operating systems with graphical user interfaces often display error messages using dialog boxes. Error messages are used when user intervention is required, to indicate that a desired operation has failed, or to relay important warnings. Error messages are seen widely throughout computing, and are part of every operating system or computer hardware device. The proper design of error messages is an important topic in usability and other fields of human–computer interaction.

Human error is an action that has been done but that was "not intended by the actor; not desired by a set of rules or an external observer; or that led the task or system outside its acceptable limits". Human error has been cited as a primary cause and contributing factor in disasters and accidents in industries as diverse as nuclear power, aviation, space exploration, and medicine. Prevention of human error is generally seen as a major contributor to reliability and safety of (complex) systems. Human error is one of the many contributing causes of risk events.

The Swiss cheese model of accident causation is a model used in risk analysis and risk management. It likens human systems to multiple slices of Swiss cheese, which has randomly placed and sized holes in each slice, stacked side by side, in which the risk of a threat becoming a reality is mitigated by the differing layers and types of defenses which are "layered" behind each other. Therefore, in theory, lapses and weaknesses in one defense do not allow a risk to materialize, since other defenses also exist, to prevent a single point of failure.
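The layered-defense idea can be illustrated numerically. The sketch below makes a strong simplifying assumption that is not part of the model's definition: each layer fails independently, with a known probability that a threat finds a hole in it. Under that assumption, the chance that a threat passes every layer is the product of the per-layer probabilities. The function name and the example figures are hypothetical.

```python
from math import prod


def breach_probability(hole_probabilities):
    """Probability that a threat passes through every defensive layer.

    Assumes (for illustration only) that layers fail independently, so the
    per-layer probabilities of a hole lining up simply multiply.
    """
    return prod(hole_probabilities)


# Hypothetical figures: each layer alone lets 10% of threats through.
one_layer = breach_probability([0.1])
three_layers = breach_probability([0.1, 0.1, 0.1])

print(one_layer, three_layers)
```

With these made-up numbers, stacking three imperfect layers cuts the breach probability by roughly a factor of one hundred compared with a single layer. In real systems, failures are often correlated, so the benefit is usually smaller than the independent case suggests.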

Human factors are the physical or cognitive properties of individuals, or social behavior which is specific to humans, and which influence functioning of technological systems as well as human-environment equilibria. The safety of underwater diving operations can be improved by reducing the frequency of human error and the consequences when it does occur. Human error can be defined as an individual's deviation from acceptable or desirable practice which culminates in undesirable or unexpected results. Human factors include both the non-technical skills that enhance safety and the non-technical factors that contribute to undesirable incidents that put the diver at risk.

[Safety is] An active, adaptive process which involves making sense of the task in the context of the environment to successfully achieve explicit and implied goals, with the expectation that no harm or damage will occur. – G. Lock, 2022

Dive safety is primarily a function of four factors: the environment, equipment, individual diver performance and dive team performance. The water is a harsh and alien environment which can impose severe physical and psychological stress on a diver. The remaining factors must be controlled and coordinated so the diver can overcome the stresses imposed by the underwater environment and work safely. Diving equipment is crucial because it provides life support to the diver, but the majority of dive accidents are caused by individual diver panic and an associated degradation of the individual diver's performance. – M.A. Blumenberg, 1996

Automation bias is the propensity for humans to favor suggestions from automated decision-making systems and to ignore contradictory information from non-automated sources, even if it is correct. The concept stems from social psychology research that found a bias in human-human interaction: people assign more positive evaluations to decisions made by humans than to a neutral object. The same type of positivity bias has been found in human-automation interaction, where automated decisions are rated more positively than neutral. This has become a growing problem for decision making as intensive care units, nuclear power plants, and aircraft cockpits have increasingly integrated computerized system monitors and decision aids, largely to factor out possible human error. Errors of automation bias tend to occur when decision-making depends on computers or other automated aids and the human is in an observer role but still able to make decisions. Examples range from urgent matters like flying a plane on automatic pilot to such mundane matters as the use of spell-checking programs.