Latency, from a general point of view, is a time delay between the cause and the effect of some physical change in the system being observed. Lag, as it is known in gaming circles, refers to the latency between the input to a simulation and the visual or auditory response, often occurring because of network delay in online games.

The Transmission Control Protocol (TCP) is one of the main protocols of the Internet protocol suite. It originated in the initial network implementation in which it complemented the Internet Protocol (IP). Therefore, the entire suite is commonly referred to as TCP/IP. TCP provides reliable, ordered, and error-checked delivery of a stream of octets (bytes) between applications running on hosts communicating via an IP network. Major internet applications such as the World Wide Web, email, remote administration, and file transfer rely on TCP, which is part of the Transport Layer of the TCP/IP suite. SSL/TLS often runs on top of TCP.

In general terms, throughput is the rate of production or the rate at which something is processed.

Frame Relay is a standardized wide area network (WAN) technology that specifies the physical and data link layers of digital telecommunications channels using a packet switching methodology. Originally designed for transport across Integrated Services Digital Network (ISDN) infrastructure, it may be used today in the context of many other network interfaces.

Network topology is the arrangement of the elements of a communication network. Network topology can be used to define or describe the arrangement of various types of telecommunication networks, including command and control radio networks, industrial fieldbuses, and computer networks.
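As an illustration, a topology can be modeled as a set of undirected links between numbered nodes. The sketch below is only one possible representation; the function names `star`, `ring`, `full_mesh`, and `degrees` are hypothetical and chosen for this example.

```python
from itertools import combinations


def star(n):
    """Star topology: node 0 is the hub, every other node links only to it."""
    return {(0, i) for i in range(1, n)}


def ring(n):
    """Ring topology: each node links to its successor, the last to the first."""
    if n < 3:
        raise ValueError("a ring needs at least 3 nodes")
    return {tuple(sorted((i, (i + 1) % n))) for i in range(n)}


def full_mesh(n):
    """Full mesh: every pair of nodes is directly linked."""
    return set(combinations(range(n), 2))


def degrees(n, links):
    """Number of links attached to each node."""
    deg = [0] * n
    for a, b in links:
        deg[a] += 1
        deg[b] += 1
    return deg


if __name__ == "__main__":
    for name, build in (("star", star), ("ring", ring), ("full mesh", full_mesh)):
        links = build(5)
        print(name, "links:", len(links), "degrees:", degrees(5, links))
```

For n nodes, the star uses n - 1 links, the ring uses n, and the full mesh uses n(n - 1)/2, which shows how the arrangement alone determines cabling cost and how many neighbors each node has.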

Wormhole flow control, also called wormhole switching or wormhole routing, is a system of simple flow control in computer networking based on known fixed links. It is a subset of flow control methods called Flit-Buffer Flow Control.

A communication channel refers either to a physical transmission medium such as a wire, or to a logical connection over a multiplexed medium such as a radio channel in telecommunications and computer networking. A channel is used to convey an information signal, for example a digital bit stream, from one or several senders to one or several receivers. A channel has a certain capacity for transmitting information, often measured by its bandwidth in Hz or its data rate in bits per second.

In telecommunications, message switching involves messages routed in their entirety, one hop at a time. It evolved from circuit switching and was the precursor of packet switching.
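The effect of forwarding a whole message one hop at a time can be sketched with a simple delay model. The example below is an idealized comparison that assumes equal link rates on every hop and ignores propagation delay, queueing, and header overhead; the function names are hypothetical.

```python
def message_switching_delay(message_bits, link_rate_bps, hops):
    """Each hop must receive the entire message before forwarding it."""
    return hops * message_bits / link_rate_bps


def packet_switching_delay(message_bits, packet_bits, link_rate_bps, hops):
    """Packets are pipelined: while one packet crosses hop 2, the next crosses hop 1.

    Assumes the message splits evenly into packets of `packet_bits`.
    """
    if message_bits % packet_bits != 0:
        raise ValueError("this sketch assumes the message divides evenly into packets")
    packets = message_bits // packet_bits
    # The first packet needs `hops` transmissions; each later packet adds one more.
    return (packets + hops - 1) * packet_bits / link_rate_bps


if __name__ == "__main__":
    # Assumed values: an 8000-bit message over 3 hops at 1000 bit/s.
    print(message_switching_delay(8000, 1000, 3))       # whole message per hop
    print(packet_switching_delay(8000, 1000, 1000, 3))  # 1000-bit packets
```

Under these assumptions the message-switched transfer takes 24 seconds and the packet-switched one takes 10 seconds, because intermediate nodes can start forwarding before the whole message has arrived.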

Throughput of a network can be measured using various tools available on different platforms. This page explains the theory behind what these tools set out to measure and the issues regarding these measurements.

Network performance refers to measures of service quality of a network as seen by the customer.

In data communications, flow control is the process of managing the rate of data transmission between two nodes to prevent a fast sender from overwhelming a slow receiver. It provides a mechanism for the receiver to control the transmission speed, so that the receiving node is not overwhelmed with data from the transmitting node. Flow control should be distinguished from congestion control, which is used for controlling the flow of data when congestion has actually occurred. Flow control mechanisms can be classified by whether or not the receiving node sends feedback to the sending node.
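A feedback-based scheme can be sketched as a small simulation in which the receiver advertises its free buffer space and the sender never transmits more than that. This is a toy model, not any specific protocol; the function name, the tick-based timing, and the parameters are assumptions made for illustration.

```python
from collections import deque


def simulate_window_flow_control(total_packets, receiver_buffer, drain_per_tick):
    """Simulate a sender throttled by the receiver's advertised free buffer space.

    Returns (ticks_taken, max_buffer_occupancy).
    """
    if receiver_buffer <= 0 or drain_per_tick <= 0:
        raise ValueError("buffer size and drain rate must be positive")

    buffer = deque()
    sent = 0
    delivered = 0
    ticks = 0
    max_occupancy = 0

    while delivered < total_packets:
        ticks += 1
        # Feedback: the receiver advertises how many free slots it has.
        window = receiver_buffer - len(buffer)
        # The sender transmits at most `window` packets this tick.
        burst = min(window, total_packets - sent)
        for _ in range(burst):
            buffer.append(sent)
            sent += 1
        max_occupancy = max(max_occupancy, len(buffer))
        # The slow receiver processes only a few packets per tick.
        for _ in range(min(drain_per_tick, len(buffer))):
            buffer.popleft()
            delivered += 1

    return ticks, max_occupancy


if __name__ == "__main__":
    ticks, peak = simulate_window_flow_control(
        total_packets=10, receiver_buffer=4, drain_per_tick=2
    )
    print(f"finished in {ticks} ticks, peak buffer occupancy {peak}")
```

Because the sender is limited by the advertised window, the buffer occupancy never exceeds the receiver's capacity, however fast the sender could otherwise transmit.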

TCP tuning techniques adjust the network congestion avoidance parameters of Transmission Control Protocol (TCP) connections over high-bandwidth, high-latency networks. Well-tuned networks can perform up to 10 times faster in some cases. However, blindly following instructions without understanding their real consequences can hurt performance as well.
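One quantity that matters on high-bandwidth, high-latency paths is the bandwidth-delay product (BDP): the amount of data that must be in flight to keep the path full. The sketch below computes it, along with the throughput ceiling imposed by a given window size. The function names and the example link values are assumptions for illustration, not settings taken from any particular system.

```python
def bandwidth_delay_product_bytes(bandwidth_bps, rtt_s):
    """Bytes that must be unacknowledged in flight to keep the path full."""
    return bandwidth_bps * rtt_s / 8


def window_limited_throughput_bps(window_bytes, rtt_s):
    """Upper bound on throughput when at most `window_bytes` are in flight."""
    return window_bytes * 8 / rtt_s


if __name__ == "__main__":
    link_bps = 1e9  # assumed 1 Gbit/s path
    rtt_s = 0.1     # assumed 100 ms round-trip time

    bdp = bandwidth_delay_product_bytes(link_bps, rtt_s)
    print(f"BDP: {bdp / 1e6:.1f} MB")

    # 65535 bytes is the largest window without TCP window scaling.
    capped = window_limited_throughput_bps(65535, rtt_s)
    print(f"Throughput cap with a 65535-byte window: {capped / 1e6:.2f} Mbit/s")
```

With these example values the BDP is roughly 12.5 MB, while an unscaled 65535-byte window caps throughput at about 5 Mbit/s, which is why window and buffer sizes are a common tuning target on such paths.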

Packet loss occurs when one or more packets of data travelling across a computer network fail to reach their destination. Packet loss is caused either by errors in data transmission, typically across wireless networks, or by network congestion. Packet loss is measured as a percentage of packets lost with respect to packets sent.
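The measurement described above is a simple ratio. A minimal sketch, assuming the counts of sent and received packets are already known (the function name is hypothetical):

```python
def packet_loss_percent(packets_sent, packets_received):
    """Packets lost as a percentage of packets sent."""
    if packets_sent <= 0:
        raise ValueError("packets_sent must be positive")
    if not 0 <= packets_received <= packets_sent:
        raise ValueError("packets_received must be between 0 and packets_sent")
    lost = packets_sent - packets_received
    return 100.0 * lost / packets_sent


if __name__ == "__main__":
    # Example: 1000 packets sent, 990 arrived.
    print(f"{packet_loss_percent(1000, 990):.1f}% loss")
```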

A computer network is a set of computers sharing resources located on or provided by network nodes. The computers use common communication protocols over digital interconnections to communicate with each other. These interconnections are made up of telecommunication network technologies, based on physically wired, optical, and wireless radio-frequency methods that may be arranged in a variety of network topologies.

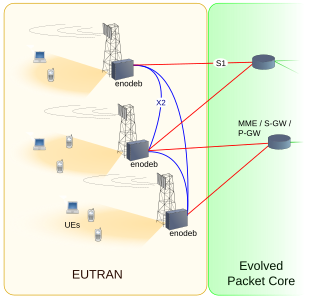

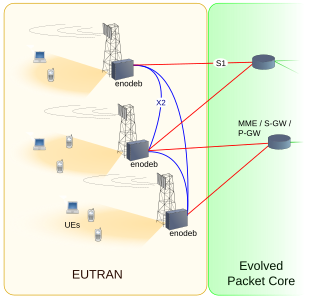

E-UTRA is the air interface of the 3rd Generation Partnership Project (3GPP) Long Term Evolution (LTE) upgrade path for mobile networks. The name is an acronym for Evolved Universal Mobile Telecommunications System (UMTS) Terrestrial Radio Access. Early drafts of the 3GPP LTE specification also called it the Evolved Universal Terrestrial Radio Access and referred to it as the 3GPP work item on Long Term Evolution. E-UTRAN, the initialism of Evolved UMTS Terrestrial Radio Access Network, is the combination of E-UTRA, user equipment (UE), and the E-UTRAN Node B or Evolved Node B (eNodeB).

In computer networks, goodput is the application-level throughput of a communication; i.e. the number of useful information bits delivered by the network to a certain destination per unit of time. The amount of data considered excludes protocol overhead bits as well as retransmitted data packets. This is related to the amount of time from the first bit of the first packet sent until the last bit of the last packet is delivered.
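The distinction between goodput and raw throughput can be sketched as follows. The `Transfer` class and its field names are hypothetical, and the byte counts in the example are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Transfer:
    payload_bytes: int        # useful application data delivered
    overhead_bytes: int       # protocol headers and similar overhead
    retransmitted_bytes: int  # data sent again after loss
    elapsed_s: float          # first bit sent until last bit delivered

    def __post_init__(self):
        if self.elapsed_s <= 0:
            raise ValueError("elapsed_s must be positive")

    def throughput_bps(self):
        """All bits carried by the network per second."""
        total = self.payload_bytes + self.overhead_bytes + self.retransmitted_bytes
        return total * 8 / self.elapsed_s

    def goodput_bps(self):
        """Only useful application bits per second."""
        return self.payload_bytes * 8 / self.elapsed_s


if __name__ == "__main__":
    t = Transfer(
        payload_bytes=1_000_000,
        overhead_bytes=40_000,
        retransmitted_bytes=60_000,
        elapsed_s=10.0,
    )
    print(f"throughput: {t.throughput_bps():.0f} bit/s")
    print(f"goodput:    {t.goodput_bps():.0f} bit/s")
```

Goodput is never higher than throughput over the same interval, since it counts only the payload and leaves out overhead and retransmissions.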

In capital markets, low latency is the use of algorithmic trading to react to market events faster than the competition to increase profitability of trades. For example, when executing arbitrage strategies, the opportunity to "arb" the market may only present itself for a few milliseconds before parity is achieved. As an indication of the value that clients place on latency, a large global investment bank stated in 2007 that every millisecond lost results in $100m per annum in lost opportunity.

ITU-T Y.156sam Ethernet Service Activation Test Methodology is a draft recommendation under study by the ITU-T describing a new testing methodology adapted to the multiservice reality of packet-based networks.

ITU-T Y.1564 is an Ethernet service activation test methodology, which is the new ITU-T standard for turning up, installing and troubleshooting Ethernet-based services. It is the only standard test methodology that allows for complete validation of Ethernet service-level agreements (SLAs) in a single test.

Time-Sensitive Networking (TSN) is a set of standards under development by the Time-Sensitive Networking task group of the IEEE 802.1 working group. The TSN task group was formed in November 2012 by renaming the existing Audio Video Bridging Task Group and continuing its work. The name changed as a result of the extension of the working area of the standardization group. The standards define mechanisms for the time-sensitive transmission of data over deterministic Ethernet networks.