Related Research Articles

In computing, floating-point arithmetic (FP) is arithmetic using formulaic representation of real numbers as an approximation to support a trade-off between range and precision. For this reason, floating-point computation is often found in systems which include very small and very large real numbers, which require fast processing times. A number is, in general, represented approximately to a fixed number of significant digits and scaled using an exponent in some fixed base; the base for the scaling is normally two, ten, or sixteen. A number that can be represented exactly is of the form $\text{significand} \times \text{base}^{\text{exponent}}$, where the significand and the exponent are integers and the base is an integer greater than or equal to two.
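As a concrete illustration of the significand-and-exponent representation, the following minimal sketch splits a Python float into two integers. It assumes that Python floats are IEEE 754 double-precision values with a 53-bit significand (true on common platforms, but an assumption here); the helper name `decompose` is hypothetical.

```python
import math
from fractions import Fraction


def decompose(x):
    """Return integers (m, e) such that x == m * 2**e exactly.

    Assumes x is a finite IEEE 754 double (53-bit significand).
    """
    frac, exp = math.frexp(x)   # x == frac * 2**exp, with 0.5 <= |frac| < 1
    m = int(frac * 2**53)       # scaling by 2**53 gives an exact integer
    return m, exp - 53


m, e = decompose(0.1)
# The stored value is exactly m * 2**e, which is close to 1/10 but not equal to it.
stored = Fraction(m) * Fraction(2) ** e
print(stored == Fraction(0.1), stored == Fraction(1, 10))
```

The decimal value 0.1 has no finite base-two expansion, so the float that stores it is only the nearest representable number of this form.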

In mathematics, an integral assigns numbers to functions in a way that can describe displacement, area, volume, and other concepts that arise by combining infinitesimal data. Integration is one of the two main operations of calculus, with its inverse operation, differentiation, being the other. Given a function $f$ of a real variable $x$ and an interval $[a, b]$ of the real line, the definite integral $\int_a^b f(x)\,dx$ can be interpreted informally as the signed area of the region bounded by the graph of $f$, the $x$-axis, and the vertical lines $x = a$ and $x = b$.

In mathematics, the logarithm is the inverse function to exponentiation. That means the logarithm of a given number $x$ is the exponent to which another fixed number, the base $b$, must be raised to produce that number $x$. In the simplest case, the logarithm counts the number of occurrences of the same factor in repeated multiplication; e.g., since $1000 = 10 \times 10 \times 10 = 10^3$, the "logarithm base 10" of 1000 is 3, or $\log_{10}(1000) = 3$. The logarithm of $x$ to base $b$ is denoted as $\log_b(x)$, or without parentheses, $\log_b x$, or even without the explicit base, $\log x$, when no confusion is possible, or when the base does not matter, such as in big O notation.

Numerical analysis is the study of algorithms that use numerical approximation for the problems of mathematical analysis. Numerical analysis naturally finds application in all fields of engineering and the physical sciences, but in the 21st century the life sciences, social sciences, medicine, business and even the arts have also adopted elements of scientific computation. The growth in computing power has revolutionized the use of realistic mathematical models in science and engineering, and subtle numerical analysis is required to implement these detailed models of the world. For example, ordinary differential equations appear in celestial mechanics; numerical linear algebra is important for data analysis; stochastic differential equations and Markov chains are essential in simulating living cells for medicine and biology.

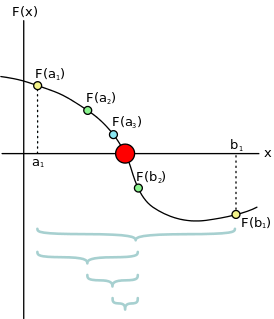

In numerical analysis, Newton's method, also known as the Newton–Raphson method, named after Isaac Newton and Joseph Raphson, is a root-finding algorithm which produces successively better approximations to the roots of a real-valued function. The most basic version starts with a single-variable function $f$ defined for a real variable $x$, the function's derivative $f'$, and an initial guess $x_0$ for a root of $f$. If the function satisfies sufficient assumptions and the initial guess is close, then $x_1 = x_0 - \frac{f(x_0)}{f'(x_0)}$ is a better approximation of the root than $x_0$.
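The basic iteration, which repeatedly replaces the current guess x by x − f(x)/f′(x), might be sketched as follows. This is only an illustration under assumptions not taken from the text: the function name `newton`, the stopping rule (step size below `tol`), and the iteration cap `max_iter` are all hypothetical choices.

```python
def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Approximate a root of f by Newton's iteration, starting from x0.

    df is the derivative of f. Stops when the last step is smaller than tol.
    """
    x = x0
    for _ in range(max_iter):
        dfx = df(x)
        if dfx == 0:
            raise ZeroDivisionError("derivative vanished; Newton step undefined")
        step = f(x) / dfx
        x -= step
        if abs(step) < tol:
            return x
    raise RuntimeError("no convergence within max_iter iterations")


# Example: the positive root of x^2 - 2, starting from the guess x0 = 1.
root = newton(lambda x: x * x - 2, lambda x: 2 * x, 1.0)
print(root)
```

A poor starting guess or a vanishing derivative can make the iteration fail, which is why the sketch raises an error rather than returning a possibly meaningless value.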

Mathematical optimization or mathematical programming is the selection of a best element from some set of available alternatives. Optimization problems of sorts arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries.

In mathematics and computing, a root-finding algorithm is an algorithm for finding zeroes, also called "roots", of continuous functions. A zero of a function f, from the real numbers to real numbers or from the complex numbers to the complex numbers, is a number x such that f(x) = 0. As, generally, the zeroes of a function cannot be computed exactly nor expressed in closed form, root-finding algorithms provide approximations to zeroes, expressed either as floating point numbers or as small isolating intervals, or disks for complex roots.

In analysis, numerical integration comprises a broad family of algorithms for calculating the numerical value of a definite integral, and by extension, the term is also sometimes used to describe the numerical solution of differential equations. This article focuses on calculation of definite integrals. The term numerical quadrature is more or less a synonym for numerical integration, especially as applied to one-dimensional integrals. Some authors refer to numerical integration over more than one dimension as cubature; others take quadrature to include higher-dimensional integration.

In mathematics, the bisection method is a root-finding method that applies to any continuous function for which one knows two values with opposite signs. The method consists of repeatedly bisecting the interval defined by these values and then selecting the subinterval in which the function changes sign, and which therefore must contain a root. It is a very simple and robust method, but it is also relatively slow. Because of this, it is often used to obtain a rough approximation to a solution which is then used as a starting point for more rapidly converging methods. The method is also called the interval halving method, the binary search method, or the dichotomy method.
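The halving procedure described above can be sketched in a few lines. The function name `bisect`, the requirement `a < b`, and the stopping rule (interval width below `tol`) are assumptions made for this illustration.

```python
def bisect(f, a, b, tol=1e-10):
    """Approximate a root of a continuous f on [a, b], assuming a < b
    and that f(a) and f(b) have opposite signs."""
    fa, fb = f(a), f(b)
    if fa == 0:
        return a
    if fb == 0:
        return b
    if fa * fb > 0:
        raise ValueError("f(a) and f(b) must have opposite signs")
    while b - a > tol:
        m = a + (b - a) / 2
        fm = f(m)
        if fm == 0:
            return m
        if fa * fm < 0:
            b, fb = m, fm   # sign change lies in [a, m]
        else:
            a, fa = m, fm   # sign change lies in [m, b]
    return a + (b - a) / 2


def cubic(x):
    return x ** 3 - x - 2


root = bisect(cubic, 1.0, 2.0)
print(root)
```

Each pass halves the bracketing interval, so roughly one binary digit of accuracy is gained per function evaluation; this is the slow but guaranteed convergence the paragraph refers to.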

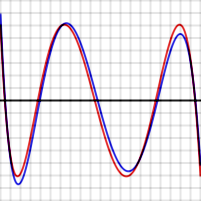

In mathematics, approximation theory is concerned with how functions can best be approximated with simpler functions, and with quantitatively characterizing the errors introduced thereby. Note that what is meant by best and simpler will depend on the application.

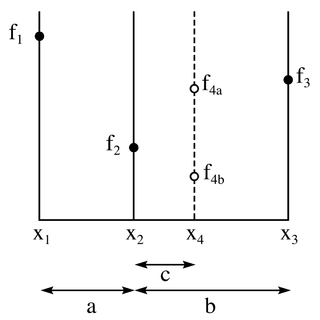

The golden-section search is a technique for finding an extremum of a function inside a specified interval. For a strictly unimodal function with an extremum inside the interval, it will find that extremum, while for an interval containing multiple extrema, it will converge to one of them. If the only extremum on the interval is on a boundary of the interval, it will converge to that boundary point. The method operates by successively narrowing the range of values on the specified interval, which makes it relatively slow, but very robust. The technique derives its name from the fact that the algorithm maintains the function values for four points whose three interval widths are in the ratio 2 − φ : 2φ − 3 : 2 − φ, where φ is the golden ratio. These ratios are maintained for each iteration and are maximally efficient. Excepting boundary points, when searching for a minimum, the function value at the central point is always less than or equal to the values at the outer points, assuring that a minimum is contained between the outer points. The converse is true when searching for a maximum. The algorithm is the limit of Fibonacci search for many function evaluations. Fibonacci search and golden-section search were discovered by Kiefer (1953).
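A minimal sketch of the search for a minimum is given below, assuming a function that is unimodal on the interval. The names `golden_section_min` and `INV_PHI`, and the stopping rule based on interval width, are hypothetical choices for this illustration. The two interior points are placed so that the three sub-interval widths keep the golden-ratio proportions, which lets each iteration reuse one of the two previous function values.

```python
import math

INV_PHI = (math.sqrt(5) - 1) / 2  # 1/phi, about 0.618


def golden_section_min(f, a, b, tol=1e-8):
    """Approximate the minimiser of a unimodal f on [a, b], assuming a < b."""
    c = b - INV_PHI * (b - a)   # left interior point
    d = a + INV_PHI * (b - a)   # right interior point
    fc, fd = f(c), f(d)
    while b - a > tol:
        if fc < fd:
            # Minimum lies in [a, d]; the old c becomes the new right interior point.
            b, d, fd = d, c, fc
            c = b - INV_PHI * (b - a)
            fc = f(c)
        else:
            # Minimum lies in [c, b]; the old d becomes the new left interior point.
            a, c, fc = c, d, fd
            d = a + INV_PHI * (b - a)
            fd = f(d)
    return (a + b) / 2


x_min = golden_section_min(lambda x: (x - 2) ** 2, 0.0, 5.0)
print(x_min)
```

Only one new function evaluation is needed per iteration, and the bracketing interval shrinks by the constant factor 1/φ each time.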

Adaptive Simpson's method, also called adaptive Simpson's rule, is a method of numerical integration proposed by G.F. Kuncir in 1962. It is probably the first recursive adaptive algorithm for numerical integration to appear in print, although more modern adaptive methods based on Gauss–Kronrod quadrature and Clenshaw–Curtis quadrature are now generally preferred. Adaptive Simpson's method uses an estimate of the error we get from calculating a definite integral using Simpson's rule. If the error exceeds a user-specified tolerance, the algorithm calls for subdividing the interval of integration in two and applying adaptive Simpson's method to each subinterval in a recursive manner. The technique is usually much more efficient than composite Simpson's rule since it uses fewer function evaluations in places where the function is well-approximated by a cubic function.
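The recursive subdivision can be sketched as follows. This is an illustrative variant rather than Kuncir's original formulation: it assumes the commonly used error estimate in which the difference between the two-panel and one-panel Simpson values is compared against 15 times the tolerance, halves the tolerance at each subdivision, and adds a recursion-depth cap. All function and parameter names are hypothetical.

```python
import math


def _simpson(f, a, fa, b, fb):
    """Simpson's rule on [a, b]; returns the midpoint, f(midpoint), and the estimate."""
    m = (a + b) / 2
    fm = f(m)
    return m, fm, (b - a) / 6 * (fa + 4 * fm + fb)


def _recurse(f, a, fa, m, fm, b, fb, whole, tol, depth):
    lm, flm, left = _simpson(f, a, fa, m, fm)
    rm, frm, right = _simpson(f, m, fm, b, fb)
    delta = left + right - whole          # drives the error estimate
    if depth <= 0 or abs(delta) <= 15 * tol:
        return left + right + delta / 15  # accept, with an extrapolation correction
    # Otherwise split the interval and give each half half of the tolerance.
    return (_recurse(f, a, fa, lm, flm, m, fm, left, tol / 2, depth - 1)
            + _recurse(f, m, fm, rm, frm, b, fb, right, tol / 2, depth - 1))


def adaptive_simpson(f, a, b, tol=1e-9, max_depth=50):
    """Approximate the integral of f over [a, b] to roughly the tolerance tol."""
    fa, fb = f(a), f(b)
    m, fm, whole = _simpson(f, a, fa, b, fb)
    return _recurse(f, a, fa, m, fm, b, fb, whole, tol, max_depth)


area = adaptive_simpson(math.sin, 0.0, math.pi)
print(area)
```

Function values at the endpoints and midpoints are passed down the recursion, so no point is evaluated twice; subdivision happens only where the local error estimate is still too large.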

This is an overview of Fortran 95 language features. Included are the additional features of TR-15581: Enhanced Data Type Facilities, which have been universally implemented. Old features that have been superseded by new ones are not described; few of those historic features are used in modern programs, although most have been retained in the language to maintain backward compatibility. The current standard is Fortran 2018; many of its new features are still being implemented in compilers. The additional features of Fortran 2003, Fortran 2008 and Fortran 2018 are described by Metcalf, Reid and Cohen.

Clenshaw–Curtis quadrature and Fejér quadrature are methods for numerical integration, or "quadrature", that are based on an expansion of the integrand in terms of Chebyshev polynomials. Equivalently, they employ a change of variables and use a discrete cosine transform (DCT) approximation for the cosine series. Besides having fast-converging accuracy comparable to Gaussian quadrature rules, Clenshaw–Curtis quadrature naturally leads to nested quadrature rules, which is important for both adaptive quadrature and multidimensional quadrature (cubature).

The level-index (LI) representation of numbers, and its algorithms for arithmetic operations, were introduced by Charles Clenshaw and Frank Olver in 1984.

Comparison of programming languages is a common topic of discussion among software engineers. Basic instructions of several programming languages are compared here.

The Gauss–Kronrod quadrature formula is an adaptive method for numerical integration. It is a variant of Gaussian quadrature, in which the evaluation points are chosen so that an accurate approximation can be computed by re-using the information produced by the computation of a less accurate approximation. It is an example of what is called a nested quadrature rule: for the same set of function evaluation points, it has two quadrature rules, one higher order and one lower order. The difference between these two approximations is used to estimate the calculational error of the integration.

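In numerical analysis, Gauss–Legendre quadrature is a form of Gaussian quadrature for approximating the definite integral of a function. For integrating over the interval $[-1, 1]$, the rule takes the form $\int_{-1}^{1} f(x)\,dx \approx \sum_{i=1}^{n} w_i f(x_i)$, where $n$ is the number of sample points, $w_i$ are the quadrature weights, and $x_i$ are the roots of the $n$th Legendre polynomial.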
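As a small worked instance, the three-point Gauss–Legendre rule uses the nodes 0 and ±√(3/5) with weights 8/9 and 5/9, and integrates polynomials of degree up to five exactly. The sketch below hard-codes those values; the function name `gauss_legendre_3` and the linear change of variables to a general interval [a, b] are choices made for this illustration.

```python
import math

# Nodes and weights of the three-point Gauss-Legendre rule on [-1, 1].
NODES = (-math.sqrt(3 / 5), 0.0, math.sqrt(3 / 5))
WEIGHTS = (5 / 9, 8 / 9, 5 / 9)


def gauss_legendre_3(f, a=-1.0, b=1.0):
    """Approximate the integral of f over [a, b] with the three-point rule."""
    half = (b - a) / 2   # scale factor of the map from [-1, 1] to [a, b]
    mid = (a + b) / 2    # image of the node 0
    return half * sum(w * f(mid + half * x) for x, w in zip(NODES, WEIGHTS))


# The rule is exact (up to rounding) for x^4, whose integral over [-1, 1] is 2/5.
print(gauss_legendre_3(lambda x: x ** 4))
```

For integrands that are not low-degree polynomials, the result is only an approximation; higher accuracy requires more nodes or subdividing the interval.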

A probability box is a characterization of an uncertain number consisting of both aleatoric and epistemic uncertainties that is often used in risk analysis or quantitative uncertainty modeling where numerical calculations must be performed. Probability bounds analysis is used to make arithmetic and logical calculations with p-boxes.

Validated numerics (also called rigorous computation, verified computation, reliable computation, or numerical verification) is numerical computation that includes mathematically strict error evaluation; it is one field of numerical analysis. Interval arithmetic is used for the computation, and all results are represented by intervals. Validated numerics was used by Warwick Tucker to solve the 14th of Smale's problems, and today it is recognized as a powerful tool for the study of dynamical systems.

References

- C. W. Clenshaw and F. W. J. Olver (April 1980). "An unrestricted algorithm for the exponential function". SIAM Journal on Numerical Analysis. 17 (2): 310–331. doi:10.1137/0717026. JSTOR 2156615.

- Richard P. Brent (1980). "Unrestricted algorithms for elementary and special functions". In S. H. Lavington (ed.). Information Processing 80. North-Holland, Amsterdam. pp. 613–619. arXiv:1004.3621.