In graph theory, an expander graph is a sparse graph that has strong connectivity properties, quantified using vertex, edge or spectral expansion. Expander constructions have spawned research in pure and applied mathematics, with several applications to complexity theory, design of robust computer networks, and the theory of error-correcting codes.

In graph theory, a regular graph is a graph where each vertex has the same number of neighbors; i.e. every vertex has the same degree or valency. A regular directed graph must also satisfy the stronger condition that the indegree and outdegree of each internal vertex are equal to each other. A regular graph with vertices of degree k is called a k‑regular graph or regular graph of degree k. Also, from the handshaking lemma, a regular graph contains an even number of vertices with odd degree.
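
As an illustration of the definition, the following minimal sketch checks regularity for an undirected simple graph given as an edge list. The function names and the example graphs are hypothetical, and vertices that appear in no edge are ignored, which is an assumption of this sketch rather than part of the definition.

```python
from collections import Counter

def degrees(edges):
    """Map each vertex of an undirected simple graph to its degree."""
    deg = Counter()
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return deg

def is_regular(edges):
    """True if every vertex appearing in the edge list has the same degree."""
    return len(set(degrees(edges).values())) <= 1

# Hypothetical examples: a 4-cycle (2-regular) and a 3-vertex path (not regular).
cycle4 = [(0, 1), (1, 2), (2, 3), (3, 0)]
path3 = [(0, 1), (1, 2)]

print(is_regular(cycle4))  # True
print(is_regular(path3))   # False

# Handshaking lemma: the degrees sum to twice the number of edges.
print(sum(degrees(cycle4).values()) == 2 * len(cycle4))  # True
```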

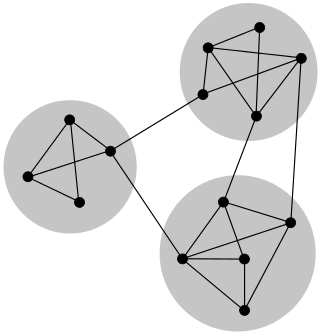

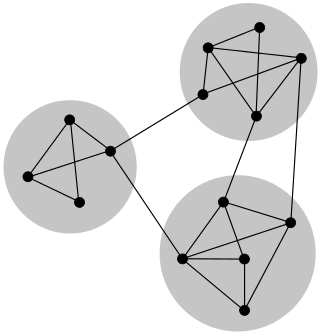

In mathematics, a hypergraph is a generalization of a graph in which an edge can join any number of vertices. In contrast, in an ordinary graph, an edge connects exactly two vertices.

In graph theory and computer science, an adjacency matrix is a square matrix used to represent a finite graph. The elements of the matrix indicate whether pairs of vertices are adjacent or not in the graph.
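
A minimal sketch of this representation for an undirected simple graph follows. It assumes vertices are labeled 0 to n − 1; the function name and the triangle example are illustrative only.

```python
def adjacency_matrix(n, edges):
    """Build the n x n adjacency matrix of an undirected simple graph.

    Entry [u][v] is 1 when u and v are adjacent and 0 otherwise.
    """
    A = [[0] * n for _ in range(n)]
    for u, v in edges:
        A[u][v] = 1
        A[v][u] = 1  # undirected: the matrix is symmetric
    return A

# Hypothetical example: a triangle on vertices 0, 1, 2.
triangle = adjacency_matrix(3, [(0, 1), (1, 2), (0, 2)])
for row in triangle:
    print(row)
```

For an undirected graph without loops, the matrix is symmetric with a zero diagonal, and each row sum equals the degree of the corresponding vertex.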

In mathematics, a root system is a configuration of vectors in a Euclidean space satisfying certain geometrical properties. The concept is fundamental in the theory of Lie groups and Lie algebras, especially the classification and representation theory of semisimple Lie algebras. Since Lie groups and Lie algebras have become important in many parts of mathematics during the twentieth century, the apparently special nature of root systems belies the number of areas in which they are applied. Further, the classification scheme for root systems, by Dynkin diagrams, occurs in parts of mathematics with no overt connection to Lie theory. Finally, root systems are important for their own sake, as in spectral graph theory.

This is a glossary of graph theory. Graph theory is the study of graphs, systems of nodes or vertices connected in pairs by lines or edges.

In discrete mathematics, and more specifically in graph theory, a graph is a structure amounting to a set of objects in which some pairs of the objects are in some sense "related". The objects correspond to mathematical abstractions called vertices and each of the related pairs of vertices is called an edge. Typically, a graph is depicted in diagrammatic form as a set of dots or circles for the vertices, joined by lines or curves for the edges. Graphs are one of the objects of study in discrete mathematics.

In mathematics, spectral graph theory is the study of the properties of a graph in relationship to the characteristic polynomial, eigenvalues, and eigenvectors of matrices associated with the graph, such as its adjacency matrix or Laplacian matrix.

In graph theory, the resistance distance between two vertices of a simple, connected graph, G, is equal to the resistance between two equivalent points on an electrical network, constructed so as to correspond to G, with each edge being replaced by a resistance of one ohm. It is a metric on graphs.
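
One way to compute the resistance distance, sketched below, is to ground one of the two vertices, inject a unit current at the other, and solve the resulting linear system on the reduced Laplacian. The potential at the injection vertex then equals the effective resistance. The helper names are hypothetical, the graph is assumed to be connected with vertices labeled 0 to n − 1, and exact fractions are used only to keep the results readable.

```python
from fractions import Fraction

def laplacian(n, edges):
    """Laplacian matrix (degree matrix minus adjacency matrix) with exact entries."""
    L = [[Fraction(0)] * n for _ in range(n)]
    for u, v in edges:
        L[u][u] += 1
        L[v][v] += 1
        L[u][v] -= 1
        L[v][u] -= 1
    return L

def solve(M, b):
    """Solve M x = b by Gauss-Jordan elimination; M is assumed nonsingular."""
    n = len(M)
    aug = [row[:] + [b[i]] for i, row in enumerate(M)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [aug[i][n] for i in range(n)]

def resistance_distance(n, edges, i, j):
    """Effective resistance between i and j when every edge is a 1-ohm resistor."""
    if i == j:
        return Fraction(0)
    L = laplacian(n, edges)
    keep = [v for v in range(n) if v != j]            # ground vertex j
    reduced = [[L[r][c] for c in keep] for r in keep]
    rhs = [Fraction(1) if v == i else Fraction(0) for v in keep]  # 1 A injected at i
    potentials = solve(reduced, rhs)
    return potentials[keep.index(i)]

# Triangle: a 1-ohm edge in parallel with a 2-ohm path.
print(resistance_distance(3, [(0, 1), (1, 2), (0, 2)], 0, 1))  # 2/3
```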

In mathematics, the discrete Laplace operator is an analog of the continuous Laplace operator, defined so that it has meaning on a graph or a discrete grid. For the case of a finite-dimensional graph, the discrete Laplace operator is more commonly called the Laplacian matrix.

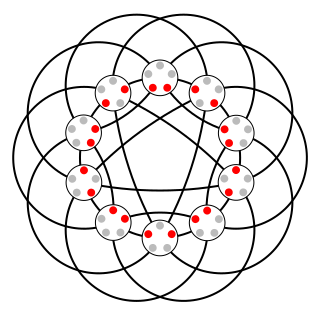

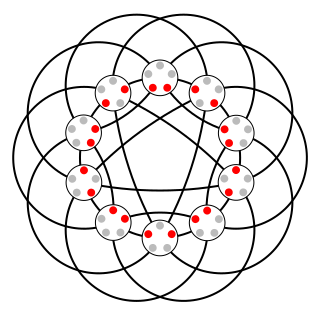

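In graph theory, a strongly regular graph (SRG) is a regular graph G = (V, E) with v vertices and degree k such that for some given integers λ, μ ≥ 0, every two adjacent vertices have λ common neighbors and every two non-adjacent vertices have μ common neighbors.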
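
In the standard definition, a strongly regular graph has parameters (v, k, λ, μ), where every two adjacent vertices have λ common neighbors and every two non-adjacent vertices have μ common neighbors. The following brute-force sketch tests this condition on small graphs. The function name and the dictionary-of-sets representation are assumptions of the sketch, and it returns `None` for complete and empty graphs, which have no non-adjacent or no adjacent pairs to test.

```python
from itertools import combinations

def srg_parameters(adj):
    """Return (v, k, lam, mu) if the graph is strongly regular, else None.

    adj maps each vertex to the set of its neighbors.
    """
    vertices = list(adj)
    degs = {len(adj[u]) for u in vertices}
    if len(degs) != 1:
        return None  # not regular
    lam_values, mu_values = set(), set()
    for u, w in combinations(vertices, 2):
        common = len(adj[u] & adj[w])
        (lam_values if w in adj[u] else mu_values).add(common)
    if len(lam_values) != 1 or len(mu_values) != 1:
        return None
    return (len(vertices), degs.pop(), lam_values.pop(), mu_values.pop())

# The 5-cycle.
c5 = {i: {(i - 1) % 5, (i + 1) % 5} for i in range(5)}

# The Petersen graph, built as 2-subsets of {0..4} joined when disjoint.
pairs = [frozenset(p) for p in combinations(range(5), 2)]
petersen = {p: {q for q in pairs if not (p & q)} for p in pairs}

print(srg_parameters(c5))        # (5, 2, 0, 1)
print(srg_parameters(petersen))  # (10, 3, 0, 1)
```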

In the mathematical field of graph theory, the Laplacian matrix, also called the graph Laplacian, admittance matrix, Kirchhoff matrix or discrete Laplacian, is a matrix representation of a graph. Named after Pierre-Simon Laplace, the graph Laplacian matrix can be viewed as a matrix form of the negative discrete Laplace operator on a graph approximating the negative continuous Laplacian obtained by the finite difference method.
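
For a simple undirected graph, the Laplacian is commonly written as L = D − A, where D is the diagonal degree matrix and A is the adjacency matrix. The sketch below builds L this way and checks the standard identity that xᵀLx equals the sum of (x_u − x_v)² over all edges {u, v}. The function names and the example vector are illustrative assumptions.

```python
def laplacian_from_adjacency(A):
    """Return L = D - A for a symmetric 0/1 adjacency matrix A."""
    n = len(A)
    return [[(sum(A[i]) if i == j else 0) - A[i][j] for j in range(n)]
            for i in range(n)]

def quadratic_form(L, x):
    """Evaluate x^T L x."""
    n = len(L)
    return sum(x[i] * L[i][j] * x[j] for i in range(n) for j in range(n))

# Hypothetical example: the path 0 - 1 - 2.
A = [[0, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
L = laplacian_from_adjacency(A)
x = [3, 1, 4]

print(L)                                   # [[1, -1, 0], [-1, 2, -1], [0, -1, 1]]
print(quadratic_form(L, x))                # 13
print((x[0] - x[1]) ** 2 + (x[1] - x[2]) ** 2)  # 13, the sum over the two edges
```

Every row of L sums to zero, so the all-ones vector is always an eigenvector with eigenvalue 0.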

In mathematics and computer science, connectivity is one of the basic concepts of graph theory: it asks for the minimum number of elements that need to be removed to separate the remaining nodes into two or more isolated subgraphs. It is closely related to the theory of network flow problems. The connectivity of a graph is an important measure of its resilience as a network.
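
For small graphs, vertex connectivity can be found by brute force: try removing every set of vertices in order of increasing size until the remainder is disconnected. The sketch below does exactly that. It is exponential in the number of vertices and meant only to illustrate the definition; the function names are hypothetical, and the value n − 1 returned for complete graphs follows the usual convention.

```python
from itertools import combinations

def is_connected(vertices, adj):
    """Depth-first search restricted to the given vertex subset."""
    vertices = list(vertices)
    if not vertices:
        return True
    allowed = set(vertices)
    seen = {vertices[0]}
    stack = [vertices[0]]
    while stack:
        u = stack.pop()
        for w in adj[u]:
            if w in allowed and w not in seen:
                seen.add(w)
                stack.append(w)
    return len(seen) == len(vertices)

def vertex_connectivity(adj):
    """Smallest number of vertices whose removal disconnects the graph."""
    n = len(adj)
    for size in range(n - 1):
        for removed in combinations(adj, size):
            rest = [v for v in adj if v not in removed]
            if not is_connected(rest, adj):
                return size
    return n - 1  # convention for complete graphs

cycle5 = {i: {(i - 1) % 5, (i + 1) % 5} for i in range(5)}
path3 = {0: {1}, 1: {0, 2}, 2: {1}}

print(vertex_connectivity(cycle5))  # 2
print(vertex_connectivity(path3))   # 1
```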

Johnson graphs are a special class of undirected graphs defined from systems of sets. The vertices of the Johnson graph J(n, k) are the k-element subsets of an n-element set; two vertices are adjacent when the intersection of the two vertices (subsets) contains k − 1 elements. Both Johnson graphs and the closely related Johnson scheme are named after Selmer M. Johnson.
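
The construction can be sketched directly from the definition, writing J(n, k) for the Johnson graph on the k-element subsets of an n-element set, with two subsets adjacent when they share k − 1 elements. The function name and the choice of {0, ..., n − 1} as the ground set are assumptions of this sketch.

```python
from itertools import combinations

def johnson_graph(n, k):
    """Return (vertices, edges) of the Johnson graph J(n, k).

    Vertices are the k-element subsets of {0, ..., n-1}; two subsets are
    adjacent when their intersection has exactly k - 1 elements.
    """
    vertices = [frozenset(c) for c in combinations(range(n), k)]
    edges = [(a, b) for a, b in combinations(vertices, 2)
             if len(a & b) == k - 1]
    return vertices, edges

vertices, edges = johnson_graph(4, 2)
print(len(vertices), len(edges))  # 6 12
```

Each vertex of J(n, k) has degree k(n − k), since a neighbor is obtained by swapping one of the k chosen elements for one of the n − k others. J(4, 2) is therefore 4-regular on 6 vertices, which gives the 12 edges printed above.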

In the mathematical field of graph theory, a distance-regular graph is a regular graph such that for any two vertices v and w, the number of vertices at distance j from v and at distance k from w depends only upon j, k, and the distance between v and w.

A continuous-time quantum walk (CTQW) is a quantum walk on a given (simple) graph that is dictated by a time-varying unitary matrix that relies on the Hamiltonian of the quantum system and the adjacency matrix. The concept of a CTQW is believed to have been first considered for quantum computation by Edward Farhi and Sam Gutmann; since many classical algorithms are based on (classical) random walks, CTQWs were originally studied to see whether these algorithms could have quantum analogues with, for example, better time complexity than their classical counterparts. More recently, problems such as deciding which graphs admit properties such as perfect state transfer with respect to their CTQWs have been of particular interest.

In mathematics, the Kontsevich quantization formula describes how to construct a generalized ★-product operator algebra from a given arbitrary finite-dimensional Poisson manifold. This operator algebra amounts to the deformation quantization of the corresponding Poisson algebra. It is due to Maxim Kontsevich.

In graph theory, the Lovász number of a graph is a real number that is an upper bound on the Shannon capacity of the graph. It is also known as the Lovász theta function and is commonly denoted by ϑ(G), using a script form of the Greek letter theta to contrast with the upright theta used for Shannon capacity. This quantity was first introduced by László Lovász in his 1979 paper "On the Shannon Capacity of a Graph".

In spectral graph theory, the Alon–Boppana bound provides a lower bound on the second-largest eigenvalue of the adjacency matrix of a d-regular graph, meaning a graph in which every vertex has degree d. The reason for the interest in the second-largest eigenvalue is that the largest eigenvalue is guaranteed to be d due to d-regularity, with the all-ones vector being the associated eigenvector. The graphs that come close to meeting this bound are Ramanujan graphs, which are examples of the best possible expander graphs.
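
Writing d for the degree and λ₂ for the second-largest adjacency eigenvalue, the bound states that λ₂ ≥ 2√(d − 1) − o(1) as the number of vertices grows. Cycles give a simple illustration for d = 2: the adjacency eigenvalues of the cycle on n vertices are 2 cos(2πj/n) for j = 0, ..., n − 1, so λ₂ = 2 cos(2π/n), which approaches 2√(d − 1) = 2 from below. The sketch uses this closed form rather than a numerical eigenvalue solver, and the function names are hypothetical.

```python
import math

def cycle_second_eigenvalue(n):
    """Second-largest adjacency eigenvalue of the cycle on n >= 3 vertices."""
    return 2 * math.cos(2 * math.pi / n)

def alon_boppana_limit(d):
    """The limiting value 2 * sqrt(d - 1) in the Alon-Boppana bound."""
    return 2 * math.sqrt(d - 1)

# Cycles are 2-regular, so the limiting value is 2 * sqrt(1) = 2.
for n in (6, 60, 600):
    print(n, round(cycle_second_eigenvalue(n), 6), alon_boppana_limit(2))
```

The gap between the two printed values shrinks as n grows, which is consistent with the o(1) term in the bound.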

Flag algebras are an important computational tool in graph theory, with a wide range of applications to homomorphism density and related topics. Roughly, they formalize the notion of adding and multiplying homomorphism densities and set up a framework for solving graph homomorphism inequalities with computers by reducing them to semidefinite programming problems. Originally introduced by Alexander Razborov in a 2007 paper, the method has since been used to solve numerous difficult, previously unresolved graph-theoretic questions. These include determining the region of feasible (edge density, triangle density) pairs and the maximum number of pentagons in triangle-free graphs.