Eliezer S. Yudkowsky is an American artificial intelligence researcher and writer on decision theory and ethics, best known for popularizing ideas related to friendly artificial intelligence, including the idea that there might not be a "fire alarm" for AI. He is the founder of and a research fellow at the Machine Intelligence Research Institute (MIRI), a private research nonprofit based in Berkeley, California. His work on the prospect of a runaway intelligence explosion influenced philosopher Nick Bostrom's 2014 book Superintelligence: Paths, Dangers, Strategies.

Nick Bostrom is a Swedish philosopher at the University of Oxford known for his work on existential risk, the anthropic principle, human enhancement ethics, whole brain emulation, superintelligence risks, and the reversal test. He is the founding director of the Future of Humanity Institute at Oxford University.

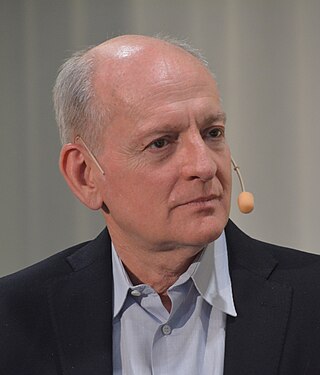

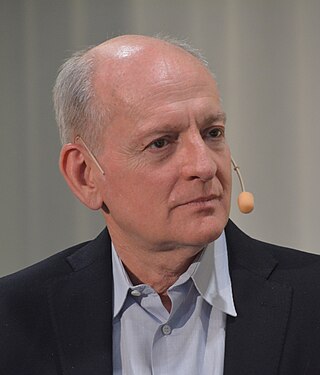

Stuart Jonathan Russell is a British computer scientist known for his contributions to artificial intelligence (AI). He is a professor of computer science at the University of California, Berkeley, and was an adjunct professor of neurological surgery at the University of California, San Francisco, from 2008 to 2011. He holds the Smith-Zadeh Chair in Engineering at the University of California, Berkeley, where he founded and leads the Center for Human-Compatible Artificial Intelligence (CHAI). Russell is the co-author, with Peter Norvig, of the authoritative AI textbook Artificial Intelligence: A Modern Approach, which is used in more than 1,500 universities in 135 countries.

An artificial general intelligence (AGI) is a hypothetical type of intelligent agent. If realized, an AGI could learn to accomplish any intellectual task that human beings or animals can perform. Alternatively, AGI has been defined as an autonomous system that surpasses human capabilities in the majority of economically valuable tasks. Creating AGI is a primary goal of some artificial intelligence research and of companies such as OpenAI, DeepMind, and Anthropic. AGI is a common topic in science fiction and futures studies.

The Machine Intelligence Research Institute (MIRI), formerly the Singularity Institute for Artificial Intelligence (SIAI), is a non-profit research institute focused since 2005 on identifying and managing potential existential risks from artificial general intelligence. MIRI's work has focused on a friendly AI approach to system design and on predicting the rate of technology development.

Human extinction is the hypothetical end of the human species, either through population decline due to external natural causes, such as an asteroid impact or large-scale volcanism, or through anthropogenic destruction (self-extinction), for example by sub-replacement fertility.

Jaan Tallinn is an Estonian billionaire computer programmer and investor known for his participation in the development of Skype and the file-sharing application FastTrack/Kazaa. He is a leading figure in the field of existential risk, having co-founded both the Centre for the Study of Existential Risk (CSER) at the University of Cambridge in the United Kingdom and the Future of Life Institute in Cambridge, Massachusetts, in the United States. Tallinn was an early investor in, and board member at, DeepMind and various other artificial intelligence companies.

Sir Partha Sarathi Dasgupta is an Indian-British economist who is Frank Ramsey Professor Emeritus of Economics at the University of Cambridge, United Kingdom, and a fellow of St John's College, Cambridge.

The Future of Humanity Institute (FHI) is an interdisciplinary research centre at the University of Oxford investigating big-picture questions about humanity and its prospects. It was founded in 2005 as part of the Faculty of Philosophy and the Oxford Martin School. Its director is philosopher Nick Bostrom, and its research staff include futurist Anders Sandberg and Giving What We Can founder Toby Ord.

A global catastrophic risk or a doomsday scenario is a hypothetical event that could damage human well-being on a global scale, even endangering or destroying modern civilization. An event that could cause human extinction or permanently and drastically curtail humanity's existence or potential is known as an "existential risk."

Huw Price is an Australian philosopher, formerly the Bertrand Russell Professor in the Faculty of Philosophy, Cambridge, and a Fellow of Trinity College, Cambridge.

Existential risk from artificial general intelligence is the hypothesis that substantial progress in artificial general intelligence (AGI) could result in human extinction or an irreversible global catastrophe.

The Leverhulme Centre for the Future of Intelligence (CFI) is an interdisciplinary research centre within the University of Cambridge that studies artificial intelligence. It is funded by the Leverhulme Trust.

Biotechnology risk is a form of existential risk from biological sources, such as genetically engineered biological agents. The release of such high-consequence pathogens could be

Olle Häggström is a professor of mathematical statistics at Chalmers University of Technology. Häggström earned his doctorate in 1994 at Chalmers University of Technology with Jeffrey Steif as supervisor. He became an associate professor at the same university in 1997 and a professor of mathematical statistics at the University of Gothenburg in 2000. In 2002 he returned to Chalmers University of Technology as a professor. His research is mainly in probability theory, including Markov chains, percolation theory, and other models in statistical mechanics.

The regulation of artificial intelligence is the development of public sector policies and laws for promoting and regulating artificial intelligence (AI); it is therefore related to the broader regulation of algorithms. The regulatory and policy landscape for AI is an emerging issue in jurisdictions globally, including in the European Union and in supra-national bodies such as the IEEE and the OECD. Since 2016, a wave of AI ethics guidelines has been published in order to maintain social control over the technology. Regulation is considered necessary both to encourage AI and to manage the associated risks. In addition to regulation, AI-deploying organizations need to play a central role in creating and deploying AI in line with the principles of trustworthy AI, and to take accountability for mitigating the risks. Regulation of AI through mechanisms such as review boards can also be seen as a social means of approaching the AI control problem.

Beth Victoria Lois Singler, born Beth Victoria White, is a British anthropologist specialising in artificial intelligence. She is known for her digital ethnographic research on the impact of apocalyptic stories on the conception of AI and robots, her comments on the societal implications of AI, as well as her public engagement work. The latter includes a series of four documentaries on whether robots could feel pain, human-robot companionship, AI ethics, and AI consciousness. She is currently the Junior Research Fellow in Artificial Intelligence at Homerton College, University of Cambridge.

Suffering risks, or s-risks, are risks involving an astronomical amount of suffering, much more than all of the suffering that has occurred on Earth so far. They are sometimes categorized as a subclass of existential risks.

Scenarios in which a global catastrophic risk creates harm have been widely discussed. Some sources of catastrophic risk are anthropogenic, such as global warming, environmental degradation, and nuclear war. Others are non-anthropogenic or natural, such as meteor impacts or supervolcanoes. The impact of these scenarios can vary widely, depending on the cause and the severity of the event, ranging from temporary economic disruption to human extinction. Many societal collapses have already happened throughout human history.