In probability theory and statistics, the multivariate normal distribution, multivariate Gaussian distribution, or joint normal distribution is a generalization of the one-dimensional (univariate) normal distribution to higher dimensions. One definition is that a random vector is said to be k-variate normally distributed if every linear combination of its k components has a univariate normal distribution. Its importance derives mainly from the multivariate central limit theorem. The multivariate normal distribution is often used to describe, at least approximately, any set of (possibly) correlated real-valued random variables, each of which clusters around a mean value.
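The linear-combination definition can be checked numerically. The following is a minimal sketch using only the Python standard library; the mean vector, covariance matrix, and weights are illustrative values that do not come from the text. It draws bivariate normal samples through a hand-written 2×2 Cholesky factor and compares the sample variance of one linear combination with the theoretical value wᵀΣw.

```python
import math
import random

def cholesky_2x2(cov):
    """Lower-triangular L with L L^T == cov, for a 2x2 positive-definite matrix."""
    (a, b), (_, d) = cov
    l11 = math.sqrt(a)
    l21 = b / l11
    l22 = math.sqrt(d - l21 * l21)
    return [[l11, 0.0], [l21, l22]]

def sample_bivariate_normal(mean, cov, rng):
    """Draw x = mean + L z, where z has independent standard normal entries."""
    L = cholesky_2x2(cov)
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    return (mean[0] + L[0][0] * z1,
            mean[1] + L[1][0] * z1 + L[1][1] * z2)

def linear_combination_variance(weights, cov):
    """Variance of w1*X1 + w2*X2, i.e. w^T cov w."""
    w1, w2 = weights
    return w1 * w1 * cov[0][0] + 2 * w1 * w2 * cov[0][1] + w2 * w2 * cov[1][1]

# Illustrative (assumed) parameters.
mean = (1.0, -2.0)
cov = [[4.0, 1.2], [1.2, 1.0]]
weights = (0.5, 2.0)

rng = random.Random(0)  # fixed seed so the run is repeatable
draws = [sample_bivariate_normal(mean, cov, rng) for _ in range(20000)]
combo = [weights[0] * x1 + weights[1] * x2 for x1, x2 in draws]
sample_mean = sum(combo) / len(combo)
sample_var = sum((c - sample_mean) ** 2 for c in combo) / len(combo)

print("theoretical variance:", linear_combination_variance(weights, cov))
print("sample mean and variance:", sample_mean, sample_var)
```

With these assumed parameters the combination has theoretical mean 0.5·1 + 2·(−2) = −3.5 and variance 7.4, and the sample statistics should land close to those values.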

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference.
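As a concrete case, the maximum likelihood estimate for a univariate normal model has a closed form: the sample mean and the variance computed with divisor n. The sketch below assumes that model and uses made-up data; the function names are illustrative, not taken from any library.

```python
import math

def gaussian_mle(data):
    """Closed-form MLE (mean, variance) for i.i.d. normal data."""
    n = len(data)
    mu = sum(data) / n
    var = sum((x - mu) ** 2 for x in data) / n  # MLE divides by n, not n - 1
    return mu, var

def gaussian_log_likelihood(data, mu, var):
    """Log-likelihood of i.i.d. normal data under parameters (mu, var)."""
    n = len(data)
    sq = sum((x - mu) ** 2 for x in data)
    return -0.5 * n * math.log(2 * math.pi * var) - sq / (2 * var)

data = [2.1, 1.9, 2.4, 2.0, 1.6]  # hypothetical observations
mu_hat, var_hat = gaussian_mle(data)
ll_hat = gaussian_log_likelihood(data, mu_hat, var_hat)

# Any other parameter choice should give a lower log-likelihood.
ll_other = gaussian_log_likelihood(data, mu_hat + 0.1, var_hat * 1.5)
print(mu_hat, var_hat, ll_hat, ll_other)
```

Comparing `ll_hat` with the log-likelihood at perturbed parameters is a quick sanity check that the closed-form estimate does sit at the maximum.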

In statistical mechanics and information theory, the Fokker–Planck equation is a partial differential equation that describes the time evolution of the probability density function of the velocity of a particle under the influence of drag forces and random forces, as in Brownian motion. The equation can be generalized to other observables as well. The Fokker–Planck equation has applications in information theory, graph theory, data science, finance, economics, and other fields.
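For orientation, the one-dimensional form of the equation is commonly written as follows. The symbols are introduced here for illustration and do not appear in the text above: p(x, t) is the probability density, μ(x, t) the drift, and D(x, t) the diffusion coefficient.

$$
\frac{\partial}{\partial t}\,p(x,t) = -\frac{\partial}{\partial x}\bigl[\mu(x,t)\,p(x,t)\bigr] + \frac{\partial^{2}}{\partial x^{2}}\bigl[D(x,t)\,p(x,t)\bigr]
$$

The first term transports probability along the drift, and the second spreads it out through diffusion.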

In mathematics, an indicator function or a characteristic function of a subset of a set is a function that maps elements of the subset to one, and all other elements to zero. That is, if A is a subset of some set X, then $\mathbf{1}_A(x) = 1$ if $x \in A$, and $\mathbf{1}_A(x) = 0$ otherwise, where $\mathbf{1}_A$ is a common notation for the indicator function. Other common notations are $I_A$ and $\chi_A$.
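A minimal sketch of the definition in Python, with a small hypothetical set X and subset A chosen only for illustration:

```python
def indicator(subset):
    """Return the indicator function of `subset` as a callable."""
    members = frozenset(subset)
    return lambda x: 1 if x in members else 0

X = range(10)        # the ambient set (assumed example)
A = {2, 3, 5, 7}     # a subset of X (assumed example)
one_A = indicator(A)

values = [one_A(x) for x in X]
total = sum(values)  # summing the indicator over X counts the elements of A
print(values, total)
```

Summing the indicator over X recovers the size of A, which is one reason the function is convenient in counting and probability arguments.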

In probability theory and statistics, a Gaussian process is a stochastic process (a collection of random variables indexed by time or space) such that every finite collection of those random variables has a multivariate normal distribution. The distribution of a Gaussian process is the joint distribution of all those random variables, and as such, it is a distribution over functions with a continuous domain, e.g. time or space.

Vapnik–Chervonenkis theory was developed during 1960–1990 by Vladimir Vapnik and Alexey Chervonenkis. The theory is a form of computational learning theory, which attempts to explain the learning process from a statistical point of view.

Statistical learning theory is a framework for machine learning drawing from the fields of statistics and functional analysis. Statistical learning theory deals with the statistical inference problem of finding a predictive function based on data. Statistical learning theory has led to successful applications in fields such as computer vision, speech recognition, and bioinformatics.

In information theory, the cross-entropy between two probability distributions p and q, over the same underlying set of events, measures the average number of bits needed to identify an event drawn from the set when the coding scheme used for the set is optimized for an estimated probability distribution q, rather than the true distribution p.
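For discrete distributions, writing p for the true distribution and q for the estimated one, the cross-entropy in bits is H(p, q) = −Σ pᵢ log₂ qᵢ. The sketch below uses two made-up distributions and assumes qᵢ > 0 wherever pᵢ > 0.

```python
import math

def cross_entropy(p, q):
    """Cross-entropy H(p, q) in bits for discrete distributions.

    Terms with p_i == 0 contribute nothing. Assumes q_i > 0 wherever p_i > 0;
    otherwise math.log2 raises ValueError.
    """
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

p = [0.5, 0.25, 0.25]   # true distribution (assumed example)
q = [0.25, 0.25, 0.5]   # estimated distribution (assumed example)

entropy_p = cross_entropy(p, p)  # coding with the true distribution
h_pq = cross_entropy(p, q)       # coding with the mismatched estimate
print(entropy_p, h_pq)
```

Coding with the mismatched q costs 1.75 bits per event on average here, against 1.5 bits when the code is built from p itself.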

In statistics and information theory, a maximum entropy probability distribution has entropy that is at least as great as that of all other members of a specified class of probability distributions. According to the principle of maximum entropy, if nothing is known about a distribution except that it belongs to a certain class, then the distribution with the largest entropy should be chosen as the least-informative default. The motivation is twofold: first, maximizing entropy minimizes the amount of prior information built into the distribution; second, many physical systems tend to move towards maximal entropy configurations over time.

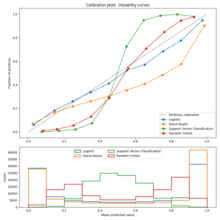

The Brier Score is a strictly proper score function or strictly proper scoring rule that measures the accuracy of probabilistic predictions. For unidimensional predictions, it is strictly equivalent to the mean squared error as applied to predicted probabilities.
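For binary events, the score is the mean of the squared differences between forecast probabilities and the 0/1 outcomes. A minimal sketch with made-up forecasts:

```python
def brier_score(forecasts, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes."""
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must have the same length")
    return sum((f - o) ** 2 for f, o in zip(forecasts, outcomes)) / len(forecasts)

forecasts = [0.9, 0.2, 0.7, 0.5]  # predicted probabilities (assumed example)
outcomes = [1, 0, 1, 0]           # what actually happened

score = brier_score(forecasts, outcomes)
perfect = brier_score([1.0, 0.0, 1.0, 0.0], outcomes)
print(score, perfect)
```

Lower is better: a perfect forecaster scores 0, and always forecasting 0.5 scores 0.25 regardless of the outcomes.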

In operator theory, a branch of mathematics, a positive-definite kernel is a generalization of a positive-definite function or a positive-definite matrix. It was first introduced by James Mercer in the early 20th century, in the context of solving integral operator equations. Since then, positive-definite functions and their various analogues and generalizations have arisen in diverse parts of mathematics. They occur naturally in Fourier analysis, probability theory, operator theory, complex function-theory, moment problems, integral equations, boundary-value problems for partial differential equations, machine learning, embedding problem, information theory, and other areas.

In probability theory and statistics, a stochastic order quantifies the concept of one random variable being "bigger" than another. These are usually partial orders, so that one random variable X may be neither stochastically greater than, less than, nor equal to another random variable Y. Many different orders exist, which have different applications.
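One of these orders, the usual stochastic order, says that X is at least as large as Y when P(X > t) ≥ P(Y > t) for every threshold t. The sketch below checks that condition for small discrete distributions, which are made up for illustration, and shows a pair that is not comparable in either direction.

```python
def survival(pmf, t):
    """P(X > t) for a discrete distribution given as {value: probability}."""
    return sum(prob for value, prob in pmf.items() if value > t)

def stochastically_geq(pmf_x, pmf_y, tol=1e-12):
    """True if P(X > t) >= P(Y > t) for all t (usual stochastic order).

    For discrete distributions the survival functions only change at support
    points, so checking those thresholds is sufficient.
    """
    thresholds = set(pmf_x) | set(pmf_y)
    return all(survival(pmf_x, t) >= survival(pmf_y, t) - tol for t in thresholds)

# Assumed example distributions.
X = {0: 0.5, 2: 0.5}
Y = {1: 1.0}
Z = {1: 0.5, 2: 0.5}

print(stochastically_geq(Z, Y))  # Z dominates Y
print(stochastically_geq(X, Y), stochastically_geq(Y, X))  # neither dominates
```

Here Z dominates Y, while X and Y are incomparable: X exceeds 1 more often than Y does, but also falls to 0 half of the time.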

In multilinear algebra, the tensor rank decomposition or rank-R decomposition is the decomposition of a tensor as a sum of R rank-1 tensors, where R is minimal. Computing this decomposition is an open problem.

In probability theory, a Markov kernel is a map that in the general theory of Markov processes plays the role that the transition matrix does in the theory of Markov processes with a finite state space.

In cryptography, learning with errors (LWE) is a mathematical problem that is widely used to create secure encryption algorithms. It is based on the idea of representing secret information as a set of equations with errors. In other words, LWE is a way to hide the value of a secret by introducing noise to it. In more technical terms, it refers to the computational problem of inferring a linear n-ary function over a finite ring from given samples, some of which may be erroneous. The LWE problem is conjectured to be hard to solve, and thus to be useful in cryptography.
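The "equations with errors" idea can be sketched with a toy example over the integers modulo a small prime. Everything here is an assumption made for illustration: the modulus, dimension, and uniform bounded noise are far too small and simple to be secure, and real schemes typically draw the noise from a discrete Gaussian distribution.

```python
import random

def lwe_sample(secret, q, noise_bound, rng):
    """Return (a, b) with b = <a, secret> + e (mod q), where |e| <= noise_bound."""
    a = [rng.randrange(q) for _ in secret]
    e = rng.randint(-noise_bound, noise_bound)
    b = (sum(ai * si for ai, si in zip(a, secret)) + e) % q
    return a, b

def centered_residual(a, b, secret, q):
    """b - <a, secret> mod q, mapped to a representative centered around 0."""
    r = (b - sum(ai * si for ai, si in zip(a, secret))) % q
    return r - q if r > q // 2 else r

q = 97                      # toy modulus (insecure)
rng = random.Random(1)      # fixed seed so the run is repeatable
secret = [rng.randrange(q) for _ in range(4)]
samples = [lwe_sample(secret, q, noise_bound=2, rng=rng) for _ in range(8)]

# Whoever knows the secret sees only the small noise term in each sample.
residuals = [centered_residual(a, b, secret, q) for a, b in samples]
print(residuals)
```

Without the noise, the secret could be recovered from a few samples by Gaussian elimination; the conjectured hardness comes from the error term.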

In probability theory, a logit-normal distribution is a probability distribution of a random variable whose logit has a normal distribution. If Y is a random variable with a normal distribution, and t is the standard logistic function, then X = t(Y) has a logit-normal distribution; likewise, if X is logit-normally distributed, then Y = logit(X) = log(X/(1-X)) is normally distributed. It is also known as the logistic normal distribution, a name that often refers to a multinomial logit version.
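The construction X = t(Y) translates directly into a sampler: draw Y from a normal distribution and push it through the standard logistic function. The parameters below are assumed for illustration.

```python
import math
import random

def logistic(y):
    """Standard logistic function t(y) = 1 / (1 + exp(-y))."""
    return 1.0 / (1.0 + math.exp(-y))

def logit(x):
    """Inverse of the logistic function: log(x / (1 - x)), for 0 < x < 1."""
    return math.log(x / (1.0 - x))

rng = random.Random(0)
mu, sigma = 0.5, 1.0  # assumed parameters of the underlying normal

ys = [rng.gauss(mu, sigma) for _ in range(5)]  # normal draws
xs = [logistic(y) for y in ys]                 # logit-normal draws in (0, 1)
recovered = [logit(x) for x in xs]             # back to the normal scale
print(xs)
```

Applying `logit` to the samples recovers the original normal draws up to floating-point error, which is the defining property stated above.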

In machine learning, the kernel embedding of distributions comprises a class of nonparametric methods in which a probability distribution is represented as an element of a reproducing kernel Hilbert space (RKHS). A generalization of the individual data-point feature mapping done in classical kernel methods, the embedding of distributions into infinite-dimensional feature spaces can preserve all of the statistical features of arbitrary distributions, while allowing one to compare and manipulate distributions using Hilbert space operations such as inner products, distances, projections, linear transformations, and spectral analysis. This learning framework is very general and can be applied to distributions over any space on which a sensible kernel function may be defined. For example, various kernels have been proposed for learning from data which are: vectors in $\mathbb{R}^d$, discrete classes/categories, strings, graphs/networks, images, time series, manifolds, dynamical systems, and other structured objects. The theory behind kernel embeddings of distributions has been primarily developed by Alex Smola, Le Song, Arthur Gretton, and Bernhard Schölkopf. Reviews of recent work on kernel embedding of distributions exist in the literature.

In statistics, the complex Wishart distribution is a complex version of the Wishart distribution. It is the distribution of n times the sample Hermitian covariance matrix of n zero-mean independent Gaussian random variables. It has support for Hermitian positive definite matrices.

Poisson-type (PT) random measures are a family of three random counting measures which are closed under restriction to a subspace, i.e. closed under thinning. They are the only distributions in the canonical non-negative power series family of distributions to possess this property and include the Poisson distribution, negative binomial distribution, and binomial distribution. The PT family of distributions is also known as the Katz family of distributions or the Panjer or (a,b,0) class of distributions, and may be retrieved through the Conway–Maxwell–Poisson distribution.

In computational and mathematical biology, a biological lattice-gas cellular automaton (BIO-LGCA) is a discrete model for moving and interacting biological agents, a type of cellular automaton. The BIO-LGCA is based on the lattice-gas cellular automaton (LGCA) model used in fluid dynamics. A BIO-LGCA model describes cells and other motile biological agents as point particles moving on a discrete lattice, thereby interacting with nearby particles. Contrary to classic cellular automaton models, particles in BIO-LGCA are defined by their position and velocity. This makes it possible to model and analyze active fluids and collective migration mediated primarily through changes in momentum, rather than density. BIO-LGCA applications include cancer invasion and cancer progression.

![Expected value of logarithmic rule, when Event 1 is expected to occur with probability of 0.8; the blue line is described by the function $0.8\log(x)+(1-0.8)\log(1-x)$](http://upload.wikimedia.org/wikipedia/commons/thumb/5/55/ExpectedLog.png/280px-ExpectedLog.png)