Related Research Articles

Cognitive science is the interdisciplinary, scientific study of the mind and its processes, drawing on linguistics, psychology, neuroscience, philosophy, computer science/artificial intelligence, and anthropology. It examines the nature, the tasks, and the functions of cognition. Cognitive scientists study intelligence and behavior, with a focus on how nervous systems represent, process, and transform information. Mental faculties of concern to cognitive scientists include language, perception, memory, attention, reasoning, and emotion. The analysis typically spans many levels of organization, from learning and decision-making to logic and planning, and from neural circuitry to modular brain organization. One of the fundamental concepts of cognitive science is that "thinking can best be understood in terms of representational structures in the mind and computational procedures that operate on those structures."
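As a toy illustration of that idea, the Python sketch below pairs a representational structure (a set of (subject, relation, object) facts) with a computational procedure that operates on it (deriving new `is_a` facts by transitivity). The fact format, the `is_a` relation, and the function name `infer_is_a` are assumptions made for this example, not part of any particular cognitive theory.

```python
# Toy sketch of "representations + procedures" (all names are hypothetical).
# Representational structure: a set of (subject, relation, object) facts.
# Computational procedure: close the set under transitivity of "is_a".

Fact = tuple[str, str, str]


def infer_is_a(facts: set[Fact]) -> set[Fact]:
    """Return a copy of `facts` closed under transitivity of 'is_a'."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        # Snapshot the current is_a links before adding new ones.
        is_a = [(s, o) for (s, r, o) in known if r == "is_a"]
        for a, b in is_a:
            for c, d in is_a:
                if b == c and (a, "is_a", d) not in known:
                    known.add((a, "is_a", d))
                    changed = True
    return known


facts = {("canary", "is_a", "bird"), ("bird", "is_a", "animal")}
closure = infer_is_a(facts)
print(("canary", "is_a", "animal") in closure)  # True
```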

Artificial consciousness (AC), also known as machine consciousness (MC), synthetic consciousness or digital consciousness, is the consciousness hypothesized to be possible in artificial intelligence. It is also the corresponding field of study, which draws insights from philosophy of mind, philosophy of artificial intelligence, cognitive science and neuroscience. The same terminology can be used with the term "sentience" instead of "consciousness" when specifically designating phenomenal consciousness.

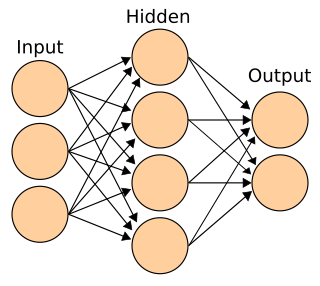

Connectionism is an approach to the study of human mental processes and cognition that uses mathematical models known as connectionist networks or artificial neural networks. Connectionism has gone through several 'waves' of interest since its beginnings.
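To make "connectionist network" concrete, here is a minimal sketch of the simplest such model: a single threshold unit whose connection weights are learned from examples with the classic perceptron learning rule. The task (logical OR), the learning rate, and the epoch count are illustrative choices, not drawn from any particular connectionist study.

```python
# Minimal connectionist sketch: one threshold unit trained with the
# perceptron learning rule. Task, learning rate, and epochs are illustrative.


def step(net: float) -> int:
    """Threshold activation: output 1 only if the net input is positive."""
    return 1 if net > 0 else 0


def predict(weights: list[float], bias: float, x: tuple[int, int]) -> int:
    return step(weights[0] * x[0] + weights[1] * x[1] + bias)


def train_perceptron(data, epochs: int = 10, lr: float = 1.0):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)
            # Adjust each connection in proportion to its input and the error.
            weights[0] += lr * error * x[0]
            weights[1] += lr * error * x[1]
            bias += lr * error
    return weights, bias


or_data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train_perceptron(or_data)
print([predict(w, b, x) for x, _ in or_data])  # [0, 1, 1, 1]
```

Because OR is linearly separable, the perceptron rule is guaranteed to converge here; a single unit of this kind cannot learn XOR, which is why multi-layer networks matter.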

In artificial intelligence, symbolic artificial intelligence is the collective term for all methods in artificial intelligence research that are based on high-level symbolic (human-readable) representations of problems, logic, and search. Symbolic AI used tools such as logic programming, production rules, semantic nets, and frames, and it produced applications such as knowledge-based systems, symbolic mathematics, automated theorem provers, ontologies, the semantic web, and automated planning and scheduling systems. The symbolic AI paradigm led to seminal ideas in search, symbolic programming languages, agents, and multi-agent systems, and to a clearer understanding of the strengths and limitations of formal knowledge and reasoning systems.
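Production rules, one of the tools named in this paragraph, can be illustrated with a minimal forward-chaining interpreter: facts in working memory are plain symbols, and any rule whose conditions are all present adds its conclusion, repeating until nothing new can be derived. The rule contents and the name `forward_chain` are hypothetical; real production-rule systems add variables, pattern matching, and conflict resolution that this sketch omits.

```python
# Minimal forward-chaining production system (rules are hypothetical).
# Working memory: a set of symbolic facts.
# Rule: (set of condition facts, conclusion fact).

Rule = tuple[frozenset[str], str]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Fire rules until no rule adds a new fact; return working memory."""
    memory = set(facts)
    fired = True
    while fired:
        fired = False
        for conditions, conclusion in rules:
            # A rule fires when all its conditions are in working memory.
            if conditions <= memory and conclusion not in memory:
                memory.add(conclusion)
                fired = True
    return memory


rules = [
    (frozenset({"has_feathers"}), "is_bird"),
    (frozenset({"is_bird", "can_fly"}), "can_migrate"),
]
result = forward_chain({"has_feathers", "can_fly"}, rules)
print(sorted(result))
# ['can_fly', 'can_migrate', 'has_feathers', 'is_bird']
```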

Bio-inspired computing, short for biologically inspired computing, is a field of study which seeks to solve computer science problems using models of biology. It relates to connectionism, social behavior, and emergence. Within computer science, bio-inspired computing relates to artificial intelligence and machine learning. Bio-inspired computing is a major subset of natural computation.
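The paragraph does not name a specific technique, but genetic algorithms, which borrow selection, crossover, and mutation from biological evolution, are one widely used family of bio-inspired methods. The sketch below evolves bit strings toward the all-ones string (the standard "OneMax" toy problem). The population size, mutation rate, and the use of truncation selection with elitism are illustrative assumptions, not tuned settings.

```python
import random


def fitness(bits: list[int]) -> int:
    """OneMax fitness: the number of 1 bits (optimum is all ones)."""
    return sum(bits)


def evolve(n_bits=20, pop_size=30, generations=50, mutation_rate=0.05, seed=0):
    """Toy genetic algorithm; returns the best individual and best-fitness history."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        history.append(fitness(pop[0]))
        parents = pop[: pop_size // 2]  # truncation selection: keep the top half
        children = [pop[0][:]]  # elitism: carry the best individual over unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n_bits)  # one-point crossover
            child = a[:cut] + b[cut:]
            # Bit-flip mutation with a small per-gene probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        pop = children
    best = max(pop, key=fitness)
    return best, history


best, history = evolve()
print(history[0], history[-1], fitness(best))
```

Elitism makes the best fitness non-decreasing from one generation to the next, which keeps this toy run easy to reason about.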

The language of thought hypothesis (LOTH), sometimes known as thought ordered mental expression (TOME), is a view in linguistics, philosophy of mind, and cognitive science put forward by the American philosopher Jerry Fodor. It describes thought as having a "language-like," or compositional, structure: simple concepts combine in systematic ways to build thoughts. In its most basic form, the theory states that thought, like language, has syntax.
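One rough way to picture "thought has syntax" is to treat thoughts as structured expressions built from reusable constituents. In the sketch below, the constructors `Pred`, `Not`, and `And` and the toy `world` are hypothetical, and this is an illustration of compositional structure rather than a model Fodor proposed. It shows systematicity: the constituents that form `loves(john, mary)` recombine into `loves(mary, john)`, and the truth of a complex thought is computed from its parts.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Pred:
    """Atomic thought: a predicate applied to arguments."""
    name: str
    args: tuple[str, ...]


@dataclass(frozen=True)
class Not:
    arg: "Thought"


@dataclass(frozen=True)
class And:
    left: "Thought"
    right: "Thought"


Thought = Union[Pred, Not, And]


def render(t: Thought) -> str:
    """Spell out a thought's syntactic structure as text."""
    if isinstance(t, Pred):
        return f"{t.name}({', '.join(t.args)})"
    if isinstance(t, Not):
        return f"not {render(t.arg)}"
    return f"({render(t.left)} and {render(t.right)})"


def holds(t: Thought, world: set[Pred]) -> bool:
    """Compute truth compositionally from the truth of the parts."""
    if isinstance(t, Pred):
        return t in world
    if isinstance(t, Not):
        return not holds(t.arg, world)
    return holds(t.left, world) and holds(t.right, world)


# Systematicity: the same constituents recombine into a different thought.
a = Pred("loves", ("john", "mary"))
b = Pred("loves", ("mary", "john"))
world = {a}
thought = And(a, Not(b))
print(render(thought))  # (loves(john, mary) and not loves(mary, john))
print(holds(thought, world))  # True
```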

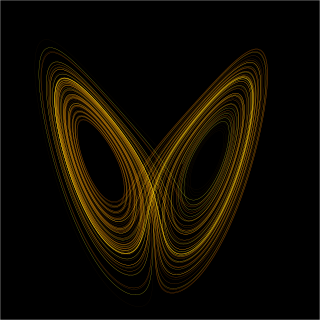

Dynamical systems theory is an area of mathematics used to describe the behavior of complex dynamical systems, usually by employing differential equations or difference equations. When differential equations are employed, the theory is called continuous dynamical systems. From a physical point of view, continuous dynamical systems is a generalization of classical mechanics, a generalization where the equations of motion are postulated directly and are not constrained to be Euler–Lagrange equations of a least action principle. When difference equations are employed, the theory is called discrete dynamical systems. When the time variable runs over a set that is discrete over some intervals and continuous over other intervals or is any arbitrary time-set such as a Cantor set, one gets dynamic equations on time scales. Some situations may also be modeled by mixed operators, such as differential-difference equations.
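The two cases can be seen side by side in a short Python sketch. A difference equation (the logistic map, a standard textbook example chosen here for illustration) is iterated step by step, while a differential equation (exponential decay, dx/dt = -kx) is approximated by forward Euler integration and compared with its exact solution x0·exp(-kt). The parameter values are arbitrary choices.

```python
import math


def logistic_map(r: float, x0: float, steps: int) -> list[float]:
    """Discrete dynamical system: x_{n+1} = r * x_n * (1 - x_n)."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


def euler_decay(k: float, x0: float, t_end: float, dt: float) -> float:
    """Continuous system dx/dt = -k * x, approximated with forward Euler steps."""
    x = x0
    for _ in range(round(t_end / dt)):
        x += dt * (-k * x)
    return x


# For r = 2.5 the map settles on its stable fixed point 1 - 1/r = 0.6.
print(round(logistic_map(2.5, 0.1, 100)[-1], 6))  # 0.6
# Euler approximation vs. the exact solution x0 * exp(-k * t).
print(euler_decay(1.0, 1.0, 1.0, 0.001), math.exp(-1.0))
```

Shrinking `dt` brings the Euler result closer to the exact value, which reflects that the discrete update is only an approximation of the continuous system.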

Paul Smolensky is Krieger-Eisenhower Professor of Cognitive Science at Johns Hopkins University and a Senior Principal Researcher at Microsoft Research in Redmond, Washington.

James Lloyd "Jay" McClelland, FBA, is the Lucie Stern Professor at Stanford University, where he formerly chaired the Psychology Department. He is best known for his work on statistical learning and parallel distributed processing, applying connectionist models to explain cognitive phenomena such as spoken and visual word recognition. McClelland is largely responsible for the surge of scientific interest in connectionism in the 1980s.

A cognitive architecture is both a theory about the structure of the human mind and a computational instantiation of such a theory, as used in artificial intelligence (AI) and computational cognitive science. The formalized models can be used to refine a comprehensive theory of cognition further, and they can also serve as useful artificial intelligence programs. Successful cognitive architectures include ACT-R and SOAR. Research on cognitive architectures as software instantiations of cognitive theories was initiated by Allen Newell in 1990.
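To give a flavor of how an architecture such as ACT-R turns a theory into computation, the sketch below implements one well-known ingredient of ACT-R's declarative memory, the base-level learning equation B = ln(Σ_j t_j^(-d)), where t_j is the time since the j-th use of a memory chunk and d is a decay parameter (0.5 is the conventional default). It is a single equation, not an implementation of ACT-R, and the timing values are made up.

```python
import math


def base_level_activation(times_since_use: list[float], d: float = 0.5) -> float:
    """ACT-R-style base-level learning: B = ln(sum over past uses of t ** -d).

    times_since_use: time elapsed since each past use of a chunk (all > 0).
    d: decay parameter; 0.5 is the commonly used default.
    """
    return math.log(sum(t ** -d for t in times_since_use))


# Recency: a chunk used recently is more active than one used long ago.
recent = base_level_activation([1.0, 10.0])
old = base_level_activation([100.0, 1000.0])
# Frequency: an additional past use raises activation.
practiced = base_level_activation([1.0, 10.0, 20.0])
print(recent > old, practiced > recent)  # True True
```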

A neural network, also called a neuronal network, is an interconnected population of neurons. Biological neural networks are studied to understand the organization and functioning of nervous systems.

Neurophilosophy or philosophy of neuroscience is the interdisciplinary study of neuroscience and philosophy that explores the relevance of neuroscientific studies to the arguments traditionally categorized as philosophy of mind. The philosophy of neuroscience attempts to clarify neuroscientific methods and results using the conceptual rigor and methods of philosophy of science.

David Everett Rumelhart was an American psychologist who made many contributions to the formal analysis of human cognition, working primarily within the frameworks of mathematical psychology, symbolic artificial intelligence, and parallel distributed processing. He also admired formal linguistic approaches to cognition, and explored the possibility of formulating a formal grammar to capture the structure of stories.

In philosophy of mind, the computational theory of mind (CTM), also known as computationalism, is a family of views that hold that the human mind is an information processing system and that cognition and consciousness together are a form of computation. Warren McCulloch and Walter Pitts (1943) were the first to suggest that neural activity is computational. They argued that neural computations explain cognition. The theory was proposed in its modern form by Hilary Putnam in 1967 and developed by his PhD student, the philosopher and cognitive scientist Jerry Fodor, in the 1960s, 1970s, and 1980s. It was vigorously disputed in analytic philosophy in the 1990s due to work by Putnam himself, John Searle, and others.
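McCulloch and Pitts' idea that neural activity is computational can be illustrated with binary threshold units: a unit fires when the weighted sum of its binary inputs reaches a threshold, and suitable weights and thresholds make single units behave as logic gates. This sketch models inhibition as a negative weight, a common modern simplification (the 1943 model treated an active inhibitory input as vetoing firing outright), and the function names are hypothetical.

```python
def mp_unit(inputs: list[int], weights: list[int], threshold: int) -> int:
    """Threshold unit: fire (1) iff the weighted input sum reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def and_gate(a: int, b: int) -> int:
    return mp_unit([a, b], [1, 1], threshold=2)


def or_gate(a: int, b: int) -> int:
    return mp_unit([a, b], [1, 1], threshold=1)


def not_gate(a: int) -> int:
    # Inhibitory input modeled as a negative weight (simplification).
    return mp_unit([a], [-1], threshold=0)


def xor_gate(a: int, b: int) -> int:
    # A small network of units computes XOR, which no single unit can.
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b), xor_gate(a, b))
```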

Embodied cognitive science is an interdisciplinary field of research, the aim of which is to explain the mechanisms underlying intelligent behavior. It comprises three main methodologies: the modeling of psychological and biological systems in a holistic manner that considers the mind and body as a single entity; the formation of a common set of general principles of intelligent behavior; and the experimental use of robotic agents in controlled environments.

Ron Sun is a cognitive scientist who has made significant contributions to computational psychology and other areas of cognitive science and artificial intelligence. He is currently a professor of cognitive sciences at Rensselaer Polytechnic Institute and was formerly the James C. Dowell Professor of Engineering and Professor of Computer Science at the University of Missouri. He received his Ph.D. in 1992 from Brandeis University.

Harmonic grammar is a linguistic model proposed by Geraldine Legendre, Yoshiro Miyata, and Paul Smolensky in 1990. It is a connectionist approach to modeling linguistic well-formedness. In the late 2000s and early 2010s, the term 'harmonic grammar' came to be used more generally for models of language that use weighted constraints, including ones that are not explicitly connectionist; see, e.g., Pater (2009) and Potts et al. (2010).
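In the weighted-constraint sense described here, each candidate output receives a harmony score equal to the negative weighted sum of its constraint violations, and the candidate with the highest harmony is predicted to be well-formed. The constraint names, weights, and violation counts below are hypothetical. They are chosen to show a "gang effect": two violations of a lower-weighted constraint outweigh one violation of a higher-weighted one, an outcome that a strict ranking of the same two constraints would not produce.

```python
def harmony(violations: dict[str, int], weights: dict[str, float]) -> float:
    """Harmony = -(sum over constraints of weight * violation count)."""
    return -sum(weights[c] * n for c, n in violations.items())


def most_harmonic(candidates: dict[str, dict[str, int]],
                  weights: dict[str, float]) -> str:
    """Return the name of the candidate with the highest harmony."""
    return max(candidates, key=lambda cand: harmony(candidates[cand], weights))


# Hypothetical constraints and weights.
weights = {"NoCoda": 3.0, "Max": 2.0}
candidates = {
    "faithful": {"NoCoda": 1, "Max": 0},  # harmony -3.0
    "deleting": {"NoCoda": 0, "Max": 2},  # harmony -4.0
}
print(most_harmonic(candidates, weights))  # faithful
```

Under a strict ranking with NoCoda above Max, "deleting" would win because it has no NoCoda violations; with weights, the two Max violations add up and "faithful" wins instead.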

The LIDA cognitive architecture is an integrated artificial cognitive system that attempts to model a broad spectrum of cognition in biological systems, from low-level perception/action to high-level reasoning. Developed primarily by Stan Franklin and colleagues at the University of Memphis, the LIDA architecture is empirically grounded in cognitive science and cognitive neuroscience. In addition to providing hypotheses to guide further research, the architecture can support control structures for software agents and robots. Providing plausible explanations for many cognitive processes, the LIDA conceptual model is also intended as a tool with which to think about how minds work.

Cognitive musicology is a branch of cognitive science concerned with computationally modeling musical knowledge with the goal of understanding both music and cognition.

Robert M. French is a research director at the French National Centre for Scientific Research. He is currently at the University of Burgundy in Dijon. He holds a Ph.D. from the University of Michigan, where he worked with Douglas Hofstadter on the Tabletop computational cognitive model. He specializes in cognitive science and has made an extensive study of the process of analogy-making.
