Related Research Articles

In computer science, static program analysis is the analysis of computer programs performed without executing them, in contrast with dynamic program analysis, which is performed on programs during their execution in the integrated environment.

Software testing is the act of checking whether software satisfies expectations.

A race condition or race hazard is the condition of an electronics, software, or other system where the system's substantive behavior is dependent on the sequence or timing of other uncontrollable events, leading to unexpected or inconsistent results. It becomes a bug when one or more of the possible behaviors is undesirable.

Code review is a software quality assurance activity in which one or more people check a program, mainly by viewing and reading parts of its source code, either after implementation or as an interruption of implementation. At least one of the persons must not have authored the code. The persons performing the checking, excluding the author, are called "reviewers".

In the context of software engineering, software quality refers to two related but distinct notions:

In programming and software development, fuzzing or fuzz testing is an automated software testing technique that involves providing invalid, unexpected, or random data as inputs to a computer program. The program is then monitored for exceptions such as crashes, failing built-in code assertions, or potential memory leaks. Typically, fuzzers are used to test programs that take structured inputs. This structure is specified, e.g., in a file format or protocol and distinguishes valid from invalid input. An effective fuzzer generates semi-valid inputs that are "valid enough" in that they are not directly rejected by the parser, but do create unexpected behaviors deeper in the program and are "invalid enough" to expose corner cases that have not been properly dealt with.

Java Pathfinder (JPF) is a system to verify executable Java bytecode programs. JPF was developed at the NASA Ames Research Center and open sourced in 2005. The acronym JPF is not to be confused with the unrelated Java Plugin Framework project.

Runtime verification is a computing system analysis and execution approach based on extracting information from a running system and using it to detect and possibly react to observed behaviors satisfying or violating certain properties. Some very particular properties, such as datarace and deadlock freedom, are typically desired to be satisfied by all systems and may be best implemented algorithmically. Other properties can be more conveniently captured as formal specifications. Runtime verification specifications are typically expressed in trace predicate formalisms, such as finite state machines, regular expressions, context-free patterns, linear temporal logics, etc., or extensions of these. This allows for a less ad-hoc approach than normal testing. However, any mechanism for monitoring an executing system is considered runtime verification, including verifying against test oracles and reference implementations. When formal requirements specifications are provided, monitors are synthesized from them and infused within the system by means of instrumentation. Runtime verification can be used for many purposes, such as security or safety policy monitoring, debugging, testing, verification, validation, profiling, fault protection, behavior modification, etc. Runtime verification avoids the complexity of traditional formal verification techniques, such as model checking and theorem proving, by analyzing only one or a few execution traces and by working directly with the actual system, thus scaling up relatively well and giving more confidence in the results of the analysis, at the expense of less coverage. Moreover, through its reflective capabilities runtime verification can be made an integral part of the target system, monitoring and guiding its execution during deployment.

In computer programming jargon, a heisenbug is a software bug that seems to disappear or alter its behavior when one attempts to study it. The term is a pun on the name of Werner Heisenberg, the physicist who first asserted the observer effect of quantum mechanics, which states that the act of observing a system inevitably alters its state. In electronics, the traditional term is probe effect, where attaching a test probe to a device changes its behavior.

Dynamic program analysis is the act of analyzing software that involves executing a program – as opposed to static program analysis, which does not execute it.

Search-based software engineering (SBSE) applies metaheuristic search techniques such as genetic algorithms, simulated annealing and tabu search to software engineering problems. Many activities in software engineering can be stated as optimization problems. Optimization techniques of operations research such as linear programming or dynamic programming are often impractical for large scale software engineering problems because of their computational complexity or their assumptions on the problem structure. Researchers and practitioners use metaheuristic search techniques, which impose little assumptions on the problem structure, to find near-optimal or "good-enough" solutions.

In engineering, debugging is the process of finding the root cause of and workarounds and possible fixes for bugs.

Jinx was a concurrency debugger that deterministically controlled the interleaving of workloads across processor cores, focusing on shared memory interactions. Using this deterministic approach, Jinx aimed to increase the frequency of occurrence of elusive shared memory bugs, sometimes called Heisenbugs. Jinx is no longer available. Corensic, the company that was developing Jinx, was bought by F5 Networks and the Jinx project was cancelled.

Astrée is a static analyzer based on abstract interpretation. It analyzes programs written in the programming languages C and C++, and emits an exhaustive list of possible runtime errors and assertion violations. The defect classes covered include divisions by zero, buffer overflows, dereferences of null or dangling pointers, data races, deadlocks, etc. Astrée includes a static taint checker and helps finding cybersecurity vulnerabilities, such as Spectre. It is proprietary software written in the language OCaml.

For several years parallel hardware was only available for distributed computing but recently it is becoming available for the low end computers as well. Hence it has become inevitable for software programmers to start writing parallel applications. It is quite natural for programmers to think sequentially and hence they are less acquainted with writing multi-threaded or parallel processing applications. Parallel programming requires handling various issues such as synchronization and deadlock avoidance. Programmers require added expertise for writing such applications apart from their expertise in the application domain. Hence programmers prefer to write sequential code and most of the popular programming languages support it. This allows them to concentrate more on the application. Therefore, there is a need to convert such sequential applications to parallel applications with the help of automated tools. The need is also non-trivial because large amount of legacy code written over the past few decades needs to be reused and parallelized.

High performance computing applications run on massively parallel supercomputers consist of concurrent programs designed using multi-threaded, multi-process models. The applications may consist of various constructs with varying degree of parallelism. Although high performance concurrent programs use similar design patterns, models and principles as that of sequential programs, unlike sequential programs, they typically demonstrate non-deterministic behavior. The probability of bugs increases with the number of interactions between the various parallel constructs. Race conditions, data races, deadlocks, missed signals and live lock are common error types.

Real-time testing is the process of testing real-time computer systems.

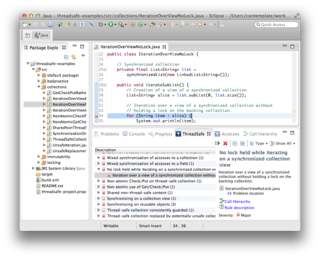

ThreadSafe is a source code analysis tool that identifies application risks and security vulnerabilities associated with concurrency in Java code bases, using whole-program interprocedural analysis. ThreadSafe is used to identify and avoid software failures in concurrent applications running in complex environments.

A software map represents static, dynamic, and evolutionary information of software systems and their software development processes by means of 2D or 3D map-oriented information visualization. It constitutes a fundamental concept and tool in software visualization, software analytics, and software diagnosis. Its primary applications include risk analysis for and monitoring of code quality, team activity, or software development progress and, generally, improving effectiveness of software engineering with respect to all related artifacts, processes, and stakeholders throughout the software engineering process and software maintenance.

References

- 1 2 Wang, Chao; Said, Mahmoud; Gupta, Aarti (21–28 May 2011). Coverage guided systematic concurrency testing. ICSE '11 Proceedings of the 33rd International Conference on Software Engineering. Waikiki. pp. 221–230.

- 1 2 Dustin, Elfriede (28 December 2002). Effective Software Testing: 50 Ways to Improve Your Software Testing. Addison-Wesley Longman. p. 186. ISBN 0201794292.

- ↑ Leiner, A.L.; Notz, W.A.; Smith, J.L.; Weinberger, A. (July 1959). "PILOT—A New Multiple Computer System". Journal of the ACM. 6 (3): 313–335. doi: 10.1145/320986.320987 . S2CID 19867617.

- ↑ Dijkstra, Edsger W. (May 1968). "The structure of the "THE"-multiprogramming system". Communications of the ACM. 11 (5): 341–346. doi: 10.1145/363095.363143 . S2CID 2021311.

- ↑ "Concurrent Software Testing: A Systematic Review" (PDF). Archived from the original on 24 September 2015. Retrieved 4 March 2014.

{{cite web}}: CS1 maint: bot: original URL status unknown (link) - 1 2 3 Binder, Robert V. (1999). Testing object-oriented systems: models, patterns, and tools . Addison-Wesley Longman. ISBN 0-201-80938-9.

- ↑ Melo, Silvana Morita; Souza, Simone do Rocio Senger de; Souza, Paulo Sérgio Lopes de; Carver, Jeffrey C. (2017). How to test your concurrent software: an approach for the selection of testing techniques. Conference on Systems, Programming, Languages, and Applications: Software for Humanity - SPLASH.

- 1 2 3 K.C., Tai (20–22 September 1989). Testing of concurrent software. Proceedings of the Thirteenth Annual International Computer Software & Applications Conference. Orlando, FL, USA, USA. pp. 62–64.

- 1 2 Hwang, Gwan-Hwan; Tai, Kuo-Chung; Huang, Ting-Lu (1995). "Reachability Testing: An Approach To Testing Concurrent Software". International Journal of Software Engineering and Knowledge Engineering. 5 (4): 493–510. doi:10.1142/S0218194095000241.

- ↑ Qi, Xiaofang; Li, Yueran (23–24 November 2018). Parallel Reachability Testing Based on Hadoop MapReduce. th International Conference, SATE 2018. Shenzhen, Guangdong, China. pp. 173–184. doi:10.1007/978-3-030-04272-1_11.

- 1 2 Lu, Shan; Park, Soyeon; Seo, Eunsoo; Zhou, Yuanyuan (1–5 March 2008). Learning from mistakes: a comprehensive study on real world concurrency bug characteristics. ASPLOS XIII Proceedings of the 13th international conference on Architectural support for programming languages and operating systems. Seattle, WA, USA. pp. 329–339.

- ↑ Artho, Cyrille; Biere, Armin (27–28 August 2001). Applying static analysis to large-scale, multi-threaded Java programs. Proceedings 2001 Australian Software Engineering Conference. Canberra, ACT, Australia, Australia. pp. 68–75.

- ↑ Manzoor, Numan; Munir, Hussan; Moayyed, Misagh (27–30 November 2012). Comparison of Static Analysis Tools for Finding Concurrency Bugs. 2012 IEEE 23rd International Symposium on Software Reliability Engineering Workshops. Dallas, TX, USA. pp. 129–133.