Digital storage media command and control (DSM-CC) is a toolkit for developing control channels associated with MPEG-1 and MPEG-2 streams. It is defined in part 6 of the MPEG-2 standard (Extensions for DSM-CC) and uses a client/server model in which the two sides communicate over an underlying network. That network may be carried in the MPEG-2 multiplex or, if needed, independently of it.

MPEG-1 is a standard for lossy compression of video and audio. It is designed to compress VHS-quality raw digital video and CD audio down to 1.5 Mbit/s without excessive quality loss, making video CDs, digital cable/satellite TV and digital audio broadcasting (DAB) possible.

MPEG-2 is a standard for "the generic coding of moving pictures and associated audio information". It describes a combination of lossy video compression and lossy audio data compression methods, which permit storage and transmission of movies using currently available storage media and transmission bandwidth. While MPEG-2 is not as efficient as newer standards such as H.264/AVC and H.265/HEVC, backwards compatibility with existing hardware and software means it is still widely used, for example in over-the-air digital television broadcasting and in the DVD-Video standard.

DSM-CC may be used to control video reception, providing features normally found on videocassette recorders (VCRs), such as fast-forward, rewind, and pause. It may also be used for a wide variety of other purposes, including packet data transport. It is defined by a series of standards, principally ISO/IEC 13818-6 (part 6 of the MPEG-2 standard).

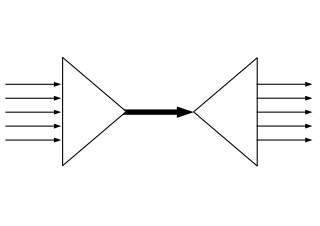

DSM-CC may work in conjunction with next-generation packet networks, alongside Internet protocols such as RSVP, RTSP, RTP and SCP. Although DSM-CC is usually associated with video delivery (via satellite or terrestrially) and with interactive content, it is also used among audio servers and clients. The architecture describes three main parts of the system: the client, the server, and the session resource manager (SRM). The server provides content and other services to the client, and both are "clients" of the SRM. The SRM allocates and manages network resources (such as channels, bandwidth, and network addresses). By combining server and client components on the same platforms, peer-to-peer content access and delivery systems can be constructed.
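The SRM's role as the single allocator of network resources can be illustrated with a toy model. The following is a minimal sketch only: the class name, the bandwidth-pool representation, and the session identifiers are assumptions made for illustration and are not taken from ISO/IEC 13818-6.

```python
from dataclasses import dataclass, field


@dataclass
class SessionResourceManager:
    """Toy SRM that hands out bandwidth from a fixed pool (illustrative only)."""

    total_kbps: int
    allocations: dict = field(default_factory=dict)  # session id -> reserved kbit/s

    def available_kbps(self) -> int:
        """Bandwidth not yet reserved by any session."""
        return self.total_kbps - sum(self.allocations.values())

    def allocate(self, session_id: str, kbps: int) -> bool:
        """Reserve bandwidth for a new session; refuse if the pool is too small."""
        if session_id in self.allocations or kbps > self.available_kbps():
            return False
        self.allocations[session_id] = kbps
        return True

    def release(self, session_id: str) -> None:
        """Return a session's bandwidth to the pool."""
        self.allocations.pop(session_id, None)


# Hypothetical session identifiers; both client and server would talk to the SRM.
srm = SessionResourceManager(total_kbps=10_000)
first_ok = srm.allocate("client-1/server-A", 6_000)
second_ok = srm.allocate("client-2/server-A", 6_000)  # more than what is left
srm.release("client-1/server-A")
retry_ok = srm.allocate("client-2/server-A", 6_000)
```

In this sketch neither the client nor the server reserves capacity directly; both depend on the SRM's answer, which mirrors the description of them as "clients" of the SRM.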

These specifications include numerous implementation options. For example, MPEG-2 video can be encoded in different ways, and a DSM-CC system can be constructed to include or exclude certain features and interfaces. Normally, an outside specification will define a profile of specific options, allowing systems built using common profiles to interoperate.
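One way to picture a profile is as a required set of options that each system must support. The sketch below assumes that simplification; the feature labels and the profile itself are hypothetical and do not correspond to any published profile.

```python
# Feature names are illustrative labels, not identifiers from the standard.
HYPOTHETICAL_BROADCAST_PROFILE = frozenset({"data_carousel", "object_carousel"})


def conforms(implemented_features, profile):
    """True if the implementation supports every option the profile requires."""
    return profile <= frozenset(implemented_features)


def can_interoperate(features_a, features_b, profile):
    """Two systems interoperate under a profile only if both conform to it."""
    return conforms(features_a, profile) and conforms(features_b, profile)


receiver = {"data_carousel", "object_carousel", "flow_controlled_download"}
headend = {"data_carousel", "object_carousel"}
minimal_box = {"data_carousel"}

receiver_ok = can_interoperate(receiver, headend, HYPOTHETICAL_BROADCAST_PROFILE)
minimal_ok = can_interoperate(minimal_box, headend, HYPOTHETICAL_BROADCAST_PROFILE)
```

Extra features beyond the profile do not prevent interoperation in this model; a missing required feature does.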

DSM-CC defines or extends five distinct protocols:

- User-User
  - Allows remote access by the client to objects on the server. The User-User specification goes beyond the definition of specific server object classes to define classes local to the client, as well as some of the interaction with other parts of the system. The distributed object model is based on CORBA. Objects are accessed using the Internet Inter-ORB Protocol (IIOP), with some optional extensions. Two subsets, "core" and "extended", are defined. In the model, some clients may also load content onto the server.

- User-Network
  - This protocol has two parts, Session and Resource. It is used between the client and the SRM, and between the server and the SRM. The U-N Session protocol is used to establish sessions with the network; each session is associated with resources that are allocated and released using the U-N Resource protocol.

- MPEG transport profiles
  - The specification provides profiles to the standard MPEG transport protocol (defined by ISO/IEC 13818-1) to allow transmission of event, synchronization, download, and other information in the MPEG transport stream.

- Download
  - Several variations of this protocol allow transfer of content from server to client, either within the MPEG transport stream or on a separate (presumably high-speed) channel. Flow-controlled download allows the download operations to be negotiated and controlled by the client. A variation of download is an autonomous "data carousel" on the server, which repeatedly downloads information; the download carousel client waits for the information without initiating the transfer. An extension to the data carousel is the "object carousel", which presents downloaded information as objects compatible with the objects defined by the User-User API. (The choice of download or IIOP protocols is embedded in the object's IOR, so the means of access is transparent to the client application.)

- Switched Digital Broadcast-Channel Change Protocol (SDB/CCP)
  - Enables a client to remotely switch from channel to channel in a broadcast environment. It is used to attach a client to a continuous-feed session (CFS) or other broadcast feed, and is sometimes used in pay-per-view.
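The data carousel behaviour described under Download, where the server repeats its content and the client only listens, can be sketched as follows. This is a simplified model under stated assumptions: modules are plain `(id, payload)` pairs, the function names are hypothetical, and real carousel message formats, versioning, and error handling are omitted.

```python
from itertools import cycle, islice


def carousel(modules):
    """Endlessly rebroadcast (module_id, payload) pairs in a fixed order."""
    return cycle(sorted(modules.items()))


def receive(broadcast, wanted_ids):
    """Listen passively until every wanted module has been seen once.

    Returns the collected modules and how many transmissions were heard.
    The client never sends a request; it only waits for the cycle to come round.
    """
    received = {}
    listened = 0
    for module_id, payload in broadcast:
        listened += 1
        if module_id in wanted_ids:
            received[module_id] = payload
        if wanted_ids.issubset(received):
            return received, listened


# Hypothetical module contents.
modules = {1: b"index", 2: b"app-code", 3: b"image"}

# The client tunes in mid-cycle, after modules 1 and 2 have already gone by.
late_join = islice(carousel(modules), 2, None)
received, listened = receive(late_join, {1, 2})
```

Because the client joined just after the modules it wanted, it has to sit through module 3 before modules 1 and 2 come round again. Waiting for the next cycle in this way is the cost of a transfer that the client does not initiate.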

An implementation does not need to support all of these protocols; in practice, almost all implementations use only a subset.