In probability theory and statistics, the negative binomial distribution is a discrete probability distribution that models the number of failures in a sequence of independent and identically distributed Bernoulli trials before a specified (non-random) number of successes occurs. For example, we can define rolling a 6 on a die as a success, and rolling any other number as a failure, and ask how many failure rolls will occur before we see the third success. In such a case, the probability distribution of the number of failures that appear will be a negative binomial distribution.
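
The die example can be made concrete with a short sketch. It assumes the standard probability mass function for the number of failures k before the r-th success, C(k + r − 1, k) · p^r · (1 − p)^k; the function name `neg_binomial_pmf` and the variable names are illustrative, not taken from any library.

```python
from math import comb


def neg_binomial_pmf(k: int, r: int, p: float) -> float:
    """Probability of exactly k failures before the r-th success.

    Each trial succeeds independently with probability p.
    """
    return comb(k + r - 1, k) * p**r * (1 - p) ** k


# Die example: a "success" is rolling a 6, and we wait for the third success.
p_six = 1 / 6
r_successes = 3

# Probability of seeing no failures at all (three sixes in a row).
no_failures = neg_binomial_pmf(0, r_successes, p_six)

# Approximate mean number of failures by truncating the infinite sum.
mean_failures = sum(
    k * neg_binomial_pmf(k, r_successes, p_six) for k in range(2000)
)
```

Under these assumptions the truncated mean agrees with the closed form r(1 − p)/p, which is 15 failures for the die example.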

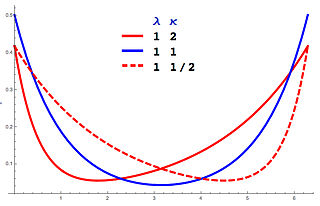

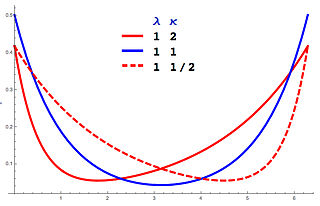

In probability theory and statistics, the exponential distribution or negative exponential distribution is the probability distribution of the time between events in a Poisson point process, i.e., a process in which events occur continuously and independently at a constant average rate. It is a particular case of the gamma distribution. It is the continuous analogue of the geometric distribution, and it has the key property of being memoryless. In addition to being used for the analysis of Poisson point processes it is found in various other contexts.
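
The memoryless property can be checked numerically. The sketch below assumes the rate parameterization, in which the survival function is P(X > t) = exp(−λt); the helper names are hypothetical.

```python
from math import exp


def exp_survival(t: float, rate: float) -> float:
    """P(X > t) for an exponential distribution with the given rate."""
    return exp(-rate * t)


def conditional_survival(s: float, t: float, rate: float) -> float:
    """P(X > s + t | X > s), computed directly from the definition."""
    return exp_survival(s + t, rate) / exp_survival(s, rate)


rate = 0.5
# Having already waited s = 2 time units does not change the chance
# of waiting at least t = 3 more.
waited = conditional_survival(2.0, 3.0, rate)
fresh = exp_survival(3.0, rate)
```

Here `waited` and `fresh` coincide up to floating-point rounding, which is exactly what memorylessness asserts.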

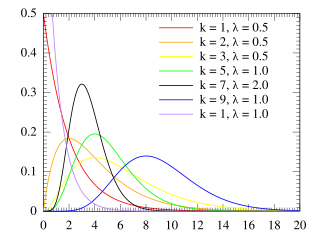

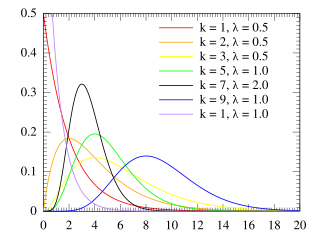

The Erlang distribution is a two-parameter family of continuous probability distributions with support x ∈ [0, ∞). The two parameters are a positive integer k, the shape, and a positive real number λ, the rate.

In mathematics, a polynomial sequence, i.e., a sequence of polynomials indexed by non-negative integers in which the index of each polynomial equals its degree, is said to be of binomial type if it satisfies the sequence of identities

$$p_n(x+y)=\sum_{k=0}^{n}\binom{n}{k}\,p_k(x)\,p_{n-k}(y).$$

In probability theory and statistics, the cumulants κₙ of a probability distribution are a set of quantities that provide an alternative to the moments of the distribution. Any two probability distributions whose moments are identical will have identical cumulants as well, and vice versa.
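
As an illustration of how cumulants relate to moments, the following sketch converts the first three raw moments into the first three cumulants using the standard identities κ₁ = m₁, κ₂ = m₂ − m₁², and κ₃ = m₃ − 3m₂m₁ + 2m₁³. The function name is hypothetical, and the Poisson raw moments used in the example are the textbook values for mean λ.

```python
def first_three_cumulants(m1: float, m2: float, m3: float) -> tuple:
    """Convert raw moments E[X], E[X^2], E[X^3] into cumulants k1, k2, k3."""
    k1 = m1                               # mean
    k2 = m2 - m1**2                       # variance
    k3 = m3 - 3 * m2 * m1 + 2 * m1**3     # third central moment
    return (k1, k2, k3)


# Example: a Poisson distribution with mean lam has raw moments
# lam, lam + lam^2, and lam + 3*lam^2 + lam^3.
lam = 2.0
poisson_cumulants = first_three_cumulants(
    lam, lam + lam**2, lam + 3 * lam**2 + lam**3
)
```

For the Poisson example all three cumulants come out equal to λ, a well-known property of that distribution.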

The Gram–Charlier A series and the Edgeworth series are series that approximate a probability distribution in terms of its cumulants. The series are the same, but the arrangement of terms differs. The key idea of these expansions is to write the characteristic function of the distribution whose probability density function f is to be approximated in terms of the characteristic function of a distribution with known and suitable properties, and to recover f through the inverse Fourier transform.

In probability theory, a compound Poisson distribution is the probability distribution of the sum of a number of independent identically-distributed random variables, where the number of terms to be added is itself a Poisson-distributed variable. The result can be either a continuous or a discrete distribution.
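
A minimal simulation sketch of this construction follows. It is one possible approach, not a reference implementation: the Poisson count is drawn by counting exponential inter-arrival times that fall within a unit interval, and the summands are assumed (purely for illustration) to be exponential with rate 2. All function names are hypothetical.

```python
import random


def sample_poisson(lam: float, rng: random.Random) -> int:
    """Draw a Poisson(lam) count as the number of arrivals in [0, 1]
    of a Poisson process with rate lam."""
    count = 0
    t = rng.expovariate(lam)
    while t <= 1.0:
        count += 1
        t += rng.expovariate(lam)
    return count


def sample_compound_poisson(lam: float, draw_term, rng: random.Random) -> float:
    """Sum a Poisson(lam)-distributed number of i.i.d. terms."""
    n_terms = sample_poisson(lam, rng)
    return sum(draw_term(rng) for _ in range(n_terms))


rng = random.Random(12345)  # fixed seed so the sketch is reproducible
samples = [
    sample_compound_poisson(3.0, lambda r: r.expovariate(2.0), rng)
    for _ in range(20000)
]
sample_mean = sum(samples) / len(samples)
```

With these assumed parameters the sample mean should be close to λ · E[X] = 3 × 0.5 = 1.5, and a visible fraction of the samples is exactly zero because the Poisson count can be zero.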

In probability theory and mathematical statistics, the law of total cumulance is a generalization to cumulants of the law of total probability, the law of total expectation, and the law of total variance. It has applications in the analysis of time series. It was introduced by David Brillinger.

In probability theory and statistics, the noncentral chi-squared distribution is a noncentral generalization of the chi-squared distribution. It often arises in the power analysis of statistical tests in which the null distribution is a chi-squared distribution; important examples of such tests are the likelihood-ratio tests.

In statistics, Poisson regression is a generalized linear model form of regression analysis used to model count data and contingency tables. Poisson regression assumes the response variable Y has a Poisson distribution, and assumes the logarithm of its expected value can be modeled by a linear combination of unknown parameters. A Poisson regression model is sometimes known as a log-linear model, especially when used to model contingency tables.
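
Written out, the model described above takes the following form, where the covariate vector $\mathbf{x}$ and the parameter vector $\boldsymbol{\theta}$ are notation assumed here for illustration:

$$\log \operatorname{E}[Y \mid \mathbf{x}] = \boldsymbol{\theta}^{\top}\mathbf{x}, \qquad \text{equivalently} \qquad \operatorname{E}[Y \mid \mathbf{x}] = e^{\boldsymbol{\theta}^{\top}\mathbf{x}}.$$

Because the logarithm is the link function, a unit change in one covariate multiplies the expected count by a constant factor rather than adding a constant to it.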

In probability and statistics, a natural exponential family (NEF) is a class of probability distributions that is a special case of an exponential family (EF).

Self-similar processes are types of stochastic processes that exhibit the phenomenon of self-similarity. A self-similar phenomenon behaves the same when viewed at different degrees of magnification, or different scales on a dimension. Self-similar processes can sometimes be described using heavy-tailed distributions, also known as long-tailed distributions. Examples of such processes include traffic processes, such as packet inter-arrival times and burst lengths. Self-similar processes can exhibit long-range dependency.

In probability and statistics, the Tweedie distributions are a family of probability distributions which include the purely continuous normal, gamma and inverse Gaussian distributions, the purely discrete scaled Poisson distribution, and the class of compound Poisson–gamma distributions which have positive mass at zero, but are otherwise continuous. Tweedie distributions are a special case of exponential dispersion models and are often used as distributions for generalized linear models.

In probability theory and statistics, the Conway–Maxwell–Poisson distribution is a discrete probability distribution named after Richard W. Conway, William L. Maxwell, and Siméon Denis Poisson that generalizes the Poisson distribution by adding a parameter to model overdispersion and underdispersion. It is a member of the exponential family, has the Poisson distribution and geometric distribution as special cases and the Bernoulli distribution as a limiting case.

In probability theory and statistics, the Poisson distribution is a discrete probability distribution that expresses the probability of a given number of events occurring in a fixed interval of time or space if these events occur with a known constant mean rate and independently of the time since the last event. It is named after French mathematician Siméon Denis Poisson. The Poisson distribution can also be used for the number of events in other specified interval types such as distance, area, or volume. It plays an important role for discrete-stable distributions.
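
For concreteness, here is a small sketch of the Poisson probability mass function, P(K = k) = λ^k e^(−λ) / k!, where λ is the expected number of events in the interval. The function name and the example rate of 4 events per interval are illustrative choices.

```python
from math import exp, factorial


def poisson_pmf(k: int, lam: float) -> float:
    """Probability of observing exactly k events when the mean count is lam."""
    return lam**k * exp(-lam) / factorial(k)


lam = 4.0
# Probabilities of 0..59 events; the remaining tail mass is negligible here.
pmf_values = [poisson_pmf(k, lam) for k in range(60)]
total_probability = sum(pmf_values)
mean_count = sum(k * p for k, p in enumerate(pmf_values))
```

The truncated sums recover a total probability of about 1 and a mean of about λ, consistent with the definition.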

In probability theory, concentration inequalities provide bounds on how a random variable deviates from some value. The law of large numbers of classical probability theory states that sums of independent random variables are, under very mild conditions, close to their expectation with a large probability. Such sums are the most basic examples of random variables concentrated around their mean. Recent results show that such behavior is shared by other functions of independent random variables.

In coding theory, Zemor's algorithm, designed and developed by Gilles Zemor, is a recursive low-complexity approach to code construction. It is an improvement over the algorithm of Sipser and Spielman.

In statistics, the q-Weibull distribution is a probability distribution that generalizes the Weibull distribution and the Lomax distribution. It is one example of a Tsallis distribution.

In probability theory and directional statistics, a wrapped asymmetric Laplace distribution is a wrapped probability distribution that results from the "wrapping" of the asymmetric Laplace distribution around the unit circle. For the symmetric case (asymmetry parameter κ = 1), the distribution becomes a wrapped Laplace distribution. The distribution of the ratio of two circular variates (Z) from two different wrapped exponential distributions will have a wrapped asymmetric Laplace distribution. These distributions find application in stochastic modelling of financial data.

In statistics, the complex Wishart distribution is a complex version of the Wishart distribution. It is the distribution of n times the sample Hermitian covariance matrix of n zero-mean independent Gaussian random variables. It has support for Hermitian positive definite matrices.