Information retrieval (IR) in computing and information science is the process of obtaining information system resources that are relevant to an information need from a collection of those resources. Searches can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds.

Corpus linguistics is the study of a language as that language is expressed in its text corpus, its body of "real world" text. Corpus linguistics proposes that a reliable analysis of a language is more feasible with corpora collected in the field—the natural context ("realia") of that language—with minimal experimental interference.

Word-sense disambiguation (WSD) is the process of identifying which sense of a word is meant in a sentence or other segment of context. In human language processing and cognition, it is usually subconscious/automatic but can often come to conscious attention when ambiguity impairs clarity of communication, given the pervasive polysemy in natural language. In computational linguistics, it is an open problem that affects other computer-related writing, such as discourse, improving relevance of search engines, anaphora resolution, coherence, and inference.

Cross-language information retrieval (CLIR) is a subfield of information retrieval dealing with retrieving information written in a language different from the language of the user's query. The term "cross-language information retrieval" has many synonyms, of which the following are perhaps the most frequent: cross-lingual information retrieval, translingual information retrieval, multilingual information retrieval. The term "multilingual information retrieval" refers more generally both to technology for retrieval of multilingual collections and to technology which has been moved to handle material in one language to another. The term Multilingual Information Retrieval (MLIR) involves the study of systems that accept queries for information in various languages and return objects of various languages, translated into the user's language. Cross-language information retrieval refers more specifically to the use case where users formulate their information need in one language and the system retrieves relevant documents in another. To do so, most CLIR systems use various translation techniques. CLIR techniques can be classified into different categories based on different translation resources:

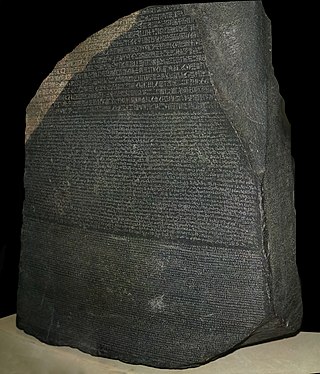

A parallel text is a text placed alongside its translation or translations. Parallel text alignment is the identification of the corresponding sentences in both halves of the parallel text. The Loeb Classical Library and the Clay Sanskrit Library are two examples of dual-language series of texts. Reference Bibles may contain the original languages and a translation, or several translations by themselves, for ease of comparison and study; Origen's Hexapla placed six versions of the Old Testament side by side. A famous example is the Rosetta Stone, whose discovery allowed the Ancient Egyptian language to begin being deciphered.

Machine translation can use a method based on dictionary entries, which means that the words will be translated as a dictionary does – word by word, usually without much correlation of meaning between them. Dictionary lookups may be done with or without morphological analysis or lemmatisation. While this approach to machine translation is probably the least sophisticated, dictionary-based machine translation is ideally suitable for the translation of long lists of phrases on the subsentential level, e.g. inventories or simple catalogs of products and services.

Document classification or document categorization is a problem in library science, information science and computer science. The task is to assign a document to one or more classes or categories. This may be done "manually" or algorithmically. The intellectual classification of documents has mostly been the province of library science, while the algorithmic classification of documents is mainly in information science and computer science. The problems are overlapping, however, and there is therefore interdisciplinary research on document classification.

In linguistics, a treebank is a parsed text corpus that annotates syntactic or semantic sentence structure. The construction of parsed corpora in the early 1990s revolutionized computational linguistics, which benefitted from large-scale empirical data.

Multi-document summarization is an automatic procedure aimed at extraction of information from multiple texts written about the same topic. The resulting summary report allows individual users, such as professional information consumers, to quickly familiarize themselves with information contained in a large cluster of documents. In such a way, multi-document summarization systems are complementing the news aggregators performing the next step down the road of coping with information overload.

Computational lexicology is a branch of computational linguistics, which is concerned with the use of computers in the study of lexicon. It has been more narrowly described by some scholars as the use of computers in the study of machine-readable dictionaries. It is distinguished from computational lexicography, which more properly would be the use of computers in the construction of dictionaries, though some researchers have used computational lexicography as synonymous.

A concept search is an automated information retrieval method that is used to search electronically stored unstructured text for information that is conceptually similar to the information provided in a search query. In other words, the ideas expressed in the information retrieved in response to a concept search query are relevant to the ideas contained in the text of the query.

The knowledge acquisition bottleneck is perhaps the major impediment to solving the word sense disambiguation (WSD) problem. Unsupervised learning methods rely on knowledge about word senses, which is barely formulated in dictionaries and lexical databases. Supervised learning methods depend heavily on the existence of manually annotated examples for every word sense, a requisite that can so far be met only for a handful of words for testing purposes, as it is done in the Senseval exercises.

In natural language processing and information retrieval, explicit semantic analysis (ESA) is a vectoral representation of text that uses a document corpus as a knowledge base. Specifically, in ESA, a word is represented as a column vector in the tf–idf matrix of the text corpus and a document is represented as the centroid of the vectors representing its words. Typically, the text corpus is English Wikipedia, though other corpora including the Open Directory Project have been used.

The following outline is provided as an overview of and topical guide to natural-language processing:

Sketch Engine is a corpus manager and text analysis software developed by Lexical Computing CZ s.r.o. since 2003. Its purpose is to enable people studying language behaviour to search large text collections according to complex and linguistically motivated queries. Sketch Engine gained its name after one of the key features, word sketches: one-page, automatic, corpus-derived summaries of a word's grammatical and collocational behaviour. Currently, it supports and provides corpora in 90+ languages.

In natural language processing, linguistics, and neighboring fields, Linguistic Linked Open Data (LLOD) describes a method and an interdisciplinary community concerned with creating, sharing, and (re-)using language resources in accordance with Linked Data principles. The Linguistic Linked Open Data Cloud was conceived and is being maintained by the Open Linguistics Working Group (OWLG) of the Open Knowledge Foundation, but has been a point of focal activity for several W3C community groups, research projects, and infrastructure efforts since then.

Drama annotation is the process of annotating the metadata of a drama. Given a drama expressed in some medium, the process of metadata annotation identifies what are the elements that characterize the drama and annotates such elements in some metadata format. For example, in the sentence "Laertes and Polonius warn Ophelia to stay away from Hamlet." from the text Hamlet, the word "Laertes", which refers to a drama element, namely a character, will be annotated as "Char", taken from some set of metadata. This article addresses the drama annotation projects, with the sets of metadata and annotations proposed in the scientific literature, based markup languages and ontologies.

In linguistics and language technology, a language resource is a "[composition] of linguistic material used in the construction, improvement and/or evaluation of language processing applications, (...) in language and language-mediated research studies and applications."

Mona Talat Diab is a computer science professor at George Washington University and a research scientist with Facebook AI. Her research focuses on natural language processing, computational linguistics, cross lingual/multilingual processing, computational socio-pragmatics, Arabic language processing, and applied machine learning.