Related Research Articles

Linear algebra is the branch of mathematics concerning linear equations, linear maps, and their representations in vector spaces and through matrices.

Numerical analysis is the study of algorithms that use numerical approximation for the problems of mathematical analysis. It is the study of numerical methods that attempt to find approximate solutions of problems rather than the exact ones. Numerical analysis finds application in all fields of engineering and the physical sciences, and in the 21st century also the life and social sciences, medicine, business and even the arts. Current growth in computing power has enabled the use of more complex numerical analysis, providing detailed and realistic mathematical models in science and engineering. Examples of numerical analysis include: ordinary differential equations as found in celestial mechanics, numerical linear algebra in data analysis, and stochastic differential equations and Markov chains for simulating living cells in medicine and biology.

In the mathematical discipline of linear algebra, a matrix decomposition or matrix factorization is a factorization of a matrix into a product of matrices. There are many different matrix decompositions; each finds use among a particular class of problems.

In numerical analysis and scientific computing, a sparse matrix or sparse array is a matrix in which most of the elements are zero. There is no strict definition regarding the proportion of zero-valued elements for a matrix to qualify as sparse, but a common criterion is that the number of non-zero elements is roughly equal to the number of rows or columns. By contrast, if most of the elements are non-zero, the matrix is considered dense. The number of zero-valued elements divided by the total number of elements is sometimes referred to as the sparsity of the matrix.
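The sparsity ratio described above can be computed directly. The following is a minimal sketch using plain Python lists; the function names `sparsity` and `to_coordinate_list` are hypothetical, and the coordinate (row, column, value) layout is only one of several possible ways to store the non-zero entries.

```python
def sparsity(matrix):
    """Return the fraction of zero-valued entries in a list-of-lists matrix."""
    total = sum(len(row) for row in matrix)
    zeros = sum(1 for row in matrix for value in row if value == 0)
    return zeros / total


def to_coordinate_list(matrix):
    """Keep only the non-zero entries as (row, column, value) triples."""
    return [
        (i, j, value)
        for i, row in enumerate(matrix)
        for j, value in enumerate(row)
        if value != 0
    ]


# Illustrative 4x4 matrix with 5 non-zero entries and 11 zeros.
example = [
    [4, 0, 0, 0],
    [0, 3, 0, 0],
    [0, 0, 2, 1],
    [0, 0, 0, 5],
]

ratio = sparsity(example)              # 11 zeros out of 16 entries
triples = to_coordinate_list(example)  # one triple per non-zero entry
```

Storing the triples instead of the full array pays off only when the number of non-zero entries is small compared with the total number of entries.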

In mathematics, particularly matrix theory, a band matrix or banded matrix is a sparse matrix whose non-zero entries are confined to a diagonal band, comprising the main diagonal and zero or more diagonals on either side.
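As an illustration of the definition, the width of the band can be measured by finding how far the non-zero entries stray from the main diagonal. This is a small sketch in plain Python; the name `bandwidths` is hypothetical and the matrix is assumed to be given as a list of rows.

```python
def bandwidths(matrix):
    """Return (lower, upper): the number of non-zero diagonals below and above the main one."""
    lower = upper = 0
    for i, row in enumerate(matrix):
        for j, value in enumerate(row):
            if value != 0:
                lower = max(lower, i - j)  # distance below the diagonal
                upper = max(upper, j - i)  # distance above the diagonal
    return lower, upper


# A tridiagonal matrix has one diagonal on each side of the main diagonal.
tridiagonal = [
    [2, -1, 0, 0],
    [-1, 2, -1, 0],
    [0, -1, 2, -1],
    [0, 0, -1, 2],
]

lower, upper = bandwidths(tridiagonal)
```

A diagonal matrix gives `(0, 0)` under this definition, matching the "zero or more diagonals on either side" wording.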

The finite element method (FEM) is a powerful technique originally developed for numerical solution of complex problems in structural mechanics, and it remains the method of choice for complex systems. In the FEM, the structural system is modeled by a set of appropriate finite elements interconnected at discrete points called nodes. Elements may have physical properties such as thickness, coefficient of thermal expansion, density, Young's modulus, shear modulus and Poisson's ratio.

In numerical analysis, Stone's method, also known as the strongly implicit procedure or SIP, is an algorithm for solving a sparse linear system of equations. The method uses an incomplete LU decomposition, which approximates the exact LU decomposition, to get an iterative solution of the problem. The method is named after Harold S. Stone, who proposed it in 1968.

Electromagnetic field solvers are specialized programs that solve Maxwell's equations directly. They form a part of the field of electronic design automation, or EDA, and are commonly used in the design of integrated circuits and printed circuit boards. They are used when a solution from first principles or the highest accuracy is required.

In numerical analysis and linear algebra, lower–upper (LU) decomposition or factorization factors a matrix as the product of a lower triangular matrix and an upper triangular matrix. The product sometimes includes a permutation matrix as well. LU decomposition can be viewed as the matrix form of Gaussian elimination. Computers usually solve square systems of linear equations using LU decomposition, and it is also a key step when inverting a matrix or computing the determinant of a matrix. The LU decomposition was introduced by the Polish astronomer Tadeusz Banachiewicz in 1938. To quote: "It appears that Gauss and Doolittle applied the method [of elimination] only to symmetric equations. More recent authors, for example, Aitken, Banachiewicz, Dwyer, and Crout … have emphasized the use of the method, or variations of it, in connection with non-symmetric problems … Banachiewicz … saw the point … that the basic problem is really one of matrix factorization, or “decomposition” as he called it." It is also sometimes referred to as LR decomposition.
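The factorization with a permutation matrix can be sketched as Gaussian elimination with partial pivoting. The code below is an illustrative dense implementation in plain Python, not the routine any particular library uses; the names `lu_decompose` and `lu_solve` are hypothetical, and the permutation is returned as a list `perm` in which row `i` of the permuted matrix is row `perm[i]` of the input.

```python
def lu_decompose(a):
    """Factor a square matrix so that P*A = L*U, using partial pivoting."""
    n = len(a)
    u = [list(map(float, row)) for row in a]
    l = [[0.0] * n for _ in range(n)]
    perm = list(range(n))
    for k in range(n):
        # Pick the row with the largest entry in column k as the pivot row.
        pivot = max(range(k, n), key=lambda r: abs(u[r][k]))
        if u[pivot][k] == 0.0:
            raise ValueError("matrix is singular")
        if pivot != k:
            u[k], u[pivot] = u[pivot], u[k]
            l[k], l[pivot] = l[pivot], l[k]
            perm[k], perm[pivot] = perm[pivot], perm[k]
        l[k][k] = 1.0
        for i in range(k + 1, n):
            factor = u[i][k] / u[k][k]
            l[i][k] = factor  # multiplier stored in the lower triangle
            for j in range(k, n):
                u[i][j] -= factor * u[k][j]
    return perm, l, u


def lu_solve(perm, l, u, b):
    """Solve A*x = b from the factors: forward substitution, then back substitution."""
    n = len(b)
    y = [0.0] * n
    for i in range(n):
        y[i] = b[perm[i]] - sum(l[i][j] * y[j] for j in range(i))
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(u[i][j] * x[j] for j in range(i + 1, n))) / u[i][i]
    return x


a = [[2, 1, 1], [4, 3, 3], [8, 7, 9]]
b = [4, 10, 24]
perm, l, u = lu_decompose(a)
x = lu_solve(perm, l, u, b)  # the exact solution of this system is [1, 1, 1]
```

Once the factors are available, each additional right-hand side costs only the two triangular substitutions, which is why the factorization is reused when solving several systems with the same matrix.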

Numerical linear algebra, sometimes called applied linear algebra, is the study of how matrix operations can be used to create computer algorithms which efficiently and accurately provide approximate answers to questions in continuous mathematics. It is a subfield of numerical analysis, and a type of linear algebra. Computers use floating-point arithmetic and cannot exactly represent irrational data, so when a computer algorithm is applied to a matrix of data, it can sometimes increase the difference between a number stored in the computer and the true number that it is an approximation of. Numerical linear algebra uses properties of vectors and matrices to develop computer algorithms that minimize the error introduced by the computer, and is also concerned with ensuring that the algorithm is as efficient as possible.

In mathematics, especially linear algebra, an M-matrix is a Z-matrix with eigenvalues whose real parts are nonnegative. The set of non-singular M-matrices is a subset of the class of P-matrices, and also of the class of inverse-positive matrices. The name M-matrix was seemingly originally chosen by Alexander Ostrowski in reference to Hermann Minkowski, who proved that if a Z-matrix has all of its row sums positive, then the determinant of that matrix is positive.

In numerical linear algebra, an incomplete LU factorization of a matrix is a sparse approximation of the LU factorization often used as a preconditioner.
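One common variant, usually called ILU(0), keeps the sparsity pattern of the original matrix and simply discards any fill-in that elimination would create. The sketch below assumes that variant, works on a dense list of rows for readability, uses no pivoting, and assumes every pivot is non-zero; the name `ilu0` is hypothetical.

```python
def ilu0(a):
    """Incomplete LU with zero fill-in.

    Returns one matrix holding the strict lower triangle of L (unit diagonal
    implied) and the upper triangle of U. Entries that are zero in `a` stay zero.
    """
    n = len(a)
    pattern = [[a[i][j] != 0 for j in range(n)] for i in range(n)]
    lu = [list(map(float, row)) for row in a]
    for i in range(1, n):
        for k in range(i):
            if not pattern[i][k]:
                continue  # no entry here, so no elimination step
            lu[i][k] /= lu[k][k]
            for j in range(k + 1, n):
                if pattern[i][j]:  # update only positions already in the pattern
                    lu[i][j] -= lu[i][k] * lu[k][j]
    return lu


# An "arrow" matrix: exact LU would fill positions (1, 2) and (2, 1).
arrow = [
    [4, 1, 1],
    [1, 4, 0],
    [1, 0, 4],
]
factors = ilu0(arrow)
```

For a matrix whose exact LU factors create no fill-in, such as a tridiagonal matrix, this procedure reproduces the exact factorization; otherwise the product of the factors only approximates the original matrix.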

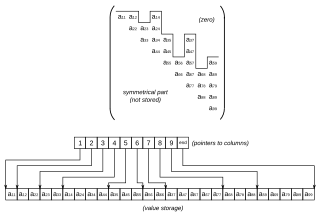

In scientific computing, skyline matrix storage (SKS), also called variable band matrix storage or the envelope storage scheme, is a sparse matrix storage format that reduces the storage requirement of a matrix more than banded storage does. In banded storage, all entries within a fixed distance from the diagonal are stored. In column-oriented skyline storage, only the entries from the first nonzero entry to the last nonzero entry in each column are stored. There is also row-oriented skyline storage, and, for symmetric matrices, only one triangle is usually stored.
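The column-oriented scheme can be sketched as follows. This is a minimal illustration in plain Python that stores, for each column, the row index of its first nonzero entry together with the run of values down to its last nonzero entry; the names `to_skyline` and `skyline_get` are hypothetical, and the sketch stores the full matrix rather than one triangle.

```python
def to_skyline(matrix):
    """Store each column as (first_row, values from first to last nonzero entry)."""
    n_rows = len(matrix)
    n_cols = len(matrix[0])
    columns = []
    for j in range(n_cols):
        nonzero_rows = [i for i in range(n_rows) if matrix[i][j] != 0]
        if not nonzero_rows:
            columns.append((0, []))  # an all-zero column stores nothing
            continue
        first, last = nonzero_rows[0], nonzero_rows[-1]
        columns.append((first, [matrix[i][j] for i in range(first, last + 1)]))
    return columns


def skyline_get(columns, i, j):
    """Read entry (i, j); anything outside the stored run is zero."""
    first, values = columns[j]
    offset = i - first
    return values[offset] if 0 <= offset < len(values) else 0


example = [
    [4, 1, 0, 0],
    [1, 5, 0, 2],
    [0, 0, 6, 0],
    [0, 2, 0, 7],
]
skyline = to_skyline(example)
stored = sum(len(values) for _, values in skyline)  # entries actually kept
```

Zeros that lie between the first and last nonzero entry of a column are still stored, which is what leaves room for the fill-in produced during factorization.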

MUMPS is a software application for the solution of large sparse systems of linear algebraic equations on distributed memory parallel computers. It was developed in the European project PARASOL (1996–1999) by CERFACS, IRIT-ENSEEIHT and RAL. The software implements the multifrontal method, which is a version of Gaussian elimination for large sparse systems of equations, especially those arising from the finite element method. It is written in Fortran 90 with parallelism by MPI, and it uses BLAS and ScaLAPACK kernels for dense matrix computations. Since 1999, MUMPS has been supported by CERFACS, IRIT-ENSEEIHT, and INRIA.

The finite element method (FEM) is a popular method for numerically solving differential equations arising in engineering and mathematical modeling. Typical problem areas of interest include the traditional fields of structural analysis, heat transfer, fluid flow, mass transport, and electromagnetic potential.

In mathematics, a matrix is a rectangular array or table of numbers, symbols, or expressions, arranged in rows and columns, which is used to represent a mathematical object or a property of such an object.

In numerical analysis, nested dissection is a divide and conquer heuristic for the solution of sparse symmetric systems of linear equations based on graph partitioning. Nested dissection was introduced by George (1973); the name was suggested by Garrett Birkhoff.

In numerical mathematics, hierarchical matrices (H-matrices) are used as data-sparse approximations of non-sparse matrices. While a sparse matrix of dimension $n$ can be represented efficiently in $O(n)$ units of storage by storing only its non-zero entries, a non-sparse matrix would require $O(n^2)$ units of storage, and using this type of matrix for large problems would therefore be prohibitively expensive in terms of storage and computing time. Hierarchical matrices provide an approximation requiring only $O(n k \log(n))$ units of storage, where $k$ is a parameter controlling the accuracy of the approximation. In typical applications, e.g., when discretizing integral equations, preconditioning the resulting systems of linear equations, or solving elliptic partial differential equations, a rank proportional to $\log(1/\epsilon)^\gamma$ with a small constant $\gamma$ is sufficient to ensure an accuracy of $\epsilon$. Compared to many other data-sparse representations of non-sparse matrices, hierarchical matrices offer a major advantage: the results of matrix arithmetic operations like matrix multiplication, factorization or inversion can be approximated in $O(n k^\alpha \log(n)^\beta)$ operations, where $\alpha, \beta \in \{1, 2, 3\}$.

In numerical analysis, the mixed finite element method is a type of finite element method in which extra fields to be solved are introduced when posing a partial differential equation problem. Somewhat related is the hybrid finite element method. The extra fields are constrained by using Lagrange multiplier fields. To distinguish them from the mixed finite element method, the usual finite element methods that do not introduce such extra fields are also called irreducible or primal finite element methods. The mixed finite element method is efficient for some problems that would be numerically ill-posed if discretized with the irreducible finite element method; one example is computing the stress and strain fields in an almost incompressible elastic body.
