In machine learning, a neural network is a model inspired by the structure and function of biological neural networks in animal brains.

Cognitive neuroscience is the scientific field concerned with the biological processes and aspects that underlie cognition, with a specific focus on the neural connections in the brain that are involved in mental processes. It addresses how cognitive activities are affected or controlled by neural circuits in the brain. Cognitive neuroscience is a branch of both neuroscience and psychology, overlapping with disciplines such as behavioral neuroscience, cognitive psychology, physiological psychology and affective neuroscience. It relies upon theories in cognitive science coupled with evidence from neurobiology and computational modeling.

Unsupervised learning is a method in machine learning where, in contrast to supervised learning, algorithms learn patterns exclusively from unlabeled data. Within such an approach, a machine learning model tries to find any similarities, differences, patterns, and structure in data by itself. No prior human intervention is needed.

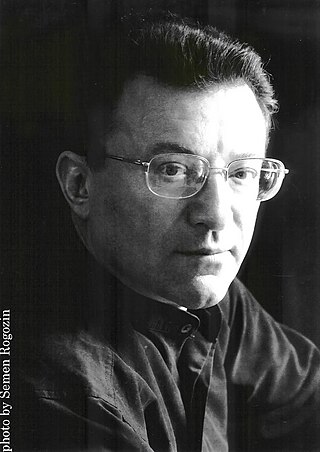

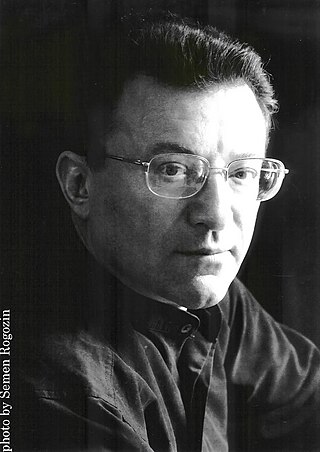

Jürgen Schmidhuber is a German computer scientist noted for his work in the field of artificial intelligence, specifically artificial neural networks. He is a scientific director of the Dalle Molle Institute for Artificial Intelligence Research in Switzerland. He is also director of the Artificial Intelligence Initiative and professor of the Computer Science program in the Computer, Electrical, and Mathematical Sciences and Engineering (CEMSE) division at the King Abdullah University of Science and Technology (KAUST) in Saudi Arabia.

Bart Andrew Kosko is a writer and professor of electrical engineering and law at the University of Southern California (USC). He is a researcher and popularizer of fuzzy logic, neural networks, and noise, and the author of several trade books and textbooks on these and related subjects of machine intelligence. He was awarded the 2022 Donald O. Hebb Award for neural learning by the International Neural Network Society.

Winner-take-all is a computational principle applied in computational models of neural networks, in which neurons compete with each other for activation. In the classical form, only the neuron with the highest activation stays active while all other neurons shut down; however, other variations allow more than one neuron to be active, for example the soft winner-take-all, in which a power function is applied to the neurons.
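The two variants can be illustrated with a minimal Python sketch. The function names are hypothetical, and the soft variant makes two assumptions not stated above: activations are non-negative, and the powered values are renormalized to sum to one.

```python
def winner_take_all(activations):
    """Classical form: only the most active neuron keeps its activation."""
    winner = max(range(len(activations)), key=lambda i: activations[i])
    return [a if i == winner else 0.0 for i, a in enumerate(activations)]


def soft_winner_take_all(activations, power=2.0):
    """Soft form (assumed variant): apply a power function, then renormalize.

    A larger `power` pushes the result closer to the classical form.
    Assumes all activations are non-negative.
    """
    powered = [a ** power for a in activations]
    total = sum(powered)
    if total == 0:
        return [0.0 for _ in activations]
    return [p / total for p in powered]


if __name__ == "__main__":
    print(winner_take_all([0.2, 0.9, 0.5]))
    print(soft_winner_take_all([1.0, 3.0], power=2.0))
```

In the soft variant every neuron stays active, but the strongest one takes a share that grows with the exponent.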

Neural gas is an artificial neural network, inspired by the self-organizing map and introduced in 1991 by Thomas Martinetz and Klaus Schulten. The neural gas is a simple algorithm for finding optimal data representations based on feature vectors. The algorithm was named "neural gas" because of the dynamics of the feature vectors during the adaptation process, which distribute themselves like a gas within the data space. It is applied where data compression or vector quantization is needed, for example in speech recognition, image processing or pattern recognition. As a robustly converging alternative to k-means clustering, it is also used for cluster analysis.
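The adaptation process can be sketched roughly as follows. This is an illustrative version of the commonly described rank-based update, not a reference implementation: the function name, the exponential decay schedules, and all default parameter values are assumptions chosen for the example.

```python
import math
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def neural_gas(data, n_units=4, n_epochs=50,
               eps=(0.5, 0.01), lam=(2.0, 0.1), seed=0):
    """Fit `n_units` feature vectors to `data` with rank-based updates.

    `eps` and `lam` are (initial, final) values for the step size and
    the neighborhood range; both decay exponentially (assumed schedule).
    """
    rng = random.Random(seed)
    units = [list(rng.choice(data)) for _ in range(n_units)]
    t, t_max = 0, n_epochs * len(data)
    for _ in range(n_epochs):
        samples = list(data)
        rng.shuffle(samples)
        for x in samples:
            frac = t / t_max
            eps_t = eps[0] * (eps[1] / eps[0]) ** frac
            lam_t = lam[0] * (lam[1] / lam[0]) ** frac
            # Rank every unit by its distance to the current sample.
            order = sorted(range(n_units), key=lambda i: sq_dist(units[i], x))
            for rank, i in enumerate(order):
                # Closer ranks move more; the influence decays with rank.
                h = math.exp(-rank / lam_t)
                units[i] = [w + eps_t * h * (xi - w)
                            for w, xi in zip(units[i], x)]
            t += 1
    return units


if __name__ == "__main__":
    points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
              (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
    print(neural_gas(points, n_units=2, n_epochs=20, seed=1))
```

Unlike k-means, every feature vector moves on every sample, by an amount that shrinks with its distance rank, which is what gives the vectors their gas-like spreading behavior early in training.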

Stephen Grossberg is a cognitive scientist, theoretical and computational psychologist, neuroscientist, mathematician, biomedical engineer, and neuromorphic technologist. He is the Wang Professor of Cognitive and Neural Systems and a Professor Emeritus of Mathematics & Statistics, Psychological & Brain Sciences, and Biomedical Engineering at Boston University.

Adaptive resonance theory (ART) is a theory developed by Stephen Grossberg and Gail Carpenter on aspects of how the brain processes information. It describes a number of artificial neural network models which use supervised and unsupervised learning methods, and address problems such as pattern recognition and prediction.

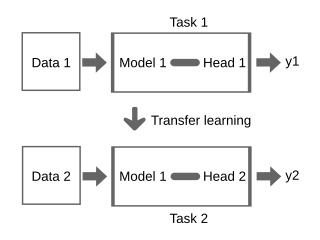

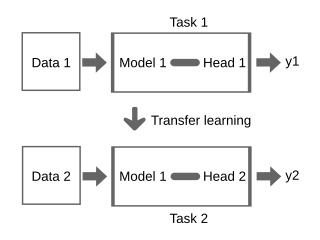

Transfer learning (TL) is a technique in machine learning (ML) in which knowledge learned from a task is re-used in order to boost performance on a related task. For example, for image classification, knowledge gained while learning to recognize cars could be applied when trying to recognize trucks. This topic is related to the psychological literature on transfer of learning, although practical ties between the two fields are limited. Reusing/transferring information from previously learned tasks to new tasks has the potential to significantly improve learning efficiency.

Spiking neural networks (SNNs) are artificial neural networks (ANNs) that more closely mimic natural neural networks. In addition to neuronal and synaptic state, SNNs incorporate the concept of time into their operating model. The idea is that neurons in the SNN do not transmit information at each propagation cycle, but rather transmit information only when a membrane potential (an intrinsic quality of the neuron related to its membrane electrical charge) reaches a specific value, called the threshold. When the membrane potential reaches the threshold, the neuron fires and generates a signal that travels to other neurons, which in turn increase or decrease their potentials in response to this signal. A neuron model that fires at the moment of threshold crossing is also called a spiking neuron model.
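The threshold mechanism can be illustrated with a minimal single-neuron sketch in Python. It assumes a discrete-time leaky integrate-and-fire model, one common choice of spiking neuron model; the function name, the `leak` factor, and the reset-to-zero behavior are assumptions of this example rather than details given above.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one neuron over discrete time steps.

    Returns a list with 1 at each step where the neuron fires, else 0.
    `leak` scales the stored potential each step (1.0 means no leak).
    """
    potential = reset
    spikes = []
    for current in input_current:
        # Integrate the incoming signal into the membrane potential.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)   # threshold reached: the neuron fires
            potential = reset  # and its potential is reset
        else:
            spikes.append(0)   # below threshold: nothing is transmitted
    return spikes


if __name__ == "__main__":
    print(simulate_lif([0.5, 0.5, 0.5, 0.5], leak=1.0))
```

In a full network, each emitted spike would be fed as input current (positive or negative, depending on the synapse) to the downstream neurons.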

Leonid Perlovsky is an Affiliated Research Professor at Northeastern University. His research involves cognitive algorithms and modeling of evolution of languages and cultures.

Yann André LeCun is a French-American computer scientist working primarily in the fields of machine learning, computer vision, mobile robotics and computational neuroscience. He is the Silver Professor of the Courant Institute of Mathematical Sciences at New York University and Vice-President, Chief AI Scientist at Meta.

Kunihiko Fukushima is a Japanese computer scientist, most noted for his work on artificial neural networks and deep learning. He is currently working part-time as a senior research scientist at the Fuzzy Logic Systems Institute in Fukuoka, Japan.

Deep learning is the subset of machine learning methods based on neural networks with representation learning. The adjective "deep" refers to the use of multiple layers in the network. The methods used can be supervised, semi-supervised or unsupervised.

DeepDream is a computer vision program created by Google engineer Alexander Mordvintsev that uses a convolutional neural network to find and enhance patterns in images via algorithmic pareidolia, thus creating a dream-like appearance reminiscent of a psychedelic experience in the deliberately overprocessed images.

Fusion adaptive resonance theory (fusion ART) generalizes the self-organizing neural networks known as the original Adaptive Resonance Theory models to learn recognition categories across multiple pattern channels. A separate stream of work on fusion ARTMAP extends fuzzy ARTMAP, which consists of two fuzzy ART modules connected by an inter-ART map field, to an architecture consisting of multiple ART modules.

In computer science, incremental learning is a method of machine learning in which input data is continuously used to extend the existing model's knowledge, i.e., to further train the model. It represents a dynamic technique of supervised learning and unsupervised learning that can be applied when training data becomes available gradually over time or when its size exceeds system memory limits. Algorithms that can facilitate incremental learning are known as incremental machine learning algorithms.
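As a rough illustration, the sketch below updates a model batch by batch without storing earlier data. It uses a nearest-centroid classifier with running means, chosen only because its update rule is simple; the class name `IncrementalCentroidClassifier` and its methods are hypothetical and not taken from any library.

```python
class IncrementalCentroidClassifier:
    """Nearest-centroid classifier whose centroids are updated online."""

    def __init__(self):
        self.counts = {}     # label -> number of samples seen so far
        self.centroids = {}  # label -> running mean of those samples

    def partial_fit(self, samples, labels):
        """Extend the model with a new batch; old data is not needed."""
        for x, y in zip(samples, labels):
            n = self.counts.get(y, 0) + 1
            c = self.centroids.get(y, [0.0] * len(x))
            # Running-mean update: c_new = c + (x - c) / n
            self.centroids[y] = [ci + (xi - ci) / n for ci, xi in zip(c, x)]
            self.counts[y] = n

    def predict(self, x):
        """Return the label whose centroid is closest to `x`."""
        return min(
            self.centroids,
            key=lambda y: sum((ci - xi) ** 2
                              for ci, xi in zip(self.centroids[y], x)),
        )


if __name__ == "__main__":
    model = IncrementalCentroidClassifier()
    model.partial_fit([(0.0, 0.0), (10.0, 10.0)], ["a", "b"])  # first batch
    model.partial_fit([(2.0, 2.0)], ["a"])                     # arrives later
    print(model.centroids, model.predict((1.0, 2.0)))
```

Because each batch only adjusts the stored counts and means, memory use stays constant no matter how much data has been seen.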

Thomas Martinetz is a German physicist and neuro-informatician.

Nikola Kirilov Kasabov, also known as Nikola Kirilov Kassabov, is a Bulgarian and New Zealand computer scientist, academic and author. He is a professor emeritus of Knowledge Engineering at Auckland University of Technology, Founding Director of the Knowledge Engineering and Discovery Research Institute (KEDRI), George Moore Chair of Data Analytics at Ulster University, and a visiting professor at both the Institute for Information and Communication Technologies (IICT) at the Bulgarian Academy of Sciences and Dalian University in China. He is also the Founder and Director of Knowledge Engineering Consulting.