Related Research Articles

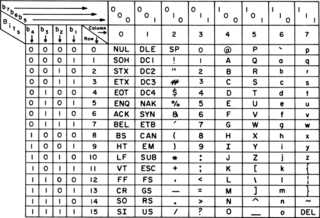

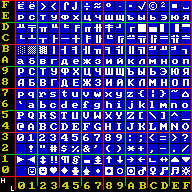

ASCII, an acronym for American Standard Code for Information Interchange, is a character encoding standard for electronic communication. ASCII codes represent text in computers, telecommunications equipment, and other devices. ASCII has just 128 code points, of which only 95 are printable characters, which severely limits its scope. The set of available punctuation had a significant impact on the syntax of computer languages and text markup. ASCII hugely influenced the design of character sets used by modern computers, including Unicode, which has over a million code points, the first 128 of which are the same as ASCII.
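The figures in this summary can be checked with a short sketch. It relies on Python's own notion of a printable character (`str.isprintable`), which is an assumption of this illustration rather than part of the ASCII standard, and on UTF-8 as the Unicode encoding being compared.

```python
# All 128 ASCII code points, as one-character strings.
ascii_chars = [chr(code) for code in range(128)]

# Characters Python regards as printable (space through tilde).
printable = [ch for ch in ascii_chars if ch.isprintable()]

# In UTF-8, each of the first 128 Unicode code points is encoded
# as the single byte that has the same value as its ASCII code.
same_as_ascii = all(ch.encode("utf-8") == bytes([ord(ch)]) for ch in ascii_chars)

print(len(printable), same_as_ascii)
```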

The bit is the most basic unit of information in computing and digital communication. The name is a portmanteau of binary digit. The bit represents a logical state with one of two possible values. These values are most commonly represented as either "1" or "0", but other representations such as true/false, yes/no, on/off, or +/− are also widely used.

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer, and for this reason it is the smallest addressable unit of memory in many computer architectures. To disambiguate arbitrarily sized bytes from the common 8-bit definition, network protocol documents such as the Internet Protocol refer to an 8-bit byte as an octet. The bits in an octet are usually numbered from 0 to 7 or from 7 to 0, depending on the bit endianness.

In computing and electronic systems, binary-coded decimal (BCD) is a class of binary encodings of decimal numbers where each digit is represented by a fixed number of bits, usually four or eight. Sometimes, special bit patterns are used for a sign or other indications.
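As a minimal sketch of the four-bits-per-digit case, the following packs two decimal digits into each byte ("packed BCD"). The function names are hypothetical, and the sketch assumes non-negative integers with no sign pattern.

```python
def to_packed_bcd(n: int) -> bytes:
    """Encode a non-negative integer as packed BCD, two decimal digits per byte."""
    if n < 0:
        raise ValueError("this sketch handles non-negative integers only")
    digits = str(n)
    if len(digits) % 2:
        digits = "0" + digits  # pad to an even number of digits
    return bytes(
        (int(digits[i]) << 4) | int(digits[i + 1])
        for i in range(0, len(digits), 2)
    )


def from_packed_bcd(data: bytes) -> int:
    """Decode packed BCD back to an integer, rejecting nibbles above 9."""
    value = 0
    for byte in data:
        high, low = byte >> 4, byte & 0x0F
        if high > 9 or low > 9:
            raise ValueError("nibble is not a valid decimal digit")
        value = value * 100 + high * 10 + low
    return value
```

With this encoding the hexadecimal dump of the bytes reads the same as the decimal number: 1234 is stored as the two bytes 0x12 and 0x34.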

In mathematics and computing, the hexadecimal numeral system is a positional numeral system that represents numbers using a radix (base) of sixteen. Unlike the decimal system representing numbers using ten symbols, hexadecimal uses sixteen distinct symbols, most often the symbols "0"–"9" to represent values 0 to 9 and "A"–"F" to represent values from ten to fifteen.
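The positional rule can be illustrated by repeated division by sixteen. This is a sketch with a hypothetical helper name; in practice Python's built-in `format(n, "X")` and `int(text, 16)` do the same job.

```python
HEX_DIGITS = "0123456789ABCDEF"


def to_hex(n: int) -> str:
    """Convert a non-negative integer to its hexadecimal digit string."""
    if n < 0:
        raise ValueError("this sketch handles non-negative integers only")
    if n == 0:
        return "0"
    digits = []
    while n:
        n, remainder = divmod(n, 16)  # remainder is the next least significant digit
        digits.append(HEX_DIGITS[remainder])
    return "".join(reversed(digits))
```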

In computer science, an integer is a datum of integral data type, a data type that represents some range of mathematical integers. Integral data types may be of different sizes and may or may not be allowed to contain negative values. Integers are commonly represented in a computer as a group of binary digits (bits). The size of the grouping varies so the set of integer sizes available varies between different types of computers. Computer hardware nearly always provides a way to represent a processor register or memory address as an integer.

A MAC address is a unique identifier assigned to a network interface controller (NIC) for use as a network address in communications within a network segment. This use is common in most IEEE 802 networking technologies, including Ethernet, Wi-Fi, and Bluetooth. Within the Open Systems Interconnection (OSI) network model, MAC addresses are used in the medium access control protocol sublayer of the data link layer. As typically represented, MAC addresses are recognizable as six groups of two hexadecimal digits, separated by hyphens, colons, or without a separator.
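The three textual forms mentioned above can be normalized to the same six octets. The sketch below uses hypothetical helper names and accepts only hyphens, colons, or no separator.

```python
import string


def parse_mac(text: str) -> bytes:
    """Parse a MAC address written with hyphens, colons, or no separator."""
    cleaned = text.replace("-", "").replace(":", "")
    if len(cleaned) != 12 or any(ch not in string.hexdigits for ch in cleaned):
        raise ValueError(f"not a 48-bit MAC address: {text!r}")
    return bytes.fromhex(cleaned)


def format_mac(mac: bytes, separator: str = ":") -> str:
    """Render six octets as groups of two lowercase hexadecimal digits."""
    if len(mac) != 6:
        raise ValueError("a MAC address has exactly six octets")
    return separator.join(f"{octet:02x}" for octet in mac)
```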

In computing, a nibble (occasionally nybble, nyble, or nybl to match the spelling of byte) is a four-bit aggregation, or half an octet. It is also known as half-byte or tetrade. In a networking or telecommunications context, the nibble is often called a semi-octet, quadbit, or quartet. A nibble has sixteen (2⁴) possible values. A nibble can be represented by a single hexadecimal digit (0–F), which is called a hex digit.
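A brief sketch of how an octet splits into two nibbles, each of which maps to one hexadecimal digit. The function name is hypothetical.

```python
def split_nibbles(octet: int) -> tuple[int, int]:
    """Return the (high, low) four-bit halves of an eight-bit value."""
    if not 0 <= octet <= 0xFF:
        raise ValueError("expected a value in the range 0..255")
    return octet >> 4, octet & 0x0F


high, low = split_nibbles(0xA7)
# Each nibble prints as exactly one hex digit.
hex_digits = f"{high:X}{low:X}"
```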

In computing, endianness is the order in which bytes within a word of digital data are transmitted over a data communication medium or addressed in computer memory, that is, whether the most significant or the least significant byte comes first. Endianness is primarily expressed as big-endian (BE) or little-endian (LE), terms introduced by Danny Cohen into computer science for data ordering in an Internet Experiment Note published in 1980. The adjective endian has its origin in the writings of the 18th-century Anglo-Irish writer Jonathan Swift. In the 1726 novel Gulliver's Travels, he portrays the conflict between sects of Lilliputians divided into those breaking the shell of a boiled egg from the big end or from the little end. By analogy, a CPU may read a digital word big end first, or little end first.
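The two byte orders can be compared with Python's built-in `int.to_bytes` and `int.from_bytes`. The 32-bit value used here is an arbitrary example chosen for this sketch.

```python
value = 0x12345678  # arbitrary 32-bit example value

# Big-endian: most significant byte first.
big = value.to_bytes(4, byteorder="big")

# Little-endian: least significant byte first.
little = value.to_bytes(4, byteorder="little")

# Reading the bytes back with the wrong order yields a different number.
misread = int.from_bytes(little, byteorder="big")
```

Here `big` holds the bytes 12 34 56 78 and `little` holds 78 56 34 12, so decoding requires knowing which convention the writer used.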

A computer number format is the internal representation of numeric values in digital device hardware and software, such as in programmable computers and calculators. Numerical values are stored as groupings of bits, such as bytes and words. The encoding between numerical values and bit patterns is chosen for convenience of the operation of the computer; the encoding used by the computer's instruction set generally requires conversion for external use, such as for printing and display. Different types of processors may have different internal representations of numerical values and different conventions are used for integer and real numbers. Most calculations are carried out with number formats that fit into a processor register, but some software systems allow representation of arbitrarily large numbers using multiple words of memory.

EtherType is a two-octet field in an Ethernet frame. It is used to indicate which protocol is encapsulated in the payload of the frame and is used at the receiving end by the data link layer to determine how the payload is processed. The same field is also used to indicate the size of some Ethernet frames.
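The dual use of the field can be sketched as a simple range check. The thresholds below (1500 and 0x0600) are taken from the IEEE 802.3 convention rather than from the summary above, and the function name is hypothetical.

```python
def classify_type_length_field(value: int) -> str:
    """Classify the two-octet field that follows the source MAC address."""
    if not 0 <= value <= 0xFFFF:
        raise ValueError("the field is two octets wide")
    if value <= 1500:
        return "length"      # payload size in octets
    if value >= 0x0600:
        return "ethertype"   # identifies the encapsulated protocol
    return "undefined"       # values 1501..1535 have no assigned meaning
```

For example, 0x0800 (IPv4) and 0x86DD (IPv6) fall in the EtherType range, while a value such as 46 is interpreted as a frame length.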

Dot-decimal notation is a presentation format for numerical data. It consists of a string of decimal numbers, using the full stop (dot) as a separation character.
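A minimal sketch of the notation applied to a sequence of octets, as in an IPv4-style address. Restricting each number to the range 0 to 255 is an assumption of this example, and the helper names are hypothetical.

```python
def to_dot_decimal(data: bytes) -> str:
    """Write each octet as a decimal number, separated by full stops."""
    return ".".join(str(octet) for octet in data)


def from_dot_decimal(text: str) -> bytes:
    """Parse dot-separated decimal numbers; each must fit in one octet."""
    # bytes() raises ValueError for any number outside 0..255.
    return bytes(int(part) for part in text.split("."))
```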

Robert William Bemer was a computer scientist best known for his work at IBM during the late 1950s and early 1960s.

An organizationally unique identifier (OUI) is a 24-bit number that uniquely identifies a vendor, manufacturer, or other organization.

Intel hexadecimal object file format, Intel hex format or Intellec Hex is a file format that conveys binary information in ASCII text form, making it possible to store on non-binary media such as paper tape, punch cards, etc., to display on text terminals or be printed on line-oriented printers. The format is commonly used for programming microcontrollers, EPROMs, and other types of programmable logic devices and hardware emulators. In a typical application, a compiler or assembler converts a program's source code to machine code and outputs it into an object or executable file in hexadecimal format. In some applications, the Intel hex format is also used as a container format holding packets of stream data. Common file extensions used for the resulting files are .HEX or .H86. The HEX file is then read by a programmer to write the machine code into a PROM or is transferred to the target system for loading and execution. There are various tools to convert files between hexadecimal and binary formats.
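To illustrate how such a text line carries binary data, the sketch below decodes a single record. It assumes the commonly documented record layout, which the summary above does not spell out: a leading colon, a byte count, a 16-bit big-endian address, a record type, the data bytes, and a checksum that makes all bytes sum to zero modulo 256. The names `HexRecord` and `parse_record` are hypothetical.

```python
from typing import NamedTuple


class HexRecord(NamedTuple):
    address: int
    record_type: int
    data: bytes


def parse_record(line: str) -> HexRecord:
    """Decode one Intel HEX record line (assumed layout, see lead-in)."""
    line = line.strip()
    if not line.startswith(":"):
        raise ValueError("record must start with ':'")
    raw = bytes.fromhex(line[1:])
    if len(raw) < 5:
        raise ValueError("record too short")
    if (sum(raw) & 0xFF) != 0:
        raise ValueError("checksum mismatch")
    count = raw[0]
    if len(raw) != count + 5:
        raise ValueError("byte count does not match record length")
    address = int.from_bytes(raw[1:3], byteorder="big")
    return HexRecord(address, raw[3], raw[4:4 + count])
```

A data record such as `:10010000214601360121470136007EFE09D2190140` decodes to 16 bytes at address 0x0100, and `:00000001FF` is the usual end-of-file record with type 1 and no data.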

The octet is a unit of digital information in computing and telecommunications that consists of eight bits. The term is often used when the term byte might be ambiguous, as the byte has historically been used for storage units of a variety of sizes.

In computer architecture, 12-bit integers, memory addresses, or other data units are those that are 12 bits wide. Also, 12-bit central processing unit (CPU) and arithmetic logic unit (ALU) architectures are those that are based on registers, address buses, or data buses of that size.

In digital computing and telecommunications, a unit of information is the capacity of some standard data storage system or communication channel, used to measure the capacities of other systems and channels. In information theory, units of information are also used to measure information contained in messages and the entropy of random variables.

Tektronix hex format and Extended Tektronix hex format / Extended Tektronix Object Format are ASCII-based hexadecimal file formats, created by Tektronix, for conveying binary information for applications like programming microcontrollers, EPROMs, and other kinds of chips.

Werner Buchholz was a German-American computer scientist. After growing up in Europe, Buchholz moved to Canada and then to the United States. He worked for International Business Machines (IBM) in New York. In June 1956, he coined the term "byte" for a unit of digital information. In 1990, he was recognized as a computer pioneer by the Institute of Electrical and Electronics Engineers.

References

- Bemer, Robert William (2000-08-08). "Why is a byte 8 bits? Or is it?". Computer History Vignettes. Archived from the original on 2017-04-03. Retrieved 2017-04-03.

[…] I came to work for IBM, and saw all the confusion caused by the 64-character limitation. Especially when we started to think about word processing, which would require both upper and lower case. […] I even made a proposal (in view of STRETCH, the very first computer I know of with an 8-bit byte) that would extend the number of punch card character codes to 256 […]. So some folks started thinking about 7-bit characters, but this was ridiculous. With IBM's STRETCH computer as background, handling 64-character words divisible into groups of 8 (I designed the character set for it, under the guidance of Dr. Werner Buchholz, the man who DID coin the term "byte" for an 8-bit grouping). […] It seemed reasonable to make a universal 8-bit character set, handling up to 256. In those days my mantra was "powers of 2 are magic". And so the group I headed developed and justified such a proposal […] The IBM 360 used 8-bit characters, although not ASCII directly. Thus Buchholz's "byte" caught on everywhere. I myself did not like the name for many reasons. The design had 8 bits moving around in parallel. But then came a new IBM part, with 9 bits for self-checking, both inside the CPU and in the tape drives. I exposed this 9-bit byte to the press in 1973. But long before that, when I headed software operations for Cie. Bull in France in 1965-66, I insisted that "byte" be deprecated in favor of "octet". […] It is justified by new communications methods that can carry 16, 32, 64, and even 128 bits in parallel. But some foolish people now refer to a "16-bit byte" because of this parallel transfer, which is visible in the UNICODE set. I'm not sure, but maybe this should be called a "hextet". […]

- Donnerhacke, Lutz; Hartmann, Richard; Horn, Michael; Rechthien, Kay; Weber, Leon (2011-04-07). "draft-denog-v6ops-addresspartnaming-04 - Naming IPv6 address parts". Internet Draft. 04. Archived from the original on 2017-04-03. Retrieved 2017-04-03.

- "IPv4 and IPv6 address formats". www.ibm.com. Retrieved 2024-08-02.

- Davies, Trefor (22 March 2011). "Bit Nibble Byte Chomp – a call to action".

The Timico engineering team has started to use the word "chomp" to represent two bytes or the 4 Hex character block in IPv6. Chomp is clearly in the mould of bit, nibble and byte and I would be grateful if you could chew this one over with a view to supporting the idea – we are submitting it as a suggestion when the above Draft expires.

- IEEE Std 1754-1994 - IEEE Standard for a 32-bit Microcontroller Architecture. The Institute of Electrical and Electronics Engineers, Inc. 1994. pp. 5–7. doi:10.1109/IEEESTD.1995.79519. ISBN 978-1-55937-428-6. Retrieved 2016-02-10. (NB. The standard defines doublets, quadlets, octlets and hexlets as 2, 4, 8 and 16 bytes, giving the numbers of bits (16, 32, 64 and 128) only as a secondary meaning.)

- Graziani, Rick (2012). IPv6 Fundamentals: A Straightforward Approach to Understanding IPv6. Cisco Press. p. 55. ISBN 978-0-13-303347-2.

- Coffeen, Tom (2014). IPv6 Address Planning: Designing an Address Plan for the Future. O'Reilly Media. p. 170. ISBN 978-1-4919-0326-1.

- Hinden, Robert M.; Deering, Stephen E. (December 1995). "IP Version 6 Addressing Architecture". RFC 1884. Archived from the original on 2017-04-03. Retrieved 2017-04-03.

The preferred form is x:x:x:x:x:x:x:x, where the 'x's are the hexadecimal values of the eight 16-bit pieces of the address

- Das, Kaushik. "IPv6 Addressing".

IPv6 addresses are denoted by eight groups of hexadecimal quartets separated by colons in between them.

- Brewster, Ronald L. (1994). Data Communications and Networks, Vol. III. IEE telecommunications series. Vol. 31. Institution of Electrical Engineers. p. 155. ISBN 9780852968048. Retrieved 2017-04-03.

A data symbol represents one quartet (4 bits) of binary data.

- Courbis, Paul; Lalande, Sébastien (2006-06-27) [1989]. Voyage au centre de la HP28c/s (in French) (2 ed.). Paris, France: Editions de la Règle à Calcul. OCLC 636072913. Archived from the original on 2016-08-06. Retrieved 2015-09-06.