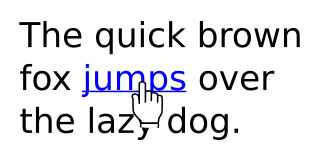

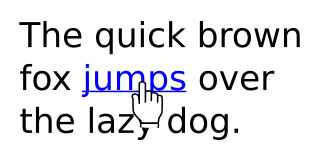

Hypertext is text displayed on a computer display or other electronic devices with references (hyperlinks) to other text that the reader can immediately access. Hypertext documents are interconnected by hyperlinks, which are typically activated by a mouse click, keypress set, or screen touch. Apart from text, the term "hypertext" is also sometimes used to describe tables, images, and other presentational content formats with integrated hyperlinks. Hypertext is one of the key underlying concepts of the World Wide Web, where Web pages are often written in the Hypertext Markup Language (HTML). As implemented on the Web, hypertext enables the easy-to-use publication of information over the Internet.

In computing, a hyperlink, or simply a link, is a digital reference to data that the user can follow or be guided to by clicking or tapping. A hyperlink points to a whole document or to a specific element within a document. Hypertext is text with hyperlinks. The text that is linked from is known as anchor text. A software system that is used for viewing and creating hypertext is a hypertext system, and to create a hyperlink is to hyperlink. A user following hyperlinks is said to navigate or browse the hypertext.
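The distinction between a link to a whole document and a link to a specific element, and the notion of anchor text, can be illustrated with a minimal sketch. It assumes HTML as the hypertext format and uses only the Python standard library; the names `AnchorCollector`, `collect_links` and `describe_target`, and the `example.org` URLs, are hypothetical and chosen for illustration only.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag


class AnchorCollector(HTMLParser):
    """Collect (href, anchor text) pairs from <a> elements."""

    def __init__(self):
        super().__init__()
        self.links = []    # finished (href, anchor_text) pairs
        self._href = None  # href of the <a> element currently open, if any
        self._text = []    # text fragments seen inside that element

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href is not None:
                self._href = href
                self._text = []

    def handle_data(self, data):
        # Only text inside an open <a href=...> counts as anchor text.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def collect_links(html):
    """Return every (href, anchor text) pair found in an HTML string."""
    parser = AnchorCollector()
    parser.feed(html)
    parser.close()
    return parser.links


def describe_target(href):
    """Split a link into its document part and its optional element part."""
    document, fragment = urldefrag(href)
    return {"document": document, "element": fragment or None}


page = (
    '<p>See <a href="https://example.org/doc.html#intro">the intro</a> '
    'and <a href="https://example.org/">home</a>.</p>'
)
for href, anchor_text in collect_links(page):
    print(anchor_text, describe_target(href))
```

A link whose URL carries a fragment identifier (the part after `#`) targets an element inside the document; a link without one targets the document as a whole.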

An HTML element is a type of HTML document component, one of several types of HTML nodes. The first used version of HTML was written by Tim Berners-Lee in 1993 and there have since been many versions of HTML. The current de facto standard is governed by the industry group WHATWG and is known as the HTML Living Standard.

This article presents a timeline of hypertext technology, including "hypermedia" and related human–computer interaction projects and developments from 1945 on. The term hypertext is credited to the author and philosopher Ted Nelson.

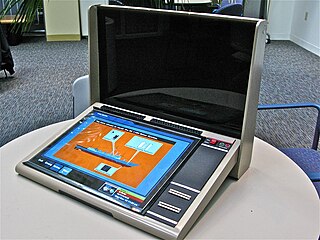

The Aspen Movie Map was a hypermedia system developed at MIT that enabled the user to take a virtual tour through the city of Aspen, Colorado. It was developed by a team working with Andrew Lippman in 1978 with funding from ARPA.

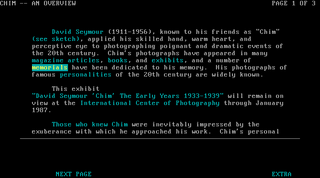

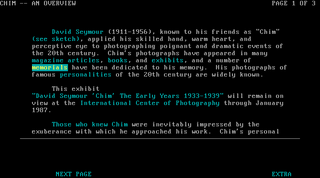

The Interactive Encyclopedia System, or TIES, was a hypertext system developed at the University of Maryland Human-Computer Interaction Lab by Ben Shneiderman in 1983. The earliest versions of TIES ran in DOS text mode, using the cursor arrow keys for navigating through information. A later version of HyperTIES for the Sun workstation was developed by Don Hopkins using the NeWS window system, with an authoring tool based on UniPress's Gosling Emacs text editor.

Hypermedia, an extension of the term hypertext, is a nonlinear medium of information that includes graphics, audio, video, plain text and hyperlinks. This designation contrasts with the broader term multimedia, which may include non-interactive linear presentations as well as hypermedia. It is also related to the field of electronic literature. The term was first used in a 1965 article written by Ted Nelson.

Inline linking is the use of a linked object, often an image, on one site by a web page belonging to a second site. One site is said to have an inline link to the other site where the object is located.
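As a rough illustration, inline links can be spotted by comparing the host of each embedded image with the host of the page that embeds it. The sketch below assumes HTML pages and treats only `<img>` elements as linked objects; the function name `find_inline_links` and the example hosts are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ImageSourceCollector(HTMLParser):
    """Collect the src attribute of every <img> element."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def find_inline_links(page_url, html):
    """Return absolute URLs of images served from a different host than the page."""
    parser = ImageSourceCollector()
    parser.feed(html)
    parser.close()
    page_host = urlparse(page_url).netloc
    external = []
    for src in parser.sources:
        absolute = urljoin(page_url, src)  # resolve relative paths against the page
        if urlparse(absolute).netloc != page_host:
            external.append(absolute)
    return external


html = '<img src="/logo.png"><img src="https://other.example/photo.jpg">'
print(find_inline_links("https://first.example/page.html", html))
```

Comparing hosts is a simplification: a single site may legitimately serve its own images from several hosts, so a host mismatch is a hint rather than proof of an inline link to another site.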

Hypertext fiction is a genre of electronic literature, characterized by the use of hypertext links that provide a new context for non-linearity in literature and reader interaction. The reader typically chooses links to move from one node of text to the next, and in this fashion arranges a story from a deeper pool of potential stories. Its spirit can also be seen in interactive fiction.

Intermedia was the third notable hypertext project to emerge from Brown University, after HES (1967) and FRESS (1969). Intermedia was started in 1985 by Norman Meyrowitz, who had been associated with earlier hypertext research at Brown. The Intermedia project coincided with the establishment of the Institute for Research in Information and Scholarship (IRIS). Some of the materials that came from Intermedia, authored by Meyrowitz, Nancy Garrett, and Karen Catlin, were used in the development of HTML.

Jane Yellowlees Douglas is a pioneer author and scholar of hypertext fiction. She began writing about hypermedia in the late 1980s, very early in the development of the medium. Her 1993 fiction I Have Said Nothing was one of the first published works of hypertext fiction.

Web Modeling Language (WebML) is a visual notation and methodology for the design of data-intensive web applications. It provides a graphical means to define the specifics of web application design within a structured design process. This process can be enhanced with the assistance of visual design tools.

Adaptive hypermedia (AH) is hypermedia that adapts to a user model. In contrast to regular hypermedia, where all users are offered the same set of hyperlinks, adaptive hypermedia tailors what the user is offered based on a model of the user's goals, preferences and knowledge, thus providing the links or content most appropriate to the current user.
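The idea of tailoring links to a user model can be sketched in a few lines. This is only one of many possible adaptation strategies, and everything in it is an assumption made for illustration: the `UserModel` and `Link` classes, their fields, and the rule of hiding links with unmet prerequisites while listing goal-related links first.

```python
from dataclasses import dataclass, field


@dataclass
class UserModel:
    goals: set = field(default_factory=set)         # topics the user wants to learn
    known_topics: set = field(default_factory=set)  # topics the user already knows


@dataclass
class Link:
    target: str
    topic: str
    prerequisites: set = field(default_factory=set)


def adapt_links(links, user):
    """Hide links the user is not ready for or no longer needs; put goal links first."""
    visible = [
        link
        for link in links
        if link.prerequisites <= user.known_topics   # all prerequisites are known
        and link.topic not in user.known_topics      # topic is not already mastered
    ]
    # sorted() is stable, so links keep their original order within each group.
    return sorted(visible, key=lambda link: link.topic not in user.goals)


links = [
    Link("intro.html", "basics"),
    Link("advanced.html", "transclusion", {"basics"}),
    Link("history.html", "history"),
]
novice = UserModel(goals={"history"})
print([link.target for link in adapt_links(links, novice)])
```

With the same set of authored links, a novice and an expert would see different selections and orderings, which is the contrast with regular hypermedia drawn above.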

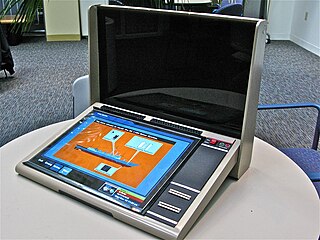

KMS, an abbreviation of Knowledge Management System, was a commercial second-generation hypermedia system, originally created as a successor to the early hypermedia system ZOG. KMS was developed by Don McCracken and Rob Akscyn of Knowledge Systems, a 1981 spinoff from the Computer Science Department of Carnegie Mellon University.

The Electronic Document System (EDS) was an early hypertext system – also known as the Interactive Graphical Documents (IGD) hypermedia system – focused on creation of interactive documents such as equipment repair manuals or computer-aided instruction texts with embedded links and graphics. EDS was a 1978–1981 research project at Brown University by Steven Feiner, Sandor Nagy and Andries van Dam.

Hypertext is text displayed on a computer or other electronic device with references (hyperlinks) to other text that the reader can immediately access, usually by a mouse click or keypress sequence. Early conceptions of hypertext defined it as text that could be connected by a linking system to a range of other documents that were stored outside that text. In 1934, Belgian bibliographer Paul Otlet developed a blueprint for links that telescoped out from hypertext electrically to allow readers to access documents, books, photographs, and so on, stored anywhere in the world.

Microcosm was a hypermedia system, originally developed in 1988 by the Department of Electronics and Computer Science at the University of Southampton, with a small team of researchers in the Computer Science group: Wendy Hall, Andrew Fountain, Hugh Davis and Ian Heath. The system pre-dates the web and builds on early hypermedia systems, such as Ted Nelson's Project Xanadu and the work of Douglas Engelbart. Like Intermedia and Hyper-G, other hypermedia systems created around the same time, Microcosm stores links between documents in a separate database.
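Storing links in a separate database, rather than embedding them in the documents, can be sketched as follows. This is not Microcosm's actual schema or API: the table layout, the function names and the file names are assumptions made for illustration, using an in-memory SQLite database from the Python standard library.

```python
import sqlite3


def open_linkbase():
    """Create an in-memory link database kept apart from the documents."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE links (source_doc TEXT, anchor TEXT, target_doc TEXT)")
    return db


def add_link(db, source_doc, anchor, target_doc):
    """Record that `anchor` in `source_doc` leads to `target_doc`."""
    db.execute("INSERT INTO links VALUES (?, ?, ?)", (source_doc, anchor, target_doc))


def links_for(db, source_doc, text):
    """Return (anchor, target) pairs whose anchor actually occurs in the text."""
    rows = db.execute(
        "SELECT anchor, target_doc FROM links WHERE source_doc = ? ORDER BY anchor",
        (source_doc,),
    ).fetchall()
    return [(anchor, target) for anchor, target in rows if anchor in text]


db = open_linkbase()
add_link(db, "essay.txt", "Xanadu", "xanadu.txt")
add_link(db, "essay.txt", "NLS", "nls.txt")

# The document itself is plain text and contains no link markup.
essay = "Ted Nelson's Xanadu influenced later systems."
print(links_for(db, "essay.txt", essay))
```

Because the document text is never modified, links can be added to read-only material, and different link sets can be applied to the same document.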

Social navigation is a form of social computing introduced in 1994 by Paul Dourish and Matthew Chalmers, who defined it as occurring when "movement from one item to another is provoked as an artifact of the activity of another or a group of others". According to later research in 2002, "social navigation exploits the knowledge and experience of peer users of information resources" to guide users in the information space. That research also noted that it is becoming more difficult to navigate and search efficiently with all the digital information available from the World Wide Web and other sources. Studying others' navigational trails and understanding their behavior can help users improve their own search strategies and make more informed decisions based on the actions of others.

In computer vision, object co-segmentation is a special case of image segmentation, which is defined as jointly segmenting semantically similar objects in multiple images or video frames.

Six Sex Scenes is a hypertext novella created by Adrienne Eisen and published on the web in 1996.