Related Research Articles

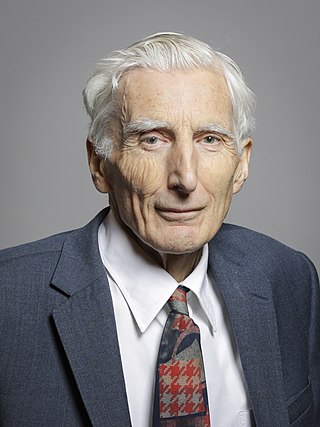

Martin John Rees, Baron Rees of Ludlow, is a British cosmologist and astrophysicist. He is the fifteenth Astronomer Royal, appointed in 1995, and was Master of Trinity College, Cambridge, from 2004 to 2012 and President of the Royal Society between 2005 and 2010. He has received various physics awards including the Wolf Prize in Physics in 2024 for fundamental contributions to high-energy astrophysics, galaxies and structure formation, and cosmology.

Sir John Theodore Houghton was a Welsh atmospheric physicist who was the co-chair of the Intergovernmental Panel on Climate Change's (IPCC) scientific assessment working group which shared the Nobel Peace Prize in 2007 with Al Gore. He was lead editor of the first three IPCC reports. He was professor in atmospheric physics at the University of Oxford, former Director General at the Met Office and founder of the Hadley Centre.

In the field of human factors and ergonomics, human reliability is the probability that a human performs a task to a sufficient standard. Reliability of humans can be affected by many factors such as age, physical health, mental state, attitude, emotions, personal propensity for certain mistakes, and cognitive biases.

Safety culture is the element of organizational culture which is concerned with the maintenance of safety and compliance with safety standards. It is informed by the organization's leadership and the beliefs, perceptions and values that employees share in relation to risks within the organization, workplace or community. Safety culture has been described in a variety of ways: notably, the National Academies of Science and the Association of Land Grant and Public Universities published summaries on this topic in 2014 and 2016.

Alan John Watson, Baron Watson of Richmond, is a UK-based broadcaster, Liberal Democrat politician and leadership communications consultant.

Douglas James Davies is a Welsh Anglican theologian, anthropologist, religious leader and academic, specialising in the history, theology, and sociology of death. He is Professor in the Study of Religion at the University of Durham. His fields of expertise also include anthropology, the study of religion, the rituals and beliefs surrounding funerary rites and cremation around the globe, Mormonism and Mormon studies. His research interests cover identity and belief, and Anglican leadership.

Human error is an action that has been done but that was "not intended by the actor; not desired by a set of rules or an external observer; or that led the task or system outside its acceptable limits". Human error has been cited as a primary cause and contributing factor in disasters and accidents in industries as diverse as nuclear power, aviation, space exploration, and medicine. Prevention of human error is generally seen as a major contributor to reliability and safety of (complex) systems. Human error is one of the many contributing causes of risk events.

Charles Wayne Rees CBE FRS FRSC was a British organic chemist.

Hugh Nigel Kennedy is a British medievalist and academic. He specialises in the history of the early Islamic Middle East, Muslim Iberia and the Crusades. From 1997 to 2007, he was Professor of Middle Eastern History at the University of St Andrews. Since 2007, he has been Professor of Arabic at SOAS, University of London.

Dame Professor Averil Millicent Cameron, often cited as A. M. Cameron, is a British historian. She writes on Late Antiquity, Classics, and Byzantine Studies. She was Professor of Late Antique and Byzantine History at the University of Oxford, and the Warden of Keble College, Oxford, between 1994 and 2010.

The Swiss cheese model of accident causation is a model used in risk analysis and risk management. It likens human systems to multiple slices of Swiss cheese stacked side by side, each with randomly placed and sized holes. The risk of a threat becoming a reality is mitigated by the differing layers and types of defenses that are "layered" behind each other. In theory, a lapse or weakness in one defense does not allow a risk to materialize, because the other defenses remain in place and prevent a single point of failure.
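The layering argument can be illustrated with a small numerical sketch. It rests on an assumption that the summary above does not state: each defensive layer fails to stop a hazard independently of the others, with a known probability. The function name `breach_probability` and the example probabilities are hypothetical and chosen only for illustration. In real systems, failures in different layers are often correlated, so this simple product should be read as an idealized picture rather than a validated risk estimate.

```python
from math import prod


def breach_probability(hole_probs):
    """Chance that a hazard passes through every layer.

    Assumption (not from the source): layers fail independently, so the
    per-layer probabilities of letting the hazard through can be multiplied.
    """
    for p in hole_probs:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"probability out of range: {p}")
    # With no layers at all the product is 1: nothing stops the hazard.
    return prod(hole_probs)


# Hypothetical per-layer probabilities of a "hole" lining up with the hazard.
layers = [0.1, 0.2, 0.05]

single_layer = breach_probability(layers[:1])   # only the first defense
all_layers = breach_probability(layers)         # all three defenses stacked

print(f"one layer:    {single_layer:.4f}")
print(f"three layers: {all_layers:.4f}")
```

Under the independence assumption, each added layer can only lower or preserve the chance that a hazard gets through, which is the intuition behind stacking dissimilar defenses.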

Sir Cary Lynn Cooper is an American-born British psychologist and 50th Anniversary Professor of Organizational Psychology and Health at the Manchester Business School, University of Manchester.

Farnham Grammar School, now called Farnham College, is located in Farnham, Surrey, in southern England.

David John Finney was a British statistician and Professor Emeritus of Statistics at the University of Edinburgh. He was Director of the Agricultural Research Council's Unit of Statistics from 1954 to 1984 and a former President of the Royal Statistical Society and of the Biometric Society. He was a pioneer in the development of systematic monitoring of drugs for detection of adverse reactions. He turned 100 in January 2017 and died on 12 November 2018 at the age of 101 following a short illness.

The healthcare error proliferation model is an adaptation of James Reason’s Swiss Cheese Model designed to illustrate the complexity inherent in the contemporary healthcare delivery system and the attribution of human error within these systems. The healthcare error proliferation model explains the etiology of error and the sequence of events typically leading to adverse outcomes. This model emphasizes the role that organizational and external cultures play in error identification, prevention, mitigation, and defense construction.

Sir Peter Julius Lachmann was a British immunologist, specialising in the study of the complement system. He was emeritus Sheila Joan Smith Professor of Immunology at the University of Cambridge, a fellow of Christ's College, Cambridge and honorary fellow of Trinity College, Cambridge and of Imperial College. He was knighted for service to medical science in 2002.

Jeffrey Braithwaite BA [UNE], DipIR, MIR [Syd], MBA [Macq], PhD [UNSW], FIML, FACHSM, FAAHMS, FFPHRCP [UK], FAcSS [UK], Hon FRACMA is an Australian professor, health services and systems researcher, writer and commentator, with an international profile and affiliations. He is Founding Director of the Australian Institute of Health Innovation at Macquarie University, Sydney, Australia; Director of the Centre for Healthcare Resilience and Implementation Science, Australian Institute of Health Innovation; and Professor of Health Systems Research, Macquarie University. He is President of the International Society for Quality in Healthcare.

Maritime resource management (MRM) or bridge resource management (BRM) is a set of human factors and soft skills training aimed at the maritime industry. The MRM training programme was launched in 1993 – at that time under the name bridge resource management – and aims at preventing accidents at sea caused by human error.

Ivan de Burgh Daly was a British experimental physiologist and animal physiologist who had a specialist knowledge of ECG use and was awarded a Beit Fellowship in this field in 1920. Together with Shellshear, he was the first in England to use thermionic valves in any biological context. In 1948, he was instrumental in the foundation of the Babraham Institute at the University of Cambridge. He was a leading authority on pulmonary and bronchial systems.

Just culture is a concept related to systems thinking which emphasizes that mistakes are generally a product of faulty organizational cultures, rather than solely brought about by the person or persons directly involved. In a just culture, after an incident, the question asked is, "What went wrong?" rather than "Who caused the problem?". A just culture is the opposite of a blame culture. A just culture is not the same as a no-blame culture as individuals may still be held accountable for their misconduct or negligence.

References

- Sumwalt, Robert L. (1 May 2018). "The Age of Reason". NTSB Safety Compass. Archived from the original on 18 December 2020. Retrieved 21 February 2024.

- Reason, James (1990). Human Error. Cambridge, England: Cambridge University Press. ISBN 978-0-521-30669-0.

- Reason, James T. (1997). Managing the Risks of Organizational Accidents. Farnham, England: Ashgate Publishing. ISBN 978-1-84014-105-4.

- Reason, James (2013). A Life in Error: From Little Slips to Big Disasters. Farnham, England and Burlington, Vt.: Ashgate Publishing. ISBN 978-1-4724-1843-2.