A light stage is an active illumination system used for shape, texture, reflectance and motion capture, often with structured light and a multi-camera setup.

The reflectance field over a human face was first captured in 1999 by Paul Debevec, Tim Hawkins et al. and presented at SIGGRAPH 2000. Their method for isolating the light that travels under the skin relied on the established finding that light reflecting off the air-to-oil interface retains its polarization, while light that travels under the skin loses its polarization. [1]
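The polarization property described above can be illustrated with a minimal sketch. It assumes two photographs of the same pose lit by linearly polarized light: one taken through a polarizer parallel to the light's polarization and one through a crossed polarizer. It also assumes ideal polarizers and that the depolarized under-skin light splits evenly between the two photographs. The function name `separate_reflection` and the flat-list image representation are hypothetical choices for illustration and are not taken from the original work.

```python
def separate_reflection(parallel, cross):
    """Estimate surface and under-skin reflection per pixel.

    parallel: pixel intensities seen through a polarizer aligned with the light
    cross:    pixel intensities seen through a polarizer rotated by 90 degrees

    Assumption: surface reflection keeps its polarization, so it appears only
    in the parallel image; under-skin light is depolarized, so half of it
    appears in each image.
    """
    # Surface (specular) reflection: what the crossed polarizer removes.
    specular = [max(p - c, 0.0) for p, c in zip(parallel, cross)]
    # Under-skin (subsurface) reflection: twice the cross-polarized signal.
    subsurface = [2.0 * c for c in cross]
    return specular, subsurface


# Two pixels: one with a strong highlight, one with no highlight at all.
specular, subsurface = separate_reflection([1.0, 0.25], [0.25, 0.25])
print(specular, subsurface)
```

Real measurements would also need noise handling and calibration of the polarizers, which this sketch leaves out.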

Using this information, a light stage was built by Debevec et al., consisting of

Following great scientific success, Debevec et al. constructed more elaborate versions of the light stage at the University of Southern California's (USC) Institute for Creative Technologies (ICT). Ghosh et al. built the seventh version of the USC light stage X. In 2014, President Barack Obama had his image and reflectance captured with the USC mobile light stage. [2]

Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as the render. Multiple models can be defined in a scene file containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, texture, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output.

Optics is the branch of physics that studies the behaviour and properties of light, including its interactions with matter and the construction of instruments that use or detect it. Optics usually describes the behaviour of visible, ultraviolet, and infrared light. Because light is an electromagnetic wave, other forms of electromagnetic radiation such as X-rays, microwaves, and radio waves exhibit similar properties.

In photography and videography, multi-exposure HDR capture is a technique that creates extended or high dynamic range (HDR) images by taking and combining multiple exposures of the same subject matter at different exposure levels. Combining multiple images in this way results in an image with a greater dynamic range than what would be possible by taking one single image. The technique can also be used to capture video by taking and combining multiple exposures for each frame of the video. The term "HDR" is used frequently to refer to the process of creating HDR images from multiple exposures. Many smartphones have an automated HDR feature that relies on computational imaging techniques to capture and combine multiple exposures.
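As a rough sketch of how multiple exposures can be combined, the following assumes a linear sensor response, pixel values normalized to the range 0 to 1, and known exposure times. Each exposure gives a radiance estimate of pixel value divided by exposure time, and the estimates are averaged with a weight that favors mid-range values over near-black or saturated ones. The names `hat_weight` and `merge_exposures` are hypothetical, and this is one simple weighting scheme rather than the method of any particular camera or library.

```python
def hat_weight(z):
    """Weight for a pixel value z in [0, 1]; peaks at 0.5, zero at 0 and 1."""
    return 1.0 - abs(2.0 * z - 1.0)


def merge_exposures(exposures, times):
    """Merge exposures (flat lists of values in [0, 1]) into relative radiance.

    exposures: one flat list of pixel values per photograph
    times:     exposure time in seconds for each photograph
    Assumes a linear sensor, so value / time estimates radiance.
    """
    shortest = min(range(len(times)), key=lambda k: times[k])
    radiance = []
    for i in range(len(exposures[0])):
        num = 0.0
        den = 0.0
        for img, t in zip(exposures, times):
            w = hat_weight(img[i])
            num += w * img[i] / t
            den += w
        if den > 0.0:
            radiance.append(num / den)
        else:
            # Every sample is black or saturated: fall back to the shortest
            # exposure, which is the least likely to be clipped.
            radiance.append(exposures[shortest][i] / times[shortest])
    return radiance


# Three exposures of two pixels; the first pixel saturates in the longest one.
images = [[0.25, 0.0625], [0.5, 0.125], [1.0, 0.25]]
print(merge_exposures(images, [0.125, 0.25, 0.5]))
```

Real cameras have a non-linear response curve that would have to be estimated and inverted first, a step this sketch omits.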

Motion capture is the process of recording the movement of objects or people. It is used in military, entertainment, sports, medical applications, and for validation of computer vision and robots. In filmmaking and video game development, it refers to recording actions of human actors and using that information to animate digital character models in 2D or 3D computer animation. When it includes face and fingers or captures subtle expressions, it is often referred to as performance capture. In many fields, motion capture is sometimes called motion tracking, but in filmmaking and games, motion tracking usually refers more to match moving.

In physics, backscatter is the reflection of waves, particles, or signals back to the direction from which they came. It is usually a diffuse reflection due to scattering, as opposed to specular reflection as from a mirror, although specular backscattering can occur at normal incidence with a surface. Backscattering has important applications in astronomy, photography, and medical ultrasonography. The opposite effect is forward scatter, e.g. when a translucent material like a cloud diffuses sunlight, giving soft light.

The light field is a vector function that describes the amount of light flowing in every direction through every point in space. The space of all possible light rays is given by the five-dimensional plenoptic function, and the magnitude of each ray is given by its radiance. Michael Faraday was the first to propose that light should be interpreted as a field, much like the magnetic fields on which he had been working. The phrase light field was coined by Andrey Gershun in a classic 1936 paper on the radiometric properties of light in three-dimensional space.

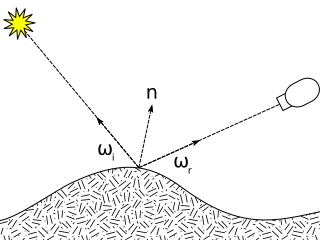

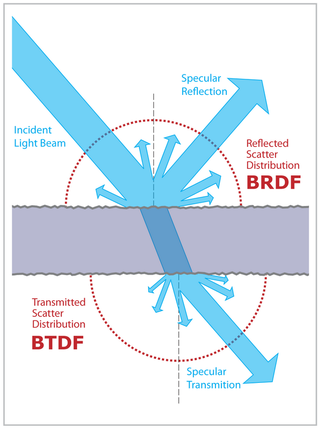

The bidirectional reflectance distribution function (BRDF) is a function of four real variables that defines how light is reflected at an opaque surface. It is employed in the optics of real-world light, in computer graphics algorithms, and in computer vision algorithms. The function takes an incoming light direction, $\omega_i$, and an outgoing direction, $\omega_r$, and returns the ratio of reflected radiance exiting along $\omega_r$ to the irradiance incident on the surface from direction $\omega_i$. Each direction is itself parameterized by an azimuth angle $\phi$ and a zenith angle $\theta$, so the BRDF as a whole is a function of four variables. The BRDF has units of sr⁻¹, with the steradian (sr) being a unit of solid angle.
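The ratio in this definition can be made concrete with the simplest common case, an ideal diffuse (Lambertian) surface, whose BRDF is the constant albedo divided by π regardless of direction. The sketch below is illustrative only: the function names are hypothetical, and the light is assumed to be a single directional beam whose irradiance is measured perpendicular to the beam, so the irradiance arriving at the surface is scaled by the cosine of the zenith angle.

```python
import math


def lambertian_brdf(albedo):
    """Constant BRDF of an ideal diffuse surface, in units of 1/sr."""
    return albedo / math.pi


def reflected_radiance(brdf_value, beam_irradiance, theta_i):
    """Reflected radiance = BRDF * irradiance incident on the surface.

    beam_irradiance: irradiance measured perpendicular to the beam (assumed)
    theta_i:         zenith angle of the incoming direction, in radians
    """
    # Light arriving from below the horizon contributes nothing.
    surface_irradiance = beam_irradiance * max(math.cos(theta_i), 0.0)
    return brdf_value * surface_irradiance


f = lambertian_brdf(0.5)
# Same beam arriving head-on and at 60 degrees from the surface normal.
print(reflected_radiance(f, math.pi, 0.0))
print(reflected_radiance(f, math.pi, math.pi / 3))
```

Because the Lambertian BRDF ignores both directions, the outgoing direction does not appear as a parameter. A measured BRDF, such as one for skin, would vary with all four angles.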

Paul Ernest Debevec is a researcher in computer graphics at the University of Southern California's Institute for Creative Technologies. He is best known for his work in finding, capturing and synthesizing the bidirectional scattering distribution function using the light stages his research team constructed to capture the reflectance field over the human face, and for his work on high-dynamic-range imaging and image-based modeling and rendering.

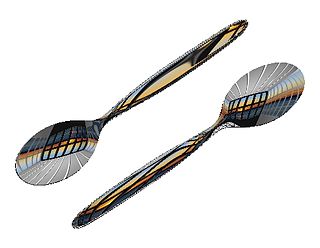

In computer graphics, environment mapping, or reflection mapping, is an efficient image-based lighting technique for approximating the appearance of a reflective surface by means of a precomputed texture. The texture is used to store the image of the distant environment surrounding the rendered object.
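A minimal sketch of the lookup involved follows. The view direction is mirrored about the surface normal, and the resulting direction is converted to coordinates in a latitude-longitude (equirectangular) texture. The axis convention (y up, texture centered on the +z direction), the nearest-pixel lookup, and all function names are assumptions made for illustration; real renderers differ in convention and usually filter the texture.

```python
import math


def reflect(d, n):
    """Mirror the unit incident direction d about the unit surface normal n."""
    dot = sum(a * b for a, b in zip(d, n))
    return tuple(a - 2.0 * dot * b for a, b in zip(d, n))


def latlong_uv(r):
    """Map a unit direction to (u, v) in [0, 1] for a lat-long texture.

    Assumed convention: y is up, and u = 0.5 corresponds to the +z direction.
    """
    x, y, z = r
    u = 0.5 + math.atan2(x, z) / (2.0 * math.pi)
    v = math.acos(max(-1.0, min(1.0, y))) / math.pi
    return u, v


def sample_environment(env, r):
    """Nearest-pixel lookup in env, a list of rows of stored values."""
    height, width = len(env), len(env[0])
    u, v = latlong_uv(r)
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return env[row][col]


# A camera ray travelling along -z hits a surface facing +z.
r = reflect((0.0, 0.0, -1.0), (0.0, 0.0, 1.0))
env = [["sky", "sky"], ["ground", "ground"]]
print(r, latlong_uv(r), sample_environment(env, (0.0, 1.0, 0.0)))
```

Because the texture stores only a distant environment, the lookup depends on the reflected direction alone and not on the position of the surface point, which is what makes the technique cheap.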

High-dynamic-range rendering, also known as high-dynamic-range lighting, is the rendering of computer graphics scenes by using lighting calculations done in high dynamic range (HDR). This allows preservation of details that may be lost due to limiting contrast ratios. Video games and computer-generated movies and special effects benefit from this as it creates more realistic scenes than with more simplistic lighting models.

Virtual cinematography is the set of cinematographic techniques performed in a computer graphics environment. It includes a wide variety of subjects like photographing real objects, often with stereo or multi-camera setup, for the purpose of recreating them as three-dimensional objects and algorithms for the automated creation of real and simulated camera angles. Virtual cinematography can be used to shoot scenes from otherwise impossible camera angles, create the photography of animated films, and manipulate the appearance of computer-generated effects.

Human image synthesis is technology that can be applied to make believable and even photorealistic renditions of human likenesses, moving or still. It has effectively existed since the early 2000s. Many films using computer-generated imagery have featured synthetic images of human-like characters digitally composited onto the real or other simulated film material. Towards the end of the 2010s, deep learning artificial intelligence was applied to synthesize images and video that look like humans, without need for human assistance once the training phase has been completed, whereas the old-school 7D route required massive amounts of human work.

3D rendering is the 3D computer graphics process of converting 3D models into 2D images on a computer. 3D renders may include photorealistic effects or non-photorealistic styles.

The definition of the BSDF is not well standardized. The term was probably introduced in 1980 by Bartell, Dereniak, and Wolfe. Most often it is used to name the general mathematical function which describes the way in which the light is scattered by a surface. However, in practice, this phenomenon is usually split into the reflected and transmitted components, which are then treated separately as BRDF and BTDF.

Structured light is the process of projecting a known pattern onto a scene. The way the pattern deforms when striking surfaces allows vision systems to calculate the depth and surface information of the objects in the scene, as used in structured light 3D scanners.
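One common way to make the projected pattern "known" is to project a sequence of Gray-code stripe images, so that each camera pixel observes a bit sequence identifying the projector column that lit it. Depth then follows from triangulation. The sketch below assumes a rectified camera-projector pair, for which depth equals focal length times baseline divided by disparity. The function names, the sign convention of the disparity, and the numbers in the example are hypothetical.

```python
def gray_to_binary(bits):
    """Decode Gray-code bits (most significant first) to a column index."""
    value = 0
    current = 0
    for bit in bits:
        # Each binary bit is the previous binary bit XOR the Gray-code bit.
        current ^= bit
        value = (value << 1) | current
    return value


def depth_from_disparity(focal_px, baseline_m, cam_col, proj_col):
    """Triangulate depth in meters for a rectified camera-projector pair.

    focal_px:   focal length in pixels (assumed equal for both devices)
    baseline_m: distance between camera and projector centers, in meters
    Returns None when the disparity is not positive (no valid intersection).
    """
    disparity = cam_col - proj_col
    if disparity <= 0:
        return None
    return focal_px * baseline_m / disparity


# A camera pixel in column 520 saw the stripe sequence 1, 1, 1 (MSB first)
# on top of a hypothetical coarse offset of 415 projector columns.
proj_col = 415 + gray_to_binary([1, 1, 1])
print(proj_col, depth_from_disparity(1000.0, 0.2, 520, proj_col))
```

Gray codes are a frequent choice because adjacent columns differ in only one bit, so a misread stripe boundary shifts the decoded column by at most one.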

Computer graphics is a sub-field of computer science which studies methods for digitally synthesizing and manipulating visual content. Although the term often refers to the study of three-dimensional computer graphics, it also encompasses two-dimensional graphics and image processing.

A beauty dish is a photographic lighting device that uses a parabolic reflector to distribute light towards a focal point. The light created is between that of a direct flash and a softbox, giving the image a wrapped, contrasted look, which adds a more dramatic effect.

The history of computer animation began as early as the 1940s and 1950s, when people began to experiment with computer graphics, most notably John Whitney. It was only in the early 1960s, when digital computers had become widely established, that new avenues for innovative computer graphics blossomed. Initially, uses were mainly for scientific, engineering and other research purposes, but artistic experimentation began to make its appearance by the mid-1960s, most notably by Dr Thomas Calvert. By the mid-1970s, many such efforts were beginning to enter into public media. Much computer graphics at this time involved 2-dimensional imagery, though as computer power improved, efforts to achieve 3-dimensional realism increasingly became the emphasis. By the late 1980s, photo-realistic 3D was beginning to appear in films, and by the mid-1990s it had developed to the point where 3D animation could be used for entire feature film production.

Hao Li is a computer scientist, innovator, and entrepreneur from Germany, working in the fields of computer graphics and computer vision. He is co-founder and CEO of Pinscreen, Inc, as well as associate professor of computer vision at the Mohamed Bin Zayed University of Artificial Intelligence (MBZUAI). He was previously a Distinguished Fellow at the University of California, Berkeley, an associate professor of computer science at the University of Southern California, and former director of the Vision and Graphics Lab at the USC Institute for Creative Technologies. He was also a visiting professor at Weta Digital and a research lead at Industrial Light & Magic / Lucasfilm.

A 3D selfie is a 3D-printed scale replica of a person or their face. These three-dimensional selfies are also known as 3D portraits, 3D figurines, 3D-printed figurines, mini-me figurines and miniature statues. In 2014, the first 3D-printed bust of a President, Barack Obama, was made. 3D-digital-imaging specialists used handheld 3D scanners to create an accurate representation of the President.