Computer animation is the process used for digitally generating moving images. The more general term computer-generated imagery (CGI) encompasses both still images and moving images, while computer animation only refers to moving images. Modern computer animation usually uses 3D computer graphics.

Paul Ernest Debevec is a researcher in computer graphics at the University of Southern California's Institute for Creative Technologies. He is best known for his work on high-dynamic-range imaging, on image-based modeling and rendering, and on capturing and synthesizing the bidirectional scattering distribution function (BSDF) using the light stages his research team built to capture the reflectance field over the human face.

High-dynamic-range rendering (HDRR), also known as high-dynamic-range lighting, is the rendering of computer graphics scenes by using lighting calculations done in high dynamic range (HDR). This allows preservation of details that may be lost due to limiting contrast ratios. Video games and computer-generated movies and special effects benefit from this, as it creates more realistic scenes than simpler lighting models do. HDRR originally required the rendered image to be tone mapped onto standard-dynamic-range (SDR) displays, as the first HDR-capable displays did not arrive until the 2010s. However, if a modern HDR display is available, the HDRR output can instead be displayed directly, with even greater contrast and realism.
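The tone-mapping step mentioned above can be illustrated with a minimal sketch. It assumes the simple global Reinhard operator, L / (1 + L), followed by a gamma of 2.2; neither choice comes from the text, and real renderers use a range of operators. The function names and the sample luminance values are hypothetical.

```python
def reinhard_tone_map(luminance):
    """Compress an HDR luminance in [0, inf) into [0, 1) using L / (1 + L)."""
    if luminance < 0:
        raise ValueError("luminance must be non-negative")
    return luminance / (1.0 + luminance)


def to_8bit(value, gamma=2.2):
    """Gamma-encode a value in [0, 1] and quantize it to the 0..255 SDR range."""
    encoded = value ** (1.0 / gamma)
    return min(255, max(0, round(encoded * 255)))


# Hypothetical scene luminances spanning several orders of magnitude.
hdr_pixels = [0.0, 0.05, 1.0, 16.0, 4000.0]
sdr_pixels = [to_8bit(reinhard_tone_map(p)) for p in hdr_pixels]
print(sdr_pixels)
```

Because the operator is monotonic, the brightness ordering of the pixels is preserved while very bright values are compressed toward the top of the displayable range instead of being clipped.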

Texture synthesis is the process of algorithmically constructing a large digital image from a small digital sample image by taking advantage of its structural content. It is an object of research in computer graphics and is used in many fields, amongst others digital image editing, 3D computer graphics and post-production of films.
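As a rough illustration, the simplest patch-based approach fills a larger image with patches copied from random positions in the sample. This is only a naive sketch: practical methods also match patch borders or neighborhoods so that seams are not visible. The function `synthesize_texture`, its parameters, and the tiny grayscale sample are hypothetical.

```python
import random


def synthesize_texture(sample, out_h, out_w, patch, seed=0):
    """Fill an out_h x out_w image with patches copied from random sample positions."""
    h, w = len(sample), len(sample[0])
    if patch < 1 or patch > h or patch > w:
        raise ValueError("patch size must fit inside the sample")
    rng = random.Random(seed)  # seeded so the result is reproducible
    out = [[None] * out_w for _ in range(out_h)]
    for top in range(0, out_h, patch):
        for left in range(0, out_w, patch):
            # Pick the top-left corner of a random source patch.
            sy = rng.randrange(h - patch + 1)
            sx = rng.randrange(w - patch + 1)
            # Copy it, cropping at the output borders.
            for dy in range(min(patch, out_h - top)):
                for dx in range(min(patch, out_w - left)):
                    out[top + dy][left + dx] = sample[sy + dy][sx + dx]
    return out


# Hypothetical 4x4 grayscale sample with a diagonal stripe pattern.
sample = [[(x + y) % 4 for x in range(4)] for y in range(4)]
big = synthesize_texture(sample, 10, 10, patch=2)
```

Every output pixel comes from the sample, so local structure inside each patch is preserved; the visible discontinuities between patches are what more careful algorithms are designed to remove.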

Computer facial animation is primarily an area of computer graphics that encapsulates methods and techniques for generating and animating images or models of a character's face. The character can be a human, a humanoid, an animal, a legendary creature or character, etc. Due to its subject and output type, it is also related to many other scientific and artistic fields, from psychology to traditional animation. The importance of human faces in verbal and non-verbal communication, together with advances in computer graphics hardware and software, has generated considerable scientific, technological, and artistic interest in computer facial animation.

Virtual cinematography is the set of cinematographic techniques performed in a computer graphics environment. It covers a wide variety of subjects, such as photographing real objects, often with a stereo or multi-camera setup, in order to recreate them as three-dimensional objects, and algorithms for the automated creation of real and simulated camera angles. Virtual cinematography can be used to shoot scenes from otherwise impossible camera angles, create the photography of animated films, and manipulate the appearance of computer-generated effects.

Facial motion capture is the process of electronically converting the movements of a person's face into a digital database using cameras or laser scanners. This database may then be used to produce computer graphics (CG) and computer animation for movies, games, or real-time avatars. Because the motion of CG characters is derived from the movements of real people, the resulting character animation is more realistic and nuanced than animation created manually.

Digital puppetry is the manipulation and performance of digitally animated 2D or 3D figures and objects in a virtual environment that are rendered in real-time by computers. It is most commonly used in filmmaking and television production but has also been used in interactive theme park attractions and live theatre.

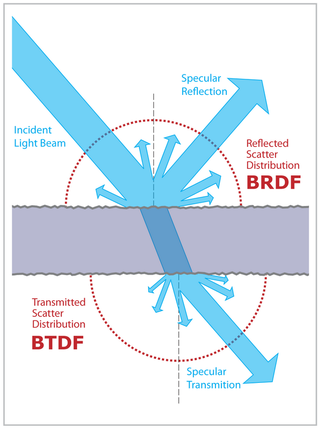

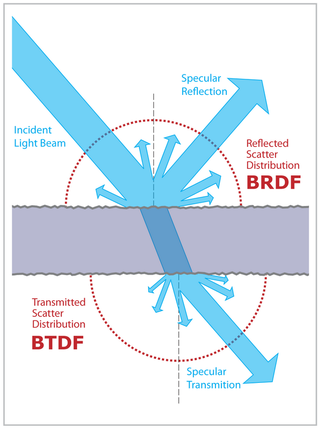

The definition of the bidirectional scattering distribution function (BSDF) is not well standardized. The term was probably introduced in 1980 by Bartell, Dereniak, and Wolfe. Most often it names the general mathematical function that describes how light is scattered by a surface. In practice, however, this phenomenon is usually split into reflected and transmitted components, which are then treated separately as the bidirectional reflectance distribution function (BRDF) and the bidirectional transmittance distribution function (BTDF).
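The split into reflected and transmitted components can be sketched as follows. This is a minimal illustration that assumes an ideally diffuse surface, so both components are constants, and a convention in which both directions point away from the surface and are described by the cosine of their angle to the normal. The function `diffuse_bsdf` and its default parameter values are hypothetical.

```python
import math


def diffuse_bsdf(cos_in, cos_out, reflectance=0.6, transmittance=0.3):
    """Evaluate an ideally diffuse BSDF as a BRDF plus BTDF pair.

    cos_in and cos_out are the cosines between the surface normal and the
    incoming and outgoing directions (assumed convention: both directions
    point away from the surface).
    """
    if reflectance < 0 or transmittance < 0 or reflectance + transmittance > 1.0:
        raise ValueError("reflectance + transmittance must lie in [0, 1]")
    if cos_in == 0.0 or cos_out == 0.0:
        return 0.0  # grazing directions carry no energy in this sketch
    same_side = (cos_in > 0) == (cos_out > 0)
    if same_side:
        return reflectance / math.pi    # reflected component (BRDF)
    return transmittance / math.pi      # transmitted component (BTDF)


print(diffuse_bsdf(0.5, 0.8))    # both directions above the surface: BRDF
print(diffuse_bsdf(0.5, -0.8))   # outgoing direction below the surface: BTDF
```

The check that reflectance and transmittance sum to at most one is a simple way of keeping the combined function from scattering more energy than it receives.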

A virtual actor, also known as a virtual human, virtual persona, or digital clone, is the creation or re-creation of a human being in image and voice using computer-generated imagery and sound, often to the point of being indistinguishable from the real actor.

Computer graphics deals with generating images and art with the aid of computers. Computer graphics is a core technology in digital photography, film, video games, digital art, cell phone and computer displays, and many specialized applications. A great deal of specialized hardware and software has been developed, with the displays of most devices being driven by computer graphics hardware. It is a vast and recently developed area of computer science. The phrase was coined in 1960 by computer graphics researchers Verne Hudson and William Fetter of Boeing. It is often abbreviated as CG or, typically in the context of film, referred to as computer-generated imagery (CGI). The non-artistic aspects of computer graphics are the subject of computer science research.

The history of computer animation began as early as the 1940s and 1950s, when people began to experiment with computer graphics, most notably John Whitney. Only in the early 1960s, once digital computers had become widely established, did new avenues for innovative computer graphics open up. Initially, uses were mainly for scientific, engineering and other research purposes, but artistic experimentation began to appear by the mid-1960s, most notably in the work of Dr. Thomas Calvert. By the mid-1970s, many such efforts were beginning to enter public media. Much computer graphics at this time involved 2-D imagery, though as computer power improved, efforts increasingly focused on achieving 3-D realism. By the late 1980s, photo-realistic 3-D was beginning to appear in films, and by the mid-1990s it had developed to the point where 3-D animation could be used for entire feature film production.

A light stage is an active illumination system used for shape, texture, reflectance and motion capture, often with structured light and a multi-camera setup.

Hao Li is a computer scientist, innovator, and entrepreneur from Germany, working in the fields of computer graphics and computer vision. He is co-founder and CEO of Pinscreen, Inc, as well as associate professor of computer vision at the Mohamed Bin Zayed University of Artificial Intelligence (MBZUAI). He was previously a Distinguished Fellow at the University of California, Berkeley, an associate professor of computer science at the University of Southern California, and former director of the Vision and Graphics Lab at the USC Institute for Creative Technologies. He was also a visiting professor at Weta Digital and a research lead at Industrial Light & Magic / Lucasfilm.

Michael F. Cohen is an American computer scientist and researcher in computer graphics. He is currently a Senior Fellow at Meta in their Generative AI Group. He was a senior research scientist at Microsoft Research for 21 years until he joined Facebook in 2015. In 1998, he received the ACM SIGGRAPH CG Achievement Award for his work in developing radiosity methods for realistic image synthesis. He was elected a Fellow of the Association for Computing Machinery in 2007 for his "contributions to computer graphics and computer vision." In 2019, he received the ACM SIGGRAPH Steven A. Coons Award for Outstanding Creative Contributions to Computer Graphics for “his groundbreaking work in numerous areas of research—radiosity, motion simulation & editing, light field rendering, matting & compositing, and computational photography”.

Deepfakes are images, videos, or audio that are edited or generated using artificial intelligence tools and that may depict real or non-existent people. They are a type of synthetic media and a modern form of media prank.

Video manipulation is a type of media manipulation that targets digital video using video processing and video editing techniques. The applications of these methods range from educational videos to videos aimed at (mass) manipulation and propaganda, a straightforward extension of the long-standing possibilities of photo manipulation. This form of computer-generated misinformation has contributed to fake news, and there have been instances when this technology was used during political campaigns. Other uses are less sinister; entertainment purposes and harmless pranks provide users with movie-quality artistic possibilities.

Synthetic media is a catch-all term for the artificial production, manipulation, and modification of data and media by automated means, especially through the use of artificial intelligence algorithms, for example to mislead people or change an original meaning. Synthetic media as a field has grown rapidly since the creation of generative adversarial networks, primarily through the rise of deepfakes as well as music synthesis, text generation, human image synthesis, speech synthesis, and more. Though experts use the term "synthetic media," individual methods such as deepfakes and text synthesis are sometimes not referred to as such by the media but instead by their respective terminology. The field drew significant attention starting in 2017, when Motherboard reported on the emergence of AI-altered pornographic videos into which the faces of famous actresses had been inserted. Potential hazards of synthetic media include the spread of misinformation, further loss of trust in institutions such as media and government, the mass automation of creative and journalistic jobs, and a retreat into AI-generated fantasy worlds. Synthetic media is an applied form of artificial imagination.

Identity replacement technology is any technology that is used to cover up all or part of a person's identity, either in real life or virtually. This can include face masks, face authentication technology, and deepfakes on the Internet that spread falsified videos and images. Face replacement and identity masking are used by both criminals and law-abiding citizens. Criminals use identity replacement technology to carry out heists and robberies. Law-abiding citizens use it to prevent governments or other entities from tracking private information such as locations, social connections, and daily behaviors.

A virtual human is a fictional character or human being realized in software. Virtual humans have been created as tools and artificial companions in simulation, video games, film production, human factors, ergonomic and usability studies in various industries, the clothing industry, telecommunications (avatars), medicine, etc. These applications require domain-dependent simulation fidelity. A medical application might require an exact simulation of specific internal organs; the film industry requires the highest aesthetic standards, natural movements, and facial expressions; ergonomic studies require faithful body proportions for a particular population segment and realistic locomotion with constraints, etc.