Academic interests

Perceptron

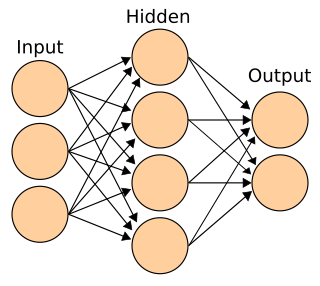

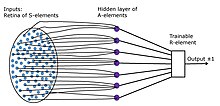

Rosenblatt is best known for the Perceptron, an electronic device which was constructed in accordance with biological principles and showed an ability to learn. Rosenblatt's perceptrons were initially simulated on an IBM 704 computer at Cornell Aeronautical Laboratory in 1957. [6] When a triangle was held before the perceptron's eye, it would pick up the image and convey it along a random succession of lines to the response units, where the image was registered. [7]

He developed and extended this approach in numerous papers and a book called Principles of Neurodynamics: Perceptrons and the Theory of Brain Mechanisms, published by Spartan Books in 1962. [8] He received international recognition for the Perceptron. The New York Times billed it as a revolution, with the headline "New Navy Device Learns By Doing", [9] and The New Yorker similarly admired the technological advancement. [7]

Rosenblatt proved four main theorems. The first states that an elementary perceptron can solve any classification problem, provided that the training set contains no discrepancies and that there are sufficiently many independent A-elements. The fourth states that the learning algorithm converges whenever the given realisation of the elementary perceptron is able to solve the problem.
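The convergence statement can be illustrated with a minimal sketch of error-correction learning on a single threshold unit. This is the simplified rule found in modern textbooks, not Rosenblatt's original formulation with A-elements; the function names, the ±1 label encoding, and the epoch limit are assumptions made for illustration.

```python
def train_perceptron(samples, epochs=100):
    """Error-correction training of one threshold unit (illustrative sketch).

    samples: list of (inputs, label) pairs, with label in {-1, +1}.
    Returns (weights, bias, converged).
    """
    n = len(samples[0][0])
    w = [0.0] * n
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in samples:
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * activation <= 0:  # misclassified, or exactly on the boundary
                # Move the weights toward the correct side for this sample.
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
                mistakes += 1
        if mistakes == 0:  # a full pass with no corrections: training has converged
            return w, b, True
    return w, b, False


def predict(w, b, x):
    """Response of the trained unit: +1 or -1."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


# Logical AND is solvable by a single threshold unit, so training converges.
and_samples = [((0, 0), -1), ((0, 1), -1), ((1, 0), -1), ((1, 1), 1)]
w, b, converged = train_perceptron(and_samples)

# XOR is not solvable by a single threshold unit, so no pass is ever error-free.
xor_samples = [((0, 0), -1), ((0, 1), 1), ((1, 0), 1), ((1, 1), -1)]
_, _, xor_converged = train_perceptron(xor_samples)
```

In this sketch convergence is only reported when the unit can actually represent the target, which mirrors the condition in the theorem; the XOR case simply exhausts the epoch limit.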

Research on comparable devices was also being conducted elsewhere, for example at SRI, and many researchers had high expectations of what such devices could do. The initial excitement was dampened, however, when Marvin Minsky and Seymour Papert published the book "Perceptrons" in 1969. Minsky and Papert considered elementary perceptrons with restrictions on the neural inputs: a bounded number of connections, or a relatively small diameter of the A-units' receptive fields. They proved that under these constraints an elementary perceptron cannot solve some problems, such as determining the connectivity of input images or the parity of their pixels. Thus, Rosenblatt proved the omnipotence of unrestricted elementary perceptrons, whereas Minsky and Papert demonstrated that the abilities of restricted perceptrons are limited. These results are not contradictory, but the Minsky and Papert book was widely (and wrongly) cited as proof of strong limitations of perceptrons in general. (For a detailed elementary discussion of Rosenblatt's first theorem and its relation to the work of Minsky and Papert, see a recent note. [10])
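The contrast between the two results can be sketched on a toy two-pixel parity problem. This is an illustration only, not either proof: giving each A-unit a single pixel stands in for the bounded-connection restriction, and using one A-unit per possible input pattern is just one simple way of having "sufficiently many" A-elements. All function names and the weight grid are assumptions.

```python
from itertools import product


def parity(bits):
    """Target label: +1 if an odd number of pixels are on, else -1."""
    return 1 if sum(bits) % 2 == 1 else -1


def local_a_units(bits):
    """Restricted case: each A-unit is connected to exactly one pixel."""
    return list(bits)


def pattern_a_units(bits, n):
    """Unrestricted case: one A-unit per input pattern; exactly one unit fires."""
    return [1 if tuple(bits) == p else 0 for p in product((0, 1), repeat=n)]


def response(weights, bias, a):
    """Threshold response of the R-unit to the A-unit activations."""
    return 1 if sum(w * ai for w, ai in zip(weights, a)) + bias > 0 else -1


n = 2
inputs = list(product((0, 1), repeat=n))

# Unrestricted: weighting each pattern unit by its target label reproduces parity.
weights_full = [parity(p) for p in inputs]
full_ok = all(
    response(weights_full, 0, pattern_a_units(x, n)) == parity(x) for x in inputs
)

# Restricted: no weights on a small integer grid reproduce parity
# (the grid search illustrates the limitation; it does not prove it).
grid = range(-3, 4)
restricted_ok = any(
    all(response((w1, w2), b, local_a_units(x)) == parity(x) for x in inputs)
    for w1, w2, b in product(grid, repeat=3)
)
```

Here `full_ok` is true and `restricted_ok` is false, so the same task is solvable or unsolvable depending only on what the A-units are allowed to see.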

After research on neural networks returned to the mainstream in the 1980s, a new generation of researchers began to study Rosenblatt's work again. Some researchers interpret this new wave of work on neural networks as contradicting the hypotheses presented in the book Perceptrons and as confirming Rosenblatt's expectations.

The Mark I Perceptron, which is generally recognized as a forerunner to artificial intelligence, currently resides in the Smithsonian Institution in Washington, D.C. [3] The Mark I was able to learn, recognize letters, and solve quite complex problems.

Principles of Neurodynamics (1962)

The neuron model employed is a direct descendant of that originally proposed by McCulloch and Pitts. The basic philosophical approach has been heavily influenced by the theories of Hebb and Hayek and the experimental findings of Lashley. The probabilistic approach is shared with theorists such as Ashby, Uttley, Minsky, MacKay, and von Neumann.

— Frank Rosenblatt, Principles Of Neurodynamics, page 5

Rosenblatt's book Principles of Neurodynamics: Perceptrons and the Theory of Brain Mechanisms, published by Spartan Books in 1962, summarized his work on perceptrons up to that time. [11] The book had previously been issued as unclassified report No. 1196-G-8 on 15 March 1961, through the Defense Technical Information Center. [12]

The book is divided into four parts. The first gives an historical review of alternative approaches to brain modeling, the physiological and psychological considerations, and the basic definitions and concepts of the perceptron approach. The second covers three-layer series-coupled perceptrons: the mathematical underpinnings, performance results in psychological experiments, and a variety of perceptron variations. The third covers multi-layer and cross-coupled perceptrons, and the fourth back-coupled perceptrons and problems for future study.

Rosenblatt used the book to teach an interdisciplinary course entitled "Theory of Brain Mechanisms" that drew students from Cornell's Engineering and Liberal Arts colleges.

Rat brain experiments

In the late 1960s, inspired by James V. McConnell's experiments with memory transfer in planarians, Rosenblatt began experiments within the Cornell Department of Entomology on the transfer of learned behavior via rat brain extracts. Rats were taught discrimination tasks, such as a Y-maze and a two-lever Skinner box. Their brains were then extracted, and the extracts and their antibodies were injected into untrained rats, which were subsequently tested on the same discrimination tasks to determine whether behavior had transferred from the trained to the untrained rats. [13] Rosenblatt spent his last several years on this problem and showed convincingly that the initial reports of larger effects were wrong and that any memory transfer was at most very small. [3]