An autoencoder is a type of artificial neural network used to learn efficient codings of unlabeled data. An autoencoder learns two functions: an encoding function that transforms the input data, and a decoding function that recreates the input data from the encoded representation. The autoencoder learns an efficient representation (encoding) for a set of data, typically for dimensionality reduction.

Music and artificial intelligence (AI) is the development of music software programs which use AI to generate music. As with applications in other fields, AI in music also simulates mental tasks. A prominent feature is the capability of an AI algorithm to learn based on past data, such as in computer accompaniment technology, wherein the AI is capable of listening to a human performer and performing accompaniment. Artificial intelligence also drives interactive composition technology, wherein a computer composes music in response to a live performance. There are other AI applications in music that cover not only music composition, production, and performance but also how music is marketed and consumed. Several music player programs have also been developed to use voice recognition and natural language processing technology for music voice control. Current research includes the application of AI in music composition, performance, theory and digital sound processing.

Deep learning is the subset of machine learning methods based on neural networks with representation learning. The adjective "deep" refers to the use of multiple layers in the network. Methods used can be either supervised, semi-supervised or unsupervised.

In machine learning, feature learning or representation learning is a set of techniques that allows a system to automatically discover the representations needed for feature detection or classification from raw data. This replaces manual feature engineering and allows a machine to both learn the features and use them to perform a specific task.

Feature engineering is a preprocessing step in supervised machine learning and statistical modeling which transforms raw data into a more effective set of inputs. Each input comprises several attributes, known as features. By providing models with relevant information, feature engineering significantly enhances their predictive accuracy and decision-making capability.

WaveNet is a deep neural network for generating raw audio. It was created by researchers at London-based AI firm DeepMind. The technique, outlined in a paper in September 2016, is able to generate relatively realistic-sounding human-like voices by directly modelling waveforms using a neural network method trained with recordings of real speech. Tests with US English and Mandarin reportedly showed that the system outperforms Google's best existing text-to-speech (TTS) systems, although as of 2016 its text-to-speech synthesis still was less convincing than actual human speech. WaveNet's ability to generate raw waveforms means that it can model any kind of audio, including music.

Neural architecture search (NAS) is a technique for automating the design of artificial neural networks (ANN), a widely used model in the field of machine learning. NAS has been used to design networks that are on par or outperform hand-designed architectures. Methods for NAS can be categorized according to the search space, search strategy and performance estimation strategy used:

Neural style transfer (NST) refers to a class of software algorithms that manipulate digital images, or videos, in order to adopt the appearance or visual style of another image. NST algorithms are characterized by their use of deep neural networks for the sake of image transformation. Common uses for NST are the creation of artificial artwork from photographs, for example by transferring the appearance of famous paintings to user-supplied photographs. Several notable mobile apps use NST techniques for this purpose, including DeepArt and Prisma. This method has been used by artists and designers around the globe to develop new artwork based on existent style(s).

Deep reinforcement learning is a subfield of machine learning that combines reinforcement learning (RL) and deep learning. RL considers the problem of a computational agent learning to make decisions by trial and error. Deep RL incorporates deep learning into the solution, allowing agents to make decisions from unstructured input data without manual engineering of the state space. Deep RL algorithms are able to take in very large inputs and decide what actions to perform to optimize an objective. Deep reinforcement learning has been used for a diverse set of applications including but not limited to robotics, video games, natural language processing, computer vision, education, transportation, finance and healthcare.

Timothy P. Lillicrap is a Canadian neuroscientist and AI researcher, adjunct professor at University College London, and staff research scientist at Google DeepMind, where he has been involved in the AlphaGo and AlphaZero projects mastering the games of Go, Chess and Shogi. His research focuses on machine learning and statistics for optimal control and decision making, as well as using these mathematical frameworks to understand how the brain learns. He has developed algorithms and approaches for exploiting deep neural networks in the context of reinforcement learning, and new recurrent memory architectures for one-shot learning.

An energy-based model (EBM) (also called a Canonical Ensemble Learning(CEL) or Learning via Canonical Ensemble (LCE)) is an application of canonical ensemble formulation of statistical physics for learning from data problems. The approach prominently appears in generative models (GMs).

An audio deepfake is a product of artificial intelligence used to create convincing speech sentences that sound like specific people saying things they did not say. This technology was initially developed for various applications to improve human life. For example, it can be used to produce audiobooks, and also to help people who have lost their voices to get them back. Commercially, it has opened the door to several opportunities. This technology can also create more personalized digital assistants and natural-sounding text-to-speech as well as speech translation services.

Self-supervised learning (SSL) is a paradigm in machine learning where a model is trained on a task using the data itself to generate supervisory signals, rather than relying on external labels provided by humans. In the context of neural networks, self-supervised learning aims to leverage inherent structures or relationships within the input data to create meaningful training signals. SSL tasks are designed so that solving it requires capturing essential features or relationships in the data. The input data is typically augmented or transformed in a way that creates pairs of related samples. One sample serves as the input, and the other is used to formulate the supervisory signal. This augmentation can involve introducing noise, cropping, rotation, or other transformations. Self-supervised learning more closely imitates the way humans learn to classify objects.

A vision transformer (ViT) is a transformer designed for computer vision. A ViT breaks down an input image into a series of patches, serialises each patch into a vector, and maps it to a smaller dimension with a single matrix multiplication. These vector embeddings are then processed by a transformer encoder as if they were token embeddings.

The Fashion MNIST dataset is a large freely available database of fashion images that is commonly used for training and testing various machine learning systems. Fashion-MNIST was intended to serve as a replacement for the original MNIST database for benchmarking machine learning algorithms, as it shares the same image size, data format and the structure of training and testing splits.

Deep learning speech synthesis refers to the application of deep learning models to generate natural-sounding human speech from written text (text-to-speech) or spectrum (vocoder). Deep neural networks (DNN) are trained using a large amount of recorded speech and, in the case of a text-to-speech system, the associated labels and/or input text.

Lyra is a lossy audio codec developed by Google that is designed for compressing speech at very low bitrates. Unlike most other audio formats, it compresses data using a machine learning-based algorithm.

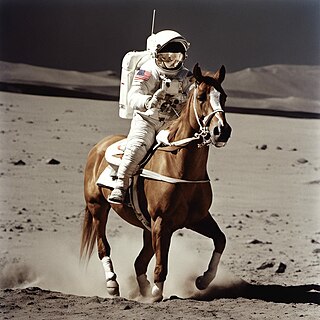

Stable Diffusion is a deep learning, text-to-image model released in 2022 based on diffusion techniques. The generative artificial intelligence technology is the premier product of Stability AI and is considered to be a part of the ongoing artificial intelligence boom.

A text-to-image model is a machine learning model which takes an input natural language description and produces an image matching that description.