Tru64 UNIX is a discontinued 64-bit UNIX operating system for the Alpha instruction set architecture (ISA), currently owned by Hewlett-Packard (HP). Previously, Tru64 UNIX was a product of Compaq, and before that, Digital Equipment Corporation (DEC), where it was known as Digital UNIX.

A Beowulf cluster is a computer cluster of what are normally identical, commodity-grade computers networked into a small local area network with libraries and programs installed which allow processing to be shared among them. The result is a high-performance parallel computing cluster from inexpensive personal computer hardware.

MOSIX is a proprietary distributed operating system. Although early versions were based on older UNIX systems, since 1999 it has focused on Linux clusters and grids. In a MOSIX cluster or grid there is no need to modify applications or link them with any library, to copy files or log in to remote nodes, or even to assign processes to different nodes. All of this is done automatically, as in an SMP.

openMosix was a free cluster management system that provided single-system image (SSI) capabilities, e.g. automatic work distribution among nodes. It allowed program processes to migrate to machines in the node's network that would be able to run that process faster. It was particularly useful for running parallel applications having low to moderate input/output (I/O). It was released as a Linux kernel patch, but was also available on specialized Live CDs. openMosix development has been halted by its developers, but the LinuxPMI project is continuing development of the former openMosix code.
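The idea of migrating a process to a node that would run it faster can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not openMosix's actual load-balancing algorithm: the `Node` class, the `speed` and `load` fields, and the runtime estimate are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    speed: float  # relative CPU speed (hypothetical units)
    load: int     # number of runnable processes currently on the node


def runtime_if_staying(node: Node, work: float) -> float:
    # The process is already counted in the current node's load.
    return work * max(node.load, 1) / node.speed


def runtime_if_migrated(node: Node, work: float) -> float:
    # On a remote node the process would be one additional runnable process.
    return work * (node.load + 1) / node.speed


def pick_migration_target(current: Node, others: List[Node], work: float) -> Optional[Node]:
    """Return the node that would finish the work soonest, or None to stay put."""
    if not others:
        return None
    best = min(others, key=lambda n: runtime_if_migrated(n, work))
    if runtime_if_migrated(best, work) < runtime_if_staying(current, work):
        return best
    return None
```

In this model a process moves only when some other node offers a strictly shorter estimated runtime. A real system would also have to account for the cost of the migration itself and for I/O that remains tied to the home node.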

In distributed computing, a single system image (SSI) cluster is a cluster of machines that appears to be one single system. The concept is often considered synonymous with that of a distributed operating system, but a single image may be presented for more limited purposes, just job scheduling for instance, which may be achieved by means of an additional layer of software over conventional operating system images running on each node. The interest in SSI clusters is based on the perception that they may be simpler to use and administer than more specialized clusters.

Kerrighed is an open source single-system image (SSI) cluster software project. The project started in October 1998 at the Paris research group of the French National Institute for Research in Computer Science and Control. From 2006 to 2011, the project was mainly developed by Kerlabs. In January 2012, the Linux clustering mission of Kerlabs was adopted by a new company, We Cluster, Inc., headquartered in Pacific Grove, California. On January 18, 2012, Kerrighed 3.0 was ported to Ubuntu 12.04 with Linux kernel v3.2.

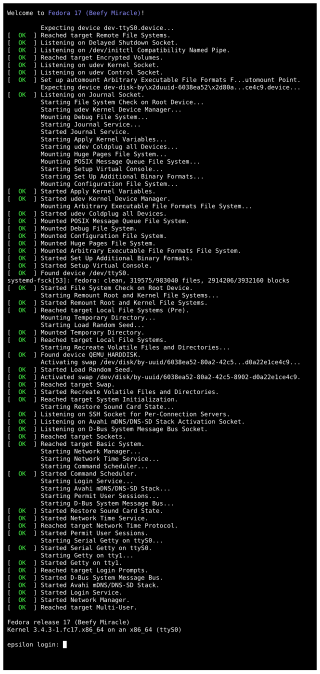

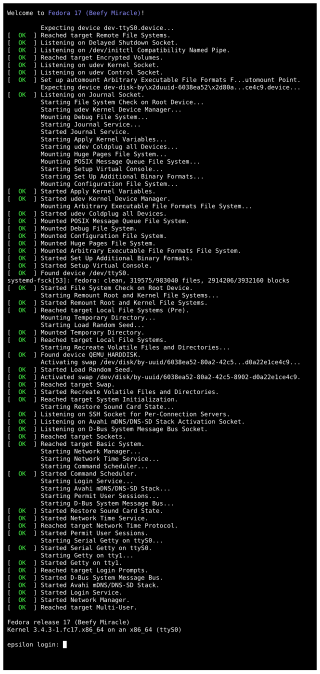

udev is a device manager for the Linux kernel. As the successor of devfsd and hotplug, udev primarily manages device nodes in the /dev directory. It also handles all user space events raised when hardware devices are added to or removed from the system, including firmware loading as required by certain devices.

Lustre is a type of parallel distributed file system, generally used for large-scale cluster computing. The name Lustre is a portmanteau word derived from Linux and cluster. Lustre file system software is available under the GNU General Public License and provides high performance file systems for computer clusters ranging in size from small workgroup clusters to large-scale, multi-site systems. Since June 2005, Lustre has consistently been used by at least half of the top ten, and more than 60 of the top 100 fastest supercomputers in the world, including the world's No. 1 ranked TOP500 supercomputer in November 2022, Frontier, as well as previous top supercomputers such as Fugaku, Titan and Sequoia.

In database computing, Oracle Real Application Clusters (RAC) is an option for the Oracle Database software produced by Oracle Corporation. Introduced in 2001 with Oracle9i, it provides software for clustering and high availability in Oracle database environments. Oracle Corporation includes RAC with the Enterprise Edition, provided the nodes are clustered using Oracle Clusterware.

HAL is a software subsystem for UNIX-like operating systems providing hardware abstraction.

A diskless shared-root cluster is a way to manage several machines at the same time. Instead of each having its own operating system (OS) on its local disk, there is only one image of the OS available on a server, and all the nodes use the same image.

A clustered file system is a file system which is shared by being simultaneously mounted on multiple servers. There are several approaches to clustering, most of which do not employ a clustered file system. Clustered file systems can provide features like location-independent addressing and redundancy which improve reliability or reduce the complexity of the other parts of the cluster. Parallel file systems are a type of clustered file system that spread data across multiple storage nodes, usually for redundancy or performance.
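How a parallel file system spreads data across storage nodes can be sketched with simple round-robin striping. This is an illustrative model only: the storage nodes are plain in-memory lists, and the function names and the `stripe_size` parameter are assumptions, not the interface of any real file system.

```python
from typing import List


def stripe(data: bytes, num_nodes: int, stripe_size: int) -> List[List[bytes]]:
    """Split data into fixed-size stripes and place them on nodes round-robin."""
    nodes: List[List[bytes]] = [[] for _ in range(num_nodes)]
    for offset in range(0, len(data), stripe_size):
        stripe_index = offset // stripe_size
        nodes[stripe_index % num_nodes].append(data[offset:offset + stripe_size])
    return nodes


def reassemble(nodes: List[List[bytes]]) -> bytes:
    """Read the stripes back in their original order (row by row across nodes)."""
    out = []
    depth = max((len(node) for node in nodes), default=0)
    for row in range(depth):
        for node in nodes:
            if row < len(node):
                out.append(node[row])
    return b"".join(out)
```

Because consecutive stripes land on different nodes, a large sequential read can in principle be served by several nodes at once, which is where the performance benefit comes from. Redundancy would require extra copies or parity, which this sketch omits.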

In Unix-like operating systems, a device file or special file is an interface to a device driver that appears in a file system as if it were an ordinary file. There are also special files in DOS, OS/2, and Windows. These special files allow an application program to interact with a device by using its device driver via standard input/output system calls. Using standard system calls simplifies many programming tasks, and leads to consistent user-space I/O mechanisms regardless of device features and functions.
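The point about standard system calls can be seen in a short sketch, assuming a Unix-like system where `/dev/zero` and `/dev/null` exist. The helper names are hypothetical. The calls used are the ordinary `open`, `read`, `write`, and `close` wrappers from Python's `os` module, the same ones that would be used for a regular file.

```python
import os
import stat


def read_zeros(n: int, path: str = "/dev/zero") -> bytes:
    """Read n bytes from a character device using plain file I/O calls."""
    fd = os.open(path, os.O_RDONLY)
    try:
        return os.read(fd, n)
    finally:
        os.close(fd)


def discard(data: bytes, path: str = "/dev/null") -> int:
    """Write data to a device that throws it away; returns bytes accepted."""
    fd = os.open(path, os.O_WRONLY)
    try:
        return os.write(fd, data)
    finally:
        os.close(fd)


def is_char_device(path: str) -> bool:
    """Report whether the path is a character special file."""
    return stat.S_ISCHR(os.stat(path).st_mode)
```

Nothing in the calling code is device-specific. The kernel routes each call to the driver behind the device file.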

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software.
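The pattern of every node performing the same task under software control can be mimicked on one machine. In this minimal sketch, worker threads stand in for cluster nodes. The function names are hypothetical, and a real cluster would use a job scheduler or a message-passing library rather than a thread pool.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def node_task(chunk: List[int]) -> int:
    """The same task runs on every node, each with its own share of the data."""
    return sum(x * x for x in chunk)


def run_on_cluster(data: List[int], num_nodes: int) -> int:
    """Split the data, run node_task on each simulated node, combine results."""
    chunks = [data[i::num_nodes] for i in range(num_nodes)]
    with ThreadPoolExecutor(max_workers=num_nodes) as pool:
        partials = list(pool.map(node_task, chunks))
    return sum(partials)
```

The caller sees a single function call and a single result, which is the sense in which the set of workers can be viewed as one system.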

LOCUS is a discontinued distributed operating system developed at UCLA during the 1980s. It was notable for providing an early implementation of the single-system image idea, where a cluster of machines appeared to be one larger machine.

Locus Computing Corporation was formed in 1982 by Gerald J. Popek, Charles S. Kline and Gregory I. Thiel to commercialize the technologies developed for the LOCUS distributed operating system at UCLA. Locus was notable for commercializing single-system image software and producing the Merge package which allowed the use of DOS and Windows 3.1 software on Unix systems.

NonStop Clusters (NSC) was an add-on package for SCO UnixWare that allowed creation of fault-tolerant single-system image clusters of machines running UnixWare. NSC was one of the first commercially available highly available clustering solutions for commodity hardware.

Linoma Software was a developer of secure managed file transfer and IBM i software solutions. The company was acquired by HelpSystems in June 2016; HelpSystems changed its name to Fortra in November 2022. Mid-sized companies, large enterprises and government entities use Linoma's software products to protect sensitive data and comply with data security regulations such as PCI DSS, HIPAA/HITECH, SOX, GLBA and state privacy laws. Linoma's software runs on a variety of platforms including Windows, Linux, UNIX, IBM i, AIX, Solaris, HP-UX and Mac OS X.

Systemd is a software suite that provides an array of system components for Linux operating systems. The main aim is to unify service configuration and behavior across Linux distributions. Its primary component is a "system and service manager" – an init system used to bootstrap user space and manage user processes. It also provides replacements for various daemons and utilities, including device management, login management, network connection management, and event logging. The name systemd adheres to the Unix convention of naming daemons by appending the letter d. It also plays on the term "System D", which refers to a person's ability to adapt quickly and improvise to solve problems.

Proxmox Virtual Environment (Proxmox VE) is open-source software for hyper-converged infrastructure. It is a hosted hypervisor that can run operating systems including Linux and Windows on x64 hardware. It is a Debian-based Linux distribution with a modified Ubuntu LTS kernel and allows deployment and management of virtual machines and containers. Proxmox VE includes a web-based management console and command-line tools, and provides a REST API for third-party tools. Two types of virtualization are supported: container-based with LXC, and full virtualization with KVM.
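A third-party tool might prepare a REST API request along the following lines. This is a sketch under assumptions that do not come from the text above: the host name and token values are placeholders, and the port (8006), the `/api2/json` base path, the `version` endpoint, and the `PVEAPIToken` authorization scheme should be checked against the Proxmox VE API documentation for the installed release. The request is only constructed here, not sent.

```python
import urllib.request


def build_version_request(host: str, token_id: str, token_secret: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for the API version endpoint."""
    # Assumed URL layout: https://<host>:8006/api2/json/<path>
    url = f"https://{host}:8006/api2/json/version"
    # Assumed API-token header format: PVEAPIToken=<user>@<realm>!<name>=<secret>
    headers = {"Authorization": f"PVEAPIToken={token_id}={token_secret}"}
    return urllib.request.Request(url, headers=headers)


# Placeholder values for illustration only.
request = build_version_request(
    "pve.example.com",
    "monitor@pve!readonly",
    "00000000-0000-0000-0000-000000000000",
)
```

Sending the request with `urllib.request.urlopen(request)` would also require the server's TLS certificate to be trusted by the client.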