A spatial network is a graph in which the vertices or edges are spatial elements associated with geometric objects, i.e. the nodes are located in a space equipped with a certain metric. The simplest mathematical realization is a lattice or a random geometric graph, where nodes are distributed uniformly at random over a two-dimensional plane and a pair of nodes is connected if the Euclidean distance between them is smaller than a given neighborhood radius. Transportation and mobility networks, the Internet, mobile phone networks, power grids, social and contact networks, and neural networks are all examples where the underlying space is relevant and where the graph's topology alone does not contain all the information. Characterizing and understanding the structure, resilience and evolution of spatial networks is crucial for many different fields, ranging from urbanism to epidemiology.
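
The random geometric graph described above can be sketched in a few lines of Python. This is a minimal illustration, assuming nodes placed in the unit square; the function name `random_geometric_graph` and the parameters `n`, `radius` and `seed` are chosen here for illustration and do not come from any particular library.

```python
import math
import random


def random_geometric_graph(n, radius, seed=None):
    """Place n nodes uniformly at random in the unit square and connect
    every pair whose Euclidean distance is smaller than `radius`."""
    rng = random.Random(seed)
    positions = [(rng.random(), rng.random()) for _ in range(n)]
    edges = []
    for i in range(n):
        for j in range(i + 1, n):
            # Connect the pair only if the nodes are closer than the radius.
            if math.dist(positions[i], positions[j]) < radius:
                edges.append((i, j))
    return positions, edges


# Illustrative parameters (assumed values, not taken from the text).
positions, edges = random_geometric_graph(n=50, radius=0.2, seed=1)
```

The pairwise loop costs O(n²) distance checks, which is fine for a sketch; larger graphs would normally use a spatial index or grid to find neighbors.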

A qutrit is a unit of quantum information that is realized by a quantum system described by a superposition of three mutually orthogonal quantum states.
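
As a small illustration, a qutrit state can be represented by three complex amplitudes, one per orthogonal basis state, normalized so that the measurement probabilities sum to one. The sketch below uses plain Python lists; the helper name `normalize` and the example amplitudes are assumptions made for illustration.

```python
import math


def normalize(amplitudes):
    """Rescale a list of complex amplitudes to unit norm."""
    norm = math.sqrt(sum(abs(a) ** 2 for a in amplitudes))
    if norm == 0:
        raise ValueError("the zero vector is not a valid quantum state")
    return [a / norm for a in amplitudes]


# Equal-weight superposition of the three basis states, with
# arbitrary relative phases chosen for this example.
state = normalize([1, 1j, -1])

# Probability of finding the qutrit in each basis state on measurement.
probabilities = [abs(a) ** 2 for a in state]
```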

A dusty plasma is a plasma containing millimeter-sized (10⁻³ m) to nanometer-sized (10⁻⁹ m) particles suspended in it. Dust particles are charged and the plasma and particles behave as a plasma. Dust particles may form larger particles resulting in "grain plasmas". Due to the additional complexity of studying plasmas with charged dust particles, dusty plasmas are also known as complex plasmas.

Atom optics is the area of physics which deals with beams of cold, slowly moving neutral atoms, as a special case of a particle beam. Like an optical beam, the atomic beam may exhibit diffraction and interference, and can be focused with a Fresnel zone plate or a concave atomic mirror. Several scientific groups work in this field.

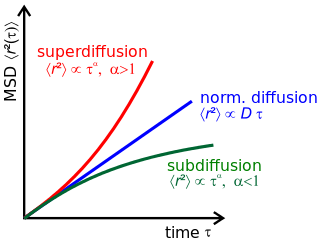

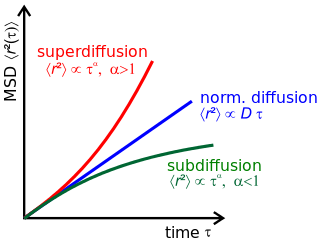

Anomalous diffusion is a diffusion process with a non-linear relationship between the mean squared displacement (MSD), $\sigma_r^2$, and time, in contrast to a typical diffusion process, in which the MSD is a linear function of time. Physically, the MSD can be considered the amount of space the particle has "explored" in the system. An example of anomalous diffusion in nature is the subdiffusion that has been observed in the cell nucleus, plasma membrane and cytoplasm.
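
A common way to quantify the non-linearity is to assume a power law, MSD = K·t^α, where α = 1 corresponds to normal diffusion and α < 1 to subdiffusion. This parametrization is an assumption added here, not stated in the text above. Under it, α can be estimated from a straight-line fit in log-log coordinates, as in the sketch below; the function name and the synthetic data are illustrative only.

```python
import math


def fit_anomalous_exponent(times, msd):
    """Least-squares fit of log(MSD) = log(K) + alpha * log(t).

    Returns (alpha, K) under the assumed power law MSD = K * t**alpha.
    """
    xs = [math.log(t) for t in times]
    ys = [math.log(m) for m in msd]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    alpha = sxy / sxx
    k = math.exp(mean_y - alpha * mean_x)
    return alpha, k


# Synthetic, noise-free subdiffusive data (made up for this example).
times = [1, 2, 4, 8, 16]
msd = [2.0 * t ** 0.5 for t in times]
alpha, k = fit_anomalous_exponent(times, msd)
```

With real trajectories the MSD is noisy and the power law may hold only over a limited time window, so the fitted exponent should be read with that range in mind.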

A sonic black hole, sometimes called a dumb hole, is a phenomenon in which phonons are unable to escape from a fluid that is flowing more quickly than the local speed of sound. They are called sonic, or acoustic, black holes because these trapped phonons are analogous to light in astrophysical (gravitational) black holes. Physicists are interested in them because they have many properties similar to astrophysical black holes and, in particular, emit a phononic version of Hawking radiation. The border of a sonic black hole, at which the flow speed changes from being greater than the speed of sound to less than the speed of sound, is called the event horizon. At this point the frequency of phonons approaches zero.

José W. F. Valle is a Brazilian-Spanish physicist and a Full Professor at the Spanish Council for Scientific Research (CSIC). He is known for his numerous contributions to theoretical astroparticle and high energy physics, especially neutrino physics.

Human dynamics refers to a branch of complex systems research in statistical physics that studies phenomena such as the movement of crowds and queues and other systems of complex human interactions, including the statistical modelling of human networks and of interactions over communications networks.

Nicholas Harrison FRSC FInstP is an English theoretical physicist known for his work on developing theory and computational methods for discovering and optimising advanced materials. He is the Professor of Computational Materials Science in the Department of Chemistry at Imperial College London, where he is co-director of the Institute of Molecular Science and Engineering.

Relativistic heavy-ion collisions produce very large numbers of subatomic particles in all directions. In such collisions, flow refers to how the energy, momentum, and number of these particles vary with direction, and elliptic flow is a measure of how the flow is not uniform in all directions when viewed along the beam-line. Elliptic flow is strong evidence for the existence of quark–gluon plasma, and has been described as one of the most important observations measured at the Relativistic Heavy Ion Collider (RHIC).

Nam Chang-hee is a South Korean plasma physicist. Nam specializes in the exploration of relativistic laser-matter interactions using femtosecond PW lasers. Currently he is a professor of physics at Gwangju Institute of Science and Technology and director of the Center for Relativistic Laser Science, part of the Institute for Basic Science (IBS).

Matjaž Perc is Professor of Physics at the University of Maribor, Slovenia, and director of the Complex Systems Center Maribor. He is a member of Academia Europaea and among the top 1% most cited physicists according to Thomson Reuters Highly Cited Researchers. He is an Outstanding Referee of the Physical Review and Physical Review Letters journals, and a Distinguished Referee of EPL. He received the Young Scientist Award for Socio- and Econophysics in 2015. His research has been widely reported in the media and professional literature.

Quantum machine learning is an emerging interdisciplinary research area at the intersection of quantum physics and machine learning. The most common use of the term refers to machine learning algorithms for the analysis of classical data executed on a quantum computer, i.e. quantum-enhanced machine learning. While machine learning algorithms are used to compute immense quantities of data, quantum machine learning increases such capabilities intelligently, by creating opportunities to conduct analysis on quantum states and systems. This includes hybrid methods that involve both classical and quantum processing, where computationally difficult subroutines are outsourced to a quantum device. These routines can be more complex in nature and executed faster with the assistance of quantum devices. Furthermore, quantum algorithms can be used to analyze quantum states instead of classical data. Beyond quantum computing, the term "quantum machine learning" is often associated with classical machine learning methods applied to data generated from quantum experiments, such as learning quantum phase transitions or creating new quantum experiments. Quantum machine learning also extends to a branch of research that explores methodological and structural similarities between certain physical systems and learning systems, in particular neural networks. For example, some mathematical and numerical techniques from quantum physics are applicable to classical deep learning and vice versa. Finally, researchers investigate more abstract notions of learning theory with respect to quantum information, sometimes referred to as "quantum learning theory".

A quantum heat engine is a device that generates power from the heat flow between hot and cold reservoirs. The operation mechanism of the engine can be described by the laws of quantum mechanics. The first realization of a quantum heat engine was pointed out by Scovil and Schulz-DuBois in 1959, showing the connection between the efficiency of the Carnot engine and that of the 3-level maser. Quantum refrigerators share the structure of quantum heat engines, with the purpose of pumping heat from a cold to a hot bath while consuming power, as first suggested by Geusic, Schulz-DuBois, De Grasse and Scovil. When the power is supplied by a laser, the process is termed optical pumping or laser cooling, suggested by Wineland and Hänsch. Surprisingly, heat engines and refrigerators can operate down to the scale of a single particle, thus justifying the need for a quantum theory, termed quantum thermodynamics.

Randomized benchmarking is a method for assessing the capabilities of quantum computing hardware platforms by estimating the average error rates measured under the implementation of long sequences of random quantum gate operations. It is the standard used by quantum hardware developers such as IBM and Google to test the validity of quantum operations, which in turn is used to improve the functionality of the hardware. The original theory of randomized benchmarking assumed the implementation of sequences of Haar-random or pseudo-random operations, but this had several practical limitations. The standard method of randomized benchmarking (RB) applied today is normally a more efficient version of the protocol based on uniformly random Clifford operations, which was proposed in 2006 by Dankert et al. as an application of the theory of unitary t-designs. In current usage, randomized benchmarking sometimes refers to the broader family of generalizations of the 2005 protocol involving different random gate sets that can identify various features of the strength and type of errors affecting the elementary quantum gate operations. Randomized benchmarking protocols are an important means of verifying and validating quantum operations and are also routinely used for the optimization of quantum control procedures.
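
To make the estimation step concrete, the sketch below assumes the commonly used decay model for standard RB, in which the average survival probability after m random gates follows P(m) = A·p^m + B, and the average error rate per gate is r = (d − 1)(1 − p)/d with d = 2^n for n qubits. The model, the assumption that the offset B is known in advance, the function names, and the synthetic data are all illustrative assumptions rather than details taken from the text above.

```python
import math


def fit_rb_decay(lengths, survival, offset):
    """Fit P(m) = A * p**m + offset, with `offset` assumed known,
    via a straight-line fit of log(P - offset) against m."""
    xs = list(lengths)
    ys = [math.log(s - offset) for s in survival]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    amplitude = math.exp(mean_y - slope * mean_x)
    decay = math.exp(slope)
    return amplitude, decay


def average_error_rate(decay, num_qubits):
    """Convert the decay parameter p into r = (d - 1)(1 - p) / d."""
    d = 2 ** num_qubits
    return (d - 1) * (1 - decay) / d


# Synthetic single-qubit data (made up): A = 0.5, B = 0.5, p = 0.99.
lengths = [1, 10, 50, 100, 200]
survival = [0.5 * 0.99 ** m + 0.5 for m in lengths]
amplitude, decay = fit_rb_decay(lengths, survival, offset=0.5)
error_rate = average_error_rate(decay, num_qubits=1)
```

In practice A and B absorb state-preparation and measurement errors and are usually fitted together with p by nonlinear least squares; fixing B here only keeps the example short.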

Applying classical methods of machine learning to the study of quantum systems is the focus of an emergent area of physics research. A basic example of this is quantum state tomography, where a quantum state is learned from measurement. Other examples include learning Hamiltonians, learning quantum phase transitions, and automatically generating new quantum experiments. Classical machine learning is effective at processing large amounts of experimental or calculated data in order to characterize an unknown quantum system, making its application useful in contexts including quantum information theory, quantum technologies development, and computational materials design. In this context, it can be used, for example, as a tool to interpolate pre-calculated interatomic potentials or to solve the Schrödinger equation directly with a variational method.

The PK-4 (Plasmakristall-4) laboratory is a joint Russian-European laboratory for the investigation of dusty/complex plasmas on board the International Space Station (ISS), with the principal investigators at the Institute of Materials Science at the German Aerospace Center (DLR) and the Russian Institute for High Energy Densities of the Russian Academy of Sciences. It is the third laboratory on board the ISS to study complex plasmas, after the PKE Nefedov and PK-3 Plus experiments. In contrast to the previous setups, the geometry was significantly changed and is better suited to studying flowing complex plasmas.

Gregor Eugen Morfill is a German physicist who works in basic astrophysical research and deals with complex plasmas and plasma medicine.

Jürgen Meyer-ter-Vehn is a German theoretical physicist who specializes in laser-plasma interactions at the Max Planck Institute for Quantum Optics. He published under the name Meyer until 1973.