Prelinguistic development (birth – 1 year)

Perception

Children do not utter their first words until they are about 1 year old, but already at birth they can tell some utterances in their native language from utterances in languages with different prosodic features. [3]

1 month

Categorical perception

Infants as young as 1 month perceive some speech sounds categorically, i.e., they display categorical perception of speech. For example, the sounds /b/ and /p/ differ in voice onset time (VOT), the delay between the opening of the lips and the onset of vocal fold vibration. Using a computer-generated VOT continuum between /b/ and /p/, Eimas et al. (1971) showed that English-learning infants discriminated differences that crossed the boundary between /b/ and /p/ better than equal-sized differences within the /b/ category or within the /p/ category. [4] Their method, which monitored changes in infants' sucking rate, became a major experimental technique for studying infant speech perception.
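The logic of category-based discrimination can be sketched in a few lines of Python. Following the classic account from adult categorical-perception studies, the sketch assumes that a listener can tell two sounds apart only when it assigns them different category labels. The VOT values, the 30 ms boundary and the slope are hypothetical numbers chosen for illustration, not parameters reported by Eimas et al. (1971), and infants of course do not label stimuli explicitly.

```python
import math

# Hypothetical parameters for illustration only (not from Eimas et al. 1971)
BOUNDARY_MS = 30.0  # assumed /b/-/p/ category boundary on the VOT continuum
SLOPE = 0.3         # assumed steepness of the identification curve (per ms)


def p_labelled_p(vot_ms: float) -> float:
    """Probability that a stimulus with this VOT is labelled /p/ (logistic curve)."""
    return 1.0 / (1.0 + math.exp(-SLOPE * (vot_ms - BOUNDARY_MS)))


def predicted_discrimination(vot_a: float, vot_b: float) -> float:
    """Probability that two stimuli receive different category labels.

    Under a strictly categorical model, this is the only way the
    listener can tell the two stimuli apart.
    """
    pa, pb = p_labelled_p(vot_a), p_labelled_p(vot_b)
    return pa * (1.0 - pb) + pb * (1.0 - pa)


# Three pairs, each differing by the same 20 ms step in VOT
for a, b in [(-20, 0), (20, 40), (60, 80)]:
    print(f"{a:>4} vs {b:>3} ms VOT: {predicted_discrimination(a, b):.2f}")
```

Only the pair that straddles the assumed boundary (20 vs 40 ms) receives a high predicted discrimination score; both within-category pairs score close to zero, even though all three pairs differ by the same physical amount.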

Infants up to 10–12 months of age can distinguish not only native sound contrasts but also nonnative ones, whereas older children and adults have lost the ability to discriminate some nonnative contrasts. [5] Exposure to the native language thus seems to restructure the perceptual system so that it reflects the system of contrasts in that language.

4 months

At 4 months, infants still prefer infant-directed speech to adult-directed speech. Whereas 1-month-olds show this preference only if the full speech signal is played to them, 4-month-olds prefer infant-directed speech even when only its pitch contours are played. [6] This shows that between 1 and 4 months of age, infants become better at tracking the suprasegmental information in the speech directed at them, and that by 4 months they have learned which suprasegmental features to attend to.

5 months

Babies prefer hearing their own name to hearing similar-sounding words. [7] It is possible that they have associated the meaning “me” with their name, although they may also simply recognize its form because they hear it so frequently.

6 months

With increasing exposure to the ambient language, infants learn to ignore sound distinctions that are not meaningful in their native language, e.g., the difference between two acoustically different versions of the vowel /i/ that differ only because of inter-speaker variability. By 6 months of age, infants have learned to treat acoustically different tokens of the same sound category, such as an /i/ spoken by a male versus a female speaker, as members of the same phonological category /i/. [8]

Statistical learning

Infants are able to extract meaningful distinctions in the language they are exposed to from the statistical properties of that language. For example, if English-learning infants are exposed to a continuum from prevoiced /d/ to voiceless unaspirated /t/ (similar to the /d/-/t/ distinction in Spanish) in which most tokens occur near the endpoints, i.e., tokens with strong prevoicing versus tokens with short-lag voice onset times (a bimodal distribution), they are better at discriminating these sounds than infants who are exposed mainly to tokens from the center of the continuum (a unimodal distribution). [9]

These results show that by 6 months, infants are sensitive to how often certain sounds occur in the language they hear and can learn from these frequency differences which cues are important to attend to. In natural language exposure, this means that sounds typical of a language (such as prevoiced /d/ in Spanish) occur often, so infants can learn them from mere exposure to the speech around them. All of this happens before infants know the meaning of any of the words they hear, and statistical learning has therefore been used to argue that infants can learn sound contrasts without attaching meaning to them.
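In the simplest case, a learner that tracks frequencies along a continuum can posit one category per peak in the distribution. The Python sketch below makes this idea concrete for a hypothetical 8-step /d/-/t/ continuum. The exposure counts are invented for illustration (they are not the frequencies used in the study cited above), and counting peaks is a deliberate simplification of distributional-learning models, which typically fit mixtures of Gaussian distributions instead.

```python
from itertools import groupby

# Hypothetical exposure counts per continuum step
# (step 1 = strongly prevoiced /d/, step 8 = short-lag /t/); invented for illustration.
BIMODAL = [1, 4, 2, 1, 1, 2, 4, 1]
UNIMODAL = [1, 1, 2, 4, 4, 2, 1, 1]


def count_modes(freqs: list[int]) -> int:
    """Count peaks in a frequency distribution over continuum steps.

    Runs of equal values are collapsed first so that a flat-topped peak
    counts once; both ends of the continuum count as lower than any step.
    """
    collapsed = [value for value, _ in groupby(freqs)]
    modes = 0
    for i, value in enumerate(collapsed):
        left = collapsed[i - 1] if i > 0 else float("-inf")
        right = collapsed[i + 1] if i + 1 < len(collapsed) else float("-inf")
        if value > left and value > right:
            modes += 1
    return modes


# Simplifying assumption: the learner posits one sound category per peak
print("bimodal exposure ->", count_modes(BIMODAL), "categories")    # → 2
print("unimodal exposure ->", count_modes(UNIMODAL), "categories")  # → 1
```

With these counts, bimodal exposure yields two categories, so the endpoint tokens fall into different categories and should be easier to tell apart, while unimodal exposure yields a single category that covers the whole continuum.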

At 6 months, infants are also able to use prosodic features of the ambient language to break the speech stream into meaningful units; e.g., they are better able to distinguish sounds that occur in stressed vs. unstressed syllables. [10] This means that at 6 months, infants have some knowledge of the stress patterns in the speech they are exposed to and have learned that these patterns are meaningful.

7 months

At 7.5 months, English-learning infants have been shown to segment from fluent speech words with a strong-weak (i.e., trochaic) stress pattern, the most common stress pattern in English, but not words with a weak-strong (i.e., iambic) pattern. Given the sequence ‘guitar is’, for example, these infants treated ‘taris’ as a word unit because it follows a strong-weak pattern. [11] The process by which infants use prosodic cues in the speech input to learn about language structure has been termed “prosodic bootstrapping”. [12]
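The strong-weak segmentation pattern can be sketched as a simple rule that starts a new word unit at every stressed syllable. The Python function below is a simplified illustration, not a model of what infants actually compute; the (syllable, stressed) input format and the syllabification of the examples are assumptions made for the sketch.

```python
# Each syllable is a (text, stressed) pair; this input format is assumed for illustration.
Syllable = tuple[str, bool]


def segment_trochaic(syllables: list[Syllable]) -> list[str]:
    """Start a new word unit at every stressed syllable (strong-weak heuristic)."""
    units: list[str] = []
    current = ""
    for text, stressed in syllables:
        if stressed and current:
            # A strong syllable closes the unit built so far and opens a new one
            units.append(current)
            current = ""
        current += text
    if current:
        units.append(current)
    return units


# 'guitar is' with stress on 'tar': the heuristic produces the unit 'taris'
print(segment_trochaic([("gui", False), ("tar", True), ("is", False)]))   # → ['gui', 'taris']
# 'the doctor' with stress on 'doc': the trochaic word is recovered correctly
print(segment_trochaic([("the", False), ("doc", True), ("tor", False)]))  # → ['the', 'doctor']
```

The first example also shows the cost of the heuristic: the unstressed first syllable of the weak-strong word 'guitar' is split off, and the following weak syllable is attached to its stressed syllable instead.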

8 months

While children generally don't understand the meaning of most single words yet, they understand the meaning of certain phrases they hear a lot, such as “Stop it,” or “Come here.” [13]

9 months

Infants can distinguish native from nonnative language input using phonetic and phonotactic patterns alone, i.e., without the help of prosodic cues. [14] They seem to have learned their native language's phonotactics, i.e., which combinations of sounds are possible in the language.

10-12 months

Infants can now no longer discriminate most nonnative sound contrasts that fall within a single sound category of their native language. [15] Their perceptual system has become tuned to the contrasts relevant in their native language. As for word comprehension, Fenson et al. (1994) measured the comprehension vocabulary of 10- to 11-month-old children and found sizes ranging from 11 to 154 words. [13] At this age, children normally have not yet begun to speak and thus have no production vocabulary, so comprehension vocabulary clearly develops before production vocabulary.

Production

Stages of pre-speech vocal development

Even though children do not produce their first words until they are approximately 12 months old, the ability to produce speech sounds starts to develop at a much younger age. Stark (1980) distinguishes five stages of early speech development: [16]

0-6 weeks: Reflexive vocalizations

These earliest vocalizations include crying and vegetative sounds such as breathing, sucking, or sneezing. In producing these sounds, infants’ vocal folds vibrate and air passes through their vocal apparatus, which familiarizes them with processes involved in later speech production.

6-16 weeks: Cooing and laughter

Infants produce cooing sounds when they are content. Cooing is often triggered by social interaction with caregivers and resembles the production of vowels.

16-30 weeks: Vocal play

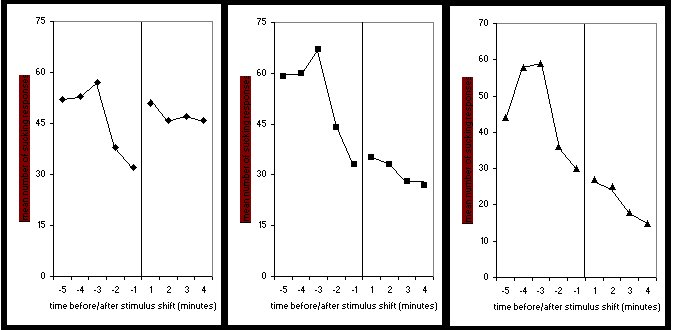

Infants produce a variety of vowel- and consonant-like sounds that they combine into increasingly longer sequences. The production of vowel sounds (already in the first 2 months) precedes the production of consonants, with the first back consonants (e.g., [g], [k]) being produced around 2–3 months, and front consonants (e.g., [m], [n], [p]) starting to appear around 6 months of age. As for pitch contours in early infant utterances, infants between 3 and 9 months of age produce primarily flat, falling and rising-falling contours. Rising pitch contours would require the infants to raise subglottal pressure during the vocalization or to increase vocal fold length or tension at the end of the vocalization, or both. At 3 to 9 months infants don't seem to be able to control these movements yet. [17]

6-10 months: Reduplicated babbling (or canonical babbling)

Reduplicated babbling (or canonical babbling [18]) consists of consonant-vowel (CV) syllables repeated in series with the same consonant and vowel (e.g., [bababa]). At this stage, infants’ productions resemble speech much more closely in timing and vocal behavior than at earlier stages. Starting around 6 months, babies’ babbling also shows an influence of the ambient language, i.e., it sounds different depending on which languages they hear. For example, French-learning 9- to 10-month-olds have been found to produce a larger proportion of prevoiced stops (which exist in French but not in English) in their babbling than English-learning infants of the same age. [19] This influence of the language being acquired on babbling has been called babbling drift. [20]

10-14 months: Nonreduplicated babbling (or variegated babbling)

In nonreduplicated babbling (or variegated babbling [18]), infants combine different vowels and consonants into syllable strings. At this stage, infants also produce various stress and intonation patterns. During this transitional period from babbling to the first word, children also produce “protowords”, i.e., invented words that are used consistently to express specific meanings but are not real words in the children's target language. [21] Around 12–14 months of age, children produce their first word. Infants close to one year of age can produce rising pitch contours in addition to flat, falling, and rising-falling ones. [17]