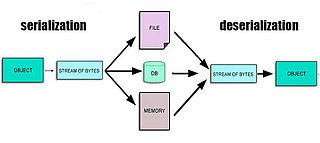

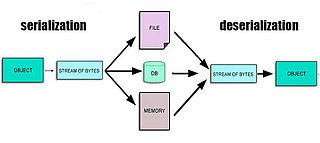

In computing, serialization is the process of translating a data structure or object state into a format that can be stored or transmitted and reconstructed later. When the resulting series of bits is reread according to the serialization format, it can be used to create a semantically identical clone of the original object. For many complex objects, such as those that make extensive use of references, this process is not straightforward. Serialization of objects does not include any of their associated methods with which they were previously linked.

The Semantic Web, sometimes known as Web 3.0, is an extension of the World Wide Web through standards set by the World Wide Web Consortium (W3C). The goal of the Semantic Web is to make Internet data machine-readable.

The Resource Description Framework (RDF) is a method to describe and exchange graph data. It was originally designed as a data model for metadata by the World Wide Web Consortium (W3C). It provides a variety of syntax notations and data serialization formats, of which the most widely used is Turtle.

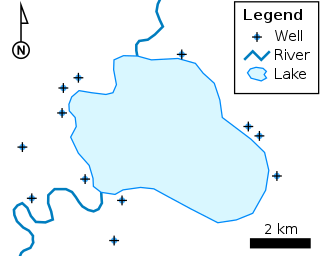

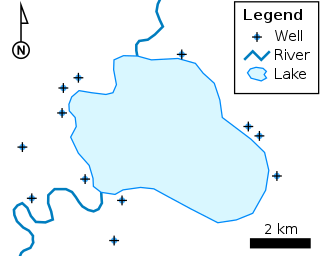

The Geography Markup Language (GML) is the XML grammar defined by the Open Geospatial Consortium (OGC) to express geographical features. GML serves as a modeling language for geographic systems as well as an open interchange format for geographic transactions on the Internet. Key to GML's utility is its ability to integrate all forms of geographic information, including not only conventional "vector" or discrete objects, but coverages and sensor data.

XML Linking Language, or XLink, is an XML markup language and W3C specification that provides methods for creating internal and external links within XML documents, and associating metadata with those links.

RDF Schema (Resource Description Framework Schema, variously abbreviated as RDFS, RDF(S), RDF-S, or RDF/S) is a set of classes with certain properties using the RDF extensible knowledge representation data model, providing basic elements for the description of ontologies. It uses various forms of RDF vocabularies, intended to structure RDF resources. RDF and RDFS can be saved in a triplestore, then one can extract some knowledge from them using a query language, like SPARQL.

The Extensible Metadata Platform (XMP) is an ISO standard, originally created by Adobe Systems Inc., for the creation, processing and interchange of standardized and custom metadata for digital documents and data sets.

Catalogue Service for the Web (CSW), sometimes seen as Catalogue Service - Web, is a standard for exposing a catalogue of geospatial records in XML on the Internet. The catalogue is made up of records that describe geospatial data, geospatial services, and related resources.

RDFa or Resource Description Framework in Attributes is a W3C Recommendation that adds a set of attribute-level extensions to HTML, XHTML and various XML-based document types for embedding rich metadata within Web documents. The Resource Description Framework (RDF) data-model mapping enables its use for embedding RDF subject-predicate-object expressions within XHTML documents. It also enables the extraction of RDF model triples by compliant user agents.

DOAP is an RDF Schema and XML vocabulary to describe software projects, in particular free and open source software.

An entity–attribute–value model (EAV) is a data model optimized for the space-efficient storage of sparse—or ad-hoc—property or data values, intended for situations where runtime usage patterns are arbitrary, subject to user variation, or otherwise unforeseeable using a fixed design. The use-case targets applications which offer a large or rich system of defined property types, which are in turn appropriate to a wide set of entities, but where typically only a small, specific selection of these are instantiated for a given entity. Therefore, this type of data model relates to the mathematical notion of a sparse matrix. EAV is also known as object–attribute–value model, vertical database model, and open schema.

The Ontology Definition MetaModel (ODM) is an Object Management Group (OMG) specification to make the concepts of Model-Driven Architecture applicable to the engineering of ontologies. Hence, it links Common Logic (CL), the Web Ontology Language (OWL), and the Resource Description Framework (RDF).

A metadata standard is a requirement which is intended to establish a common understanding of the meaning or semantics of the data, to ensure correct and proper use and interpretation of the data by its owners and users. To achieve this common understanding, a number of characteristics, or attributes of the data have to be defined, also known as metadata.

The Office Open XML file formats are a set of file formats that can be used to represent electronic office documents. There are formats for word processing documents, spreadsheets and presentations as well as specific formats for material such as mathematical formulas, graphics, bibliographies etc.

Metadata Authority Description Schema (MADS) is an XML schema developed by the United States Library of Congress' Network Development and Standards Office that provides an authority element set to complement the Metadata Object Description Schema (MODS).

The Sensor Observation Service (SOS) is a web service to query real-time sensor data and sensor data time series and is part of the Sensor Web. The offered sensor data consists of data directly from the sensors, which are encoded in the Sensor Model Language (SensorML), and the measured values in the Observations and Measurements encoding format. The web service as well as both file formats are open standards and specifications of the same name defined by the Open Geospatial Consortium (OGC).

The Asset Description Metadata Schema (ADMS) is a common metadata vocabulary to describe standards, so-called interoperability assets, on the Web.

The Thing Description (TD) (or W3C WoT Thing Description (TD)) is a royalty-free, open information model with a JSON based representation format for the Internet of Things (IoT). A TD provides a unified way to describe the capabilities of an IoT device or service with its offered data model and functions, protocol usage, and further metadata. Using Thing Descriptions help reduce the complexity of integrating IoT devices and their capabilities into IoT applications.