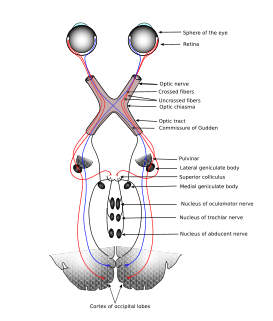

Perception is the organization, identification, and interpretation of sensory information in order to represent and understand the presented information or environment. All perception involves signals that go through the nervous system, which in turn result from physical or chemical stimulation of the sensory system. Vision involves light striking the retina of the eye; smell is mediated by odor molecules; and hearing involves pressure waves.

In music, harmony is the process by which individual sounds are joined together or composed into whole units or compositions. Often, the term harmony refers to simultaneously occurring frequencies, pitches, or chords. However, harmony is generally understood to involve both vertical harmony (chords) and horizontal harmony (melody).

In music, timbre, also known as tone color or tone quality, is the perceived sound quality of a musical note, sound or tone. Timbre distinguishes different types of sound production, such as choir voices and musical instruments. It also enables listeners to distinguish different instruments in the same category.

Pitch is a perceptual property of sounds that allows their ordering on a frequency-related scale; more commonly, pitch is the quality that makes it possible to judge sounds as "higher" and "lower" in the sense associated with musical melodies. Pitch is a major auditory attribute of musical tones, along with duration, loudness, and timbre.
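For musical tones, pitch ordering tracks frequency on a roughly logarithmic scale: doubling the frequency raises the pitch by an octave. A common way to place a frequency on such a scale is to count equal-tempered semitones from a reference. The minimal sketch below assumes 12-tone equal temperament, an A4 reference of 440 Hz, and the MIDI note-numbering convention (A4 = 69). None of these come from the text above, and the mapping is a physical convenience rather than a model of perceived pitch.

```python
import math

# Assumptions (not from the text): 12-tone equal temperament, A4 = 440 Hz,
# and the MIDI convention that A4 is note number 69.
A4_HZ = 440.0
A4_MIDI = 69


def semitones_from_a4(freq_hz: float) -> float:
    """Signed distance from A4 in equal-tempered semitones (12 per octave)."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return 12.0 * math.log2(freq_hz / A4_HZ)


def midi_note_number(freq_hz: float) -> float:
    """Fractional MIDI note number; integer values fall exactly on equal-tempered notes."""
    return A4_MIDI + semitones_from_a4(freq_hz)


if __name__ == "__main__":
    # Ordering by note number matches ordering by frequency, and each doubling adds 12.
    for f in sorted([880.0, 110.0, 261.63, 440.0]):
        print(f"{f:7.2f} Hz -> note {midi_note_number(f):6.2f}")
```

Because the scale is logarithmic, equal musical intervals correspond to equal frequency ratios rather than equal differences in hertz.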

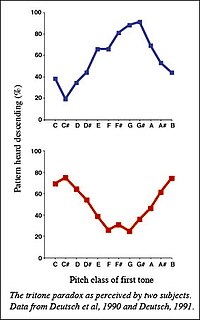

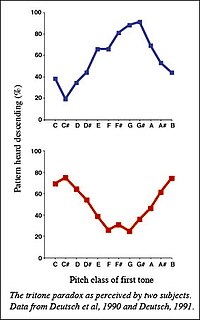

The tritone paradox is an auditory illusion in which a sequentially played pair of Shepard tones separated by an interval of a tritone, or half octave, is heard as ascending by some people and as descending by others. Different populations tend to favor one of a limited set of positions around the chromatic circle as central to the set of "higher" tones. In 1963, Roger Shepard had argued that such tone pairs would be heard ambiguously as either ascending or descending. In 1986, however, music psychology researcher Diana Deutsch discovered that when the judgments of individual listeners were considered separately, they depended on the positions of the tones along the chromatic circle. For example, one listener would hear the tone pair C–F♯ as ascending and the tone pair G–C♯ as descending, while another listener would hear C–F♯ as descending and G–C♯ as ascending. Furthermore, the way these tone pairs were perceived varied with the listener's language or dialect.
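A Shepard tone can be sketched as a sum of sinusoids spaced exactly one octave apart, weighted by a bell-shaped envelope over log-frequency, so that the pitch class (C, F♯, ...) is well defined while the octave is ambiguous. The code below builds the two tones of a C–F♯ tritone pair this way. The sample rate, lowest partial, number of octaves, and Gaussian envelope width are illustrative assumptions, not Shepard's or Deutsch's published stimulus parameters.

```python
import math

# Illustrative parameters (assumptions, not published stimulus values).
SAMPLE_RATE = 16_000                 # samples per second
BASE_FREQ = 16.35                    # Hz, close to C0; lowest partial of the C tone
N_OCTAVES = 9                        # number of octave-spaced partials per tone
CENTER_LOG2 = math.log2(BASE_FREQ) + N_OCTAVES / 2   # envelope peak, in log2(Hz)
SIGMA_OCTAVES = 1.5                  # width of the bell-shaped envelope, in octaves


def partials(pitch_class: int) -> list[tuple[float, float]]:
    """(frequency, amplitude) pairs for one Shepard tone.

    pitch_class counts semitones above C (0 = C, 6 = F sharp). Partials sit exactly
    one octave apart and the amplitude is a Gaussian in log-frequency, so the pitch
    class is clear while the octave is ambiguous.
    """
    result = []
    for k in range(N_OCTAVES):
        freq = BASE_FREQ * 2 ** (k + pitch_class / 12)
        distance = (math.log2(freq) - CENTER_LOG2) / SIGMA_OCTAVES
        result.append((freq, math.exp(-0.5 * distance ** 2)))
    return result


def shepard_tone(pitch_class: int, duration_s: float = 0.5) -> list[float]:
    """Sampled Shepard tone, scaled so that no sample exceeds 1 in magnitude."""
    parts = partials(pitch_class)
    total_amp = sum(amp for _, amp in parts)
    n_samples = round(SAMPLE_RATE * duration_s)
    return [
        sum(amp * math.sin(2 * math.pi * freq * i / SAMPLE_RATE) for freq, amp in parts) / total_amp
        for i in range(n_samples)
    ]


if __name__ == "__main__":
    # A tritone pair: C, then F sharp. F sharp is 6 semitones above C and also
    # 6 semitones below the next C, so "up" and "down" are equally short paths.
    first, second = shepard_tone(0), shepard_tone(6)
    print(len(first), len(second))
```

Since the frequency content alone does not say whether the second tone lies above or below the first, the direction a listener reports has to come from the listener; that is where the individual and dialect differences described above show up.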

The illusory continuity of tones is an auditory illusion that occurs when a tone is interrupted for a short time and a narrow band of noise is played during the interruption. The noise must be loud enough to effectively mask the gap, except in the gap transfer illusion. Whether the tone has constant, rising, or falling pitch, the ear perceives it as continuous if the discontinuity is masked by noise. Because the human ear is very sensitive to sudden changes, however, the illusion succeeds only if the amplitude of the tone does not decrease or increase too abruptly around the discontinuity. Although the underlying mechanisms of this illusion are not well understood, there is evidence that it primarily involves activation of the auditory cortex.
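To make the description concrete, the sketch below synthesizes one such stimulus: a steady tone with a short gap, raised-cosine ramps so that the tone's amplitude never changes abruptly, and a band of noise centred on the tone frequency that is louder than the tone and covers the gap and its ramps. Every numeric parameter (sample rate, 1 kHz tone, 200 Hz noise band, 10 ms ramps, 100 ms gap, noise gain) is an assumption chosen for illustration. The noise is approximated as a sum of random-phase sinusoids rather than filtered white noise.

```python
import math
import random

# Illustrative parameters (assumptions, not values from any specific study).
SAMPLE_RATE = 16_000   # samples per second
TONE_HZ = 1_000.0      # frequency of the steady tone
NOISE_BW_HZ = 200.0    # bandwidth of the noise band centred on the tone
RAMP = 160             # 10 ms raised-cosine ramp, so the tone never switches abruptly


def tone_gain(i: int, gap_start: int, gap_end: int) -> float:
    """Tone envelope: 1 outside the gap, 0 inside, smooth cosine ramps at both edges."""
    if i < gap_start - RAMP or i >= gap_end + RAMP:
        return 1.0
    if gap_start <= i < gap_end:
        return 0.0
    if i < gap_start:                    # fading out just before the gap
        x = (gap_start - i) / RAMP       # 1 at the ramp start, approaching 0 at the gap
    else:                                # fading back in just after the gap
        x = (i - gap_end + 1) / RAMP
    return 0.5 - 0.5 * math.cos(math.pi * x)


def narrowband_noise(n: int, rng: random.Random, components: int = 50) -> list[float]:
    """Approximate narrowband noise: equal-amplitude sinusoids with random phases.

    The amplitude sqrt(2 / components) gives an expected RMS of about 1.
    """
    lo = TONE_HZ - NOISE_BW_HZ / 2
    freqs = [lo + NOISE_BW_HZ * (k + 0.5) / components for k in range(components)]
    phases = [rng.uniform(0.0, 2 * math.pi) for _ in freqs]
    amp = math.sqrt(2.0 / components)
    return [
        amp * sum(math.sin(2 * math.pi * f * i / SAMPLE_RATE + p) for f, p in zip(freqs, phases))
        for i in range(n)
    ]


def continuity_stimulus(n: int = 9_600, gap: tuple[int, int] = (4_000, 5_600),
                        noise_gain: float = 3.0, seed: int = 0) -> list[float]:
    """Unit-amplitude tone with a gap; noise above the tone's level covers the gap and ramps."""
    rng = random.Random(seed)
    gap_start, gap_end = gap
    masked_from, masked_to = gap_start - RAMP, gap_end + RAMP
    noise = narrowband_noise(masked_to - masked_from, rng)
    out = []
    for i in range(n):
        s = tone_gain(i, gap_start, gap_end) * math.sin(2 * math.pi * TONE_HZ * i / SAMPLE_RATE)
        if masked_from <= i < masked_to:
            s += noise_gain * noise[i - masked_from]
        out.append(s)
    return out
```

The ramps implement the requirement that the tone's amplitude not change too abruptly; shrinking `RAMP` toward a single sample would reintroduce the sudden change that the paragraph says the ear is sensitive to.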

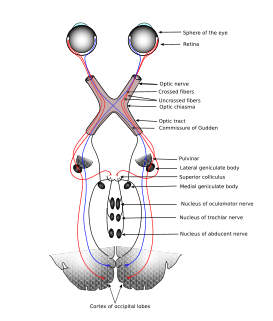

The sensory nervous system is a part of the nervous system responsible for processing sensory information. A sensory system consists of sensory neurons, neural pathways, and parts of the brain involved in sensory perception. Commonly recognized sensory systems are those for vision, hearing, touch, taste, smell, and balance. Senses are transducers from the physical world to the realm of the mind where people interpret the information, creating their perception of the world around them.

Stimulus modality, also called sensory modality, is one aspect of a stimulus or what is perceived after a stimulus. For example, the temperature modality is registered after heat or cold stimulates a receptor. Sensory modalities include light, sound, temperature, taste, pressure, and smell. The type and location of the sensory receptor activated by the stimulus play the primary role in coding the sensation. All sensory modalities work together to heighten the sensation of stimuli when necessary.

Iconic memory is the sensory memory register for the visual domain: a fast-decaying store of visual information. It is a component of the visual memory system, which also includes visual short-term memory (VSTM) and long-term memory (LTM). Iconic memory is described as a very brief, pre-categorical, high-capacity memory store. It contributes to VSTM by providing a coherent representation of our entire visual perception for a very brief period of time. Iconic memory helps account for phenomena such as change blindness and continuity of experience during saccades. It is no longer thought of as a single entity; instead, it is composed of at least two distinct components. Classic experiments, including Sperling's partial report paradigm, as well as modern techniques continue to provide insight into the nature of this sensory memory store.
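The logic of Sperling's partial report paradigm, as it is usually summarized, is a scaling argument. The row to be reported is cued only after the display has disappeared, so the proportion of the cued row that a participant recalls estimates the proportion of the whole array that was still available in iconic memory. The helper below is a hypothetical illustration of that arithmetic; the 3 × 4 array and the score of 3 are made-up example numbers, not experimental data.

```python
def partial_report_estimate(correct_in_cued_row: float, row_size: int, n_rows: int) -> float:
    """Estimate how many items of the whole array were still available when the cue arrived.

    Because the row to report is cued only after the display is gone, the
    proportion recalled from that row is treated as a sample of the whole array.
    """
    if not 0 <= correct_in_cued_row <= row_size:
        raise ValueError("correct_in_cued_row must be between 0 and row_size")
    proportion = correct_in_cued_row / row_size
    return proportion * row_size * n_rows


# Illustrative numbers only: 3 of 4 letters recalled from a cued row of a 3 x 4 array.
print(partial_report_estimate(3, row_size=4, n_rows=3))  # 9.0
```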

Subitizing is the rapid, accurate, and confident judgment of number performed for small numbers of items. The term was coined in 1949 by E. L. Kaufman et al. It is derived from the Latin adjective subitus ("sudden") and captures a feeling of immediately knowing how many items lie within the visual scene when the number of items present falls within the subitizing range. Sets larger than about four items cannot be subitized unless the items appear in a pattern with which the person is familiar. Large, familiar sets might be counted one by one. A person could also estimate the number of items in a large set, a skill similar to, but different from, subitizing.

Multisensory integration, also known as multimodal integration, is the study of how information from the different sensory modalities may be integrated by the nervous system. A coherent representation of objects combining modalities enables animals to have meaningful perceptual experiences. Indeed, multisensory integration is central to adaptive behavior because it allows animals to perceive a world of coherent perceptual entities. Multisensory integration also deals with how different sensory modalities interact with one another and alter each other's processing.

In music, consonance and dissonance are categorizations of simultaneous or successive sounds. Within the Western tradition, some listeners associate consonance with sweetness, pleasantness, and acceptability, and dissonance with harshness, unpleasantness, or unacceptability, although there is broad acknowledgement that this also depends on familiarity and musical expertise. The terms form a structural dichotomy in which they define each other by mutual exclusion: a consonance is what is not dissonant, and a dissonance is what is not consonant. However, closer consideration shows that the distinction forms a gradation, from the most consonant to the most dissonant. In casual discourse, as Hindemith stressed, "The two concepts have never been completely explained, and for a thousand years the definitions have varied". The term sonance has been proposed to encompass both consonance and dissonance, or to refer to them without distinction.

In audiology and psychoacoustics the concept of critical bands, introduced by Harvey Fletcher in 1933 and refined in 1940, describes the frequency bandwidth of the "auditory filter" created by the cochlea, the sense organ of hearing within the inner ear. Roughly, the critical band is the band of audio frequencies within which a second tone will interfere with the perception of the first tone by auditory masking.
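Critical bandwidth grows with centre frequency, and later researchers summarized the measurements in closed-form approximations. The sketch below uses two widely cited ones: Zwicker and Terhardt's (1980) critical-bandwidth formula and Glasberg and Moore's (1990) equivalent rectangular bandwidth (ERB). Neither is Fletcher's own formulation. The helper `within_critical_band` is a hypothetical rule of thumb (a second tone counts as "inside" the band when it lies within half a critical bandwidth of the first), meant only to illustrate the definition above.

```python
def critical_bandwidth_hz(f_hz: float) -> float:
    """Critical bandwidth (Hz) at centre frequency f_hz, after Zwicker & Terhardt (1980)."""
    f_khz = f_hz / 1000.0
    return 25.0 + 75.0 * (1.0 + 1.4 * f_khz ** 2) ** 0.69


def erb_hz(f_hz: float) -> float:
    """Equivalent rectangular bandwidth (Hz), after Glasberg & Moore (1990)."""
    return 24.7 * (4.37 * f_hz / 1000.0 + 1.0)


def within_critical_band(f1_hz: float, f2_hz: float) -> bool:
    """Hypothetical rule of thumb: is f2 within half a critical bandwidth of f1?"""
    return abs(f2_hz - f1_hz) <= critical_bandwidth_hz(f1_hz) / 2


if __name__ == "__main__":
    for f in (100, 1_000, 4_000):
        print(f"{f:5d} Hz: CB ~ {critical_bandwidth_hz(f):6.1f} Hz, ERB ~ {erb_hz(f):6.1f} Hz")
```

At 1 kHz the two formulas give roughly 162 Hz and 133 Hz respectively. They come from different measurement approaches, so neither should be read as the exact width of the cochlear filter.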

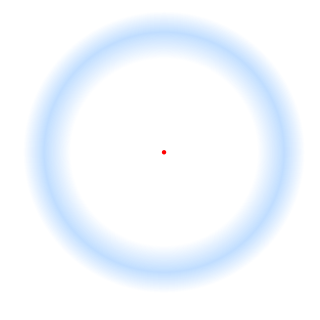

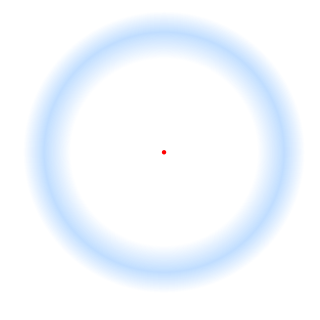

In vision, filling-in phenomena are those responsible for the completion of missing information across the physiological blind spot and across natural and artificial scotomata. There is also evidence for similar mechanisms of completion in normal visual analysis. Classical demonstrations of perceptual filling-in involve filling in at the blind spot in monocular vision, and images stabilized on the retina either by means of special lenses or under certain conditions of steady fixation. For example, in monocular vision the physiological blind spot is not perceived as a hole in the visual field; instead, its content is "filled in" based on information from the surrounding visual field. When a textured stimulus is presented centered on, but extending beyond, the region of the blind spot, a continuous texture is perceived. Paradoxically, observers tend to treat this partially inferred percept as more reliable than a percept based on external input.

Auditory masking occurs when the perception of one sound is affected by the presence of another sound.

In physics, sound is a vibration that propagates as an acoustic wave, through a transmission medium such as a gas, liquid or solid. In human physiology and psychology, sound is the reception of such waves and their perception by the brain. Only acoustic waves that have frequencies lying between about 20 Hz and 20 kHz, the audio frequency range, elicit an auditory percept in humans. In air at atmospheric pressure, these represent sound waves with wavelengths of 17 meters (56 ft) to 1.7 centimeters (0.67 in). Sound waves above 20 kHz are known as ultrasound and are not audible to humans. Sound waves below 20 Hz are known as infrasound. Different animal species have varying hearing ranges.
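The wavelengths quoted above follow from λ = c / f. The sketch below assumes a speed of sound in air of about 343 m/s (roughly its value near 20 °C), which the text does not state, and uses the nominal 20 Hz and 20 kHz limits to label frequency ranges; these boundaries are approximate.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at roughly 20 °C


def wavelength_m(freq_hz: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Wavelength in metres of a sound wave of the given frequency: lambda = c / f."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed_m_s / freq_hz


def classify(freq_hz: float) -> str:
    """Label a frequency using the nominal 20 Hz to 20 kHz human audio range."""
    if freq_hz < 20.0:
        return "infrasound"
    if freq_hz > 20_000.0:
        return "ultrasound"
    return "audible"


if __name__ == "__main__":
    for f in (10.0, 20.0, 1_000.0, 20_000.0, 40_000.0):
        print(f"{f:8.0f} Hz  {classify(f):10s}  wavelength {wavelength_m(f):.4f} m")
```

With these assumptions the two limits give 17.15 m and about 1.7 cm, matching the figures in the paragraph.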

The somatosensory system is the network of neural structures in the brain and body that produce the perception of touch, as well as temperature, body position (proprioception), and pain. It is a subset of the sensory nervous system, which also represents visual, auditory, olfactory, and gustatory stimuli. Somatosensation begins when mechano- and thermosensitive structures in the skin or internal organs sense physical stimuli such as pressure on the skin. Activation of these structures, or receptors, leads to activation of peripheral sensory neurons that convey signals to the spinal cord as patterns of action potentials. Sensory information is then processed locally in the spinal cord to drive reflexes, and is also conveyed to the brain for conscious perception of touch and proprioception. Note that somatosensory information from the face and head enters the brain through peripheral sensory neurons in the cranial nerves, such as the trigeminal nerve.

Psychoacoustics is the branch of psychophysics involving the scientific study of sound perception and audiology, that is, how humans perceive various sounds. More specifically, it is the branch of science studying the psychological responses associated with sound. Psychoacoustics is an interdisciplinary field that draws on many areas, including psychology, acoustics, electronic engineering, physics, biology, physiology, and computer science.

Haptic memory is the form of sensory memory specific to touch stimuli. Haptic memory is used regularly when assessing the forces needed to grip and interact with familiar objects. It may also influence one's interactions with novel objects of an apparently similar size and density. As with visual iconic memory, traces of haptically acquired information are short-lived and prone to decay after approximately two seconds. Haptic memory is best for stimuli applied to areas of the skin that are more sensitive to touch. Haptics involves at least two subsystems: cutaneous (everything skin-related) and kinesthetic (joint angles and the relative location of body parts). Haptics generally involves active, manual examination and is quite capable of processing the physical traits of objects and surfaces.

Ernst Terhardt is a German engineer and psychoacoustician who made significant contributions in diverse areas of audio communication, including pitch perception, music cognition, and Fourier transformation. He was a professor of acoustic communication at the Institute of Electroacoustics, Technical University of Munich, Germany.