Computer programming or coding is the composition of sequences of instructions, called programs, that computers can follow to perform tasks. It involves designing and implementing algorithms, step-by-step specifications of procedures, by writing code in one or more programming languages. Programmers typically use high-level programming languages that are more easily intelligible to humans than machine code, which is directly executed by the central processing unit. Proficient programming usually requires expertise in several different subjects, including knowledge of the application domain, details of programming languages and generic code libraries, specialized algorithms, and formal logic.

A Linux distribution is an operating system made from a software collection that includes the Linux kernel and often a package management system. They are often obtained from the website of each distribution, which are available for a wide variety of systems ranging from embedded devices and personal computers to servers and powerful supercomputers.

An operating system (OS) is system software that manages computer hardware and software resources, and provides common services for computer programs.

Plan 9 from Bell Labs is a distributed operating system which originated from the Computing Science Research Center (CSRC) at Bell Labs in the mid-1980s and built on UNIX concepts first developed there in the late 1960s. Since 2000, Plan 9 has been free and open-source. The final official release was in early 2015.

OS-9 is a family of real-time, process-based, multitasking, multi-user operating systems, developed in the 1980s, originally by Microware Systems Corporation for the Motorola 6809 microprocessor. It was purchased by Radisys Corp in 2001, and was purchased again in 2013 by its current owner Microware LP.

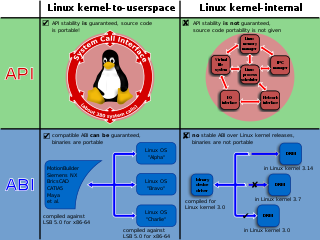

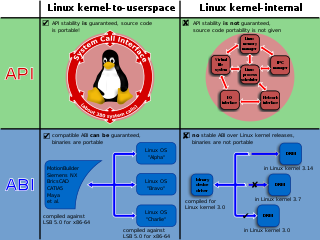

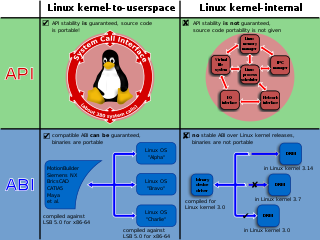

In computer software, an application binary interface (ABI) is an interface between two binary program modules. Often, one of these modules is a library or operating system facility, and the other is a program that is being run by a user.

In computing, cross-platform software is computer software that is designed to work in several computing platforms. Some cross-platform software requires a separate build for each platform, but some can be directly run on any platform without special preparation, being written in an interpreted language or compiled to portable bytecode for which the interpreters or run-time packages are common or standard components of all supported platforms.

A computing platform, digital platform, or software platform is the infrastructure on which software is executed. While the individual components of a computing platform may be obfuscated under layers of abstraction, the summation of the required components comprise the computing platform.

In computing, a system call is the programmatic way in which a computer program requests a service from the operating system on which it is executed. This may include hardware-related services, creation and execution of new processes, and communication with integral kernel services such as process scheduling. System calls provide an essential interface between a process and the operating system.

In software engineering, porting is the process of adapting software for the purpose of achieving some form of execution in a computing environment that is different from the one that a given program was originally designed for. The term is also used when software/hardware is changed to make them usable in different environments.

Hardware abstractions are sets of routines in software that provide programs with access to hardware resources through programming interfaces. The programming interface allows all devices in a particular class C of hardware devices to be accessed through identical interfaces even though C may contain different subclasses of devices that each provide a different hardware interface.

In computers, a printer driver or a print processor is a piece of software on a computer that converts the data to be printed to a format that a printer can understand. The purpose of printer drivers is to allow applications to do printing without being aware of the technical details of each printer model.

Inferno is a distributed operating system started at Bell Labs and now developed and maintained by Vita Nuova Holdings as free software under the MIT License. Inferno was based on the experience gained with Plan 9 from Bell Labs, and the further research of Bell Labs into operating systems, languages, on-the-fly compilers, graphics, security, networking and portability. The name of the operating system, many of its associated programs, and that of the current company, were inspired by Dante Alighieri's Divine Comedy. In Italian, Inferno means "hell", of which there are nine circles in Dante's Divine Comedy.

In computing, an abstraction layer or abstraction level is a way of hiding the working details of a subsystem. Examples of software models that use layers of abstraction include the OSI model for network protocols, OpenGL, and other graphics libraries, which allow the separation of concerns to facilitate interoperability and platform independence.

The GNU toolchain is a broad collection of programming tools produced by the GNU Project. These tools form a toolchain used for developing software applications and operating systems.

Linux is both a open-source Unix-like kernel and generic name for a family of open-source Unix-like operating systems based on the Linux kernel, an operating system kernel first released on September 17, 1991, by Linus Torvalds. Linux is typically packaged as a Linux distribution (distro), which includes the kernel and supporting system software and libraries, many of which are provided by the GNU Project. Many Linux distributions use the word "Linux" in their name, but the Free Software Foundation uses and recommends the name "GNU/Linux" to emphasize the use and importance of GNU software in many distributions, causing some controversy.

The following is a timeline of virtualization development. In computing, virtualization is the use of a computer to simulate another computer. Through virtualization, a host simulates a guest by exposing virtual hardware devices, which may be done through software or by allowing access to a physical device connected to the machine.

Unix is a family of multitasking, multi-user computer operating systems that derive from the original AT&T Unix, whose development started in 1969 at the Bell Labs research center by Ken Thompson, Dennis Ritchie, and others.

NetBSD is a free and open-source Unix-like operating system based on the Berkeley Software Distribution (BSD). It was the first open-source BSD descendant officially released after 386BSD was forked. It continues to be actively developed and is available for many platforms, including servers, desktops, handheld devices, and embedded systems.

The following outline is provided as an overview of and topical guide to the Perl programming language: