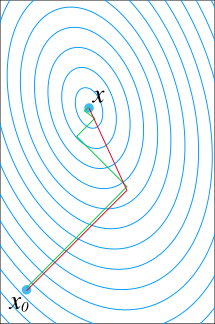

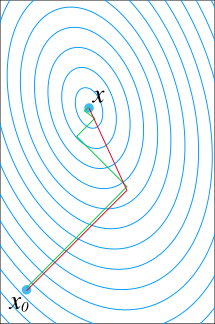

In computational mathematics, an iterative method is a mathematical procedure that uses an initial value to generate a sequence of improving approximate solutions for a class of problems, in which the n-th approximation is derived from the previous ones. A specific implementation of an iterative method, including the termination criteria, is an algorithm of the iterative method. An iterative method is called convergent if the corresponding sequence converges for given initial approximations. A mathematically rigorous convergence analysis of an iterative method is usually performed; however, heuristic-based iterative methods are also common.

Numerical analysis is the study of algorithms that use numerical approximation for the problems of mathematical analysis. It is the study of numerical methods that attempt at finding approximate solutions of problems rather than the exact ones. Numerical analysis finds application in all fields of engineering and the physical sciences, and in the 21st century also the life and social sciences, medicine, business and even the arts. Current growth in computing power has enabled the use of more complex numerical analysis, providing detailed and realistic mathematical models in science and engineering. Examples of numerical analysis include: ordinary differential equations as found in celestial mechanics, numerical linear algebra in data analysis, and stochastic differential equations and Markov chains for simulating living cells in medicine and biology.

In linear algebra, the Cholesky decomposition or Cholesky factorization is a decomposition of a Hermitian, positive-definite matrix into the product of a lower triangular matrix and its conjugate transpose, which is useful for efficient numerical solutions, e.g., Monte Carlo simulations. It was discovered by André-Louis Cholesky for real matrices, and posthumously published in 1924. When it is applicable, the Cholesky decomposition is roughly twice as efficient as the LU decomposition for solving systems of linear equations.

In numerical analysis, the minimum degree algorithm is an algorithm used to permute the rows and columns of a symmetric sparse matrix before applying the Cholesky decomposition, to reduce the number of non-zeros in the Cholesky factor. This results in reduced storage requirements and means that the Cholesky factor can be applied with fewer arithmetic operations.

In mathematics, the conjugate gradient method is an algorithm for the numerical solution of particular systems of linear equations, namely those whose matrix is positive-definite. The conjugate gradient method is often implemented as an iterative algorithm, applicable to sparse systems that are too large to be handled by a direct implementation or other direct methods such as the Cholesky decomposition. Large sparse systems often arise when numerically solving partial differential equations or optimization problems.

In numerical analysis, a multigrid method is an algorithm for solving differential equations using a hierarchy of discretizations. They are an example of a class of techniques called multiresolution methods, very useful in problems exhibiting multiple scales of behavior. For example, many basic relaxation methods exhibit different rates of convergence for short- and long-wavelength components, suggesting these different scales be treated differently, as in a Fourier analysis approach to multigrid. MG methods can be used as solvers as well as preconditioners.

In mathematics, preconditioning is the application of a transformation, called the preconditioner, that conditions a given problem into a form that is more suitable for numerical solving methods. Preconditioning is typically related to reducing a condition number of the problem. The preconditioned problem is then usually solved by an iterative method.

In numerical analysis and linear algebra, lower–upper (LU) decomposition or factorization factors a matrix as the product of a lower triangular matrix and an upper triangular matrix. The product sometimes includes a permutation matrix as well. LU decomposition can be viewed as the matrix form of Gaussian elimination. Computers usually solve square systems of linear equations using LU decomposition, and it is also a key step when inverting a matrix or computing the determinant of a matrix. The LU decomposition was introduced by the Polish mathematician Tadeusz Banachiewicz in 1938.

Numerical linear algebra, sometimes called applied linear algebra, is the study of how matrix operations can be used to create computer algorithms which efficiently and accurately provide approximate answers to questions in continuous mathematics. It is a subfield of numerical analysis, and a type of linear algebra. Computers use floating-point arithmetic and cannot exactly represent irrational data, so when a computer algorithm is applied to a matrix of data, it can sometimes increase the difference between a number stored in the computer and the true number that it is an approximation of. Numerical linear algebra uses properties of vectors and matrices to develop computer algorithms that minimize the error introduced by the computer, and is also concerned with ensuring that the algorithm is as efficient as possible.

In numerical linear algebra, an incomplete LU factorization of a matrix is a sparse approximation of the LU factorization often used as a preconditioner.

In numerical mathematics, relaxation methods are iterative methods for solving systems of equations, including nonlinear systems.

In numerical linear algebra, the alternating-direction implicit (ADI) method is an iterative method used to solve Sylvester matrix equations. It is a popular method for solving the large matrix equations that arise in systems theory and control, and can be formulated to construct solutions in a memory-efficient, factored form. It is also used to numerically solve parabolic and elliptic partial differential equations, and is a classic method used for modeling heat conduction and solving the diffusion equation in two or more dimensions. It is an example of an operator splitting method.

Lis is a scalable parallel software library for solving discretized linear equations and eigenvalue problems that mainly arise in the numerical solution of partial differential equations by using iterative methods. Although it is designed for parallel computers, the library can be used without being conscious of parallel processing.

Jinchao Xu is an American-Chinese mathematician. He is currently the Verne M. Willaman Professor in the Department of Mathematics at the Pennsylvania State University, University Park. He is known for his work on multigrid methods, domain decomposition methods, finite element methods, and more recently deep neural networks.

Locally Optimal Block Preconditioned Conjugate Gradient (LOBPCG) is a matrix-free method for finding the largest eigenvalues and the corresponding eigenvectors of a symmetric generalized eigenvalue problem

In numerical mathematics, hierarchical matrices (H-matrices) are used as data-sparse approximations of non-sparse matrices. While a sparse matrix of dimension can be represented efficiently in units of storage by storing only its non-zero entries, a non-sparse matrix would require units of storage, and using this type of matrices for large problems would therefore be prohibitively expensive in terms of storage and computing time. Hierarchical matrices provide an approximation requiring only units of storage, where is a parameter controlling the accuracy of the approximation. In typical applications, e.g., when discretizing integral equations, preconditioning the resulting systems of linear equations, or solving elliptic partial differential equations, a rank proportional to with a small constant is sufficient to ensure an accuracy of . Compared to many other data-sparse representations of non-sparse matrices, hierarchical matrices offer a major advantage: the results of matrix arithmetic operations like matrix multiplication, factorization or inversion can be approximated in operations, where

Matrix Toolkit Java (MTJ) is an open-source Java software library for performing numerical linear algebra. The library contains a full set of standard linear algebra operations for dense matrices based on BLAS and LAPACK code. Partial set of sparse operations is provided through the Templates project. The library can be configured to run as a pure Java library or use BLAS machine-optimized code through the Java Native Interface.

In numerical analysis, the mixed finite element method, is a type of finite element method in which extra fields to be solved are introduced during the posing a partial differential equation problem. Somewhat related is the hybrid finite element method. The extra fields are constrained by using Lagrange multiplier fields. To be distinguished from the mixed finite element method, usual finite element methods that do not introduce such extra fields are also called irreducible or primal finite element methods. The mixed finite element method is efficient for some problems that would be numerically ill-posed if discretized by using the irreducible finite element method; one example of such problems is to compute the stress and strain fields in an almost incompressible elastic body.

In numerical linear algebra, the Bartels–Stewart algorithm is used to numerically solve the Sylvester matrix equation . Developed by R.H. Bartels and G.W. Stewart in 1971, it was the first numerically stable method that could be systematically applied to solve such equations. The algorithm works by using the real Schur decompositions of and to transform into a triangular system that can then be solved using forward or backward substitution. In 1979, G. Golub, C. Van Loan and S. Nash introduced an improved version of the algorithm, known as the Hessenberg–Schur algorithm. It remains a standard approach for solving Sylvester equations when is of small to moderate size.