In digital signal processing, spatial anti-aliasing is a technique for minimizing the distortion artifacts (aliasing) when representing a high-resolution image at a lower resolution. Anti-aliasing is used in digital photography, computer graphics, digital audio, and many other applications.

Texture mapping is a method for mapping a texture on a computer-generated graphic. Texture here can be high frequency detail, surface texture, or color.

Shading refers to the depiction of depth perception in 3D models or illustrations by varying the level of darkness. Shading tries to approximate local behavior of light on the object's surface and is not to be confused with techniques of adding shadows, such as shadow mapping or shadow volumes, which fall under global behavior of light.

The GeForce 3 series (NV20) is the third generation of Nvidia's GeForce line of graphics processing units (GPUs). Introduced in February 2001, it advanced the GeForce architecture by adding programmable pixel and vertex shaders, multisample anti-aliasing and improved the overall efficiency of the rendering process.

In computer graphics, mipmaps or pyramids are pre-calculated, optimized sequences of images, each of which is a progressively lower resolution representation of the previous. The height and width of each image, or level, in the mipmap is a factor of two smaller than the previous level. Mipmaps do not have to be square. They are intended to increase rendering speed and reduce aliasing artifacts. A high-resolution mipmap image is used for high-density samples, such as for objects close to the camera; lower-resolution images are used as the object appears farther away. This is a more efficient way of downfiltering (minifying) a texture than sampling all texels in the original texture that would contribute to a screen pixel; it is faster to take a constant number of samples from the appropriately downfiltered textures. Mipmaps are widely used in 3D computer games, flight simulators, other 3D imaging systems for texture filtering, and 2D and 3D GIS software. Their use is known as mipmapping. The letters MIP in the name are an acronym of the Latin phrase multum in parvo, meaning "much in little".

The RIVA 128, or "NV3", was a consumer graphics processing unit created in 1997 by Nvidia. It was the first to integrate 3D acceleration in addition to traditional 2D and video acceleration. Its name is an acronym for Real-time Interactive Video and Animation accelerator.

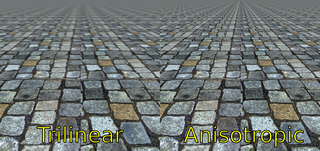

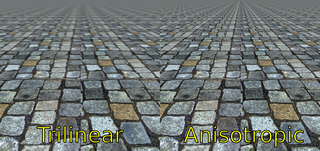

In 3D computer graphics, anisotropic filtering is a method of enhancing the image quality of textures on surfaces of computer graphics that are at oblique viewing angles with respect to the camera where the projection of the texture appears to be non-orthogonal.

In mathematics, bilinear interpolation is a method for interpolating functions of two variables using repeated linear interpolation. It is usually applied to functions sampled on a 2D rectilinear grid, though it can be generalized to functions defined on the vertices of arbitrary convex quadrilaterals.

In computer graphics, texture filtering or texture smoothing is the method used to determine the texture color for a texture mapped pixel, using the colors of nearby texels.

The S3 ViRGE (Video and Rendering Graphics Engine) graphics chipset was one of the first 2D/3D accelerators designed for the mass market.

A lightmap is a data structure used in lightmapping, a form of surface caching in which the brightness of surfaces in a virtual scene is pre-calculated and stored in texture maps for later use. Lightmaps are most commonly applied to static objects in applications that use real-time 3D computer graphics, such as video games, in order to provide lighting effects such as global illumination at a relatively low computational cost.

In computer graphics, pixelation is caused by displaying a bitmap or a section of a bitmap at such a large size that individual pixels, small single-colored square display elements that comprise the bitmap, are visible. Such an image is said to be pixelated.

Pixel art scaling algorithms are graphical filters that attempt to enhance the appearance of hand-drawn 2D pixel art graphics. These algorithms are a form of automatic image enhancement. Pixel art scaling algorithms employ methods significantly different than the common methods of image rescaling, which have the goal of preserving the appearance of images.

The Xenos is a custom graphics processing unit (GPU) designed by ATI, used in the Xbox 360 video game console developed and produced for Microsoft. Developed under the codename "C1", it is in many ways related to the R520 architecture and therefore very similar to an ATI Radeon X1800 XT series of PC graphics cards as far as features and performance are concerned. However, the Xenos introduced new design ideas that were later adopted in the TeraScale microarchitecture, such as the unified shader architecture. The package contains two separate dies, the GPU and an eDRAM, featuring a total of 337 million transistors.

In computer graphics and digital imaging, imagescaling refers to the resizing of a digital image. In video technology, the magnification of digital material is known as upscaling or resolution enhancement.

The ATI Rage is a series of graphics chipsets developed by ATI Technologies offering graphical user interface (GUI) 2D acceleration, video acceleration, and 3D acceleration developed by ATI Technologies. It is the successor to the ATI Mach series of 2D accelerators.

The RSX 'Reality Synthesizer' is a proprietary graphics processing unit (GPU) codeveloped by Nvidia and Sony for the PlayStation 3 game console. It is based on the Nvidia 7800GTX graphics processor and, according to Nvidia, is a G70/G71 hybrid architecture with some modifications. The RSX has separate vertex and pixel shader pipelines. The GPU makes use of 256 MB GDDR3 RAM clocked at 650 MHz with an effective transmission rate of 1.3 GHz and up to 224 MB of the 3.2 GHz XDR main memory via the CPU . Although it carries the majority of the graphics processing, the Cell Broadband Engine, the console's CPU, is also used complementarily for some graphics-related computational loads of the console.

The term post-processing is used in the video and film industry for quality-improvement image processing methods used in video playback devices, such as stand-alone DVD-Video players; video playing software; and transcoding software. It is also commonly used in real-time 3D rendering to add additional effects.

InfiniteReality refers to a 3D graphics hardware architecture and a family of graphics systems that implemented the aforementioned hardware architecture that was developed and manufactured by Silicon Graphics from 1996 to 2005. The InfiniteReality was positioned as Silicon Graphics' high-end visualization hardware for their MIPS/IRIX platform and was used exclusively in their Onyx family of visualization systems, which are sometimes referred to as "graphics supercomputers" or "visualization supercomputers". The InfiniteReality was marketed to and used by large organizations such as companies and universities that are involved in computer simulation, digital content creation, engineering and research.

This is a glossary of terms relating to computer graphics.