In statistics, the score test assesses constraints on statistical parameters based on the gradient of the likelihood function (known as the score), evaluated at the hypothesized parameter value under the null hypothesis. Intuitively, if the restricted estimator is near the maximum of the likelihood function, the score should not differ from zero by more than sampling error. While the finite sample distributions of score tests are generally unknown, they have an asymptotic χ²-distribution under the null hypothesis, as first proved by C. R. Rao in 1948, a fact that can be used to determine statistical significance.
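
The description above can be written compactly. The notation here is an assumption added for illustration and does not come from the text: $L$ is the likelihood, $U$ the score, $\mathcal{I}$ the Fisher information, $\theta_0$ the hypothesized value, and $k$ the number of constraints being tested.

```latex
U(\theta) = \frac{\partial \log L(\theta \mid x)}{\partial \theta},
\qquad
S(\theta_0) = U(\theta_0)^{\top} \, \mathcal{I}(\theta_0)^{-1} \, U(\theta_0)
\;\xrightarrow{d}\; \chi^2_k
\quad \text{under } H_0 .
```

A large value of $S(\theta_0)$ relative to the $\chi^2_k$ distribution indicates that the score is further from zero than sampling error alone would explain.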

In statistics, homogeneity and its opposite, heterogeneity, arise in describing the properties of a dataset, or several datasets. They relate to the validity of the often convenient assumption that the statistical properties of any one part of an overall dataset are the same as any other part. In meta-analysis, which combines the data from several studies, homogeneity measures the differences or similarities between the several studies.

In econometrics, the seemingly unrelated regressions (SUR) or seemingly unrelated regression equations (SURE) model, proposed by Arnold Zellner in 1962, is a generalization of a linear regression model that consists of several regression equations, each having its own dependent variable and potentially different sets of exogenous explanatory variables. Each equation is a valid linear regression on its own and can be estimated separately, which is why the system is called seemingly unrelated, although some authors suggest that the term seemingly related would be more appropriate, since the error terms are assumed to be correlated across the equations.

In statistics, the Breusch–Pagan test, developed in 1979 by Trevor Breusch and Adrian Pagan, is used to test for heteroskedasticity in a linear regression model. It was independently suggested with some extension by R. Dennis Cook and Sanford Weisberg in 1983. Derived from the Lagrange multiplier test principle, it tests whether the variance of the errors from a regression is dependent on the values of the independent variables. In that case, heteroskedasticity is present.
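
As a rough illustration of the idea, the sketch below computes one common form of the statistic: the studentized (Koenker) variant, which is the sample size times the R² of an auxiliary regression of squared OLS residuals on the regressors. The choice of variant and the function name `breusch_pagan_lm` are assumptions made for this example, not taken from the text.

```python
import numpy as np

def breusch_pagan_lm(X, y):
    """Studentized Breusch-Pagan LM statistic (hypothetical helper).

    X holds the regressors WITHOUT a constant column; one is added here.
    Returns (statistic, degrees_of_freedom). Under homoskedasticity the
    statistic is asymptotically chi-squared with that many degrees of freedom.
    """
    y = np.asarray(y, dtype=float)
    n = len(y)
    Z = np.column_stack([np.ones(n), np.asarray(X, dtype=float)])

    # Step 1: ordinary least squares and its squared residuals.
    beta, *_ = np.linalg.lstsq(Z, y, rcond=None)
    u2 = (y - Z @ beta) ** 2

    # Step 2: auxiliary regression of squared residuals on the same regressors.
    gamma, *_ = np.linalg.lstsq(Z, u2, rcond=None)
    ss_res = np.sum((u2 - Z @ gamma) ** 2)
    ss_tot = np.sum((u2 - u2.mean()) ** 2)
    r_squared = 1.0 - ss_res / ss_tot

    return n * r_squared, Z.shape[1] - 1
```

The returned statistic would then be compared with a χ² critical value for the returned degrees of freedom; a large value suggests that the error variance depends on the regressors.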

In statistics, generalized least squares (GLS) is a method used to estimate the unknown parameters in a linear regression model. It is used when there is a non-zero amount of correlation between the residuals in the regression model. GLS is employed to improve statistical efficiency and reduce the risk of drawing erroneous inferences, as compared to conventional least squares and weighted least squares methods. It was first described by Alexander Aitken in 1935.
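
A minimal sketch of the estimator, assuming the error covariance matrix is known: the GLS estimate is (XᵀΩ⁻¹X)⁻¹XᵀΩ⁻¹y. The function name `gls` and the argument name `omega` are illustrative choices, not from the text.

```python
import numpy as np

def gls(X, y, omega):
    """Generalized least squares with a known error covariance matrix omega.

    Returns (beta, cov), where cov = (X' omega^-1 X)^-1 is the covariance
    of the estimate when omega is the full covariance of the errors.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    omega = np.asarray(omega, dtype=float)

    # Solve linear systems rather than inverting omega explicitly.
    omega_inv_X = np.linalg.solve(omega, X)
    omega_inv_y = np.linalg.solve(omega, y)

    xt_oinv_x = X.T @ omega_inv_X
    beta = np.linalg.solve(xt_oinv_x, X.T @ omega_inv_y)
    cov = np.linalg.inv(xt_oinv_x)
    return beta, cov
```

When `omega` is the identity matrix this reduces to ordinary least squares, and when it is diagonal it reduces to weighted least squares. In practice the covariance is usually unknown and must itself be estimated, which this sketch does not attempt.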

In statistics, the Durbin–Watson statistic is a test statistic used to detect the presence of autocorrelation at lag 1 in the residuals from a regression analysis. It is named after James Durbin and Geoffrey Watson. The small sample distribution of this ratio was derived by John von Neumann. Durbin and Watson applied this statistic to the residuals from least squares regressions, and developed bounds tests for the null hypothesis that the errors are serially uncorrelated against the alternative that they follow a first order autoregressive process. Note that the distribution of this test statistic does not depend on the estimated regression coefficients and the variance of the errors.
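
The statistic itself is simple to compute from a residual series: it is the sum of squared successive differences divided by the sum of squared residuals. The sketch below shows only that ratio, with a hypothetical function name; it does not implement the bounds test.

```python
import numpy as np

def durbin_watson(residuals):
    """Durbin-Watson statistic: sum((e_t - e_{t-1})^2) / sum(e_t^2)."""
    e = np.asarray(residuals, dtype=float)
    return np.sum(np.diff(e) ** 2) / np.sum(e ** 2)

# Perfectly alternating residuals (strong negative autocorrelation).
print(durbin_watson([1.0, -1.0, 1.0, -1.0]))  # 3.0
# Constant residuals (strong positive autocorrelation).
print(durbin_watson([1.0, 1.0, 1.0, 1.0]))    # 0.0
```

The statistic lies between 0 and 4. Values near 2 are consistent with no first-order autocorrelation, values toward 0 indicate positive autocorrelation, and values toward 4 indicate negative autocorrelation.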

The topic of heteroskedasticity-consistent (HC) standard errors arises in statistics and econometrics in the context of linear regression and time series analysis. These are also known as heteroskedasticity-robust standard errors or as Eicker–Huber–White standard errors, in recognition of the contributions of Friedhelm Eicker, Peter J. Huber, and Halbert White.
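
One way to make this concrete is the basic "sandwich" form, often labelled HC0: (XᵀX)⁻¹ Xᵀ diag(e²) X (XᵀX)⁻¹, where e are the OLS residuals. The sketch below assumes that variant and uses a hypothetical function name; small-sample corrections are not included.

```python
import numpy as np

def ols_hc0(X, y):
    """OLS coefficients with the HC0 heteroskedasticity-consistent covariance.

    X must already contain a constant column if an intercept is wanted.
    Returns (beta, cov); robust standard errors are sqrt(diag(cov)).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)

    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta

    bread = np.linalg.inv(X.T @ X)
    # "Meat" of the sandwich: sum over t of e_t^2 * x_t x_t'.
    meat = (X * resid[:, None] ** 2).T @ X
    return beta, bread @ meat @ bread
```

The point estimates are the ordinary least squares ones; only the covariance estimate changes.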

In econometrics and statistics, a structural break is an unexpected change over time in the parameters of regression models, which can lead to huge forecasting errors and unreliability of the model in general. This issue was popularised by David Hendry, who argued that lack of stability of coefficients frequently caused forecast failure, and therefore we must routinely test for structural stability. Structural stability (i.e., the time-invariance of regression coefficients) is a central issue in all applications of linear regression models.

In statistics, regression validation is the process of deciding whether the numerical results quantifying hypothesized relationships between variables, obtained from regression analysis, are acceptable as descriptions of the data. The validation process can involve analyzing the goodness of fit of the regression, analyzing whether the regression residuals are random, and checking whether the model's predictive performance deteriorates substantially when applied to data that were not used in model estimation.

In statistics, a generalized estimating equation (GEE) is used to estimate the parameters of a generalized linear model with a possible unmeasured correlation between observations from different timepoints. Although some believe that generalized estimating equations are robust in every respect even when the working-correlation matrix is chosen wrongly, they are robust only in the sense that the estimates remain consistent under a wrong choice.

In statistics, the Breusch–Godfrey test is used to assess the validity of some of the modelling assumptions inherent in applying regression-like models to observed data series. In particular, it tests for the presence of serial correlation that has not been included in a proposed model structure and which, if present, would mean that incorrect conclusions would be drawn from other tests or that sub-optimal estimates of model parameters would be obtained.

In statistics, the Goldfeld–Quandt test checks for heteroscedasticity in regression analyses. It does this by dividing a dataset into two parts or groups, and hence the test is sometimes called a two-group test. The Goldfeld–Quandt test is one of two tests proposed in a 1965 paper by Stephen Goldfeld and Richard Quandt. Both a parametric and nonparametric test are described in the paper, but the term "Goldfeld–Quandt test" is usually associated only with the former.
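
The two-group idea can be sketched as follows for a single regressor: sort the observations by that regressor, optionally drop a central block, fit separate OLS regressions to the low and high groups, and form the ratio of their residual variances. The function name, the `drop_frac` parameter, and the default of dropping 20% are assumptions made for this illustration.

```python
import numpy as np

def goldfeld_quandt(x, y, drop_frac=0.2):
    """Parametric Goldfeld-Quandt F ratio for one regressor plus a constant.

    Returns (F, df_numerator, df_denominator), where F is the residual
    variance of the high-x group divided by that of the low-x group.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    order = np.argsort(x)
    x, y = x[order], y[order]

    n = len(y)
    n_drop = int(n * drop_frac)          # central observations to omit
    n_low = (n - n_drop) // 2
    n_high = n - n_drop - n_low

    def rss_and_df(xs, ys):
        Z = np.column_stack([np.ones(len(xs)), xs])
        beta, *_ = np.linalg.lstsq(Z, ys, rcond=None)
        r = ys - Z @ beta
        return r @ r, len(ys) - Z.shape[1]

    rss_low, df_low = rss_and_df(x[:n_low], y[:n_low])
    rss_high, df_high = rss_and_df(x[n - n_high:], y[n - n_high:])
    return (rss_high / df_high) / (rss_low / df_low), df_high, df_low
```

Under homoscedastic normal errors the ratio follows an F distribution with the returned degrees of freedom, so a ratio well above 1 points to variance that increases with the sorting variable.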

A Newey–West estimator is used in statistics and econometrics to provide an estimate of the covariance matrix of the parameters of a regression-type model where the standard assumptions of regression analysis do not apply. It was devised by Whitney K. Newey and Kenneth D. West in 1987, although there are a number of later variants. The estimator is used to try to overcome autocorrelation and heteroskedasticity in the error terms in the models, often for regressions applied to time series data. The abbreviation "HAC," sometimes used for the estimator, stands for "heteroskedasticity and autocorrelation consistent." There are a number of HAC estimators, and the term "HAC estimator" does not refer uniquely to Newey–West. One version, Newey–West with the Bartlett kernel, requires the user to specify the bandwidth; the Bartlett kernel is also used in kernel density estimation.
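
A minimal sketch of the Bartlett-kernel version for a linear regression is given below. It assumes the residuals have already been computed, and the names `newey_west_cov` and `max_lag` (playing the role of the bandwidth) are hypothetical. Lag l receives weight 1 − l/(max_lag + 1).

```python
import numpy as np

def newey_west_cov(X, resid, max_lag):
    """Newey-West (Bartlett kernel) covariance of OLS coefficients.

    X: (n, k) regressor matrix, resid: (n,) OLS residuals,
    max_lag: number of autocovariance lags to include (the bandwidth).
    """
    X = np.asarray(X, dtype=float)
    resid = np.asarray(resid, dtype=float)

    xe = X * resid[:, None]              # row t is x_t * e_t
    S = xe.T @ xe                        # lag-0 term (the HC0 "meat")
    for lag in range(1, max_lag + 1):
        weight = 1.0 - lag / (max_lag + 1.0)   # Bartlett weight
        gamma = xe[lag:].T @ xe[:-lag]         # sum of x_t e_t e_{t-lag} x_{t-lag}'
        S += weight * (gamma + gamma.T)

    bread = np.linalg.inv(X.T @ X)
    return bread @ S @ bread
```

With `max_lag=0` the loop is skipped and the result coincides with the plain heteroskedasticity-consistent (HC0) estimate; larger values add downweighted autocovariance terms.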

Halbert Lynn White Jr. was the Chancellor’s Associates Distinguished Professor of Economics at the University of California, San Diego, and a Fellow of the Econometric Society and the American Academy of Arts and Sciences.

Trevor Stanley Breusch is an Australian econometrician and was until his retirement Professor of Econometrics and Deputy Director of Crawford School of Public Policy at the Australian National University. He is noted for the Breusch–Pagan test from the paper "A simple test for heteroscedasticity and random coefficient variation". Another contribution to econometrics is the serial correlation Lagrange multiplier test, often called Breusch–Godfrey test after Breusch and Leslie G. Godfrey, which can be used to identify autocorrelation in the errors of a regression model.

Anil K. Bera is an Indian-American econometrician. He is Professor of Economics at University of Illinois at Urbana–Champaign's Department of Economics. He is most noted for his work with Carlos Jarque on the Jarque–Bera test.

In econometrics, the Park test is a test for heteroscedasticity. The test is based on the method proposed by Rolla Edward Park for estimating linear regression parameters in the presence of heteroscedastic error terms.

In statistics, the Glejser test for heteroscedasticity, developed in 1969 by Herbert Glejser, regresses the residuals on the explanatory variable that is thought to be related to the heteroscedastic variance. After the test was found not to be asymptotically valid under asymmetric disturbances, similar improvements were independently suggested by Im and by Machado and Santos Silva.
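
The sketch below illustrates the original form of the procedure under the assumption that the absolute OLS residuals are regressed linearly on a single explanatory variable, with a conventional t statistic on the slope. The function name is hypothetical, and the later corrections for asymmetric disturbances are not implemented.

```python
import numpy as np

def glejser_slope_t(x, y):
    """Regress |OLS residuals| on x; return (slope, t_statistic)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    Z = np.column_stack([np.ones(n), x])

    # Main regression and its absolute residuals.
    beta, *_ = np.linalg.lstsq(Z, y, rcond=None)
    abs_resid = np.abs(y - Z @ beta)

    # Auxiliary regression of |residuals| on the same variable.
    gamma, *_ = np.linalg.lstsq(Z, abs_resid, rcond=None)
    v = abs_resid - Z @ gamma
    sigma2 = (v @ v) / (n - Z.shape[1])
    cov = sigma2 * np.linalg.inv(Z.T @ Z)

    return gamma[1], gamma[1] / np.sqrt(cov[1, 1])
```

A slope that is large relative to its standard error suggests that the spread of the errors changes with the explanatory variable.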

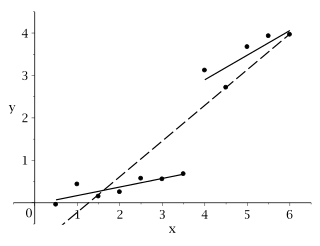

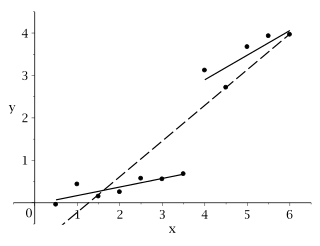

In statistics, a sequence of random variables is homoscedastic if all its random variables have the same finite variance; this is also known as homogeneity of variance. The complementary notion is called heteroscedasticity, also known as heterogeneity of variance. The spellings homoskedasticity and heteroskedasticity are also frequently used. Assuming a variable is homoscedastic when in reality it is heteroscedastic results in unbiased but inefficient point estimates and in biased estimates of standard errors, and may result in overestimating the goodness of fit as measured by the Pearson coefficient.