In linguistics, syntax is the set of rules, principles, and processes that govern the structure of sentences in a given language, usually including word order. The term syntax is also used to refer to the study of such principles and processes. The goal of many syntacticians is to discover the syntactic rules common to all languages.

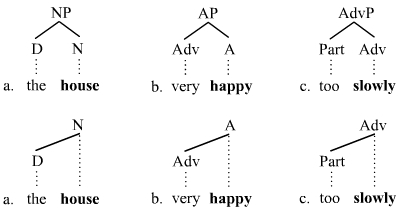

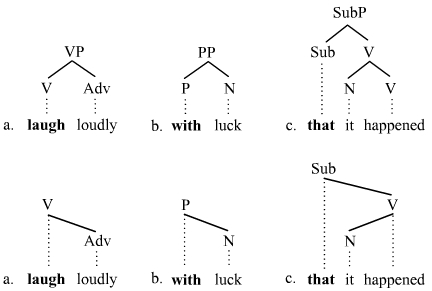

A syntactic category is a syntactic unit that theories of syntax assume. Word classes, largely corresponding to traditional parts of speech, are syntactic categories. In phrase structure grammars, the phrasal categories are also syntactic categories. Dependency grammars, however, do not acknowledge phrasal categories.

In everyday speech, a phrase is any group of words, often carrying a special idiomatic meaning; in this sense it is synonymous with expression and phraseme. In linguistic analysis, a phrase is a group of words that functions as a constituent in the syntax of a sentence, a single unit within a grammatical hierarchy. A phrase typically appears within a clause, but it is also possible for a phrase to be a clause or to contain a clause within it. Common phrase types include the noun phrase and the prepositional phrase.

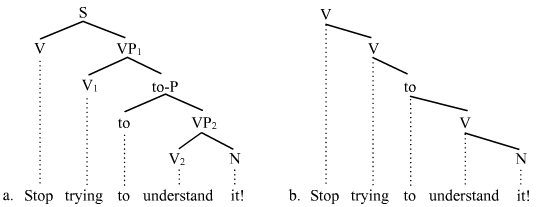

Phrase structure rules are a type of rewrite rule used to describe a given language's syntax and are closely associated with the early stages of transformational grammar, proposed by Noam Chomsky in 1957. They are used to break down a natural language sentence into its constituent parts, also known as syntactic categories, including both lexical categories and phrasal categories. A grammar that uses phrase structure rules is a type of phrase structure grammar. Phrase structure rules as they are commonly employed operate according to the constituency relation, and a grammar that employs phrase structure rules is therefore a constituency grammar; as such, it stands in contrast to dependency grammars, which are based on the dependency relation.
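The rewriting process described above can be illustrated with a minimal sketch. The rule set `RULES` and the helper names `expand` and `derive` are hypothetical and chosen only for illustration; the sketch assumes one non-recursive rule per category, which is far simpler than any real grammar.

```python
# Toy phrase structure rules (hypothetical, for illustration only).
# Each rule rewrites one category as a sequence of categories.
RULES = {
    "S": ["NP", "VP"],
    "NP": ["Det", "N"],
    "VP": ["V", "NP"],
}


def expand(symbols, rules):
    """Rewrite the leftmost symbol that has a rule; return the new sequence."""
    for i, sym in enumerate(symbols):
        if sym in rules:
            return symbols[:i] + rules[sym] + symbols[i + 1:]
    return symbols  # nothing left to rewrite


def derive(start, rules):
    """Apply rules until only categories without a rule remain.

    Assumes the rules are non-recursive, so the derivation terminates.
    """
    steps = [[start]]
    while True:
        nxt = expand(steps[-1], rules)
        if nxt == steps[-1]:
            return steps
        steps.append(nxt)


if __name__ == "__main__":
    for step in derive("S", RULES):
        print(" ".join(step))
```

Each printed line is one step of the derivation, starting from the phrasal category `S` and ending with a sequence of lexical categories that words could then fill.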

A noun phrase, or nominal (phrase), is a phrase that has a noun as its head or performs the same grammatical function as a noun. Noun phrases are very common cross-linguistically, and they may be the most frequently occurring phrase type.

A parse tree (also called a parsing tree, derivation tree, or concrete syntax tree) is an ordered, rooted tree that represents the syntactic structure of a string according to some context-free grammar. The term parse tree itself is used primarily in computational linguistics; in theoretical syntax, the term syntax tree is more common.
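As a rough illustration, an ordered, rooted tree of this kind can be represented with nested tuples. The example sentence, the category labels, and the helper names `leaves` and `bracket` are assumptions made for this sketch, not part of any standard.

```python
# A parse tree as nested tuples: (label, child, child, ...).
# Plain strings are the leaves (words). Sentence and labels are illustrative.
TREE = (
    "S",
    ("NP", ("Det", "the"), ("N", "dog")),
    ("VP", ("V", "chased"), ("NP", ("Det", "a"), ("N", "cat"))),
)


def leaves(node):
    """Return the words of the tree in left-to-right order."""
    if isinstance(node, str):
        return [node]
    words = []
    for child in node[1:]:
        words.extend(leaves(child))
    return words


def bracket(node):
    """Render the tree in labelled bracket notation."""
    if isinstance(node, str):
        return node
    inner = " ".join(bracket(child) for child in node[1:])
    return "[" + node[0] + " " + inner + "]"


if __name__ == "__main__":
    print(" ".join(leaves(TREE)))
    print(bracket(TREE))
```

Because the children of each node are ordered, reading the leaves from left to right recovers the original string.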

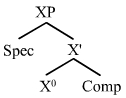

In linguistics, X-bar theory is a theory of syntactic category formation. It embodies two independent claims: one, that phrases may contain intermediate constituents projected from a head X; and two, that this system of projected constituency may be common to more than one category.

In linguistics, the minimalist program (MP) is a major line of inquiry that has been developing inside generative grammar since the early 1990s, starting with a 1993 paper by Noam Chomsky.

In linguistics, the head or nucleus of a phrase is the word that determines the syntactic category of that phrase. For example, the head of the noun phrase boiling hot water is the noun water. Analogously, the head of a compound is the stem that determines the semantic category of that compound. For example, the head of the compound noun handbag is bag, since a handbag is a bag, not a hand. The other elements of the phrase or compound modify the head, and are therefore the head's dependents. Headed phrases and compounds are called endocentric, whereas exocentric ("headless") phrases and compounds lack a clear head. Heads are crucial to establishing the direction of branching. Head-initial phrases are right-branching, head-final phrases are left-branching, and head-medial phrases combine left- and right-branching.

Dependency grammar (DG) is a class of modern grammatical theories that are all based on the dependency relation and that can be traced back primarily to the work of Lucien Tesnière. Dependency is the notion that linguistic units, e.g. words, are connected to each other by directed links. The (finite) verb is taken to be the structural center of clause structure. All other syntactic units (words) are either directly or indirectly connected to the verb by these directed links, which are called dependencies. Dependency grammar differs from phrase structure grammar in that, while it can identify phrases, it tends to overlook phrasal nodes. A dependency structure is determined by the relation between a word and its dependents. Dependency structures are flatter than phrase structures in part because they lack a finite verb phrase constituent, and they are thus well suited for the analysis of languages with free word order, such as Czech or Warlpiri.
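A dependency structure of this kind can be sketched as a list that stores, for each word, the position of its head. The example sentence and the particular analysis (for instance, treating the determiner as a dependent of the noun) are assumptions made for illustration, and the helper names are hypothetical.

```python
# One entry per word: the index of its head, or None for the root.
# The analysis below is one possible choice, used only for illustration.
WORDS = ["the", "dog", "chased", "a", "cat"]
HEADS = [1, 2, None, 4, 2]


def root(heads):
    """Index of the word that has no head (here, the finite verb)."""
    return heads.index(None)


def dependents(heads, i):
    """Indices of the words that depend directly on word i."""
    return [j for j, h in enumerate(heads) if h == i]


def depth(heads, i):
    """Number of directed links between word i and the root."""
    steps = 0
    while heads[i] is not None:
        i = heads[i]
        steps += 1
    return steps


if __name__ == "__main__":
    r = root(HEADS)
    print("root:", WORDS[r])
    print("dependents of root:", [WORDS[j] for j in dependents(HEADS, r)])
```

Every word is reachable from the verb by following links, and no phrasal nodes are stored: the structure contains exactly as many nodes as there are words.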

The term phrase structure grammar was originally introduced by Noam Chomsky as the term for grammar studied previously by Emil Post and Axel Thue. Some authors, however, reserve the term for more restricted grammars in the Chomsky hierarchy: context-sensitive grammars or context-free grammars. In a broader sense, phrase structure grammars are also known as constituency grammars. The defining trait of phrase structure grammars is thus their adherence to the constituency relation, as opposed to the dependency relation of dependency grammars.

A sentence diagram is a pictorial representation of the grammatical structure of a sentence. The term "sentence diagram" is used mainly in the teaching of written language, where sentences are diagrammed. The model shows the relations between words and the nature of sentence structure and can be used as a tool to help recognize which potential sentences are actual sentences.

In theoretical linguistics, a distinction is made between endocentric and exocentric constructions. A grammatical construction is said to be endocentric if it fulfils the same linguistic function as one of its parts, and exocentric if it does not. The distinction reaches back at least to Bloomfield's work of the 1930s. Such a distinction is possible only in phrase structure grammars, since in dependency grammars all constructions are necessarily endocentric.

Lucien Tesnière was a prominent and influential French linguist. He was born in Mont-Saint-Aignan on May 13, 1893. As a maître de conférences in Strasbourg (1924), and later professor in Montpellier (1937), he published many papers and books on Slavic languages. However, his importance in the history of linguistics is based mainly on his development of an approach to the syntax of natural languages that would become known as dependency grammar. He presented his theory in his book Éléments de syntaxe structurale, published posthumously in 1959. In the book he proposes a sophisticated formalization of syntactic structures, supported by many examples from a diversity of languages. Tesnière died in Montpellier on December 6, 1954.

In linguistics, antisymmetry is a theory of syntactic linearization presented in Richard S. Kayne's 1994 monograph The Antisymmetry of Syntax. The crux of this theory is that hierarchical structure in natural language maps universally onto a particular surface linearization, namely specifier-head-complement branching order. The theory derives a version of X-bar theory. Kayne hypothesizes that all phrases whose surface order is not specifier-head-complement have undergone syntactic movements that disrupt this underlying order. Subsequently, there have also been attempts at deriving specifier-complement-head as the basic word order.

Merge is one of the basic operations in the Minimalist Program, a leading approach to generative syntax: it combines two syntactic objects to form a new syntactic unit. Merge also has the property of recursion in that it may apply to its own output: the objects combined by Merge are either lexical items or sets that were themselves formed by Merge. This recursive property of Merge has been claimed to be a fundamental characteristic that distinguishes language from other cognitive faculties. As Noam Chomsky (1999) puts it, Merge is "an indispensable operation of a recursive system ... which takes two syntactic objects A and B and forms the new object G={A,B}" (p. 2).
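The set-forming character of Merge and its ability to apply to its own output can be sketched as follows. This is a simplified illustration, not a formalization from the literature: lexical items are modelled as plain strings, labels and features are ignored, and the function names are hypothetical.

```python
def merge(a, b):
    """Form the unordered set {a, b} from two syntactic objects.

    Lexical items are modelled as strings; the output is a frozenset so
    that it can itself be merged again (a simplifying assumption).
    """
    return frozenset({a, b})


def depth(obj):
    """Count how many nested applications of merge built this object."""
    if isinstance(obj, str):
        return 0
    return 1 + max(depth(member) for member in obj)


if __name__ == "__main__":
    np = merge("the", "apple")   # {the, apple}
    vp = merge("eat", np)        # {eat, {the, apple}}
    print(depth(np), depth(vp))
```

Since the result is a set, `merge(a, b)` and `merge(b, a)` are the same object; linear order is not represented at this level.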

Syntactic movement is the means by which some theories of syntax address discontinuities. Movement was first postulated by structuralist linguists who expressed it in terms of discontinuous constituents or displacement. Some constituents appear to have been displaced from the position in which they receive important features of interpretation. The concept of movement is controversial and is associated with so-called transformational or derivational theories of syntax. Representational theories, in contrast, reject the notion of movement and often instead address discontinuities in terms of feature passing or persistent structural identities.

Scrambling is a syntactic phenomenon wherein sentences can be formulated using a variety of different word orders without any change in meaning. Scrambling often results in a discontinuity since the scrambled expression can end up at a distance from its head. Scrambling does not occur in English, but it is frequent in languages with freer word order, such as German, Russian, Persian and Turkic languages. The term was coined by Haj Ross in his 1967 dissertation and is widely used in present work, particularly within the generative tradition.

In syntax, shifting occurs when two or more constituents appearing on the same side of their common head exchange positions, yielding a non-canonical order. The most widely acknowledged type of shifting is heavy NP shift, but shifting involving a heavy NP is just one manifestation of the shifting mechanism. Shifting occurs in most if not all European languages, and it may in fact be possible in all natural languages, including sign languages. Shifting is not inversion, and inversion is not shifting, but the two mechanisms are similar insofar as they are both present in languages like English that have relatively strict word order. The theoretical analysis of shifting varies in part depending on the theory of sentence structure that one adopts. If one assumes relatively flat structures, shifting does not result in a discontinuity. Shifting is often motivated by the relative weight of the constituents involved. The weight of a constituent is determined by a number of factors, e.g. number of words, contrastive focus, and semantic content.

In linguistics, a discontinuity occurs when a given word or phrase is separated from another word or phrase that it modifies in such a manner that a direct connection cannot be established between the two without incurring crossing lines in the tree structure. The terminology that is employed to denote discontinuities varies depending on the theory of syntax at hand. The terms discontinuous constituent, displacement, long distance dependency, unbounded dependency, and projectivity violation are largely synonymous with the term discontinuity. There are various types of discontinuities, the most prominent and widely studied of these being topicalization, wh-fronting, scrambling, and extraposition.
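The "crossing lines" criterion can be made concrete with a small sketch over a dependency analysis stored as head indices. The example sentences, their analyses, and the function name `crossing_arcs` are assumptions for illustration. The check only looks for pairs of crossing links; a full projectivity test would also need to treat the root specially.

```python
def crossing_arcs(heads):
    """Return pairs of dependency links that cross each other.

    heads[i] is the index of the head of word i, or None for the root.
    Each link is stored as (left position, right position).
    """
    arcs = [(min(dep, head), max(dep, head))
            for dep, head in enumerate(heads) if head is not None]
    crossings = []
    for i, (a, b) in enumerate(arcs):
        for c, d in arcs[i + 1:]:
            # Two links cross when exactly one end of one lies inside the other.
            if a < c < b < d or c < a < d < b:
                crossings.append(((a, b), (c, d)))
    return crossings


# Canonical order: "a hearing on the issue is scheduled today"
CANONICAL = [1, 5, 1, 4, 2, None, 5, 6]

# Extraposed order: "a hearing is scheduled on the issue today"
# ("on the issue" still depends on "hearing"; analysis is illustrative)
EXTRAPOSED = [1, 2, None, 2, 1, 6, 4, 3]

if __name__ == "__main__":
    print(crossing_arcs(CANONICAL))
    print(crossing_arcs(EXTRAPOSED))
```

In the extraposed order, the link from "hearing" to "on" crosses the link from "scheduled" to "today", which is the kind of configuration the term discontinuity describes; the canonical order yields no crossing links under this analysis.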