Euler's formula, named after Leonhard Euler, is a mathematical formula in complex analysis that establishes the fundamental relationship between the trigonometric functions and the complex exponential function. Euler's formula states that, for any real number $x$, one has

$$e^{ix} = \cos x + i \sin x,$$

where $e$ is the base of the natural logarithm and $i$ is the imaginary unit.
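
The identity $e^{ix} = \cos x + i \sin x$ can be checked numerically. The following is a minimal sketch using Python's standard `cmath` and `math` modules; the helper name `euler_sides` is an illustrative assumption, not part of any library.

```python
import cmath
import math

def euler_sides(x):
    """Return (e^{ix}, cos x + i sin x) for a real number x."""
    lhs = cmath.exp(1j * x)                   # complex exponential
    rhs = complex(math.cos(x), math.sin(x))   # trigonometric form
    return lhs, rhs

for x in (0.0, 1.0, math.pi / 3, math.pi):
    lhs, rhs = euler_sides(x)
    print(f"x = {x:.4f}: |lhs - rhs| = {abs(lhs - rhs):.2e}")
```

At $x = \pi$ both sides are numerically close to $-1$, which is Euler's identity $e^{i\pi} + 1 = 0$.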

In mathematics, the Laplace transform, named after its discoverer Pierre-Simon Laplace, is an integral transform that converts a function of a real variable (usually $t$, in the time domain) to a function of a complex variable $s$ (in the complex frequency domain).
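
As a numerical illustration, the sketch below approximates the one-sided Laplace integral $F(s) = \int_0^\infty f(t)\,e^{-st}\,dt$ (the standard definition, not stated in the passage above) by truncating the upper limit and applying Simpson's rule. The helper names `simpson` and `laplace_numeric` and the cutoff `t_max` are illustrative assumptions, and the approach is only reasonable when the integrand decays quickly.

```python
import cmath

def simpson(f, a, b, n=20000):
    """Composite Simpson's rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def laplace_numeric(f, s, t_max=40.0):
    """Approximate F(s) by integrating f(t) e^{-st} over [0, t_max] (truncation is an assumption)."""
    return simpson(lambda t: f(t) * cmath.exp(-s * t), 0.0, t_max)

# f(t) = e^{-2t} has the known transform 1 / (s + 2) for Re(s) > -2.
s = 1 + 3j
approx = laplace_numeric(lambda t: cmath.exp(-2 * t), s)
exact = 1 / (s + 2)
print(abs(approx - exact))
```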

In mathematics, the mean value theorem states, roughly, that for a given planar arc between two endpoints, there is at least one point at which the tangent to the arc is parallel to the secant through its endpoints. It is one of the most important results in real analysis. This theorem is used to prove statements about a function on an interval starting from local hypotheses about derivatives at points of the interval.
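
For a differentiable function $f$ on $[a, b]$, the theorem guarantees a point $c$ with $f'(c) = \frac{f(b) - f(a)}{b - a}$. The sketch below locates such a point numerically; it assumes that $f'(x)$ minus the secant slope changes sign between the endpoints so that bisection applies, and that $f$ can be evaluated slightly outside $[a, b]$ for the finite-difference derivative. The name `mvt_point` is hypothetical.

```python
def mvt_point(f, a, b, tol=1e-10, h=1e-6):
    """Find c in [a, b] where f'(c) equals the secant slope, by bisection."""
    slope = (f(b) - f(a)) / (b - a)

    def g(x):
        # Central-difference derivative minus the secant slope.
        return (f(x + h) - f(x - h)) / (2 * h) - slope

    lo, hi = a, b
    if g(lo) * g(hi) > 0:
        raise ValueError("no sign change at the endpoints; bisection does not apply")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if g(lo) * g(mid) <= 0:
            hi = mid
        else:
            lo = mid
    return (lo + hi) / 2

# For f(x) = x^3 on [0, 2] the secant slope is 4, so 3c^2 = 4 and c = 2 / sqrt(3).
c = mvt_point(lambda x: x ** 3, 0.0, 2.0)
print(c)
```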

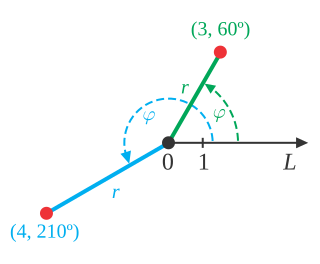

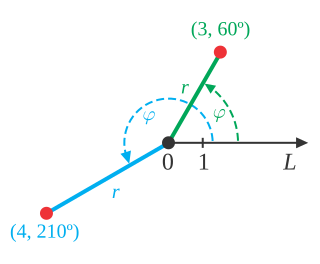

In mathematics, the polar coordinate system is a two-dimensional coordinate system in which each point on a plane is determined by a distance from a reference point and an angle from a reference direction. The reference point is called the pole, and the ray from the pole in the reference direction is the polar axis. The distance from the pole is called the radial coordinate, radial distance or simply radius, and the angle is called the angular coordinate, polar angle, or azimuth. Angles in polar notation are generally expressed in either degrees or radians.
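
The conversion between polar and Cartesian coordinates can be sketched as follows, assuming angles in radians and the polar axis aligned with the positive $x$-axis. The function names are illustrative.

```python
import math

def polar_to_cartesian(r, theta):
    """Convert (radius, angle in radians) to (x, y)."""
    return r * math.cos(theta), r * math.sin(theta)

def cartesian_to_polar(x, y):
    """Convert (x, y) to (radius, angle in radians), with the angle in (-pi, pi]."""
    return math.hypot(x, y), math.atan2(y, x)

x, y = polar_to_cartesian(2.0, math.radians(60))
r, theta = cartesian_to_polar(x, y)
print(x, y, r, math.degrees(theta))
```

`math.atan2` is used rather than `math.atan(y / x)` so that the quadrant is resolved correctly and `x = 0` does not cause a division by zero.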

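In mathematics and physics, Laplace's equation is a second-order partial differential equation named after Pierre-Simon Laplace, who first studied its properties. This is often written as

$$\nabla^2 f = 0 \quad \text{or} \quad \Delta f = 0,$$

where $\Delta = \nabla \cdot \nabla = \nabla^2$ is the Laplace operator and $f$ is a twice-differentiable real-valued function.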

In mathematical analysis, the Dirac delta function, also known as the unit impulse, is a generalized function on the real numbers, whose value is zero everywhere except at zero, and whose integral over the entire real line is equal to one. Since there is no function having this property, modeling the delta "function" rigorously involves the use of limits or, as is common in mathematics, measure theory and the theory of distributions.

A Fourier series is an expansion of a periodic function into a sum of trigonometric functions. The Fourier series is an example of a trigonometric series, but not all trigonometric series are Fourier series. By expressing a function as a sum of sines and cosines, many problems involving the function become easier to analyze because trigonometric functions are well understood. For example, Fourier series were first used by Joseph Fourier to find solutions to the heat equation. This application is possible because the derivatives of trigonometric functions fall into simple patterns. Fourier series cannot be used to approximate arbitrary functions, because most functions have infinitely many terms in their Fourier series, and the series do not always converge. Well-behaved functions, for example smooth functions, have Fourier series that converge to the original function. The coefficients of the Fourier series are determined by integrals of the function multiplied by trigonometric functions, described in Common forms of the Fourier series below.
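
As a concrete case, the $2\pi$-periodic square wave $\operatorname{sgn}(\sin x)$ has the well-known Fourier series $\frac{4}{\pi} \sum_{k=0}^{\infty} \frac{\sin((2k+1)x)}{2k+1}$. The sketch below evaluates partial sums of that series; the function name is an illustrative assumption.

```python
import math

def square_wave_partial_sum(x, n_terms):
    """Sum of the first n_terms odd harmonics of the square wave sgn(sin x)."""
    return (4 / math.pi) * sum(
        math.sin((2 * k + 1) * x) / (2 * k + 1) for k in range(n_terms)
    )

# At x = pi/2 the square wave equals 1; the partial sums approach that value.
for n in (1, 10, 100):
    print(n, square_wave_partial_sum(math.pi / 2, n))
```

Convergence is slow here because the square wave is discontinuous; smoother functions have coefficients that decay faster.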

In calculus, and more generally in mathematical analysis, integration by parts or partial integration is a process that finds the integral of a product of functions in terms of the integral of the product of their derivative and antiderivative. It is frequently used to transform the antiderivative of a product of functions into an antiderivative for which a solution can be more easily found. The rule can be thought of as an integral version of the product rule of differentiation; it is indeed derived using the product rule.
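
In its usual form the rule reads $\int_a^b u\,v'\,dx = \big[u\,v\big]_a^b - \int_a^b u'\,v\,dx$. The sketch below checks this numerically for $u(x) = x$ and $v(x) = e^x$ on $[0, 1]$, where both sides equal 1. The `simpson` helper is an illustrative quadrature routine, not a library function.

```python
import math

def simpson(f, a, b, n=1000):
    """Composite Simpson's rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def u(x): return x             # u(x) = x
def du(x): return 1.0          # u'(x) = 1
def v(x): return math.exp(x)   # v(x) = e^x
def dv(x): return math.exp(x)  # v'(x) = e^x

a, b = 0.0, 1.0
lhs = simpson(lambda x: u(x) * dv(x), a, b)
rhs = u(b) * v(b) - u(a) * v(a) - simpson(lambda x: du(x) * v(x), a, b)
print(lhs, rhs)
```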

In vector calculus, the Jacobian matrix of a vector-valued function of several variables is the matrix of all its first-order partial derivatives. When this matrix is square, that is, when the number of input variables equals the number of components of the output, its determinant is referred to as the Jacobian determinant. Both the matrix and the determinant are often referred to simply as the Jacobian in the literature.
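
A minimal sketch of a numerical Jacobian, using central finite differences on the polar-to-Cartesian map $(r, \theta) \mapsto (r\cos\theta, r\sin\theta)$, whose Jacobian determinant is known to be $r$. The helper names and the step size `h` are illustrative assumptions.

```python
import math

def jacobian(f, point, h=1e-6):
    """Approximate the Jacobian of f at point; entry [i][j] is d f_i / d x_j."""
    n = len(point)
    m = len(f(point))
    J = [[0.0] * n for _ in range(m)]
    for j in range(n):
        fwd, bwd = list(point), list(point)
        fwd[j] += h
        bwd[j] -= h
        f_fwd, f_bwd = f(fwd), f(bwd)
        for i in range(m):
            J[i][j] = (f_fwd[i] - f_bwd[i]) / (2 * h)
    return J

def det2(J):
    """Determinant of a 2x2 matrix given as nested lists."""
    return J[0][0] * J[1][1] - J[0][1] * J[1][0]

def polar_map(p):
    r, theta = p
    return [r * math.cos(theta), r * math.sin(theta)]

J = jacobian(polar_map, [2.0, 0.7])
print(J, det2(J))  # the determinant should be close to r = 2
```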

In mathematics, a Green's function is the impulse response of an inhomogeneous linear differential operator defined on a domain with specified initial conditions or boundary conditions.

In mathematics, the Mellin transform is an integral transform that may be regarded as the multiplicative version of the two-sided Laplace transform. This integral transform is closely connected to the theory of Dirichlet series, and is often used in number theory, mathematical statistics, and the theory of asymptotic expansions; it is closely related to the Laplace transform and the Fourier transform, and the theory of the gamma function and allied special functions.
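
The connection to the gamma function can be seen numerically: with the standard definition $\{\mathcal{M}f\}(s) = \int_0^\infty x^{s-1} f(x)\,dx$ (not stated in the passage above), the Mellin transform of $e^{-x}$ is $\Gamma(s)$. The sketch below truncates the integral at a finite `x_max` and restricts itself to integer $s \ge 1$ so that the integrand is smooth at zero; both choices, and the helper names, are assumptions made for illustration.

```python
import math

def simpson(f, a, b, n=20000):
    """Composite Simpson's rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def mellin_numeric(f, s, x_max=60.0):
    """Approximate the Mellin integral of f at s, truncated at x_max (assumption)."""
    return simpson(lambda x: x ** (s - 1) * f(x), 0.0, x_max)

for s in (2, 3, 4):
    approx = mellin_numeric(lambda x: math.exp(-x), s)
    print(s, approx, math.gamma(s))
```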

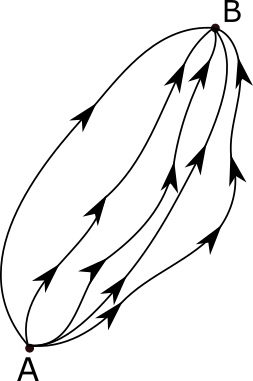

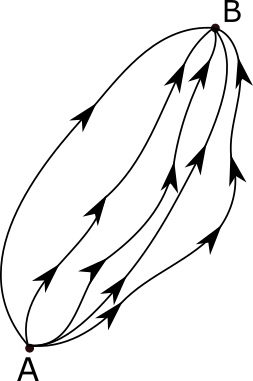

The path integral formulation is a description in quantum mechanics that generalizes the stationary action principle of classical mechanics. It replaces the classical notion of a single, unique classical trajectory for a system with a sum, or functional integral, over an infinity of quantum-mechanically possible trajectories to compute a quantum amplitude.

In probability theory, a distribution is said to be stable if a linear combination of two independent random variables with this distribution has the same distribution, up to location and scale parameters. A random variable is said to be stable if its distribution is stable. The stable distribution family is also sometimes referred to as the Lévy alpha-stable distribution, after Paul Lévy, the first mathematician to have studied it.

In mathematics, a change of variables is a basic technique used to simplify problems in which the original variables are replaced with functions of other variables. The intent is that when expressed in new variables, the problem may become simpler, or equivalent to a better understood problem.

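In calculus, the Leibniz integral rule for differentiation under the integral sign states that for an integral of the form

$$\int_{a(x)}^{b(x)} f(x, t)\,dt,$$

where $-\infty < a(x), b(x) < \infty$ and the integrand depends on $x$, the derivative of this integral is expressible as

$$\frac{d}{dx}\left(\int_{a(x)}^{b(x)} f(x, t)\,dt\right) = f\big(x, b(x)\big)\,\frac{d}{dx}b(x) - f\big(x, a(x)\big)\,\frac{d}{dx}a(x) + \int_{a(x)}^{b(x)} \frac{\partial}{\partial x} f(x, t)\,dt.$$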
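
The rule, in its general form $\frac{d}{dx}\int_{a(x)}^{b(x)} f(x,t)\,dt = f(x,b(x))\,b'(x) - f(x,a(x))\,a'(x) + \int_{a(x)}^{b(x)} \partial_x f(x,t)\,dt$, can be checked numerically. The sketch below uses the illustrative choice $f(x,t) = e^{-xt}$, $a(x) = x$, $b(x) = x^2$ and compares a finite-difference derivative of the integral with the right-hand side of the rule. All names are assumptions made for the example.

```python
import math

def simpson(f, a, b, n=1000):
    """Composite Simpson's rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def f(x, t): return math.exp(-x * t)
def df_dx(x, t): return -t * math.exp(-x * t)   # partial derivative of f in x
def a(x): return x
def b(x): return x * x

def F(x):
    """The parameter-dependent integral itself."""
    return simpson(lambda t: f(x, t), a(x), b(x))

def leibniz_rhs(x):
    """Right-hand side of the Leibniz rule, with a'(x) = 1 and b'(x) = 2x."""
    boundary = f(x, b(x)) * (2 * x) - f(x, a(x)) * 1.0
    interior = simpson(lambda t: df_dx(x, t), a(x), b(x))
    return boundary + interior

x0, h = 1.5, 1e-5
lhs = (F(x0 + h) - F(x0 - h)) / (2 * h)   # central-difference derivative of F
rhs = leibniz_rhs(x0)
print(lhs, rhs)
```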

In mathematics (specifically multivariable calculus), a multiple integral is a definite integral of a function of several real variables, for instance, $f(x, y)$ or $f(x, y, z)$. In physical applications, the domain of integration may be any surface $S$, any volume $V$, and so on, and the variables of integration may represent time, position, and other quantities.

Common integrals in quantum field theory are all variations and generalizations of Gaussian integrals to the complex plane and to multiple dimensions. Other integrals can be approximated by versions of the Gaussian integral. Fourier integrals are also considered.
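
One of the basic one-dimensional results is $\int_{-\infty}^{\infty} e^{-\frac{1}{2} a x^2 + J x}\,dx = \sqrt{\frac{2\pi}{a}}\; e^{J^2 / (2a)}$ for $a > 0$. The sketch below checks it numerically with real $a$ and $J$ on a truncated interval; the helper names, the cutoff `x_max`, and the sample values are illustrative assumptions.

```python
import math

def simpson(f, a, b, n=20000):
    """Composite Simpson's rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def gaussian_with_source(a, J, x_max=20.0):
    """Numerically integrate exp(-a x^2 / 2 + J x) over [-x_max, x_max]."""
    return simpson(lambda x: math.exp(-0.5 * a * x * x + J * x), -x_max, x_max)

def gaussian_closed_form(a, J):
    """Closed form sqrt(2 pi / a) * exp(J^2 / (2 a)), valid for a > 0."""
    return math.sqrt(2 * math.pi / a) * math.exp(J * J / (2 * a))

a_val, J_val = 2.0, 0.5
print(gaussian_with_source(a_val, J_val), gaussian_closed_form(a_val, J_val))
```

Setting `a = 2` and `J = 0` recovers the plain Gaussian integral $\int e^{-x^2}\,dx = \sqrt{\pi}$.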

In integral calculus, the tangent half-angle substitution is a change of variables used for evaluating integrals, which converts a rational function of trigonometric functions of $x$ into an ordinary rational function of $t$ by setting $t = \tan\tfrac{x}{2}$. This is the one-dimensional stereographic projection of the unit circle parametrized by angle measure onto the real line. The general transformation formula is:

$$\int f(\sin x, \cos x)\,dx = \int f\!\left(\frac{2t}{1+t^2}, \frac{1-t^2}{1+t^2}\right) \frac{2\,dt}{1+t^2}.$$
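
With $t = \tan\tfrac{x}{2}$ one has $\sin x = \frac{2t}{1+t^2}$, $\cos x = \frac{1-t^2}{1+t^2}$ and $dx = \frac{2\,dt}{1+t^2}$. As a worked check, the integrand $\frac{1}{1 + \sin x + \cos x}$ on $[0, \pi/2]$ becomes $\frac{1}{1+t}$ on $[0, 1]$, so the integral equals $\ln 2$. The sketch below confirms that both sides agree numerically; the helper names are illustrative.

```python
import math

def simpson(f, a, b, n=1000):
    """Composite Simpson's rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def f(s, c):
    """A rational function of sin x (s) and cos x (c)."""
    return 1.0 / (1.0 + s + c)

# Left side: integrate in x over [0, pi/2].
in_x = simpson(lambda x: f(math.sin(x), math.cos(x)), 0.0, math.pi / 2)

# Right side: integrate in t = tan(x/2) over [tan(0), tan(pi/4)] = [0, 1].
def substituted(t):
    d = 1.0 + t * t
    return f(2 * t / d, (1 - t * t) / d) * 2.0 / d

in_t = simpson(substituted, 0.0, 1.0)
print(in_x, in_t, math.log(2))
```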

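In calculus, the integral of the secant function can be evaluated using a variety of methods and there are multiple ways of expressing the antiderivative, all of which can be shown to be equivalent via trigonometric identities:

$$\int \sec\theta\,d\theta = \begin{cases} \dfrac{1}{2} \ln \dfrac{1 + \sin\theta}{1 - \sin\theta} + C \\[1ex] \ln\left|\sec\theta + \tan\theta\right| + C \\[1ex] \ln\left|\tan\!\left(\dfrac{\theta}{2} + \dfrac{\pi}{4}\right)\right| + C \end{cases}$$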
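
Three commonly quoted forms of the antiderivative are $\tfrac{1}{2}\ln\frac{1+\sin\theta}{1-\sin\theta}$, $\ln|\sec\theta + \tan\theta|$ and $\ln|\tan(\tfrac{\theta}{2} + \tfrac{\pi}{4})|$. The sketch below evaluates all three for $\theta$ in $(-\pi/2, \pi/2)$ and checks by finite differences that the derivative is $\sec\theta$. The function name and sample point are illustrative assumptions.

```python
import math

def sec_antiderivative_forms(theta):
    """Return three equivalent antiderivatives of sec(theta), for |theta| < pi/2."""
    s = math.sin(theta)
    form_a = 0.5 * math.log((1 + s) / (1 - s))
    form_b = math.log(abs(1 / math.cos(theta) + math.tan(theta)))
    form_c = math.log(abs(math.tan(theta / 2 + math.pi / 4)))
    return form_a, form_b, form_c

theta0, h = 0.6, 1e-6
forms = sec_antiderivative_forms(theta0)

# Central-difference derivative of the second form, compared with sec(theta0).
derivative = (
    sec_antiderivative_forms(theta0 + h)[1] - sec_antiderivative_forms(theta0 - h)[1]
) / (2 * h)
print(forms, derivative, 1 / math.cos(theta0))
```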

In mathematics, calculus on Euclidean space is a generalization of calculus of functions in one or several variables to calculus of functions on Euclidean space as well as a finite-dimensional real vector space. This calculus is also known as advanced calculus, especially in the United States. It is similar to multivariable calculus but is somewhat more sophisticated in that it uses linear algebra more extensively and covers some concepts from differential geometry such as differential forms and Stokes' formula in terms of differential forms. This extensive use of linear algebra also allows a natural generalization of multivariable calculus to calculus on Banach spaces or topological vector spaces.