Measurement is the quantification of attributes of an object or event, which can be used to compare with other objects or events. In other words, measurement is a process of determining how large or small a physical quantity is as compared to a basic reference quantity of the same kind. The scope and application of measurement are dependent on the context and discipline. In natural sciences and engineering, measurements do not apply to nominal properties of objects or events, which is consistent with the guidelines of the International vocabulary of metrology published by the International Bureau of Weights and Measures. However, in other fields such as statistics as well as the social and behavioural sciences, measurements can have multiple levels, which would include nominal, ordinal, interval and ratio scales.

Statistics is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments.

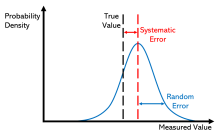

Accuracy and precision are two measures of observational error. Accuracy is how close a given set of measurements is to the true value, while precision is how close the measurements are to each other.
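The distinction can be illustrated with a small sketch. It assumes that accuracy is summarised by the bias (mean reading minus a known reference value) and precision by the sample standard deviation. These are common choices, not the only ones, and the readings and the function name `accuracy_and_precision` are hypothetical.

```python
from statistics import mean, stdev

def accuracy_and_precision(readings, true_value):
    """Return (bias, spread) for repeated readings of a known reference."""
    bias = mean(readings) - true_value   # accuracy: closeness to the true value
    spread = stdev(readings)             # precision: closeness of readings to each other
    return bias, spread

# Hypothetical readings of a 100.0 g reference mass on two balances.
balance_a = [100.9, 101.0, 101.1, 101.0]   # tightly grouped but offset from 100.0
balance_b = [98.0, 102.5, 99.0, 100.5]     # centred on 100.0 but scattered

bias_a, spread_a = accuracy_and_precision(balance_a, 100.0)
bias_b, spread_b = accuracy_and_precision(balance_b, 100.0)

print(f"A: bias={bias_a:+.3f}, spread={spread_a:.3f}")
print(f"B: bias={bias_b:+.3f}, spread={spread_b:.3f}")
```

In this made-up data, balance A is precise but not accurate, while balance B is accurate on average but not precise.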

Uncertainty, or incertitude, refers to epistemic situations involving imperfect or unknown information. It applies to predictions of future events, to physical measurements that have already been made, or to the unknown. Uncertainty arises in partially observable or stochastic environments, as well as due to ignorance, indolence, or both. It arises in any number of fields, including insurance, philosophy, physics, statistics, economics, finance, medicine, psychology, sociology, engineering, metrology, meteorology, ecology and information science.

A vernier scale, named after Pierre Vernier, is a visual aid for taking an accurate measurement reading between two graduation markings on a linear scale. It works by mechanical interpolation, which increases resolution and reduces measurement uncertainty by relying on vernier acuity to reduce human estimation error. It may be found on many types of instrument measuring linear or angular quantities, but in particular on a vernier caliper, which measures internal and external diameters.

Significant figures, also referred to as significant digits or sig figs, are specific digits within a number written in positional notation that carry both reliability and necessity in conveying a particular quantity. When presenting the outcome of a measurement, if the number of digits exceeds what the measurement instrument can resolve, only the digits within the instrument's resolution are dependable and therefore considered significant.
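As a rough illustration, a value can be rounded to a chosen number of significant figures by locating its leading digit. The helper `round_to_sig_figs` below is a hypothetical name, and the sketch ignores display issues such as trailing zeros, which a float cannot represent.

```python
from math import floor, log10

def round_to_sig_figs(value, sig_figs):
    """Round value to the given number of significant figures."""
    if value == 0:
        return 0.0
    # Position of the leading digit: 12.3 -> 1, 0.0012 -> -3.
    exponent = floor(log10(abs(value)))
    # Keep sig_figs digits counted from the leading digit.
    return round(value, sig_figs - 1 - exponent)

print(round_to_sig_figs(12.3456, 3))     # 12.3
print(round_to_sig_figs(0.0012345, 2))   # 0.0012
print(round_to_sig_figs(98765.0, 2))     # 99000.0
```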

A variable is considered dependent if it depends on an independent variable. Dependent variables are studied under the supposition or demand that they depend, by some law or rule, on the values of other variables. Independent variables, in turn, are not seen as depending on any other variable in the scope of the experiment in question. In this sense, some common independent variables are time, space, density, mass, fluid flow rate, and previous values of some observed value of interest to predict future values.

In statistics and optimization, errors and residuals are two closely related and easily confused measures of the deviation of an observed value of an element of a statistical sample from its "true value". The error of an observation is the deviation of the observed value from the true value of a quantity of interest. The residual is the difference between the observed value and the estimated value of the quantity of interest. The distinction is most important in regression analysis, where the concepts are sometimes called the regression errors and regression residuals and where they lead to the concept of studentized residuals. In econometrics, "errors" are also called disturbances.
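A small numerical sketch may help separate the two ideas. It assumes the simplest setting, where the quantity of interest is a population mean that happens to be known (rarely the case in practice) and the estimate is the sample mean. All numbers are invented for illustration.

```python
from statistics import mean

true_mean = 170.0                        # assumed known "true value" (hypothetical)
sample = [168.0, 171.5, 173.0, 169.5]    # hypothetical observations

sample_mean = mean(sample)               # the estimated value

# Errors: deviations from the (usually unobservable) true value.
errors = [x - true_mean for x in sample]
# Residuals: deviations from the estimate computed from the same sample.
residuals = [x - sample_mean for x in sample]

print("errors:   ", errors, "sum =", sum(errors))
print("residuals:", residuals, "sum =", sum(residuals))
```

Residuals about the sample mean sum to zero by construction, whereas the errors need not, which is one reason the two should not be treated as interchangeable.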

In analytical chemistry, a calibration curve, also known as a standard curve, is a general method for determining the concentration of a substance in an unknown sample by comparing the unknown to a set of standard samples of known concentration. A calibration curve is one approach to the problem of instrument calibration; other standard approaches may mix the standard into the unknown, giving an internal standard. The calibration curve is a plot of how the instrumental response, the so-called analytical signal, changes with the concentration of the analyte.
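The idea can be sketched in code under a simplifying assumption: the instrumental response is linear in concentration, so an ordinary least-squares line through the standards can be inverted to estimate the unknown. Real calibration curves may be nonlinear. The standards, signals, and the helper `fit_line` are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept

# Hypothetical standards: known concentrations (mg/L) and measured signals.
concentrations = [0.0, 1.0, 2.0, 4.0]
signals = [0.02, 0.22, 0.42, 0.82]

slope, intercept = fit_line(concentrations, signals)

# Invert the fitted line to estimate the concentration of an unknown sample.
unknown_signal = 0.52
unknown_concentration = (unknown_signal - intercept) / slope
print(f"estimated concentration: {unknown_concentration:.2f} mg/L")
```

The estimate is only meaningful inside the range spanned by the standards; extrapolating beyond the highest or lowest standard is generally avoided.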

In statistics, imputation is the process of replacing missing data with substituted values. When substituting for a data point, it is known as "unit imputation"; when substituting for a component of a data point, it is known as "item imputation". There are three main problems that missing data causes: missing data can introduce a substantial amount of bias, make the handling and analysis of the data more arduous, and create reductions in efficiency. Because missing data can create problems for analyzing data, imputation is seen as a way to avoid pitfalls involved with listwise deletion of cases that have missing values. That is to say, when one or more values are missing for a case, most statistical packages default to discarding any case that has a missing value, which may introduce bias or affect the representativeness of the results. Imputation preserves all cases by replacing missing data with an estimated value based on other available information. Once all missing values have been imputed, the data set can then be analysed using standard techniques for complete data. There have been many theories embraced by scientists to account for missing data but the majority of them introduce bias. A few of the well known attempts to deal with missing data include: hot deck and cold deck imputation; listwise and pairwise deletion; mean imputation; non-negative matrix factorization; regression imputation; last observation carried forward; stochastic imputation; and multiple imputation.
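Of the techniques listed above, mean imputation is the simplest to sketch. The example below assumes missing values are marked with `None`. The data and the function name `mean_impute` are hypothetical, and listwise deletion is shown alongside for comparison.

```python
from statistics import mean

def mean_impute(values):
    """Replace each missing value (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]

# Hypothetical survey item with two missing responses.
ages = [34.0, None, 29.0, 41.0, None, 36.0]

imputed = mean_impute(ages)                          # keeps all six cases
listwise = [v for v in ages if v is not None]        # drops the incomplete cases

print(imputed)
print(len(imputed), "cases kept vs", len(listwise), "after listwise deletion")
```

Mean imputation preserves the number of cases and the sample mean, but it tends to shrink the variance of the imputed variable, which is one of the ways such methods can introduce bias.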

Regression dilution, also known as regression attenuation, is the biasing of the linear regression slope towards zero, caused by errors in the independent variable.
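A short simulation can make the effect visible. It assumes the classical setting: a simple linear model, with independent, zero-mean Gaussian noise added to the independent variable. The sample size, noise levels, and seed are arbitrary choices for illustration.

```python
import random

def ols_slope(xs, ys):
    """Slope of the ordinary least-squares line of ys on xs."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx

rng = random.Random(0)          # fixed seed so the run is repeatable
true_slope = 2.0
n = 20000

x_true = [rng.gauss(0.0, 1.0) for _ in range(n)]
y = [true_slope * x + rng.gauss(0.0, 0.5) for x in x_true]
# Observed x contains measurement error with the same variance as x itself.
x_noisy = [x + rng.gauss(0.0, 1.0) for x in x_true]

slope_clean = ols_slope(x_true, y)
slope_noisy = ols_slope(x_noisy, y)
print(f"slope using error-free x: {slope_clean:.3f}")
print(f"slope using noisy x:      {slope_noisy:.3f}")
```

With equal variances for the true variable and its measurement error, the fitted slope comes out at roughly half the true slope, that is, it is pulled towards zero.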

Instrument error refers to the error of a measuring instrument, or the difference between the actual value and the value indicated by the instrument. There can be errors of various types, and the overall error is the sum of the individual errors.

In metrology, measurement uncertainty is the expression of the statistical dispersion of the values attributed to a measured quantity. All measurements are subject to uncertainty and a measurement result is complete only when it is accompanied by a statement of the associated uncertainty, such as the standard deviation. By international agreement, this uncertainty has a probabilistic basis and reflects incomplete knowledge of the quantity value. It is a non-negative parameter.
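As a minimal sketch, one common way to attach an uncertainty to a result is to take repeated readings and report their mean together with the standard deviation of the mean. This assumes independent readings and considers only the scatter of the readings, not other contributions such as calibration. The readings are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical repeated readings of the same length, in millimetres.
readings = [10.1, 10.3, 9.9, 10.2, 10.0]

best_estimate = mean(readings)
sample_std = stdev(readings)                              # scatter of individual readings
standard_uncertainty = sample_std / sqrt(len(readings))   # uncertainty of the mean

print(f"result: {best_estimate:.2f} mm +/- {standard_uncertainty:.2f} mm")
```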

In the science of measurement, the least count of a measuring instrument is the smallest value in the measured quantity that can be resolved on the instrument's scale. The least count is related to the precision of an instrument: an instrument that can resolve smaller changes in a value than another instrument has a smaller least count and so is more precise. Any measurement made by the instrument can be considered repeatable to no better than its least count. In this sense, the least count of an instrument is inversely related to the precision of the instrument.
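For a vernier caliper, a worked sketch might look as follows. It assumes the usual construction in which the least count equals one main-scale division divided by the number of vernier divisions. The function names and the example reading are hypothetical.

```python
def vernier_least_count(main_division, vernier_divisions):
    """Least count, assuming it equals one main-scale division / number of vernier divisions."""
    return main_division / vernier_divisions

def vernier_reading(main_scale_reading, coinciding_division, least_count):
    """Combine the main-scale reading with the coinciding vernier division."""
    return main_scale_reading + coinciding_division * least_count

# Hypothetical caliper: 1 mm main-scale divisions, 10 vernier divisions.
least_count = vernier_least_count(1.0, 10)

# Main scale shows 24 mm; the 7th vernier line coincides with a main-scale line.
reading = vernier_reading(24.0, 7, least_count)
print(f"least count = {least_count} mm, reading = {reading:.1f} mm")
```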

Experimental uncertainty analysis is a technique that analyses a derived quantity, based on the uncertainties in the experimentally measured quantities that are used in some form of mathematical relationship ("model") to calculate that derived quantity. The model used to convert the measurements into the derived quantity is usually based on fundamental principles of a science or engineering discipline.
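One widely used approach, sketched below, is first-order propagation of uncertainty. It assumes the measured quantities are independent and the model is smooth enough to be linearised near the measured values. The sensitivities are estimated by finite differences, and the function `propagate` and the rectangle example are hypothetical.

```python
from math import sqrt

def propagate(model, values, uncertainties, rel_step=1e-6):
    """First-order uncertainty of model(*values), assuming independent inputs."""
    variance = 0.0
    for i, (v, u) in enumerate(zip(values, uncertainties)):
        h = abs(v) * rel_step or rel_step      # step size; falls back if v == 0
        upper = list(values)
        lower = list(values)
        upper[i] = v + h
        lower[i] = v - h
        # Central-difference estimate of the partial derivative w.r.t. input i.
        sensitivity = (model(*upper) - model(*lower)) / (2 * h)
        variance += (sensitivity * u) ** 2
    return model(*values), sqrt(variance)

def rectangle_area(length, width):
    """The mathematical model: derived quantity from two measured quantities."""
    return length * width

# Hypothetical measurements: length 2.0 +/- 0.1 m, width 3.0 +/- 0.2 m.
area, u_area = propagate(rectangle_area, [2.0, 3.0], [0.1, 0.2])
print(f"area = {area:.1f} m^2 +/- {u_area:.1f} m^2")
```

Here the sensitivities are 3.0 and 2.0, so the combined uncertainty is the root sum of squares of 0.3 and 0.4, which is 0.5.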

In statistics, errors-in-variables models or measurement error models are regression models that account for measurement errors in the independent variables. In contrast, standard regression models assume that those regressors have been measured exactly, or observed without error; as such, those models account only for errors in the dependent variables, or responses.

Industrial process data validation and reconciliation, or more briefly, process data reconciliation (PDR), is a technology that uses process information and mathematical methods in order to automatically ensure data validation and reconciliation by correcting measurements in industrial processes. The use of PDR allows for extracting accurate and reliable information about the state of industry processes from raw measurement data and produces a single consistent set of data representing the most likely process operation.
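A minimal sketch of the reconciliation step is given below. It assumes one common formulation: a weighted least-squares adjustment of the measurements, subject to a single linear balance constraint. Industrial systems typically involve many constraints and dedicated solvers. The flows, standard deviations, and the function `reconcile` are hypothetical.

```python
def reconcile(measured, sigmas, coeffs):
    """Adjust measurements so that sum(coeffs[i] * x[i]) == 0.

    Minimises sum(((x[i] - measured[i]) / sigmas[i]) ** 2) under that single
    linear constraint, using the closed-form Lagrange-multiplier solution.
    """
    imbalance = sum(a * y for a, y in zip(coeffs, measured))
    scale = sum((a * s) ** 2 for a, s in zip(coeffs, sigmas))
    return [
        y - (s ** 2) * a * imbalance / scale
        for y, s, a in zip(measured, sigmas, coeffs)
    ]

# Hypothetical splitter: inlet flow F1 should equal F2 + F3.
measured = [100.0, 60.0, 38.0]    # raw flow measurements (imbalance of 2.0)
sigmas = [2.0, 1.0, 1.0]          # assumed measurement standard deviations
coeffs = [1.0, -1.0, -1.0]        # encodes F1 - F2 - F3 = 0

f1, f2, f3 = reconcile(measured, sigmas, coeffs)
print(f"reconciled flows: {f1:.2f}, {f2:.2f}, {f3:.2f}")
```

The least reliable measurement (largest standard deviation) receives the largest correction, and the reconciled values satisfy the balance exactly.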

In statistics, linear regression is a linear approach for modelling a predictive relationship between a scalar response and one or more explanatory variables, which are assumed to be measured without error. The case of one explanatory variable is called simple linear regression; for more than one, the process is called multiple linear regression. This term is distinct from multivariate linear regression, where multiple correlated dependent variables are predicted, rather than a single scalar variable. If the explanatory variables are measured with error, then errors-in-variables models, also known as measurement error models, are required.