Character encoding is the process of assigning numbers to graphical characters, especially the written characters of human language, allowing them to be stored, transmitted, and transformed using digital computers. The numerical values that make up a character encoding are known as "code points" and collectively comprise a "code space", a "code page", or a "character map".

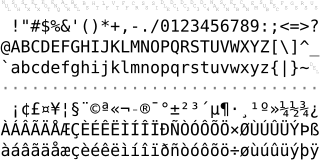

ISO/IEC 8859-1:1998, Information technology — 8-bit single-byte coded graphic character sets — Part 1: Latin alphabet No. 1, is part of the ISO/IEC 8859 series of ASCII-based standard character encodings, first edition published in 1987. ISO/IEC 8859-1 encodes what it refers to as "Latin alphabet no. 1", consisting of 191 characters from the Latin script. This character-encoding scheme is used throughout the Americas, Western Europe, Oceania, and much of Africa. It is the basis for some popular 8-bit character sets and the first two blocks of characters in Unicode.

Unicode, formally The Unicode Standard, is an information technology standard for the consistent encoding, representation, and handling of text expressed in most of the world's writing systems. The standard, which is maintained by the Unicode Consortium, defines as of the current version (15.0) 149,186 characters covering 161 modern and historic scripts, as well as symbols, thousands of emoji, and non-visual control and formatting codes.

UTF-8 is a variable-length character encoding standard used for electronic communication. Defined by the Unicode Standard, the name is derived from UnicodeTransformation Format – 8-bit.

UTF-16 (16-bit Unicode Transformation Format) is a character encoding capable of encoding all 1,112,064 valid code points of Unicode (in fact this number of code points is dictated by the design of UTF-16). The encoding is variable-length, as code points are encoded with one or two 16-bit code units. UTF-16 arose from an earlier obsolete fixed-width 16-bit encoding, now known as UCS-2 (for 2-byte Universal Character Set), once it became clear that more than 216 (65,536) code points were needed.

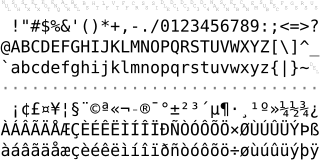

ISO/IEC 8859-15:1999, Information technology — 8-bit single-byte coded graphic character sets — Part 15: Latin alphabet No. 9, is part of the ISO/IEC 8859 series of ASCII-based standard character encodings, first edition published in 1999. It is informally referred to as Latin-9. It is similar to ISO 8859-1, and thus also intended for “Western European” languages, but replaces some less common symbols with the euro sign and some letters that were deemed necessary: This encoding is by far most used, close to half the use, by German, though this is the least used encoding for German.

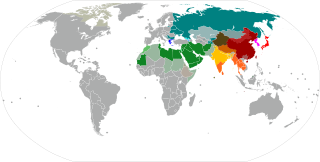

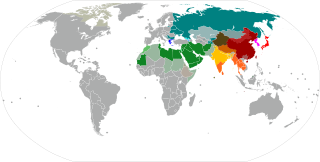

The Brahmic scripts, also known as Indic scripts, are a family of abugida writing systems. They are used throughout the Indian subcontinent, Southeast Asia and parts of East Asia. They are descended from the Brahmi script of ancient India and are used by various languages in several language families in South, East and Southeast Asia: Indo-Aryan, Dravidian, Tibeto-Burman, Mongolic, Austroasiatic, Austronesian, and Tai. They were also the source of the dictionary order (gojūon) of Japanese kana.

The byte order mark (BOM) is a particular usage of the special Unicode character, U+FEFFZERO WIDTH NO-BREAK SPACE, whose appearance as a magic number at the start of a text stream can signal several things to a program reading the text:

Mojibake is the garbled text that is the result of text being decoded using an unintended character encoding. The result is a systematic replacement of symbols with completely unrelated ones, often from a different writing system.

ISO/IEC 8859-2:1999, Information technology — 8-bit single-byte coded graphic character sets — Part 2: Latin alphabet No. 2, is part of the ISO/IEC 8859 series of ASCII-based standard character encodings, first edition published in 1987. It is informally referred to as "Latin-2". It is generally intended for Central or "Eastern European" languages that are written in the Latin script. Note that ISO/IEC 8859-2 is very different from code page 852 which is also referred to as "Latin-2" in Czech and Slovak regions. Code page 912 is an extension. Almost half the use of the encoding is for Polish, and it's the main legacy encoding for Polish, while virtually all use of it has been replaced by UTF-8.

UTF-7 is an obsolete variable-length character encoding for representing Unicode text using a stream of ASCII characters. It was originally intended to provide a means of encoding Unicode text for use in Internet E-mail messages that was more efficient than the combination of UTF-8 with quoted-printable.

In computing, JIS encoding refers to several Japanese Industrial Standards for encoding the Japanese language. Strictly speaking, the term means either:

GB 18030 is a Chinese government standard, described as Information Technology — Chinese coded character set and defines the required language and character support necessary for software in China. GB18030 is the registered Internet name for the official character set of the People's Republic of China (PRC) superseding GB2312. As a Unicode Transformation Format, GB18030 supports both simplified and traditional Chinese characters. It is also compatible with legacy encodings including GB2312, CP936, and GBK 1.0.

VISCII is an unofficially-defined modified ASCII character encoding for using the Vietnamese language with computers. It should not be confused with the similarly-named officially registered VSCII encoding. VISCII keeps the 95 printable characters of ASCII unmodified, but it replaces 6 of the 33 control characters with printable characters. It adds 128 precomposed characters. Unicode and the Windows-1258 code page are now used for virtually all Vietnamese computer data, but legacy VSCII and VISCII files may need conversion.

Indian Standard Code for Information Interchange (ISCII) is a coding scheme for representing various writing systems of India. It encodes the main Indic scripts and a Roman transliteration. The supported scripts are: Bengali–Assamese, Devanagari, Gujarati, Gurmukhi, Kannada, Malayalam, Oriya, Tamil, and Telugu. ISCII does not encode the writing systems of India that are based on Persian, but its writing system switching codes nonetheless provide for Kashmiri, Sindhi, Urdu, Persian, Pashto and Arabic. The Persian-based writing systems were subsequently encoded in the PASCII encoding.

Windows-1251 is an 8-bit character encoding, designed to cover languages that use the Cyrillic script such as Russian, Ukrainian, Belarusian, Bulgarian, Serbian Cyrillic, Macedonian and other languages.

In Unix and Unix-like operating systems, iconv is a command-line program and a standardized application programming interface (API) used to convert between different character encodings. "It can convert from any of these encodings to any other, through Unicode conversion."

The "Indian languages TRANSliteration" (ITRANS) is an ASCII transliteration scheme for Indic scripts, particularly for the Devanagari script.

The Universal Coded Character Set is a standard set of characters defined by the international standard ISO/IEC 10646, Information technology — Universal Coded Character Set (UCS), which is the basis of many character encodings, improving as characters from previously unrepresented typing systems are added.

Tamil All Character Encoding (TACE16) is a 16-bit Unicode-based character encoding scheme for Tamil language.