In information theory and coding theory with applications in computer science and telecommunication, error detection and correction (EDAC) or error control are techniques that enable reliable delivery of digital data over unreliable communication channels. Many communication channels are subject to channel noise, and thus errors may be introduced during transmission from the source to a receiver. Error detection techniques allow detecting such errors, while error correction enables reconstruction of the original data in many cases.
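The difference between detecting and correcting an error can be illustrated with two of the simplest schemes. The following is a minimal sketch, assuming bits are held as Python lists of 0/1 integers; the function names are hypothetical and chosen only for this illustration. An even-parity bit detects any single flipped bit but cannot locate it, while a threefold repetition code can correct one flipped bit per group by majority vote.

```python
def add_parity(bits):
    """Append an even-parity bit so the total number of 1s is even."""
    return bits + [sum(bits) % 2]


def parity_ok(word):
    """Return True if the word (data plus parity bit) has an even number of 1s."""
    return sum(word) % 2 == 0


def repeat3_encode(bits):
    """Repetition code: transmit every bit three times."""
    return [b for b in bits for _ in range(3)]


def repeat3_decode(coded):
    """Decode by majority vote over each group of three received bits."""
    return [1 if sum(coded[i:i + 3]) >= 2 else 0
            for i in range(0, len(coded), 3)]


# Detection: a single flipped bit breaks the parity check.
word = add_parity([1, 0, 1, 1])
word[2] ^= 1                      # simulate channel noise
detected = not parity_ok(word)

# Correction: a single flipped bit per group is outvoted.
coded = repeat3_encode([1, 0])
coded[1] ^= 1                     # simulate channel noise
recovered = repeat3_decode(coded)
```

Practical systems use far more efficient codes (for example cyclic redundancy checks for detection and block or convolutional codes for correction); the sketch only shows the principle of adding redundancy.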

Information retrieval (IR) in computing and information science is the process of obtaining information system resources that are relevant to an information need from a collection of those resources. Searches can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds.

The data link layer, or layer 2, is the second layer of the seven-layer OSI model of computer networking. This layer is the protocol layer that transfers data between nodes on a network segment across the physical layer. The data link layer provides the functional and procedural means to transfer data between network entities and may also provide the means to detect and possibly correct errors that can occur in the physical layer.

Computer science is the study of the theoretical foundations of information and computation and their implementation and application in computer systems. One well known subject classification system for computer science is the ACM Computing Classification System devised by the Association for Computing Machinery.

Coding theory is the study of the properties of codes and their respective fitness for specific applications. Codes are used for data compression, cryptography, error detection and correction, data transmission and data storage. Codes are studied by various scientific disciplines—such as information theory, electrical engineering, mathematics, linguistics, and computer science—for the purpose of designing efficient and reliable data transmission methods. This typically involves the removal of redundancy and the correction or detection of errors in the transmitted data.

Theoretical computer science (TCS) is a subset of general computer science and mathematics that focuses on mathematical aspects of computer science such as the theory of computation, lambda calculus, and type theory.

PubMed is a free search engine accessing primarily the MEDLINE database of references and abstracts on life sciences and biomedical topics. The United States National Library of Medicine (NLM) at the National Institutes of Health maintains the database as part of the Entrez system of information retrieval.

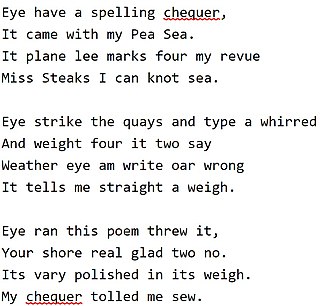

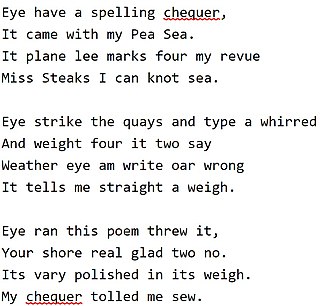

A spell checker is a software feature that checks for misspellings in a text. Spell-checking features are often embedded in software or services, such as a word processor, email client, electronic dictionary, or search engine.
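One common way to build such a feature is to look each word up in a word list and, for unknown words, suggest entries within a small edit distance. The sketch below assumes a tiny in-memory dictionary and whitespace-separated, punctuation-free input; `edit_distance` and `check` are hypothetical names, and the distance used is plain Levenshtein distance rather than any particular product's method.

```python
def edit_distance(a, b):
    """Levenshtein distance: minimum insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute or match
        prev = cur
    return prev[-1]


def check(text, dictionary, max_distance=1):
    """Map each unknown word to the sorted dictionary words close to it."""
    report = {}
    for word in text.lower().split():
        if word not in dictionary:
            report[word] = sorted(
                w for w in dictionary
                if edit_distance(word, w) <= max_distance
            )
    return report


dictionary = {"the", "cat", "sat", "mat"}
report = check("the cot sat", dictionary)
```

Here `report` flags only `cot` and suggests `cat`, the single dictionary entry one edit away.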

SCIgen is a paper generator that uses a context-free grammar to randomly generate nonsense in the form of computer science research papers. Its original data source was a collection of computer science papers downloaded from CiteSeer. All elements of the papers are generated, including graphs, diagrams, and citations. Created by scientists at the Massachusetts Institute of Technology, its stated aim is "to maximize amusement, rather than coherence." Originally created in 2005 to expose the lack of scrutiny of submissions to conferences, the generator was subsequently used, primarily by Chinese academics, to create large numbers of fraudulent conference submissions, leading to the retraction of 122 SCIgen-generated papers and the creation of detection software to combat its use.
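Random generation from a context-free grammar can be sketched in a few lines. The toy grammar below is invented for this illustration and is not SCIgen's actual grammar; `GRAMMAR` and `generate` are hypothetical names. Each nonterminal maps to a list of productions, and the generator expands a symbol by picking one production at random and expanding its parts recursively.

```python
import random

# Toy grammar (an assumption for illustration, not taken from SCIgen).
# Keys are nonterminals; any symbol that is not a key is a terminal word.
GRAMMAR = {
    "SENTENCE": [["NOUN_PHRASE", "VERB", "NOUN_PHRASE"]],
    "NOUN_PHRASE": [["the", "ADJ", "NOUN"], ["the", "NOUN"]],
    "ADJ": [["scalable"], ["probabilistic"]],
    "NOUN": [["algorithm"], ["framework"]],
    "VERB": [["refines"], ["emulates"]],
}


def generate(symbol, rng):
    """Expand a symbol into a list of terminal words."""
    if symbol not in GRAMMAR:
        return [symbol]                       # terminal: emit as-is
    production = rng.choice(GRAMMAR[symbol])  # pick one rule at random
    words = []
    for part in production:
        words.extend(generate(part, rng))
    return words


rng = random.Random(0)  # seeded so that a run is repeatable
sentence = " ".join(generate("SENTENCE", rng))
```

Every output is grammatical with respect to the rules but carries no intended meaning, which is the effect the paragraph above describes.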

PubMed Central (PMC) is a free digital repository that archives open access full-text scholarly articles that have been published in biomedical and life sciences journals. As one of the major research databases developed by the National Center for Biotechnology Information (NCBI), PubMed Central is more than a document repository. Submissions to PMC are indexed and formatted for enhanced metadata, medical ontology, and unique identifiers which enrich the XML structured data for each article. Content within PMC can be linked to other NCBI databases and accessed via Entrez search and retrieval systems, further enhancing the public's ability to discover, read and build upon its biomedical knowledge.

The Journal of Molecular Biology is a biweekly peer-reviewed scientific journal covering all aspects of molecular biology. It was established in 1959 and is published by Elsevier. The editor-in-chief is Peter Wright.

The Journal of Chemical Information and Modeling is a peer-reviewed scientific journal published by the American Chemical Society. It was established in 1961 as the Journal of Chemical Documentation, renamed in 1975 to Journal of Chemical Information and Computer Sciences, and obtained its current name in 2005. The journal covers the fields of computational chemistry and chemical informatics. The editor-in-chief is Kenneth M. Merz Jr. The journal supports Open Science approaches.

Dynamic program analysis is the analysis of computer software that is performed by executing programs on a real or virtual processor. For dynamic program analysis to be effective, the target program must be executed with sufficient test inputs to cover almost all possible outputs. Use of software testing measures such as code coverage helps increase the chance that an adequate slice of the program's set of possible behaviors has been observed. Also, care must be taken to minimize the effect that instrumentation has on the execution of the target program. Dynamic analysis is in contrast to static program analysis. Unit tests, integration tests, system tests and acceptance tests use dynamic testing.
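As a small illustration of observing a program while it runs, the sketch below records which source lines of a function execute for a given input, using Python's standard `sys.settrace` hook. The helper `trace_lines` and the sample function `classify` are hypothetical names for this example, and real coverage tools are considerably more careful about overhead and edge cases.

```python
import sys


def trace_lines(func, *args):
    """Run func(*args) and return (result, executed line offsets)."""
    executed = set()
    code = func.__code__

    def tracer(frame, event, arg):
        # Record only "line" events that belong to the function under test.
        if frame.f_code is code and event == "line":
            executed.add(frame.f_lineno - code.co_firstlineno)
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(previous)  # always restore the earlier trace hook
    return result, executed


def classify(n):
    if n < 0:
        return "negative"
    return "non-negative"


_, lines_neg = trace_lines(classify, -1)
_, lines_pos = trace_lines(classify, 1)
covered = lines_neg | lines_pos
```

Neither input alone reaches both `return` statements; only the union of the two runs covers the whole function, which is why a sufficiently varied set of test inputs matters.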

The Journal of Heredity is a peer-reviewed scientific journal concerned with heredity in a biological sense, covering all aspects of genetics. It is published by Oxford University Press on behalf of the American Genetic Association.

In statistics and machine learning, ensemble methods use multiple learning algorithms to obtain better predictive performance than could be obtained from any of the constituent learning algorithms alone. Unlike a statistical ensemble in statistical mechanics, which is usually infinite, a machine learning ensemble consists of only a concrete finite set of alternative models, but typically allows for much more flexible structure to exist among those alternatives.
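One of the simplest ensemble schemes is majority voting over a finite set of classifiers. The sketch below assumes each model is just a Python callable returning a label; the three threshold rules are hypothetical stand-ins for trained models, and `majority_vote` is a name chosen for this illustration.

```python
from collections import Counter


def majority_vote(models, x):
    """Return the label predicted by the most models for input x."""
    votes = [model(x) for model in models]
    return Counter(votes).most_common(1)[0][0]


# Three weak, hypothetical classifiers that disagree near the boundary.
models = [
    lambda x: x > 2,
    lambda x: x > 4,
    lambda x: x > 6,
]

prediction_for_5 = majority_vote(models, 5)  # votes: True, True, False
prediction_for_3 = majority_vote(models, 3)  # votes: True, False, False
```

With an odd number of binary classifiers there is never a tie; other ensemble methods such as bagging, boosting, and stacking combine models in more elaborate ways.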

Archives of Biochemistry and Biophysics is a biweekly peer-reviewed scientific journal that covers research on all aspects of biochemistry and biophysics. It is published by Elsevier. As of 2012, the editors-in-chief are Paul Fitzpatrick, Helmut Sies, Jian-Ping Jin, and Henry Jay Forman.

Frederick J. Damerau was a pioneer of research on natural language processing and data mining.

In linguistic morphology and information retrieval, stemming is the process of reducing inflected words to their word stem, base or root form—generally a written word form. The stem need not be identical to the morphological root of the word; it is usually sufficient that related words map to the same stem, even if this stem is not in itself a valid root. Algorithms for stemming have been studied in computer science since the 1960s. Many search engines treat words with the same stem as synonyms as a kind of query expansion, a process called conflation.
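The idea that related words only need to map to the same stem can be shown with a deliberately naive suffix stripper. This is a sketch under stated assumptions, not the Porter or Paice-Husk algorithm: the suffix list, the minimum stem length, and the names `stem` and `conflate` are all invented for illustration.

```python
# Suffixes are tried in this order, so list more specific ones first.
SUFFIXES = ("ing", "ed", "es", "s")


def stem(word, min_stem=3):
    """Strip the first matching suffix, keeping at least min_stem letters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[:-len(suffix)]
    return word


def conflate(words):
    """Group words that share a stem, as a search engine might for query expansion."""
    groups = {}
    for word in words:
        groups.setdefault(stem(word), []).append(word)
    return groups


groups = conflate(["connect", "connected", "connecting", "connects", "sing"])
```

Here the four forms of "connect" fall into one group, while "sing" is left alone because stripping "ing" would leave too short a stem. A rule set this crude both over-stems and under-stems many English words, which is what stemmer evaluation methods are designed to measure.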

Radiation Measurements is a monthly peer-reviewed scientific journal covering research on nuclear science and radiation physics. It was established in 1994 and is published by Elsevier.

Christopher D. Paice was one of the pioneers of research into stemming. The Paice-Husk stemmer was published in 1990, and his method of evaluating stemmer performance by means of Error Rate with Respect to Truncation (ERRT) was the first direct method of comparing under-stemming and over-stemming errors. Apart from his pioneering work on stemming algorithms and evaluation methods, he made other research contributions in the areas of information retrieval, anaphora resolution, and automatic abstracting.