In phonology, an allophone is one of multiple possible spoken sounds (phones) or signs used to pronounce a single phoneme in a particular language. For example, in English, the voiceless plosive [t] (as in stop) and the aspirated form [tʰ] (as in top) are allophones of the phoneme /t/, while these two are considered different phonemes in some languages, such as Thai. Conversely, in Spanish, [d] and [ð] are allophones of the phoneme /d/, while these two are considered different phonemes in English.

Modern Hebrew has 25 to 27 consonants and 5 to 10 vowels, depending on the speaker and the analysis.

In phonology and linguistics, a phoneme is a unit of sound that can distinguish one word from another in a particular language.

The Speech Assessment Methods Phonetic Alphabet (SAMPA) is a computer-readable phonetic script using 7-bit printable ASCII characters, based on the International Phonetic Alphabet (IPA). It was originally developed in the late 1980s for six European languages by the EEC ESPRIT information technology research and development program. As many symbols as possible have been taken over from the IPA; where this is not possible, other signs that are available are used, e.g. [@] for schwa, [2] for the vowel sound found in French deux, and [9] for the vowel sound found in French neuf.
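The substitutions mentioned above can be sketched as a small lookup table. This is a minimal illustration, not a full SAMPA table: it covers only the three symbols named in the text, and the function names `sampa_to_ipa` and `is_sampa_safe` are hypothetical.

```python
# Only the three substitutions named in the text; a real SAMPA table is much larger.
SAMPA_TO_IPA = {
    "@": "ə",  # schwa
    "2": "ø",  # vowel of French "deux"
    "9": "œ",  # vowel of French "neuf"
}


def sampa_to_ipa(symbols):
    """Map a list of SAMPA symbols to IPA, leaving unknown symbols unchanged."""
    return [SAMPA_TO_IPA.get(symbol, symbol) for symbol in symbols]


def is_sampa_safe(text):
    """Return True if every character is 7-bit printable ASCII (codes 32 to 126)."""
    return all(32 <= ord(ch) <= 126 for ch in text)


print(sampa_to_ipa(["d", "2"]))       # French "deux"
print(sampa_to_ipa(["n", "9", "f"]))  # French "neuf"
print(is_sampa_safe("n9f"), is_sampa_safe("nœf"))
```

Keeping the table as a plain dictionary makes the ASCII-only constraint easy to check: every key must pass `is_sampa_safe`, while the IPA values generally do not.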

Speech synthesis is the artificial production of human speech. A computer system used for this purpose is called a speech synthesizer, and can be implemented in software or hardware products. A text-to-speech (TTS) system converts normal language text into speech; other systems render symbolic linguistic representations like phonetic transcriptions into speech. The reverse process is speech recognition.

Transcription in the linguistic sense is the systematic representation of spoken language in written form. The source can either be utterances or preexisting text in another writing system.

Phonetic transcription is the visual representation of speech sounds by means of symbols. The most common type of phonetic transcription uses a phonetic alphabet, such as the International Phonetic Alphabet.

A phonemic orthography is an orthography in which the graphemes correspond to the phonemes of the language. Natural languages rarely have perfectly phonemic orthographies; a high degree of grapheme–phoneme correspondence can be expected in orthographies based on alphabetic writing systems, but they differ in how complete this correspondence is. English orthography, for example, is alphabetic but highly nonphonemic; it was mostly phonemic during the Middle English stage, when the modern spellings originated, but spoken English changed rapidly while the orthography remained much more stable, resulting in the modern nonphonemic situation. In contrast, the Albanian, Serbian/Croatian/Bosnian/Montenegrin, Romanian, Italian, Turkish, Spanish, Finnish, Czech, Latvian, Esperanto, Korean and Swahili orthographic systems come much closer to being consistent phonemic representations.

In the phonology of the Romanian language, the phoneme inventory consists of seven vowels, two or four semivowels, and twenty consonants. In addition, as with other languages, other phonemes can occur occasionally in interjections or recent borrowings.

Australian English (AuE) is a non-rhotic variety of English spoken by most native-born Australians. Phonologically, it is one of the most regionally homogeneous language varieties in the world. Australian English is notable for vowel length contrasts which are absent from most English dialects.

Portuguese orthography is based on the Latin alphabet and makes use of the acute accent, the circumflex accent, the grave accent, the tilde, and the cedilla to denote stress, vowel height, nasalization, and other sound changes. The diaeresis was abolished by the last Orthography Agreement. Accented letters and digraphs are not counted as separate characters for collation purposes.

ARPABET is a set of phonetic transcription codes developed by the Advanced Research Projects Agency (ARPA) as part of its Speech Understanding Research project in the 1970s. It represents phonemes and allophones of General American English with distinct sequences of ASCII characters. Two systems were devised: one represents each segment with a single character, and the other with one or two case-insensitive characters. The latter was far more widely adopted.
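As a rough sketch of how the case-insensitive, one-or-two-letter system might be handled in software, the following splits a space-separated transcription into normalized codes. The function name `parse_arpabet` is hypothetical, and the optional trailing stress digit (0 to 2) is an assumption that is not stated in the text above; it follows the convention used by resources such as the CMU Pronouncing Dictionary.

```python
import re

# One or two ASCII letters (as described in the text), optionally followed
# by a stress digit 0-2 (an assumed convention, not taken from the text).
_TOKEN = re.compile(r"^([A-Za-z]{1,2})([0-2])?$")


def parse_arpabet(transcription):
    """Split a space-separated transcription into (code, stress) pairs.

    Codes are upper-cased so that 'ae' and 'AE' compare equal.
    Stress is an int, or None when no digit is present.
    """
    result = []
    for token in transcription.split():
        match = _TOKEN.match(token)
        if match is None:
            raise ValueError(f"not a one- or two-letter code: {token!r}")
        code, stress = match.groups()
        result.append((code.upper(), int(stress) if stress else None))
    return result


print(parse_arpabet("k AE1 t"))  # mixed case is normalized
```

Upper-casing at parse time means later comparisons need no special handling for case.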

MBROLA is speech synthesis software developed as a worldwide collaborative project. The MBROLA project web page provides diphone databases for many spoken languages.

The CMU Pronouncing Dictionary is an open-source pronouncing dictionary originally created by the Speech Group at Carnegie Mellon University (CMU) for use in speech recognition research.

Audio mining is a technique by which the content of an audio signal can be automatically analyzed and searched. It is most commonly used in the field of automatic speech recognition, where the analysis tries to identify any speech within the audio. The term ‘audio mining’ is sometimes used interchangeably with audio indexing, phonetic searching, phonetic indexing, speech indexing, audio analytics, speech analytics, word spotting, and information retrieval. Audio indexing, however, is mostly used to describe the pre-process of audio mining, in which the audio file is broken down into a searchable index of words.
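The idea of a searchable index of words can be sketched as an inverted index from each word to the times at which it was recognized. This is a minimal sketch under stated assumptions: the input is taken to be a list of `(word, start_seconds)` pairs from some speech recognizer, and the names `build_audio_index` and `find_word` are hypothetical.

```python
from collections import defaultdict


def build_audio_index(recognized_words):
    """Build a mapping from lower-cased word to a sorted list of start times.

    `recognized_words` is assumed to be an iterable of (word, start_seconds)
    pairs, for example the output of a speech recognizer.
    """
    index = defaultdict(list)
    for word, start_seconds in recognized_words:
        index[word.lower()].append(start_seconds)
    for times in index.values():
        times.sort()
    return dict(index)


def find_word(index, word):
    """Return the start times at which `word` occurs, or an empty list."""
    return index.get(word.lower(), [])


index = build_audio_index([("Hello", 0.4), ("world", 0.9), ("hello", 5.2)])
print(find_word(index, "HELLO"))
```

Once the index is built, a search no longer touches the audio itself; it is a dictionary lookup.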

eSpeak is a free and open-source, cross-platform, compact, software speech synthesizer. It uses a formant synthesis method, providing many languages in a relatively small file size. eSpeakNG is a continuation of the original developer's project with more feedback from native speakers.

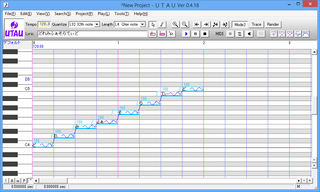

UTAU is a Japanese singing synthesizer application created by Ameya/Ayame (飴屋/菖蒲). The program is similar to the VOCALOID software, the difference being that it is shareware rather than being released under third-party licensing.

Speech tempo is a measure of the number of speech units of a given type produced within a given amount of time. Speech tempo is believed to vary within the speech of one person according to contextual and emotional factors, between speakers and also between different languages and dialects. However, there are many problems involved in investigating this variance scientifically.
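The definition amounts to a simple rate: a count of units divided by a duration. A minimal sketch follows, assuming the unit counts and durations have already been measured; the function name `speech_tempo` is hypothetical, and the choice of unit (syllables, words, phonemes) is left to the caller.

```python
def speech_tempo(unit_count, duration_seconds):
    """Return speech units per second for a stretch of speech.

    The unit type is whatever the caller counted (for example syllables).
    """
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    return unit_count / duration_seconds


# 12 syllables produced in 3 seconds
print(speech_tempo(12, 3.0))
```

Whether pauses are included in the duration changes the result, which is one of the methodological choices a study has to state.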

The Persian Speech Corpus is a Modern Persian speech corpus for speech synthesis. The corpus contains phonetic and orthographic transcriptions of about 2.5 hours of Persian speech aligned with recorded speech at the phoneme level, including annotations of word boundaries. Previous spoken corpora of Persian include FARSDAT, which consists of read-aloud speech from newspaper texts by 100 Persian speakers, and the Telephone FARsi Spoken language DATabase (TFARSDAT), which comprises seven hours of read and spontaneous speech produced by 60 native speakers of Persian from ten regions of Iran.

The PCVC Speech Dataset is a Modern Persian speech corpus for speech recognition and speaker recognition. The dataset contains sound samples of Modern Persian consonant-vowel combinations from different speakers. Every sound sample contains exactly one consonant followed by one vowel, so the data is effectively labeled at the phoneme level. The dataset covers 23 Persian consonants and 6 vowels, and the sound samples include all possible combinations of them. All speech samples use a sample rate of 48,000 Hz, meaning 48,000 audio samples per second. On average, about 0.5 seconds of each sample is speech and the rest is silence; each sample ends with silence. All sound samples are denoised with an "Adaptive noise reduction" algorithm. Compared with the FARSDAT speech dataset and the Persian Speech Corpus, it is easier to use because it is distributed as .mat data files; it is also organized around phoneme-based separation, and all samples are denoised.
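The figures in this description imply some simple arithmetic, sketched below. The consonant and vowel labels (`C1`, `V1`, and so on) are hypothetical placeholders, not the dataset's actual labels, and the function names are illustrative only; the layout of the .mat files is not assumed here.

```python
from itertools import product

SAMPLE_RATE_HZ = 48_000  # from the dataset description
N_CONSONANTS = 23        # from the dataset description
N_VOWELS = 6             # from the dataset description

# Hypothetical placeholder labels standing in for the real phoneme symbols.
CONSONANTS = [f"C{i}" for i in range(1, N_CONSONANTS + 1)]
VOWELS = [f"V{i}" for i in range(1, N_VOWELS + 1)]


def cv_combinations():
    """Return every (consonant, vowel) pair, consonant first."""
    return list(product(CONSONANTS, VOWELS))


def seconds_to_samples(seconds, sample_rate_hz=SAMPLE_RATE_HZ):
    """Convert a duration in seconds to a number of audio samples."""
    return round(seconds * sample_rate_hz)


print(len(cv_combinations()))   # 23 * 6 combinations
print(seconds_to_samples(0.5))  # audio samples in the average spoken portion
```

So each speaker contributes 138 consonant-vowel combinations, and the roughly 0.5 seconds of speech in a sample corresponds to about 24,000 audio samples at this rate.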