Related Research Articles

Corpus linguistics is the study of a language as it is expressed in its text corpus, its body of "real world" text. Corpus linguistics proposes that reliable language analysis is more feasible with corpora collected in the field in their natural context ("realia"), and with minimal experimental interference.

In linguistics, a corpus or text corpus is a language resource consisting of a large and structured set of texts. In corpus linguistics, corpora are used for statistical analysis and hypothesis testing, checking occurrences or validating linguistic rules within a specific language territory.

Word-sense disambiguation (WSD) is an open problem in computational linguistics concerned with identifying which sense of a word is used in a sentence. Solving it affects other language-processing tasks, such as discourse analysis, improving the relevance of search engines, anaphora resolution, coherence, and inference.

Dr. Hermann Moisl is a retired Senior Lecturer and Visiting Fellow in Linguistics at Newcastle University. He was educated at various institutes, including Trinity College Dublin and the University of Oxford.

The American National Corpus (ANC) is a text corpus of American English containing 22 million words of written and spoken data produced since 1990. Currently, the ANC includes a range of genres, including emerging genres such as email, tweets, and web data that are not included in earlier corpora such as the British National Corpus. It is annotated for part of speech and lemma, shallow parse, and named entities.

In linguistics, a treebank is a parsed text corpus that annotates syntactic or semantic sentence structure. The construction of parsed corpora in the early 1990s revolutionized computational linguistics, which benefitted from large-scale empirical data. The exploitation of treebank data has been important ever since the first large-scale treebank, The Penn Treebank, was published. However, although originating in computational linguistics, the value of treebanks is becoming more widely appreciated in linguistics research as a whole. For example, annotated treebank data has been crucial in syntactic research to test linguistic theories of sentence structure against large quantities of naturally occurring examples.

The British National Corpus (BNC) is a 100-million-word text corpus of samples of written and spoken English from a wide range of sources. The corpus covers British English of the late 20th century from a wide variety of genres, with the intention that it be a representative sample of spoken and written British English of that time.

Trend analysis is the widespread practice of collecting information and attempting to spot a pattern. In some fields of study, the term "trend analysis" has more formally defined meanings.

Beryl T. (Sue) Atkins is a British lexicographer, specialising in computational lexicography, who pioneered the creation of bilingual dictionaries from corpus data.

In linguistics, statistical semantics applies the methods of statistics to the problem of determining the meaning of words or phrases, ideally through unsupervised learning, to a degree of precision at least sufficient for the purpose of information retrieval.

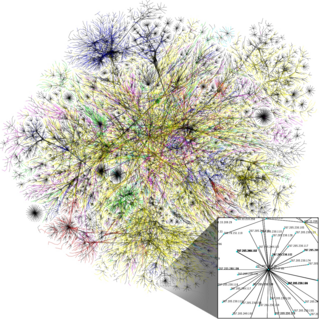

Internet linguistics is a domain of linguistics advocated by the English linguist David Crystal. It studies new language styles and forms that have arisen under the influence of the Internet and of other new media, such as Short Message Service (SMS) text messaging. Since the beginning of human-computer interaction (HCI) leading to computer-mediated communication (CMC) and Internet-mediated communication (IMC), experts such as Gretchen McCulloch have acknowledged that linguistics has a contributing role in it, in terms of web interface and usability. Studying the emerging language on the Internet can help improve conceptual organization, translation and web usability. Such study aims to benefit both linguists and web users.

The knowledge acquisition bottleneck is perhaps the major impediment to solving the word sense disambiguation (WSD) problem. Unsupervised learning methods rely on knowledge about word senses, which is barely formulated in dictionaries and lexical databases. Supervised learning methods depend heavily on the existence of manually annotated examples for every word sense, a requirement that can so far be met only for a handful of words for testing purposes, as is done in the Senseval exercises.

The Europarl Corpus is a corpus that consists of the proceedings of the European Parliament from 1996 to 2012. In its first release in 2001, it covered eleven official languages of the European Union. With the political expansion of the EU, the official languages of the ten new member states have been added to the corpus data. The latest release (2012) comprised up to 60 million words per language, with the newly added languages being slightly underrepresented, as data for them is only available from 2007 onwards. This latest version includes 21 European languages from the Romance, Germanic, Slavic, Finno-Ugric, and Baltic groups, plus Greek.

Sketch Engine is a corpus manager and text analysis software developed by Lexical Computing Limited since 2003. Its purpose is to enable people studying language behaviour to search large text collections according to complex and linguistically motivated queries. Sketch Engine takes its name from one of its key features, word sketches: one-page, automatic, corpus-derived summaries of a word's grammatical and collocational behaviour. Currently, it supports and provides corpora in 90+ languages.

A corpus manager is a tool for multilingual corpus analysis, which allows effective searching in corpora.

The Bulgarian National Corpus (BulNC) is a large representative corpus of Bulgarian comprising about 200,000 texts and amounting to over 1 billion words.

Adam Kilgarriff was a corpus linguist, lexicographer, and co-author of Sketch Engine.

A word sketch is a one-page, automatic, corpus-derived summary of a word’s grammatical and collocational behaviour. Word sketches were first introduced by the British corpus linguist Adam Kilgarriff and exploited within the Sketch Engine corpus management system. They are an extension of the general collocation concept used in corpus linguistics in that they group collocations according to particular grammatical relations. The collocation candidates in a word sketch are sorted either by their frequency or using a lexicographic association score like Dice, T-score or MI-score.
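The three association scores named above can be illustrated with a minimal Python sketch. It uses the textbook definitions of Dice, T-score and MI-score computed from raw frequencies; the function names and all counts are hypothetical, and Sketch Engine's own implementation may differ (for example in normalisation or in the variant of Dice it applies).

```python
import math

def dice(f_xy, f_x, f_y):
    """Dice coefficient: 2 * f(x,y) / (f(x) + f(y))."""
    return 2 * f_xy / (f_x + f_y)

def t_score(f_xy, f_x, f_y, n):
    """T-score: (observed - expected) / sqrt(observed)."""
    expected = f_x * f_y / n
    return (f_xy - expected) / math.sqrt(f_xy)

def mi_score(f_xy, f_x, f_y, n):
    """MI-score: log2 of observed co-occurrence over expected co-occurrence."""
    return math.log2(f_xy * n / (f_x * f_y))

# Hypothetical counts, for illustration only.
N = 1_000_000          # corpus size in tokens
F_HEADWORD = 1_000     # frequency of the headword
# collocate -> (frequency of collocate, co-occurrence frequency with headword)
candidates = {
    "strong": (500, 30),
    "the": (60_000, 200),
    "bitter": (40, 8),
}

def rank(score):
    """Sort collocation candidates by the given score, best first."""
    return sorted(candidates, key=score, reverse=True)

by_frequency = rank(lambda w: candidates[w][1])
by_dice = rank(lambda w: dice(candidates[w][1], F_HEADWORD, candidates[w][0]))
by_mi = rank(lambda w: mi_score(candidates[w][1], F_HEADWORD, candidates[w][0], N))
```

With these made-up counts, sorting by raw frequency puts the very common word "the" first, while the association scores favour collocates that co-occur with the headword more often than chance would predict.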

SkELL is a free corpus-based web tool that allows language learners and teachers to find authentic sentences for specific target word(s). For any word or phrase, SkELL displays a concordance that lists example sentences drawn from a special text corpus crawled from the World Wide Web, which has been cleaned of spam and includes only high-quality texts covering everyday, standard, formal, and professional language. There are versions of SkELL for English, Russian, German, Italian, Czech and Estonian.
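The idea of a concordance can be sketched in a few lines of Python. This is only an illustration of a keyword-in-context listing over a tiny in-memory list of sentences; the function name, the sample sentences and the `width` parameter are assumptions, and it does not reflect how SkELL itself is implemented.

```python
import re

def concordance(sentences, target, width=30):
    """Return keyword-in-context lines for every match of `target`."""
    # Whole-word, case-insensitive match on the target word or phrase.
    pattern = re.compile(r"\b" + re.escape(target) + r"\b", re.IGNORECASE)
    lines = []
    for sentence in sentences:
        for match in pattern.finditer(sentence):
            left = sentence[max(0, match.start() - width):match.start()]
            right = sentence[match.end():match.end() + width]
            # Right-align the left context so the keywords line up in a column.
            lines.append(f"{left:>{width}} [{match.group(0)}] {right}")
    return lines

# Hypothetical sample sentences, for illustration only.
sample_sentences = [
    "The corpus was crawled from the web.",
    "Spam pages were removed during cleaning.",
    "A Corpus of this kind can be very large.",
]

for line in concordance(sample_sentences, "corpus"):
    print(line)
```

Aligning the keyword in a fixed column is the conventional layout for a concordance, since it lets a reader scan the left and right contexts at a glance.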
