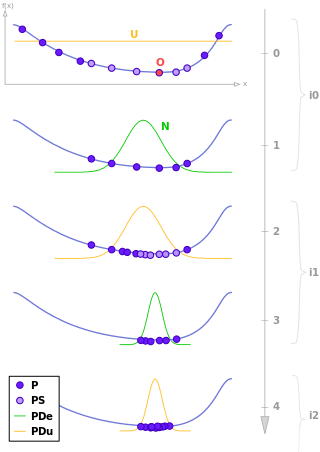

Examples of chromosomes

Chromosomes for binary codings

In their classical form, GAs use bit strings and map the decision variables to be optimized onto them. An example for one Boolean and three integer decision variables with the value ranges , and may illustrate this:

| decision variable: | |||||||||||||||||

| bits: | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 0 | 0 | 0 |

| position: | 17 | 16 | 15 | 14 | 13 | 12 | 11 | 10 | 9 | 8 | 7 | 6 | 5 | 4 | 3 | 2 | 1 |

Note that the negative number here is given in two's complement. This straight forward representation uses five bits to represent the three values of , although two bits would suffice. This is a significant redundancy. An improved alternative, where 28 is to be added for the genotype-phenotype mapping, could look like this:

| decision variable: | ||||||||||||||

| bits: | 0 | 1 | 0 | 1 | 1 | 0 | 0 | 1 | 1 | 1 | 1 | 0 | 0 | 0 |

| position: | 14 | 13 | 12 | 11 | 10 | 9 | 8 | 7 | 6 | 5 | 4 | 3 | 2 | 1 |

with .

Chromosomes with real-valued or integer genes

For the processing of tasks with real-valued or mixed-integer decision variables, EAs such as the evolution strategy [15] or the real-coded GAs [16] [17] [18] are suited. In the case of mixed-integer values, rounding is often used, but this represents some violation of the redundancy requirement. If the necessary precisions of the real values can be reasonably narrowed down, this violation can be remedied by using integer-coded GAs. [19] [20] For this purpose, the valid digits of real values are mapped to integers by multiplication with a suitable factor. For example, 12.380 becomes the integer 12380 by multiplying by 1000. This must of course be taken into account in genotype-phenotype mapping for evaluation and result presentation. A common form is a chromosome consisting of a list or an array of integer or real values.

Chromosomes for permutations

Combinatorial problems are mainly concerned with finding an optimal sequence of a set of elementary items. As an example, consider the problem of the traveling salesman who wants to visit a given number of cities exactly once on the shortest possible tour. The simplest and most obvious mapping onto a chromosome is to number the cities consecutively, to interpret a resulting sequence as permutation and to store it directly in a chromosome, where one gene corresponds to the ordinal number of a city. [21] Then, however, the variation operators may only change the gene order and not remove or duplicate any genes. [22] The chromosome thus contains the path of a possible tour to the cities. As an example the sequence of nine cities may serve, to which the following chromosome corresponds:

| 3 | 5 | 7 | 1 | 4 | 2 | 9 | 6 | 8 |

In addition to this encoding frequently called path representation, there are several other ways of representing a permutation, for example the ordinal representation or the matrix representation. [22] [23]

Chromosomes for co-evolution

When a genetic representation contains, in addition to the decision variables, additional information that influences evolution and/or the mapping of the genotype to the phenotype and is itself subject to evolution, this is referred to as co-evolution. A typical example is the evolution strategy (ES), which includes one or more mutation step sizes as strategy parameters in each chromosome. [15] Another example is an additional gene to control a selection heuristic for resource allocation in a scheduling tasks. [24]

This approach is based on the assumption that good solutions are based on an appropriate selection of strategy parameters or on control gene(s) that influences genotype-phenotype mapping. The success of the ES gives evidence to this assumption.

Chromosomes for complex representations

The chromosomes presented above are well suited for processing tasks of continuous, mixed-integer, pure-integer or combinatorial optimization. For a combination of these optimization areas, on the other hand, it becomes increasingly difficult to map them to simple strings of values, depending on the task. The following extension of the gene concept is proposed by the EA GLEAM (General Learning Evolutionary Algorithm and Method) for this purpose: [25] A gene is considered to be the description of an element or elementary trait of the phenotype, which may have multiple parameters. For this purpose, gene types are defined that contain as many parameters of the appropriate data type as are required to describe the particular element of the phenotype. A chromosome now consists of genes as data objects of the gene types, whereby, depending on the application, each gene type occurs exactly once as a gene or can be contained in the chromosome any number of times. The latter leads to chromosomes of dynamic length, as they are required for some problems. [26] [27] The gene type definitions also contain information on the permissible value ranges of the gene parameters, which are observed during chromosome generation and by corresponding mutations, so they cannot lead to lethal mutations. For tasks with a combinatorial part, there are suitable genetic operators that can move or reposition genes as a whole, i.e. with their parameters.

A scheduling task is used as an illustration, in which workflows are to be scheduled that require different numbers of heterogeneous resources. A workflow specifies which work steps can be processed in parallel and which have to be executed one after the other. In this context, heterogeneous resources mean different processing times at different costs in addition to different processing capabilities. [24] Each scheduling operation therefore requires one or more parameters that determine the resource selection, where the value ranges of the parameters depend on the number of alternative resources available for each work step. A suitable chromosome provides one gene type per work step and in this case one corresponding gene, which has one parameter for each required resource. The order of genes determines the order of scheduling operations and, therefore, the precedence in case of allocation conflicts. The exemplary gene type definition of work step 15 with two resources, for which there are four and seven alternatives respectively, would then look as shown in the left image. Since the parameters represent indices in lists of available resources for the respective work step, their value range starts at 0. The right image shows an example of three genes of a chromosome belonging to the gene types in list representation.

Chromosomes for tree representations

Tree representations in a chromosome are used by genetic programming, an EA type for generating computer programs or circuits. [10] The trees correspond to the syntax trees generated by a compiler as internal representation when translating a computer program. The adjacent figure shows the syntax tree of a mathematical expression as an example. Mutation operators can rearrange, change or delete subtrees depending on the represented syntax structure. Recombination is performed by exchanging suitable subtrees. [28]