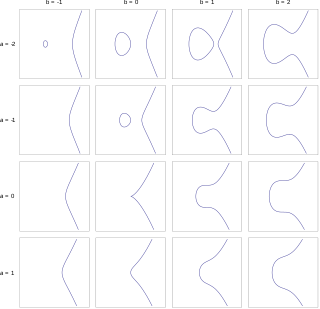

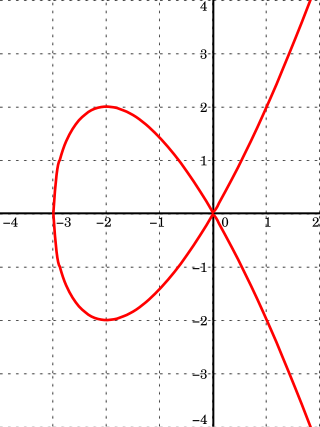

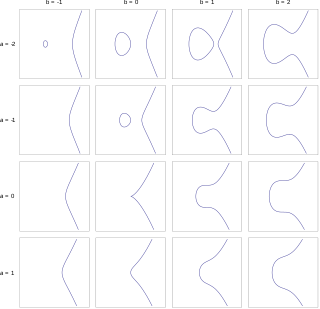

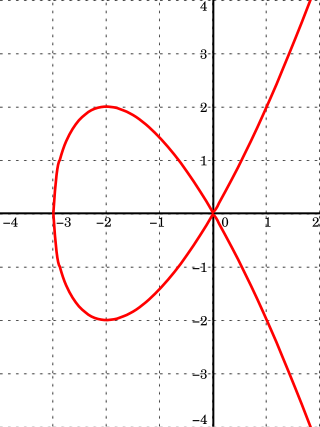

In mathematics, an elliptic curve is a smooth, projective, algebraic curve of genus one, on which there is a specified point O. An elliptic curve is defined over a field K and describes points in K2, the Cartesian product of K with itself. If the field's characteristic is different from 2 and 3, then the curve can be described as a plane algebraic curve which consists of solutions (x, y) for:

In information theory and coding theory, Reed–Solomon codes are a group of error-correcting codes that were introduced by Irving S. Reed and Gustave Solomon in 1960. They have many applications, including consumer technologies such as MiniDiscs, CDs, DVDs, Blu-ray discs, QR codes, Data Matrix, data transmission technologies such as DSL and WiMAX, broadcast systems such as satellite communications, DVB and ATSC, and storage systems such as RAID 6.

The Merkle–Hellman knapsack cryptosystem was one of the earliest public key cryptosystems. It was published by Ralph Merkle and Martin Hellman in 1978. A polynomial time attack was published by Adi Shamir in 1984. As a result, the cryptosystem is now considered insecure.

In mathematics, an affine algebraic plane curve is the zero set of a polynomial in two variables. A projective algebraic plane curve is the zero set in a projective plane of a homogeneous polynomial in three variables. An affine algebraic plane curve can be completed in a projective algebraic plane curve by homogenizing its defining polynomial. Conversely, a projective algebraic plane curve of homogeneous equation h(x, y, t) = 0 can be restricted to the affine algebraic plane curve of equation h(x, y, 1) = 0. These two operations are each inverse to the other; therefore, the phrase algebraic plane curve is often used without specifying explicitly whether it is the affine or the projective case that is considered.

In computer science, a one-way function is a function that is easy to compute on every input, but hard to invert given the image of a random input. Here, "easy" and "hard" are to be understood in the sense of computational complexity theory, specifically the theory of polynomial time problems. This has nothing to do with whether the function is one-to-one; finding any one input with the desired image is considered a successful inversion.

A commitment scheme is a cryptographic primitive that allows one to commit to a chosen value while keeping it hidden to others, with the ability to reveal the committed value later. Commitment schemes are designed so that a party cannot change the value or statement after they have committed to it: that is, commitment schemes are binding. Commitment schemes have important applications in a number of cryptographic protocols including secure coin flipping, zero-knowledge proofs, and secure computation.

In cryptography, the Elliptic Curve Digital Signature Algorithm (ECDSA) offers a variant of the Digital Signature Algorithm (DSA) which uses elliptic-curve cryptography.

Hyperelliptic curve cryptography is similar to elliptic curve cryptography (ECC) insofar as the Jacobian of a hyperelliptic curve is an abelian group in which to do arithmetic, just as we use the group of points on an elliptic curve in ECC.

KCDSA is a digital signature algorithm created by a team led by the Korea Internet & Security Agency (KISA). It is an ElGamal variant, similar to the Digital Signature Algorithm and GOST R 34.10-94. The standard algorithm is implemented over , but an elliptic curve variant (EC-KCDSA) is also specified.

In cryptography, a one-way compression function is a function that transforms two fixed-length inputs into a fixed-length output. The transformation is "one-way", meaning that it is difficult given a particular output to compute inputs which compress to that output. One-way compression functions are not related to conventional data compression algorithms, which instead can be inverted exactly or approximately to the original data.

In mathematics and computing, universal hashing refers to selecting a hash function at random from a family of hash functions with a certain mathematical property. This guarantees a low number of collisions in expectation, even if the data is chosen by an adversary. Many universal families are known, and their evaluation is often very efficient. Universal hashing has numerous uses in computer science, for example in implementations of hash tables, randomized algorithms, and cryptography.

An important aspect in the study of elliptic curves is devising effective ways of counting points on the curve. There have been several approaches to do so, and the algorithms devised have proved to be useful tools in the study of various fields such as number theory, and more recently in cryptography and Digital Signature Authentication. While in number theory they have important consequences in the solving of Diophantine equations, with respect to cryptography, they enable us to make effective use of the difficulty of the discrete logarithm problem (DLP) for the group , of elliptic curves over a finite field , where q = pk and p is a prime. The DLP, as it has come to be known, is a widely used approach to public key cryptography, and the difficulty in solving this problem determines the level of security of the cryptosystem. This article covers algorithms to count points on elliptic curves over fields of large characteristic, in particular p > 3. For curves over fields of small characteristic more efficient algorithms based on p-adic methods exist.

In cryptography, Very Smooth Hash (VSH) is a provably secure cryptographic hash function invented in 2005 by Scott Contini, Arjen Lenstra, and Ron Steinfeld. Provably secure means that finding collisions is as difficult as some known hard mathematical problem. Unlike other provably secure collision-resistant hashes, VSH is efficient and usable in practice. Asymptotically, it only requires a single multiplication per log(n) message-bits and uses RSA-type arithmetic. Therefore, VSH can be useful in embedded environments where code space is limited.

In cryptography, the fast syndrome-based hash functions (FSB) are a family of cryptographic hash functions introduced in 2003 by Daniel Augot, Matthieu Finiasz, and Nicolas Sendrier. Unlike most other cryptographic hash functions in use today, FSB can to a certain extent be proven to be secure. More exactly, it can be proven that breaking FSB is at least as difficult as solving a certain NP-complete problem known as regular syndrome decoding so FSB is provably secure. Though it is not known whether NP-complete problems are solvable in polynomial time, it is often assumed that they are not.

In cryptography, cryptographic hash functions can be divided into two main categories. In the first category are those functions whose designs are based on mathematical problems, and whose security thus follows from rigorous mathematical proofs, complexity theory and formal reduction. These functions are called provably secure cryptographic hash functions. To construct these is very difficult, and few examples have been introduced. Their practical use is limited.

In cryptography, SWIFFT is a collection of provably secure hash functions. It is based on the concept of the fast Fourier transform (FFT). SWIFFT is not the first hash function based on the FFT, but it sets itself apart by providing a mathematical proof of its security. It also uses the LLL basis reduction algorithm. It can be shown that finding collisions in SWIFFT is at least as difficult as finding short vectors in cyclic/ideal lattices in the worst case. By giving a security reduction to the worst-case scenario of a difficult mathematical problem, SWIFFT gives a much stronger security guarantee than most other cryptographic hash functions.

In mathematics, elliptic curve primality testing techniques, or elliptic curve primality proving (ECPP), are among the quickest and most widely used methods in primality proving. It is an idea put forward by Shafi Goldwasser and Joe Kilian in 1986 and turned into an algorithm by A. O. L. Atkin in the same year. The algorithm was altered and improved by several collaborators subsequently, and notably by Atkin and François Morain, in 1993. The concept of using elliptic curves in factorization had been developed by H. W. Lenstra in 1985, and the implications for its use in primality testing followed quickly.

In discrete mathematics, ideal lattices are a special class of lattices and a generalization of cyclic lattices. Ideal lattices naturally occur in many parts of number theory, but also in other areas. In particular, they have a significant place in cryptography. Micciancio defined a generalization of cyclic lattices as ideal lattices. They can be used in cryptosystems to decrease by a square root the number of parameters necessary to describe a lattice, making them more efficient. Ideal lattices are a new concept, but similar lattice classes have been used for a long time. For example, cyclic lattices, a special case of ideal lattices, are used in NTRUEncrypt and NTRUSign.

Network coding has been shown to optimally use bandwidth in a network, maximizing information flow but the scheme is very inherently vulnerable to pollution attacks by malicious nodes in the network. A node injecting garbage can quickly affect many receivers. The pollution of network packets spreads quickly since the output of honest node is corrupted if at least one of the incoming packets is corrupted.

In cryptography, a public key exchange algorithm is a cryptographic algorithm which allows two parties to create and share a secret key, which they can use to encrypt messages between themselves. The ring learning with errors key exchange (RLWE-KEX) is one of a new class of public key exchange algorithms that are designed to be secure against an adversary that possesses a quantum computer. This is important because some public key algorithms in use today will be easily broken by a quantum computer if such computers are implemented. RLWE-KEX is one of a set of post-quantum cryptographic algorithms which are based on the difficulty of solving certain mathematical problems involving lattices. Unlike older lattice based cryptographic algorithms, the RLWE-KEX is provably reducible to a known hard problem in lattices.