A forecast bias occurs when there are consistent differences between actual outcomes and previously generated forecasts of those quantities; that is, forecasts may have a general tendency to be too high or too low. A desirable property of a good forecast is that it is not biased. [1]

As a quantitative measure, the "forecast bias" can be specified as a probabilistic or statistical property of the forecast error. A typical measure of the bias of a forecasting procedure is the arithmetic mean or expected value of the forecast errors, but other measures of bias are possible. For example, a median-unbiased forecast would be one where half of the forecasts are too low and half too high: see Bias of an estimator.
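A minimal Python sketch of the two bias measures described above, the mean forecast error and the median forecast error. It assumes the sign convention error = actual - forecast (the text does not fix one; some sources use forecast - actual). The function names and the sample data are hypothetical.

```python
from statistics import mean, median

def forecast_errors(actuals, forecasts):
    # Assumed sign convention: error = actual - forecast, so a positive
    # error means the forecast was too low.
    if len(actuals) != len(forecasts):
        raise ValueError("actuals and forecasts must have the same length")
    return [a - f for a, f in zip(actuals, forecasts)]

def mean_bias(actuals, forecasts):
    """Arithmetic mean of the errors; 0 for a mean-unbiased forecast."""
    return mean(forecast_errors(actuals, forecasts))

def median_bias(actuals, forecasts):
    """Median error; 0 when half the forecasts are too low and half too high."""
    return median(forecast_errors(actuals, forecasts))

actuals = [100, 102, 98, 105, 110]
forecasts = [95, 100, 97, 100, 104]
print(mean_bias(actuals, forecasts))    # 3.8
print(median_bias(actuals, forecasts))  # 5
```

Every error in this made-up series is positive, so both measures flag a systematic tendency to forecast too low.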

In contexts where forecasts are being produced on a repetitive basis, the performance of the forecasting system may be monitored using a tracking signal, which provides an automatically maintained summary of the forecasts produced up to any given time. This can be used to monitor for deteriorating performance of the system.

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on observed data: thus the rule, the quantity of interest and its result are distinguished. For example, the sample mean is a commonly used estimator of the population mean.

In a set of measurements, accuracy is closeness of the measurements to a specific value, while precision is the closeness of the measurements to each other.
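To make the distinction concrete, the sketch below uses one simple, assumed way of quantifying each notion: accuracy as the distance between the average measurement and a known reference value, and precision as the sample standard deviation of the measurements. Other definitions exist; the function names and data are hypothetical.

```python
from statistics import mean, stdev

def offset_from_reference(measurements, reference):
    """Distance between the average measurement and the reference value
    (smaller means more accurate under this simple definition)."""
    return abs(mean(measurements) - reference)

def spread(measurements):
    """Sample standard deviation (smaller means more precise)."""
    return stdev(measurements)

reference = 10.0
tight_but_off = [12.1, 12.0, 11.9, 12.0]     # precise but not accurate
centered_but_loose = [9.0, 11.0, 8.5, 11.5]  # accurate but not precise

for name, data in [("tight_but_off", tight_but_off),
                   ("centered_but_loose", centered_but_loose)]:
    print(name, round(offset_from_reference(data, reference), 3), round(spread(data), 3))
```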

Weather forecasting is the application of science and technology to predict the conditions of the atmosphere for a given location and time. People have attempted to predict the weather informally for millennia and formally since the 19th century. Weather forecasts are made by collecting quantitative data about the current state of the atmosphere, land, and ocean and using meteorology to project how the atmosphere will change at a given place.

A sensor is a device that produces an output signal for the purpose of sensing a physical phenomenon.

Forecasting is the process of making predictions based on past and present data and most commonly by analysis of trends. A commonplace example might be estimation of some variable of interest at some specified future date. Prediction is a similar, but more general term. Both might refer to formal statistical methods employing time series, cross-sectional or longitudinal data, or alternatively to less formal judgmental methods. Usage can differ between areas of application: for example, in hydrology the terms "forecast" and "forecasting" are sometimes reserved for estimates of values at certain specific future times, while the term "prediction" is used for more general estimates, such as the number of times floods will occur over a long period.

An oxygen sensor (or lambda sensor, where lambda refers to air–fuel equivalence ratio, usually denoted by λ) is an electronic device that measures the proportion of oxygen (O2) in the gas or liquid being analysed.

An electricity meter, electric meter, electrical meter, energy meter, or kilowatt-hour meter is a device that measures the amount of electric energy consumed by a residence, a business, or an electrically powered device.

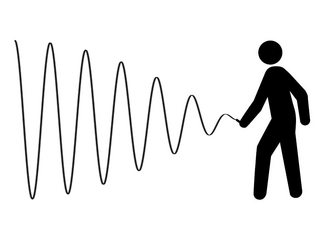

When describing a periodic function in the time domain, the DC bias, DC component, DC offset, or DC coefficient is the mean amplitude of the waveform. If the mean amplitude is zero, there is no DC bias. A waveform with no DC bias is known as a DC balanced or DC free waveform.
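Since the DC component is the mean amplitude, it can be estimated from samples by averaging them. A minimal sketch, assuming the samples cover a whole number of periods (otherwise the periodic part does not average out); the test signal is hypothetical.

```python
import math

def dc_component(samples):
    """Mean amplitude of a sampled waveform (its DC offset)."""
    if not samples:
        raise ValueError("need at least one sample")
    return sum(samples) / len(samples)

# Hypothetical signal: unit-amplitude sine plus a 0.5 offset, sampled over
# exactly one period so that the sine term averages to (almost) zero.
n = 1000
signal = [0.5 + math.sin(2 * math.pi * k / n) for k in range(n)]
dc = dc_component(signal)
balanced = [s - dc for s in signal]  # subtracting the mean makes it DC free

print(round(dc, 6))  # 0.5
```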

Safety stock is a term used by logisticians to describe a level of extra stock that is maintained to mitigate the risk of stockouts caused by uncertainties in supply and demand. Adequate safety stock levels permit business operations to proceed according to their plans. Safety stock is held when uncertainty exists in demand, supply, or manufacturing yield, and serves as insurance against stockouts.
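One common textbook way to size safety stock, not stated in the text above and shown here only as an illustration, is SS = z × σ_d × √L. This assumes demand per period is independent and normally distributed with standard deviation σ_d, that the lead time L is fixed, and that z is the standard normal quantile for the target cycle service level. The function name and inputs are hypothetical.

```python
import math
from statistics import NormalDist

def safety_stock(service_level, demand_std_per_period, lead_time_periods):
    """Safety stock under an assumed model: i.i.d. normal demand per period
    and a fixed lead time. Uses SS = z * sigma_d * sqrt(L)."""
    if not 0 < service_level < 1:
        raise ValueError("service_level must be strictly between 0 and 1")
    z = NormalDist().inv_cdf(service_level)  # service factor for the target level
    return z * demand_std_per_period * math.sqrt(lead_time_periods)

# Hypothetical inputs: 95% cycle service level, daily demand standard
# deviation of 20 units, 9-day lead time.
print(round(safety_stock(0.95, 20, 9), 1))  # 98.7
```

Uncertain lead times or correlated demand call for different formulas; this sketch covers only the simplest case.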

A pairs trade or pair trading is a market-neutral trading strategy that aims to let traders profit under virtually any market conditions: uptrend, downtrend, or sideways movement. This strategy is categorized as a statistical arbitrage and convergence trading strategy. Pair trading was pioneered by Gerry Bamberger and later advanced by Nunzio Tartaglia's quantitative group at Morgan Stanley in the 1980s.
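A minimal sketch of one simple, commonly described way to turn the convergence idea into signals. It computes the spread A - h·B with an assumed hedge ratio h, standardizes it as a z-score, and trades against deviations beyond an assumed entry threshold of 2 standard deviations, betting that the spread reverts. The hedge ratio, threshold, function names, and prices are all hypothetical. Using full-sample mean and standard deviation also introduces look-ahead; a realistic backtest would use rolling estimates.

```python
from statistics import mean, stdev

def spread_zscores(prices_a, prices_b, hedge_ratio=1.0):
    """Z-scores of the spread A - hedge_ratio * B (full-sample estimates)."""
    if len(prices_a) != len(prices_b) or len(prices_a) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    spread = [a - hedge_ratio * b for a, b in zip(prices_a, prices_b)]
    mu, sigma = mean(spread), stdev(spread)
    if sigma == 0:
        raise ValueError("spread is constant; z-scores are undefined")
    return [(s - mu) / sigma for s in spread]

def pair_signal(z, entry=2.0):
    """Hypothetical rule: trade against large deviations, expecting convergence."""
    if z > entry:
        return "short A, long B"  # A looks rich relative to B
    if z < -entry:
        return "long A, short B"  # A looks cheap relative to B
    return "no position"

prices_a = [10.0] * 9 + [14.0]
prices_b = [10.0] * 10
zs = spread_zscores(prices_a, prices_b)
print(pair_signal(zs[-1]))  # short A, long B
```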

The bullwhip effect is a distribution channel phenomenon in which demand forecasts yield supply chain inefficiencies. It refers to increasing swings in inventory in response to shifts in consumer demand as one moves further up the supply chain. The concept first appeared in Jay Forrester's Industrial Dynamics (1961) and thus it is also known as the Forrester effect. It has been described as “the observed propensity for material orders to be more variable than demand signals and for this variability to increase the further upstream a company is in a supply chain”.
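The "orders more variable than demand" description suggests a simple measure often used in the literature: the ratio of the variance of orders a stage places upstream to the variance of the demand it receives, where a ratio above 1 indicates amplification. A minimal sketch; the function name and weekly figures are hypothetical.

```python
from statistics import variance

def bullwhip_ratio(orders, demand):
    """Variance of the orders a stage places upstream divided by the variance
    of the demand it receives; values above 1 indicate amplification."""
    demand_var = variance(demand)
    if demand_var == 0:
        raise ValueError("demand variance is zero; ratio is undefined")
    return variance(orders) / demand_var

# Hypothetical weekly figures for one retailer.
demand = [100, 104, 98, 102, 100, 96]
orders = [100, 112, 92, 108, 100, 88]
print(bullwhip_ratio(orders, demand))  # 10.4
```

Computing the same ratio at each stage (retailer, wholesaler, manufacturer) would show whether variability keeps growing further upstream.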

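The mean absolute percentage error (MAPE), also known as mean absolute percentage deviation (MAPD), is a measure of the prediction accuracy of a forecasting method in statistics. It usually expresses accuracy as a ratio defined by the formula:

$$\mathrm{MAPE} = \frac{100\%}{n} \sum_{t=1}^{n} \left| \frac{A_t - F_t}{A_t} \right|$$

where $A_t$ is the actual value, $F_t$ is the forecast value, and $n$ is the number of forecast points.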
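As a minimal sketch, MAPE can be computed as 100 times the mean of |(A_t - F_t) / A_t| over all points. The guard against zero actual values reflects the fact that the ratio is undefined there. The function name and data are hypothetical.

```python
def mape(actuals, forecasts):
    """Mean absolute percentage error, returned in percent."""
    if not actuals or len(actuals) != len(forecasts):
        raise ValueError("need two non-empty sequences of equal length")
    if any(a == 0 for a in actuals):
        raise ValueError("MAPE is undefined when an actual value is zero")
    total = sum(abs((a - f) / a) for a, f in zip(actuals, forecasts))
    return 100 * total / len(actuals)

print(round(mape([100, 200, 400], [110, 190, 400]), 6))  # 5.0
```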

Capacity management's goal is to ensure that information technology resources are sufficient to meet upcoming business requirements cost-effectively. One common interpretation of capacity management is described in the ITIL framework. ITIL version 3 views capacity management as comprising three sub-processes: business capacity management, service capacity management, and component capacity management.

The quantitative precipitation forecast (QPF) is the expected amount of melted precipitation accumulated over a specified time period over a specified area. A QPF is created when precipitation amounts reaching a minimum threshold are expected during the forecast's valid period. Valid periods of precipitation forecasts are normally synoptic hours such as 0000, 0600, 1200 and 1800 GMT. Terrain is considered in QPFs by use of topography or based upon climatological precipitation patterns from observations with fine detail. Starting in the mid-to-late 1990s, QPFs were used within hydrologic forecast models to simulate impacts on rivers throughout the United States. Forecast models show significant sensitivity to humidity levels within the planetary boundary layer, or in the lowest levels of the atmosphere, which decreases with height. QPFs can be generated on a quantitative basis, forecasting amounts, or on a qualitative basis, forecasting the probability of a specific amount. Radar imagery forecasting techniques show higher skill than model forecasts within 6 to 7 hours of the time of the radar image. The forecasts can be verified through use of rain gauge measurements, weather radar estimates, or a combination of both. Various skill scores can be determined to measure the value of the rainfall forecast.
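One skill score used for threshold-based precipitation verification is the critical success index (CSI, also called the threat score). For a chosen threshold, it counts hits, misses, and false alarms and returns hits / (hits + misses + false alarms), so 1 is perfect and 0 means no hits. The sketch below picks this score as an example; the threshold, function name, and paired forecast/gauge values are hypothetical.

```python
def critical_success_index(forecast_mm, observed_mm, threshold_mm):
    """CSI = hits / (hits + misses + false alarms) for the event
    'precipitation >= threshold_mm'. Correct negatives are ignored."""
    if len(forecast_mm) != len(observed_mm):
        raise ValueError("forecast and observed series must have equal length")
    hits = misses = false_alarms = 0
    for f, o in zip(forecast_mm, observed_mm):
        forecast_event = f >= threshold_mm
        observed_event = o >= threshold_mm
        if forecast_event and observed_event:
            hits += 1
        elif observed_event:
            misses += 1
        elif forecast_event:
            false_alarms += 1
    denominator = hits + misses + false_alarms
    return hits / denominator if denominator else float("nan")

# Hypothetical 24-hour totals (mm) at five rain gauges.
forecast = [5, 12, 0, 30, 8]
observed = [7, 3, 0, 25, 15]
print(round(critical_success_index(forecast, observed, 10), 3))  # 0.333
```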

Demand forecasting is a field of predictive analytics which tries to understand and predict customer demand to optimize supply decisions by corporate supply chain and business management. Demand forecasting methods are divided into two major categories, qualitative and quantitative methods. Qualitative methods are based on expert opinion and information gathered from the field, while quantitative methods use data, and especially historical sales data, as well as statistical techniques from test markets. Demand forecasting may be used in production planning, inventory management, and at times in assessing future capacity requirements, or in making decisions on whether to enter a new market.
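As an illustration of a quantitative method applied to historical sales data, the sketch below uses simple exponential smoothing, chosen here as one basic example rather than as a method the text prescribes. Each new observation is blended into a running level with weight alpha, and the final level is the forecast for the next period. The initialization (first observation), alpha, and sales figures are assumptions.

```python
def ses_forecast(history, alpha):
    """One-step-ahead forecast from simple exponential smoothing."""
    if not history:
        raise ValueError("history must contain at least one observation")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]  # assumed initialization: the first observation
    for y in history[1:]:
        level = alpha * y + (1 - alpha) * level  # blend new data with old level
    return level

weekly_sales = [100, 110, 105]  # hypothetical data
print(ses_forecast(weekly_sales, alpha=0.5))  # 105.0
```

A larger alpha reacts faster to recent changes in demand; a smaller one smooths out more noise.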

SARAL is a cooperative altimetry technology mission of the Indian Space Research Organisation (ISRO) and the Centre National d'Études Spatiales (CNES). SARAL performs altimetric measurements designed to study ocean circulation and sea surface elevation.

In machine learning, particularly in the creation of artificial neural networks, ensemble averaging is the process of creating multiple models and combining them to produce a desired output, as opposed to creating just one model. Frequently an ensemble of models performs better than any individual model, because the various errors of the models "average out."
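A minimal sketch of equal-weight ensemble averaging: the predictions of several models are averaged point by point, and the mean absolute error of each model is compared with that of the ensemble. The three "models" are just hand-picked prediction lists whose errors partly cancel, so this illustrates the "average out" effect rather than proving it holds in general.

```python
from statistics import mean

def ensemble_average(predictions_per_model):
    """Point-by-point mean of several models' predictions (equal weights)."""
    return [mean(values) for values in zip(*predictions_per_model)]

def mean_absolute_error(predictions, truth):
    return mean(abs(p - t) for p, t in zip(predictions, truth))

truth = [10, 20, 30]
model_a = [12, 18, 33]
model_b = [8, 21, 28]
model_c = [11, 21, 29]
combined = ensemble_average([model_a, model_b, model_c])

for name, preds in [("a", model_a), ("b", model_b), ("c", model_c), ("ensemble", combined)]:
    print(name, round(mean_absolute_error(preds, truth), 3))
```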

In statistics and management science, a tracking signal monitors any forecasts that have been made in comparison with actuals, and warns when there are unexpected departures of the outcomes from the forecasts. Forecasts can relate to sales, inventory, or anything pertaining to an organization’s future demand.
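A commonly used form of the tracking signal is the running sum of forecast errors divided by the mean absolute deviation (MAD) of those errors. It stays near zero when errors alternate in sign and grows in magnitude when they persistently point one way. The sketch below assumes error = actual - forecast and a hypothetical control limit of 4; in practice the limit is chosen by the organization.

```python
def tracking_signals(actuals, forecasts):
    """Running tracking signal: cumulative error divided by the mean absolute
    deviation (MAD) of the errors seen so far (error = actual - forecast)."""
    signals = []
    running_error = 0.0
    running_abs_error = 0.0
    for t, (a, f) in enumerate(zip(actuals, forecasts), start=1):
        error = a - f
        running_error += error
        running_abs_error += abs(error)
        mad = running_abs_error / t
        signals.append(running_error / mad if mad else 0.0)
    return signals

THRESHOLD = 4.0  # hypothetical control limit
actuals = [100, 105, 110, 108, 112]
forecasts = [100, 100, 100, 100, 100]
ts = tracking_signals(actuals, forecasts)
print(ts)  # [0.0, 2.0, 3.0, 4.0, 5.0]
print([t for t, s in enumerate(ts, start=1) if abs(s) > THRESHOLD])  # [5]
```

Here the forecast is persistently too low, so the signal climbs steadily and crosses the limit in period 5.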

In statistics, the mean absolute scaled error (MASE) is a measure of the accuracy of forecasts. It is the mean absolute error of the forecast values, divided by the mean absolute error of the in-sample one-step naive forecast. It was proposed in 2005 by statistician Rob J. Hyndman and Professor of Decision Sciences Anne B. Koehler, who described it as a "generally applicable measurement of forecast accuracy without the problems seen in the other measurements." The mean absolute scaled error has favorable properties when compared to other methods for calculating forecast errors, such as root-mean-square deviation, and is therefore recommended for determining comparative accuracy of forecasts.
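Following the definition above, a minimal sketch of the non-seasonal MASE: the out-of-sample mean absolute error is divided by the in-sample mean absolute error of the one-step naive forecast, which predicts each value as the previous one. (Seasonal variants replace the one-step lag with a seasonal lag; that is not shown.) The function name and series are hypothetical.

```python
def mase(training, actuals, forecasts):
    """Mean absolute scaled error (non-seasonal version)."""
    if len(training) < 2:
        raise ValueError("need at least two training observations")
    if not actuals or len(actuals) != len(forecasts):
        raise ValueError("need non-empty actuals and forecasts of equal length")
    # In-sample MAE of the one-step naive forecast (predict the previous value).
    naive_errors = [abs(training[i] - training[i - 1]) for i in range(1, len(training))]
    scale = sum(naive_errors) / len(naive_errors)
    if scale == 0:
        raise ValueError("training series is constant; MASE is undefined")
    mae = sum(abs(a - f) for a, f in zip(actuals, forecasts)) / len(actuals)
    return mae / scale

training = [10, 12, 11, 13, 14]
print(round(mase(training, [15, 16], [14, 17]), 4))  # 0.6667
```

A value below 1 means the forecast's average error is smaller than that of the in-sample naive forecast.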

Automation bias is the propensity for humans to favor suggestions from automated decision-making systems and to ignore contradictory information produced without automation, even if it is correct. Automation bias has its roots in social psychology research that found a bias in human-human interaction: people assign more positive evaluations to decisions made by humans than to a neutral object. The same type of positivity bias has been found for human-automation interaction, where automated decisions are rated more positively than neutral. This has become a growing problem for decision-making as intensive care units, nuclear power plants, and aircraft cockpits have increasingly integrated computerized system monitors and decision aids, mostly to factor out possible human error. Errors of automation bias tend to occur when decision-making depends on computers or other automated aids and the human is in an observer role but able to make decisions. Examples of automation bias range from urgent matters such as flying a plane on autopilot to mundane ones such as the use of spell-checking programs.