| Heritrix | |

|---|---|

| | |

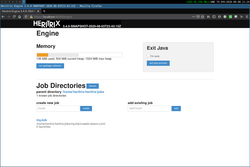

Screenshot of Heritrix Admin Console. | |

| Stable release | |

| Repository | |

| Written in | Java |

| Operating system | Linux/Unix-like/Windows (unsupported) |

| Type | Web crawler |

| License | Apache License |

| Website | github |

Heritrix is a web crawler designed for web archiving. It was originally developed jointly by the Internet Archive, the National Library of Norway and the National Library of Iceland. [2] Heritrix is free software, released under the Apache License, and is written in Java. Its main interface is accessed through a web browser, and a command-line tool can optionally be used to initiate crawls.


Heritrix was developed jointly by the Internet Archive and the Nordic national libraries, based on specifications written in early 2003. The first official release came out in January 2004, and the crawler has since been continually improved by Internet Archive employees and other interested parties.

For many years, Heritrix was not the main crawler used to gather content for the Internet Archive's web collection. [3] As of 2011, the largest contributor to the collection was Alexa Internet. [3] Alexa crawls the web for its own purposes, [3] using a crawler named ia_archiver, and then donates the material to the Internet Archive. [3] The Internet Archive did some crawling of its own with Heritrix, but only on a smaller scale. [3]

Starting in 2008, the Internet Archive began making performance improvements so that it could carry out its own wide-scale crawling, and it now collects most of its content itself. [4] [failed verification]