Examples and applications

A linear program may be defined by a system of d non-negative real variables, subject to n linear inequality constraints, together with a non-negative linear objective function to be minimized. This may be placed into the framework of LP-type problems by letting S be the set of constraints, and defining f(A) (for a subset A of the constraints) to be the minimum objective function value of the smaller linear program defined by A. With suitable general position assumptions (in order to prevent multiple solution points having the same optimal objective function value), this satisfies the monotonicity and locality requirements of an LP-type problem, and has combinatorial dimension equal to the number d of variables. [1] Similarly, an integer program (consisting of a collection of linear constraints and a linear objective function, as in a linear program, but with the additional restriction that the variables must take on only integer values) satisfies both the monotonicity and locality properties of an LP-type problem, with the same general position assumptions as for linear programs. Theorems of Bell (1977) and Scarf (1977) show that, for an integer program with d variables, the combinatorial dimension is at most 2^d. [1]
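As an illustration of how f(A) is defined for a linear program, the following minimal sketch evaluates f by brute force for d = 2 variables and checks monotonicity on a small example. The constraint encoding `(a1, a2, b)` (meaning a1·x + a2·y ≥ b), the objective x + y, and the helper names `f`, `subsets`, and `is_monotone` are assumptions made for this example, not part of the LP-type framework itself. The sketch compares only objective values and does not enforce the general position assumptions.

```python
from fractions import Fraction
from itertools import combinations

# Non-negativity of the two variables (x >= 0, y >= 0), always in force.
NONNEG = [(1, 0, 0), (0, 1, 0)]

def f(A, c=(1, 1)):
    """Minimum of c[0]*x + c[1]*y subject to x, y >= 0 and
    a1*x + a2*y >= b for every (a1, a2, b) in A (hypothetical encoding)."""
    cons = list(A) + NONNEG
    best = None
    # With c >= 0 and x, y >= 0, a feasible program attains its minimum
    # at a vertex, so it suffices to try every crossing of two boundary lines.
    for (a1, a2, b), (p1, p2, q) in combinations(cons, 2):
        det = a1 * p2 - a2 * p1
        if det == 0:
            continue  # parallel boundary lines never cross
        x = Fraction(b * p2 - a2 * q, det)  # Cramer's rule
        y = Fraction(a1 * q - b * p1, det)
        if all(u * x + v * y >= w for u, v, w in cons):
            value = c[0] * x + c[1] * y
            if best is None or value < best:
                best = value
    return float("inf") if best is None else best  # inf marks infeasibility

def subsets(S):
    """All subsets of S, as tuples."""
    for k in range(len(S) + 1):
        yield from combinations(S, k)

def is_monotone(S):
    """Check f(A) <= f(B) for every pair of subsets A of B of S."""
    return all(f(A) <= f(B) for B in subsets(S) for A in subsets(B))

# Example: minimize x + y subject to x + 2y >= 4, 3x + y >= 6, x + y >= 1.
S = [(1, 2, 4), (3, 1, 6), (1, 1, 1)]
```

In this example the third constraint is redundant, so the first two constraints alone already give the same value as the whole set: `f(S[:2]) == f(S)`. That two-element subset plays the role of a basis, matching the combinatorial dimension d = 2.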

Many natural optimization problems in computational geometry are LP-type:

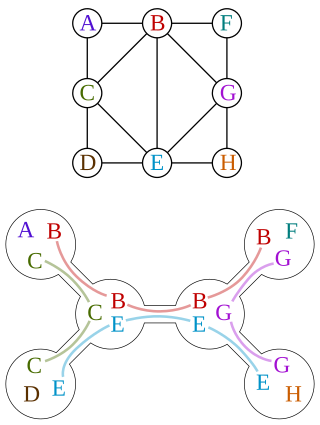

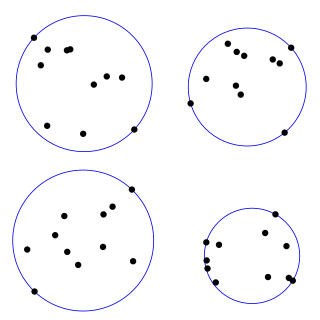

- The smallest circle problem is the problem of finding the minimum radius of a circle containing a given set of n points in the plane. It satisfies monotonicity (adding more points can only make the circle larger) and locality (if the smallest circle for set A contains B and x, then the same circle also contains B ∪ {x}). Because the smallest circle is always determined by some three points, the smallest circle problem has combinatorial dimension three, even though it is defined using two-dimensional Euclidean geometry. [2] More generally, the smallest enclosing ball of points in d dimensions forms an LP-type problem of combinatorial dimension d + 1. The smallest circle problem can be generalized to the smallest ball enclosing a set of balls, [3] to the smallest ball that touches or surrounds each of a set of balls, [4] to the weighted 1-center problem, [5] or to similar smallest enclosing ball problems in non-Euclidean spaces such as the space with distances defined by Bregman divergence. [6] The related problem of finding the smallest enclosing ellipsoid is also an LP-type problem, but with a larger combinatorial dimension, d(d + 3)/2. [7]

- Let K_0, K_1, ... be a sequence of n convex sets in d-dimensional Euclidean space, and suppose that we wish to find the longest prefix of this sequence that has a common intersection point. This may be expressed as an LP-type problem in which f(A) = −i where K_i is the first member of A that does not belong to an intersecting prefix of A, and where f(A) = −n if there is no such member. The combinatorial dimension of this system is d + 1. [8]

- Suppose we are given a collection of axis-aligned rectangular boxes in three-dimensional space, and wish to find a line directed into the positive octant of space that cuts through all the boxes. This may be expressed as an LP-type problem with combinatorial dimension 4. [9]

- The problem of finding the closest distance between two convex polytopes, specified by their sets of vertices, may be represented as an LP-type problem. In this formulation, the set S is the set of all vertices in both polytopes, and the function value f(A) is the negation of the smallest distance between the convex hulls of the two parts of A, one part consisting of the vertices in A from each polytope. The combinatorial dimension of the problem is d + 1 if the two polytopes are disjoint, or d + 2 if they have a nonempty intersection. [1]

- Let S = {f_0, f_1, ...} be a set of quasiconvex functions. Then the pointwise maximum max_i f_i is itself quasiconvex, and the problem of finding the minimum value of max_i f_i is an LP-type problem. It has combinatorial dimension at most 2d + 1, where d is the dimension of the domain of the functions, but for sufficiently smooth functions the combinatorial dimension is smaller, at most d + 1. Many other LP-type problems can also be expressed using quasiconvex functions in this way; for instance, the smallest enclosing circle problem is the problem of minimizing max_i f_i where each of the functions f_i measures the Euclidean distance from one of the given points. [10]
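The smallest circle example from the list above can be made concrete with a short brute-force sketch. It evaluates f(A) as the radius of the smallest circle enclosing a non-empty point set A, by trying every circle determined by one, two, or three of the points, and then searches for a basis of at most three points. The function names (`radius`, `basis`, and the two circle helpers) and the tolerance `EPS` are assumptions made for this illustration. The exhaustive search is chosen for clarity and is not one of the efficient LP-type algorithms.

```python
import math
from itertools import combinations

EPS = 1e-9  # floating-point tolerance (an assumption of this sketch)

def circle_from_two(p, q):
    """Circle having the segment pq as a diameter: (center, radius)."""
    center = ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)
    return center, math.dist(p, q) / 2

def circle_from_three(p, q, r):
    """Circumcircle of p, q, r, or None if the points are collinear."""
    (ax, ay), (bx, by), (cx, cy) = p, q, r
    d = 2 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if abs(d) < EPS:
        return None
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    return (ux, uy), math.dist((ux, uy), p)

def radius(points):
    """f(A): radius of the smallest circle enclosing a non-empty point set."""
    points = list(points)
    candidates = [(p, 0.0) for p in points]
    candidates += [circle_from_two(p, q) for p, q in combinations(points, 2)]
    for triple in combinations(points, 3):
        circle = circle_from_three(*triple)
        if circle is not None:
            candidates.append(circle)
    # Keep only candidate circles that enclose every point; take the smallest.
    return min(r for center, r in candidates
               if all(math.dist(center, p) <= r + EPS for p in points))

def basis(points):
    """A smallest subset (at most three points) with the same value of f."""
    target = radius(points)
    for k in (1, 2, 3):
        for B in combinations(points, k):
            if abs(radius(B) - target) < EPS:
                return B

pts = [(0, 0), (4, 0), (2, 3), (2, 1)]
```

For `pts`, the three corner points form an acute triangle, so `basis(pts)` has three elements and the interior point (2, 1) does not affect the value. For the points (0, 0), (4, 0), (2, 1), two points already suffice, since the circle with diameter from (0, 0) to (4, 0) contains the third.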

LP-type problems have also been used to determine the optimal outcomes of certain games in algorithmic game theory, [11] improve vertex placement in finite element method meshes, [12] solve facility location problems, [13] analyze the time complexity of certain exponential-time search algorithms, [14] and reconstruct the three-dimensional positions of objects from their two-dimensional images. [15]