A relational database (RDB) is a database based on the relational model of data, as proposed by E. F. Codd in 1970.

A distributed hash table (DHT) is a distributed system that provides a lookup service similar to a hash table. Key–value pairs are stored in a DHT, and any participating node can efficiently retrieve the value associated with a given key. The main advantage of a DHT is that nodes can be added or removed with minimum work around re-distributing keys. Keys are unique identifiers which map to particular values, which in turn can be anything from addresses, to documents, to arbitrary data. Responsibility for maintaining the mapping from keys to values is distributed among the nodes, in such a way that a change in the set of participants causes a minimal amount of disruption. This allows a DHT to scale to extremely large numbers of nodes and to handle continual node arrivals, departures, and failures.

Extract, transform, load (ETL) is a three-phase computing process where data is extracted from an input source, transformed, and loaded into an output data container. The data can be collected from one or more sources and it can also be output to one or more destinations. ETL processing is typically executed using software applications but it can also be done manually by system operators. ETL software typically automates the entire process and can be run manually or on recurring schedules either as single jobs or aggregated into a batch of jobs.

In distributed data storage, a P-Grid is a self-organizing structured peer-to-peer system, which can accommodate arbitrary key distributions, still providing storage load-balancing and efficient search by using randomized routing.

MySQL Cluster, also known as MySQL Ndb Cluster is a technology providing shared-nothing clustering and auto-sharding for the MySQL database management system. It is designed to provide high availability and high throughput with low latency, while allowing for near linear scalability. MySQL Cluster is implemented through the NDB or NDBCLUSTER storage engine for MySQL.

In a database, a table is a collection of related data organized in table format; consisting of columns and rows.

A database index is a data structure that improves the speed of data retrieval operations on a database table at the cost of additional writes and storage space to maintain the index data structure. Indexes are used to quickly locate data without having to search every row in a database table every time said table is accessed. Indexes can be created using one or more columns of a database table, providing the basis for both rapid random lookups and efficient access of ordered records.

In computer science, data is any sequence of one or more symbols; datum is a single symbol of data. Data requires interpretation to become information. Digital data is data that is represented using the binary number system of ones (1) and zeros (0), instead of analog representation. In modern (post-1960) computer systems, all data is digital.

SAP IQ is a column-based, petabyte scale, relational database software system used for business intelligence, data warehousing, and data marts. Produced by Sybase Inc., now an SAP company, its primary function is to analyze large amounts of data in a low-cost, highly available environment. SAP IQ is often credited with pioneering the commercialization of column-store technology.

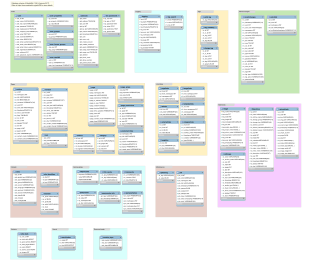

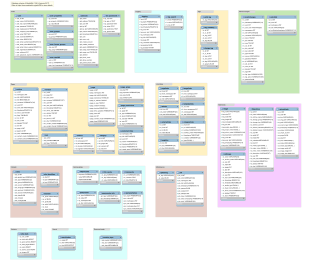

A database model is a type of data model that determines the logical structure of a database. It fundamentally determines in which manner data can be stored, organized and manipulated. The most popular example of a database model is the relational model, which uses a table-based format.

Database tables and indexes may be stored on disk in one of a number of forms, including ordered/unordered flat files, ISAM, heap files, hash buckets, or B+ trees. Each form has its own particular advantages and disadvantages. The most commonly used forms are B-trees and ISAM. Such forms or structures are one aspect of the overall schema used by a database engine to store information.

Apache Cassandra is a free and open-source database management system designed to handle large volumes of data across multiple commodity servers. The system prioritizes availability and scalability over consistency, making it particularly suited for systems with high write throughput requirements due to its LSM tree indexing storage layer. As a wide-column database, Cassandra supports flexible schemas and efficiently handles data models with numerous sparse columns. The system is optimized for applications with well-defined data access patterns that can be incorporated into the schema design. Cassandra supports computer clusters which may span multiple data centers, featuring asynchronous and masterless replication. It enables low-latency operations for all clients and incorporates Amazon's Dynamo distributed storage and replication techniques, combined with Google's Bigtable data storage engine model.

A database shard, or simply a shard, is a horizontal partition of data in a database or search engine. Each shard may be held on a separate database server instance, to spread load.

The standard column family is a NoSQL object that contains columns of related data. It is a tuple (pair) that consists of a key–value pair, where the key is mapped to a value that is a set of columns. In analogy with relational databases, a standard column family is as a "table", each key–value pair being a "row". Each column is a tuple consisting of a column name, a value, and a timestamp. In a relational database table, this data would be grouped together within a table with other non-related data.

Within database management systems, the record columnar file or RCFile is a data placement structure that determines how to store relational tables on computer clusters. It is designed for systems using the MapReduce framework. The RCFile structure includes a data storage format, data compression approach, and optimization techniques for data reading. It is able to meet all the four requirements of data placement: (1) fast data loading, (2) fast query processing, (3) highly efficient storage space utilization, and (4) a strong adaptivity to dynamic data access patterns.

Sherpa is a cloud storage platform developed by Yahoo!. It is a hosted, distributed, and geographically replicated key-value data store. The service is a NoSQL system that address the scalability, availability, and latency needs of the conglomerate's websites. Sherpa has abilities such as elastic growth, multi-tenancy, global footprint for local low-latency access, asynchronous replication, representational state transfer (REST) based web service APIs, novel per-record consistency knobs, high availability, compression, secondary indexes, and record-level replication.

Amazon DynamoDB is a managed NoSQL database service provided by Amazon Web Services (AWS). It supports key-value and document data structures and is designed to handle a wide range of applications requiring scalability and performance.

Oracle NoSQL Database is a NoSQL-type distributed key-value database from Oracle Corporation. It provides transactional semantics for data manipulation, horizontal scalability, and simple administration and monitoring.

Azure Cosmos DB is a globally distributed, multi-model database service offered by Microsoft. It is designed to provide high availability, scalability, and low-latency access to data for modern applications. Unlike traditional relational databases, Cosmos DB is a NoSQL and vector database, which means it can handle unstructured, semi-structured, structured, and vector data types.

Database scalability is the ability of a database to handle changing demands by adding/removing resources. Databases use a host of techniques to cope. According to Marc Brooker: "a system is scalable in the range where marginal cost of additional workload is nearly constant." Serverless technologies fit this definition but you need to consider total cost of ownership not just the infra cost.