In database systems, consistency refers to the requirement that any given database transaction must change affected data only in allowed ways. Any data written to the database must be valid according to all defined rules, including constraints, cascades, triggers, and any combination thereof. This does not guarantee correctness of the transaction in all ways the application programmer might have wanted but merely that any programming errors cannot result in the violation of any defined database constraints.

Multi-master replication is a method of database replication which allows data to be stored by a group of computers, and updated by any member of the group. All members are responsive to client data queries. The multi-master replication system is responsible for propagating the data modifications made by each member to the rest of the group and resolving any conflicts that might arise between concurrent changes made by different members.

Replication in computing involves sharing information so as to ensure consistency between redundant resources, such as software or hardware components, to improve reliability, fault-tolerance, or accessibility.

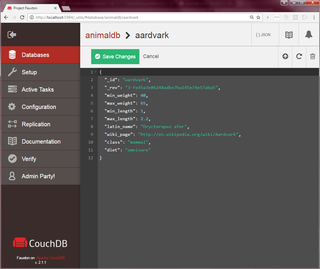

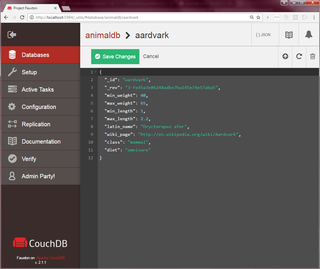

Apache CouchDB is an open-source document-oriented NoSQL database, implemented in Erlang.

Amazon SimpleDB is a distributed database written in Erlang by Amazon.com. It is used as a web service in concert with Amazon Elastic Compute Cloud (EC2) and Amazon S3 and is part of Amazon Web Services. It was announced on December 13, 2007.

Apache Cassandra is a free and open-source database management system designed to handle large volumes of data across multiple commodity servers. Cassandra supports computer clusters and spans multiple data centers, featuring asynchronous and masterless replication. It enables low-latency operations for all clients and incorporates Amazon's Dynamo distributed storage and replication techniques, combined with Google's Bigtable data storage engine model.

NoSQL is an approach to database design that focuses on providing a mechanism for storage and retrieval of data that is modeled in means other than the tabular relations used in relational databases. Instead of the typical tabular structure of a relational database, NoSQL databases house data within one data structure. Since this non-relational database design does not require a schema, it offers rapid scalability to manage large and typically unstructured data sets. NoSQL systems are also sometimes called "Not only SQL" to emphasize that they may support SQL-like query languages or sit alongside SQL databases in polyglot-persistent architectures.

In database theory, the CAP theorem, also named Brewer's theorem after computer scientist Eric Brewer, states that any distributed data store can provide only two of the following three guarantees:

Couchbase Server, originally known as Membase, is a source-available, distributed multi-model NoSQL document-oriented database software package optimized for interactive applications. These applications may serve many concurrent users by creating, storing, retrieving, aggregating, manipulating and presenting data. In support of these kinds of application needs, Couchbase Server is designed to provide easy-to-scale key-value, or JSON document access, with low latency and high sustainability throughput. It is designed to be clustered from a single machine to very large-scale deployments spanning many machines.

Split-brain is a computer term, based on an analogy with the medical split-brain syndrome. It indicates data or availability inconsistencies originating from the maintenance of two separate data sets with overlap in scope, either because of servers in a network design, or a failure condition based on servers not communicating and synchronizing their data to each other. This last case is also commonly referred to as a network partition.

H-Store is an experimental database management system (DBMS). It was designed for online transaction processing applications. H-Store was developed by a team at Brown University, Carnegie Mellon University, the Massachusetts Institute of Technology, and Yale University in 2007 by researchers Michael Stonebraker, Sam Madden, Andy Pavlo and Daniel Abadi.

Sherpa is a cloud storage platform developed by Yahoo!. It is a hosted, distributed, and geographically replicated key-value data store. The service is a NoSQL system that address the scalability, availability, and latency needs of the conglomerate's websites. Sherpa has abilities such as elastic growth, multi-tenancy, global footprint for local low-latency access, asynchronous replication, representational state transfer (REST) based web service APIs, novel per-record consistency knobs, high availability, compression, secondary indexes, and record-level replication.

Amazon DynamoDB is a managed NoSQL database service provided by Amazon Web Services (AWS). It supports key-value and document data structures and is designed to handle a wide range of applications requiring scalability and performance.

Oracle NoSQL Database is a NoSQL-type distributed key-value database from Oracle Corporation. It provides transactional semantics for data manipulation, horizontal scalability, and simple administration and monitoring.

NewSQL is a class of relational database management systems that seek to provide the scalability of NoSQL systems for online transaction processing (OLTP) workloads while maintaining the ACID guarantees of a traditional database system.

Aerospike Database is a real-time, high performance NoSQL database. Designed for applications that cannot experience any downtime and require high read & write throughput. Aerospike is optimized to run on NVMe SSDs capable of efficiently storing large datasets. Aerospike can also be deployed as a fully in-memory cache database. Aerospike offers Key-Value, JSON Document, Graph data, and Vector Search models. Aerospike is an open source distributed NoSQL database management system, marketed by the company also named Aerospike.

FoundationDB is a free and open-source multi-model distributed NoSQL database developed by Apple Inc. with a shared-nothing architecture. The product was designed around a "core" database, with additional features supplied in "layers." The core database exposes an ordered key–value store with transactions. The transactions are able to read or write multiple keys stored on any machine in the cluster while fully supporting ACID properties. Transactions are used to implement a variety of data models via layers.

Azure Cosmos DB is a globally distributed, multi-model database service offered by Microsoft. It is designed to provide high availability, scalability, and low-latency access to data for modern applications. Unlike traditional relational databases, Cosmos DB is a NoSQL and vector database, which means it can handle unstructured, semi-structured, structured, and vector data types.

CockroachDB is a source-available distributed SQL database management system developed by Cockroach Labs. The relational functionality is built on top of a distributed, transactional, consistent key-value store that can survive a variety of different underlying infrastructure failures, and is wire-compatible with PostgreSQL which means users can take advantage of a wide range of drivers and tools from the extensive PostgreSQL ecosystem. A CockroachDB cluster consists of a number of nodes that can be spread across failure domains such as data centres or public cloud regions. A cluster can be scaled both horizontally and vertically. It can provide high levels of resilience and availability and can be run in a variety of environments such as bare metal, VMs, containers and Kubernetes, both in private data centers and in the cloud. CockroachDB gets its name from cockroaches, as they are known for being disaster-resistant.

A distributed SQL database is a single relational database which replicates data across multiple servers. Distributed SQL databases are strongly consistent and most support consistency across racks, data centers, and wide area networks including cloud availability zones and cloud geographic zones. Distributed SQL databases typically use the Paxos or Raft algorithms to achieve consensus across multiple nodes.