In computer programming, resource management refers to techniques for managing resources (components with limited availability).

Computer programs may manage their own resources by using features exposed by programming languages (Elder, Jackson & Liblit (2008) is a survey article contrasting different approaches), or may elect to have them managed by a host – an operating system or virtual machine – or another program.

Host-based management is known as resource tracking, and consists of cleaning up resource leaks: terminating access to resources that have been acquired but not released after use. This is known as reclaiming resources, and is analogous to garbage collection for memory. On many systems, the operating system reclaims resources after the process makes the exit system call.

Failing to release a resource once a program has finished using it is known as a resource leak, and is an issue in sequential computing. Multiple processes wishing to access a limited resource at the same time is known as resource contention, and is an issue in concurrent computing.

Resource management seeks to control access in order to prevent both of these situations.

Formally, resource management (preventing resource leaks) consists of ensuring that a resource is released if and only if it is successfully acquired. This general problem can be abstracted as "before, body, and after" code, which normally are executed in this order, with the condition that the after code is called if and only if the before code successfully completes, regardless of whether the body code executes successfully or not. This is also known as execute around [1] or a code sandwich, and occurs in various other contexts, [2] such as a temporary change of program state, or tracing entry and exit into a subroutine. However, resource management is the most commonly cited application. In aspect-oriented programming, such execute around logic is a form of advice.
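The "before, body, and after" condition can be sketched in Python with `contextlib.contextmanager`. This is a minimal illustration, not a canonical implementation: `execute_around`, `demo`, and the `before`/`after` callables are hypothetical names introduced here.

```python
from contextlib import contextmanager


@contextmanager
def execute_around(before, after):
    """Run `before`, then the caller's body, then `after`.

    `after` runs if and only if `before` completed without raising,
    whether or not the body raises. (Hypothetical helper.)
    """
    state = before()      # if this raises, `after` is never called
    try:
        yield state       # the body of the `with` block runs here
    finally:
        after(state)      # runs on normal exit, early return, or exception


def demo():
    """Record the order of events when the body fails."""
    log = []

    def before():
        log.append("before")
        return "token"

    def after(token):
        log.append("after")

    try:
        with execute_around(before, after):
            log.append("body")
            raise RuntimeError("body failed")
    except RuntimeError:
        log.append("error seen by caller")
    return log
```

Calling `demo()` shows that the after code still runs when the body raises, and that the exception then propagates to the caller as usual.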

In the terminology of control flow analysis, resource release must postdominate successful resource acquisition; [3] failure to ensure this is a bug, and a code path that violates this condition causes a resource leak. Resource leaks are often minor problems, generally not crashing the program, but instead causing some slowdown to the program or the overall system. [2] However, they may cause crashes – of either the program itself or other programs – due to resource exhaustion: if the system runs out of resources, acquisition requests fail. This can present a security bug if an attacker can cause resource exhaustion. Resource leaks may happen under regular program flow – such as simply forgetting to release a resource – or only in exceptional circumstances, such as when a resource is not released if there is an exception in another part of the program. Resource leaks are very frequently caused by early exit from a subroutine, either by a return statement, or by an exception raised either by the subroutine itself or by a deeper subroutine that it calls. While resource release due to return statements can be handled by carefully releasing within the subroutine before the return, exceptions cannot be handled without some additional language facility that guarantees that release code is executed.

More subtly, successful resource acquisition must dominate resource release, as otherwise the code will try to release a resource it has not acquired. The consequences of such an incorrect release range from being silently ignored to crashing the program or unpredictable behavior. These bugs generally manifest rarely, as they require resource allocation to first fail, which is generally an exceptional case. Further, the consequences may not be serious, as the program may already be crashing due to failure to acquire an essential resource. However, these can prevent recovery from the failure, or turn an orderly shutdown into a disorderly shutdown. This condition is generally ensured by first checking that the resource was successfully acquired before releasing it, either by having a boolean variable to record "successfully acquired" – which lacks atomicity if the resource is acquired but the flag variable fails to be updated, or conversely – or by the handle to the resource being a nullable type, where "null" indicates "not successfully acquired", which ensures atomicity.
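The nullable-handle check can be sketched as follows. `FakeResource`, `try_acquire`, and `use_resource` are hypothetical stand-ins for illustration; a real acquisition function may instead signal failure by raising an exception.

```python
class FakeResource:
    """Hypothetical stand-in for a real resource handle."""

    release_count = 0  # how many handles have been released so far

    def release(self):
        FakeResource.release_count += 1


def try_acquire(succeed):
    """Hypothetical acquisition that signals failure by returning None."""
    return FakeResource() if succeed else None


def use_resource(succeed):
    handle = None                      # None means "not successfully acquired"
    try:
        handle = try_acquire(succeed)
        if handle is None:
            return "acquisition failed"
        return "used resource"
    finally:
        # Release only what was actually acquired. The handle itself records
        # success, so there is no separate flag that could fall out of sync.
        if handle is not None:
            handle.release()
```

Because the handle doubles as the "acquired" marker, the release in the `finally` clause is skipped when acquisition fails and runs exactly once otherwise.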

In computer science, resource contention refers to a conflict that arises when multiple entities attempt to access a shared resource, like random access memory, disk storage, cache memory, internal buses, or external network devices.

Memory can be treated as a resource, but memory management is usually considered separately, primarily because memory allocation and deallocation is significantly more frequent than acquisition and release of other resources, such as file handles. Memory managed by an external system has similarities to both (internal) memory management (since it is memory) and resource management (since it is managed by an external system). Examples include memory managed via native code and used from Java (via Java Native Interface); and objects in the Document Object Model (DOM), used from JavaScript. In both these cases, the memory manager (garbage collector) of the runtime environment (virtual machine) is unable to manage the external memory (there is no shared memory management), and thus the external memory is treated as a resource, and managed analogously. However, cycles between systems (JavaScript referring to the DOM, referring back to JavaScript) can make management difficult or impossible.

A key distinction in resource management within a program is between lexical management and explicit management – whether a resource can be handled as having a lexical scope, such as a stack variable (lifetime is restricted to a single lexical scope, being acquired on entry to or within a particular scope, and released when execution exits that scope), or whether a resource must be explicitly allocated and released, such as a resource acquired within a function and then returned from it, which must then be released outside of the acquiring function. Lexical management, when applicable, allows a better separation of concerns and is less error-prone.

The basic approach to resource management is to acquire a resource, do something with it, then release it, yielding code of the form (illustrated with opening a file in Python):

    f = open(filename)
    ...
    f.close()

This is correct if the intervening ... code does not contain an early exit (return), the language does not have exceptions, and open is guaranteed to succeed. However, it causes a resource leak if there is a return or exception, and causes an incorrect release of an unacquired resource if open can fail.

There are two more fundamental problems: the acquisition-release pair is not adjacent (the release code must be written far from the acquisition code), and resource management is not encapsulated – the programmer must manually ensure that they are always paired. In combination, these mean that acquisition and release must be explicitly paired, but cannot be placed together, thus making it easy for these to not be paired correctly.

The resource leak can be resolved in languages that support a finally construction (like Python) by placing the body in a try clause, and the release in a finally clause:

    f = open(filename)
    try:
        ...
    finally:
        f.close()

This ensures correct release even if there is a return within the body or an exception thrown. Further, note that the acquisition occurs before the try clause, ensuring that the finally clause is only executed if the open code succeeds (without throwing an exception), assuming that "no exception" means "success" (as is the case for open in Python). If resource acquisition can fail without throwing an exception, such as by returning a form of null, it must also be checked before release, such as:

    f = open(filename)
    try:
        ...
    finally:
        if f:
            f.close()

While this ensures correct resource management, it fails to provide adjacency or encapsulation. In many languages there are mechanisms that provide encapsulation, such as the with statement in Python:

    with open(filename) as f:
        ...

The above techniques – unwind protection (finally) and some form of encapsulation – are the most common approach to resource management, found in various forms in C#, Common Lisp, Java, Python, Ruby, Scheme, and Smalltalk, [1] among others; they date to the late 1970s in the NIL dialect of Lisp; see Exception handling § History. There are many variations in the implementation, and there are also significantly different approaches.

The most common approach to resource management across languages is to use unwind protection, which is called when execution exits a scope – by execution running off the end of the block, returning from within the block, or an exception being thrown. This works for stack-managed resources, and is implemented in many languages, including C#, Common Lisp, Java, Python, Ruby, and Scheme. The main problems with this approach are that the release code (most commonly in a finally clause) may be very distant from the acquisition code (it lacks adjacency), and that the acquisition and release code must always be paired by the caller (it lacks encapsulation). These can be remedied either functionally, by using closures/callbacks/coroutines (Common Lisp, Ruby, Scheme), or by using an object that handles both the acquisition and release, and adding a language construct to call these methods when control enters and exits a scope (C# using, Java try-with-resources, Python with); see below.
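The functional remedy can be sketched in Python by passing the body as a callback, loosely in the spirit of the closure-based constructs in Common Lisp, Ruby, and Scheme. `call_with_open_file` and `make_sample_file` are hypothetical helpers written for this illustration.

```python
import os
import tempfile


def call_with_open_file(path, body):
    """Open `path`, hand the file object to `body`, and always close it.

    Acquisition and release live together in this one function, so callers
    cannot forget the release. (Hypothetical helper, for illustration only.)
    """
    f = open(path)          # acquire before the try clause
    try:
        return body(f)      # the caller's code runs here
    finally:
        f.close()           # runs on normal return or on an exception


def make_sample_file():
    """Create a small temporary file and return its path (demo helper)."""
    fd, path = tempfile.mkstemp(suffix=".txt")
    with os.fdopen(fd, "w") as tmp:
        tmp.write("first line\nsecond line\n")
    return path
```

A caller would write something like `call_with_open_file(path, lambda f: f.readline())`; the file is closed by the time the call returns, without the caller writing any release code.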

An alternative, more imperative approach, is to write asynchronous code in direct style: acquire a resource, and then in the next line have a deferred release, which is called when the scope is exited – synchronous acquisition followed by asynchronous release. This originated in C++ as the ScopeGuard class, by Andrei Alexandrescu and Petru Marginean in 2000, [4] with improvements by Joshua Lehrer, [5] and has direct language support in D via the scope keyword (ScopeGuardStatement), where it is one approach to exception safety, in addition to RAII (see below). [6] It has also been included in Go, as the defer statement. [7] This approach lacks encapsulation – one must explicitly match acquisition and release – but avoids having to create an object for each resource (code-wise, avoid writing a class for each type of resource).
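Python has no defer statement, but a similar shape can be approximated with `contextlib.ExitStack`, registering each release on the line after its acquisition. This is only an analogy to the D and Go constructs; `acquire`, `release`, `work`, and the `events` list are hypothetical names for the demonstration.

```python
from contextlib import ExitStack

events = []  # records the order of operations for the demonstration


def acquire(name):
    """Hypothetical acquisition of a named resource."""
    events.append(f"acquire {name}")
    return name


def release(name):
    """Hypothetical release of a named resource."""
    events.append(f"release {name}")


def work():
    with ExitStack() as deferred:
        a = acquire("a")
        deferred.callback(release, a)   # deferred release, next to acquisition
        b = acquire("b")
        deferred.callback(release, b)
        events.append("body")
        return a + b                    # early exit still runs the callbacks
```

The registered callbacks run in reverse order of registration when the `with` block is exited, so `b` is released before `a`. As the text notes, each acquisition must still be matched with its release by hand.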

In object-oriented programming, resources are encapsulated within objects that use them, such as a file object having a field whose value is a file descriptor (or more general file handle). This allows the object to use and manage the resource without users of the object needing to do so. However, there is a wide variety of ways that objects and resources can be related.

Firstly, there is the question of ownership: does an object have a resource?

Objects that have a resource can acquire and release it in different ways, at different points during the object lifetime; these occur in pairs, but in practice they are often not used symmetrically (see below).

Most common is to acquire a resource during object creation, and then explicitly release it via an instance method, commonly called dispose. This is analogous to traditional file management (acquire during open, release by explicit close), and is known as the dispose pattern. This is the basic approach used in several major modern object-oriented languages, including Java, C# and Python, and these languages have additional constructs to automate resource management. However, even in these languages, more complex object relationships result in more complex resource management, as discussed below.
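A minimal sketch of the dispose pattern in Python follows, where the conventional method name is `close` and the `with` statement automates the call. `LineCounter` is a hypothetical class invented for this example.

```python
import os
import tempfile


class LineCounter:
    """Hypothetical object that owns an open file."""

    def __init__(self, path):
        self._file = open(path)          # acquired during object creation

    def count(self):
        if self._file is None:
            raise ValueError("LineCounter is closed")
        self._file.seek(0)
        return sum(1 for _ in self._file)

    def close(self):
        """Explicit release; safe to call more than once."""
        if self._file is not None:
            self._file.close()
            self._file = None

    # Context-manager support lets `with` call close() automatically.
    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc, tb):
        self.close()
        return False                     # do not suppress exceptions
```

Used as `with LineCounter(path) as counter: ...`, the file is released when the block exits. The object itself remains reachable afterwards but no longer holds the resource, which is why `count` checks for the closed state.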

A natural approach is to make holding a resource be a class invariant: resources are acquired during object creation (specifically initialization), and released during object destruction (specifically finalization). This is known as Resource Acquisition Is Initialization (RAII), and ties resource management to object lifetime, ensuring that live objects have all necessary resources. Other approaches do not make holding the resource a class invariant, and thus objects may not have necessary resources (because they've not been acquired yet, have already been released, or are being managed externally), resulting in errors such as trying to read from a closed file. This approach ties resource management to memory management (specifically object management), so if there are no memory leaks (no object leaks), there are no resource leaks. RAII works naturally for heap-managed resources, not only stack-managed resources, and is composable: resources held by objects in arbitrarily complicated relationships (a complicated object graph) are released transparently simply by object destruction (so long as this is done properly!).

RAII is the standard resource management approach in C++, but is little-used outside C++, despite its appeal, because it works poorly with modern automatic memory management, specifically tracing garbage collection: RAII ties resource management to memory management, but these have significant differences. Firstly, because resources are expensive, it is desirable to release them promptly, so objects holding resources should be destroyed as soon as they become garbage (are no longer in use). Object destruction is prompt in deterministic memory management, such as in C++ (stack-allocated objects are destroyed on stack unwind, heap-allocated objects are destroyed manually via calling delete or automatically using unique_ptr) or in deterministic reference-counting (where objects are destroyed immediately when their reference count falls to 0), and thus RAII works well in these situations. However, most modern automatic memory management is non-deterministic, making no guarantees that objects will be destroyed promptly or even at all! This is because it is cheaper to leave some garbage allocated than to precisely collect each object immediately on its becoming garbage. Secondly, releasing resources during object destruction means that an object must have a finalizer (in deterministic memory management known as a destructor) – the object cannot simply be deallocated – which significantly complicates and slows garbage collection.

When multiple objects rely on a single resource, resource management can be complicated.

A fundamental question is whether a "has a" relationship is one of owning another object (object composition), or viewing another object (object aggregation). A common case is when two objects are chained, as in the pipe and filter pattern, the delegation pattern, the decorator pattern, or the adapter pattern. If the second object (which is not used directly) holds a resource, is the first object (which is used directly) responsible for managing the resource? This is generally answered identically to whether the first object owns the second object: if so, then the owning object is also responsible for resource management ("having a resource" is transitive), while if not, then it is not. Further, a single object may "have" several other objects, owning some and viewing others.

Both cases are commonly found, and conventions differ. Having objects that use resources indirectly be responsible for the resource (composition) provides encapsulation (one only needs the object that clients use, without separate objects for the resources), but results in considerable complexity, particularly when a resource is shared by multiple objects or objects have complex relationships. If only the object that directly uses the resource is responsible for the resource (aggregation), relationships between other objects that use the resources can be ignored, but there is no encapsulation (beyond the directly using object): the resource must be managed directly, and might not be available to the indirectly using object (if it has been released separately).

Implementation-wise, in object composition, if using the dispose pattern, the owning object thus will also have a dispose method, which in turn calls the dispose methods of owned objects that must be disposed; in RAII this is handled automatically (so long as owned objects are themselves automatically destroyed: in C++ if they are a value or a unique_ptr, but not a raw pointer: see pointer ownership). In object aggregation, nothing needs to be done by the viewing object, as it is not responsible for the resource.
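The difference between owning and viewing can be sketched with the dispose pattern in Python. `Connection` and `Session` are hypothetical classes, and the method name `close` is chosen here only by analogy with Python file objects.

```python
class Connection:
    """Hypothetical resource-holding object with an idempotent close()."""

    def __init__(self, name, log):
        self.name = name
        self.log = log
        self.closed = False

    def close(self):
        if not self.closed:
            self.closed = True
            self.log.append(f"closed {self.name}")


class Session:
    """Owns one Connection (composition) and views another (aggregation)."""

    def __init__(self, owned, viewed):
        self._owned = owned
        self._viewed = viewed

    def close(self):
        # The owner disposes of what it owns ...
        self._owned.close()
        # ... and leaves the viewed object alone, since some other
        # party is responsible for releasing it.
```

Closing a `Session` therefore closes only the connection it owns; the viewed connection stays open until whoever owns it closes it.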

Both are commonly found. For example, in the Java Class Library, Reader#close() closes the underlying stream, and these can be chained. For example, a BufferedReader may contain an InputStreamReader, which in turn contains a FileInputStream, and calling close on the BufferedReader in turn closes the InputStreamReader, which in turn closes the FileInputStream, which in turn releases the system file resource. Indeed, the object that directly uses the resource can even be anonymous, thanks to encapsulation:

    try (BufferedReader reader = new BufferedReader(new InputStreamReader(new FileInputStream(fileName)))) {
        // Use reader.
    }
    // reader is closed when the try-with-resources block is exited, which closes each of the contained objects in sequence.

However, it is also possible to manage only the object that directly uses the resource, and not use resource management on wrapper objects:

    try (FileInputStream stream = new FileInputStream(fileName)) {
        BufferedReader reader = new BufferedReader(new InputStreamReader(stream));
        // Use reader.
    }
    // stream is closed when the try-with-resources block is exited.
    // reader is no longer usable after stream is closed, but so long as it does not escape the block, this is not a problem.

By contrast, in Python, a csv.reader does not own the file that it is reading, so there is no need (and it is not possible) to close the reader, and instead the file itself must be closed. [8]

    with open(filename) as f:
        r = csv.reader(f)
        # Use r.
    # f is closed when the with-statement is exited, and can no longer be used.
    # Nothing is done to r, but the underlying f is closed, so r cannot be used either.

In .NET, the convention is that only the direct user of a resource is responsible for it: "You should implement IDisposable only if your type uses unmanaged resources directly." [9]

In case of a more complicated object graph, such as multiple objects sharing a resource, or cycles between objects that hold resources, proper resource management can be quite complicated, and exactly the same issues arise as in object finalization (via destructors or finalizers); for example, the lapsed listener problem can occur and cause resource leaks if using the observer pattern (and observers hold resources). Various mechanisms exist to allow greater control of resource management. For example, in the Google Closure Library, the goog.Disposable class provides a registerDisposable method to register other objects to be disposed with this object, together with various lower-level instance and class methods to manage disposal.

In structured programming, stack resource management is done simply by nesting code sufficiently to handle all cases. This requires only a single return at the end of the code, and can result in heavily nested code if many resources must be acquired, which is considered an anti-pattern by some – the Arrow Anti Pattern, [10] due to the triangular shape from the successive nesting.

One other approach, which allows early return but consolidates cleanup in one place, is to have a single exit return of a function, preceded by cleanup code, and to use goto to jump to the cleanup before exit. This is infrequently seen in modern code, but occurs in some uses of C.

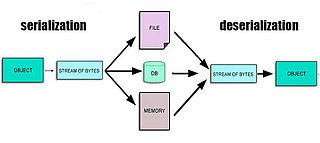
