Related Research Articles

In telecommunications, asynchronous communication is transmission of data, generally without the use of an external clock signal, where data can be transmitted intermittently rather than in a steady stream. Any timing required to recover data from the communication symbols is encoded within the symbols.

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer and for this reason it is the smallest addressable unit of memory in many computer architectures. To disambiguate arbitrarily sized bytes from the common 8-bit definition, network protocol documents such as the Internet Protocol refer to an 8-bit byte as an octet. Those bits in an octet are usually counted with numbering from 0 to 7 or 7 to 0 depending on the bit endianness.

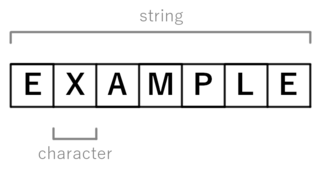

In computer programming, a string is traditionally a sequence of characters, either as a literal constant or as some kind of variable. The latter may allow its elements to be mutated and the length changed, or it may be fixed. A string is generally considered as a data type and is often implemented as an array data structure of bytes that stores a sequence of elements, typically characters, using some character encoding. String may also denote more general arrays or other sequence data types and structures.

UTF-8 is a character encoding standard used for electronic communication. Defined by the Unicode Standard, the name is derived from Unicode Transformation Format – 8-bit.

In data transmission and telecommunications, bit stuffing is the insertion of non-information bits into data. Stuffed bits should not be confused with overhead bits.
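
As an illustration, the sketch below assumes the HDLC-style rule, which the summary above does not name: a 0 is inserted after every run of five consecutive 1s, so the payload can never contain six 1s in a row. The function names `stuff_bits` and `unstuff_bits` are hypothetical, and bits are modelled as a string of `'0'` and `'1'` characters for readability.

```python
def stuff_bits(bits: str, run: int = 5) -> str:
    """Insert a '0' after every `run` consecutive '1's (assumed HDLC-style rule)."""
    out = []
    ones = 0
    for b in bits:
        out.append(b)
        if b == "1":
            ones += 1
            if ones == run:
                out.append("0")  # the stuffed, non-information bit
                ones = 0
        else:
            ones = 0
    return "".join(out)


def unstuff_bits(bits: str, run: int = 5) -> str:
    """Remove the bit that follows every `run` consecutive '1's.

    Assumes the input was produced by stuff_bits; no error detection is done.
    """
    out = []
    ones = 0
    skip_next = False
    for b in bits:
        if skip_next:
            # This position holds a stuffed bit: drop it.
            skip_next = False
            ones = 0
            continue
        out.append(b)
        if b == "1":
            ones += 1
            if ones == run:
                skip_next = True
        else:
            ones = 0
    return "".join(out)
```

With this rule, `stuff_bits("0111111110")` yields `"01111101110"`, and `unstuff_bits` recovers the original string.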

In mathematics and computing, Fibonacci coding is a universal code which encodes positive integers into binary code words. It is one example of representations of integers based on Fibonacci numbers. Each code word ends with "11" and contains no other instances of "11" before the end.
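
A minimal sketch of the encoding follows, assuming the usual construction: write the integer as a sum of non-consecutive Fibonacci numbers (1, 2, 3, 5, 8, ...), emit one bit per Fibonacci number from smallest to largest, and append a final 1. The names `fib_encode` and `fib_decode_stream` are hypothetical.

```python
from typing import List


def fib_encode(n: int) -> str:
    """Return the Fibonacci code word of a positive integer as a bit string."""
    if n < 1:
        raise ValueError("Fibonacci coding is defined for positive integers only")
    fibs = [1, 2]
    while fibs[-1] <= n:
        fibs.append(fibs[-1] + fibs[-2])
    fibs.pop()  # drop the first Fibonacci number that exceeds n
    bits = ["0"] * len(fibs)
    remainder = n
    # Greedy choice from the largest weight down never picks two adjacent weights.
    for i in range(len(fibs) - 1, -1, -1):
        if fibs[i] <= remainder:
            bits[i] = "1"
            remainder -= fibs[i]
    return "".join(bits) + "1"  # the extra 1 creates the terminating "11"


def fib_decode_stream(bits: str) -> List[int]:
    """Split a concatenation of code words at each "11" and decode the values."""
    values = []
    total, prev = 0, "0"
    weight, next_weight = 1, 2
    for bit in bits:
        if bit == "1" and prev == "1":
            # Second 1 of the "11" terminator: the code word is complete.
            values.append(total)
            total, prev = 0, "0"
            weight, next_weight = 1, 2
            continue
        if bit == "1":
            total += weight
        weight, next_weight = next_weight, weight + next_weight
        prev = bit
    return values
```

Because "11" appears only at the end of a code word, a decoder can find word boundaries in a concatenated stream without separators, as `fib_decode_stream` does.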

A prefix code is a type of code system distinguished by its possession of the "prefix property", which requires that no whole code word in the system be a prefix of any other code word in the system. The property holds trivially for fixed-length codes, so it is a point of consideration only for variable-length codes.
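
The prefix property can be checked mechanically. The sketch below is one possible approach, with the hypothetical name `is_prefix_code`: it sorts the code words and compares neighbours, relying on the fact that in lexicographic order a word that is a prefix of some other word is immediately followed by a word it prefixes.

```python
from typing import Iterable


def is_prefix_code(codewords: Iterable[str]) -> bool:
    """Return True if no code word is a proper prefix of another code word."""
    words = sorted(set(codewords))  # duplicates are ignored
    # Only adjacent pairs need checking after sorting.
    return all(not later.startswith(earlier)
               for earlier, later in zip(words, words[1:]))
```

For example, `{"0", "10", "110", "111"}` passes the check, while `{"0", "01", "11"}` fails because `"0"` is a prefix of `"01"`.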

In telecommunications and computing, bit rate is the number of bits that are conveyed or processed per unit of time.

In abstract algebra, the free monoid on a set is the monoid whose elements are all the finite sequences of zero or more elements from that set, with string concatenation as the monoid operation and with the unique sequence of zero elements, often called the empty string and denoted by ε or λ, as the identity element. The free monoid on a set A is usually denoted A∗. The free semigroup on A is the subsemigroup of A∗ containing all elements except the empty string. It is usually denoted A+.

In telecommunications, 8b/10b is a line code that maps 8-bit words to 10-bit symbols to achieve DC balance and bounded disparity, and at the same time provide enough state changes to allow reasonable clock recovery. This means that the difference between the counts of ones and zeros in a string of at least 20 bits is no more than two, and that there are not more than five ones or zeros in a row. This helps to reduce the demand for the lower bandwidth limit of the channel necessary to transfer the signal.

UTF-1 is a method of transforming ISO/IEC 10646/Unicode into a stream of bytes. Its design does not provide self-synchronization, which makes searching for substrings and error recovery difficult. It reuses the ASCII printing characters for multi-byte encodings, making it unsuited for some uses. UTF-1 is also slow to encode or decode due to its use of division and multiplication by a number which is not a power of 2. Due to these issues, it did not gain acceptance and was quickly replaced by UTF-8.

In telecommunications, 6b/8b is a line code that expands 6-bit codes to 8-bit symbols for the purposes of maintaining DC-balance in a communications system.

In mathematics, in the areas of combinatorics and computer science, a Lyndon word is a nonempty string that is strictly smaller in lexicographic order than all of its rotations. Lyndon words are named after mathematician Roger Lyndon, who investigated them in 1954, calling them standard lexicographic sequences. Anatoly Shirshov introduced Lyndon words in 1953, calling them regular words. Lyndon words are a special case of Hall words; almost all properties of Lyndon words are shared by Hall words.
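
The definition translates directly into a brute-force test. The sketch below, with the hypothetical name `is_lyndon`, compares the word against each of its nontrivial rotations; it takes quadratic time and is meant to mirror the definition rather than to be efficient.

```python
def is_lyndon(word: str) -> bool:
    """Return True if `word` is nonempty and strictly smaller than every
    nontrivial rotation of itself in lexicographic order."""
    if not word:
        return False
    return all(word < word[i:] + word[:i] for i in range(1, len(word)))
```

Under this test `"aab"` is a Lyndon word, whereas `"aba"` (larger than its rotation `"aab"`) and `"abab"` (equal to one of its rotations) are not.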

In coding theory, a variable-length code is a code which maps source symbols to a variable number of bits. The equivalent concept in computer science is the bit string.

In data networking and transmission, 64b/66b is a line code that transforms 64-bit data to 66-bit line code to provide enough state changes to allow reasonable clock recovery and alignment of the data stream at the receiver. It was defined by the IEEE 802.3 working group as part of the IEEE 802.3ae-2002 amendment which introduced 10 Gbit/s Ethernet. At the time 64b/66b was deployed, it allowed 10 Gb Ethernet to be transmitted with the same lasers used by SONET OC-192, rather than requiring the 12.5 Gbit/s lasers that were not expected to be available for several years.

In computer networks, a syncword, sync character, sync sequence or preamble is used to synchronize a data transmission by indicating the end of header information and the start of data. The syncword is a known sequence of data used to identify the start of a frame, and is also called reference signal or midamble in wireless communications.

Combinatorics on words is a fairly new field of mathematics, branching from combinatorics, which focuses on the study of words and formal languages. The subject looks at letters or symbols, and the sequences they form. Combinatorics on words affects various areas of mathematical study, including algebra and computer science. There has been a wide range of contributions to the field. Some of the first work was on square-free words by Axel Thue in the early 1900s. He and colleagues observed patterns within words and tried to explain them. As time went on, combinatorics on words became useful in the study of algorithms and coding. It led to developments in abstract algebra and helped answer open questions.

In coding theory, the Sardinas–Patterson algorithm is a classical algorithm for determining in polynomial time whether a given variable-length code is uniquely decodable, named after August Albert Sardinas and George W. Patterson, who published it in 1953. The algorithm carries out a systematic search for a string which admits two different decompositions into codewords. As Knuth reports, the algorithm was rediscovered about ten years later in 1963 by Floyd, despite the fact that it was at the time already well known in coding theory.
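
The search described above can be sketched as follows, assuming the standard "dangling suffix" formulation: start from the suffixes left over when one code word is a proper prefix of another, then repeatedly strip code words from suffixes and suffixes from code words. The code is not uniquely decodable exactly when some reachable suffix is itself a code word. The names `is_uniquely_decodable` and `quotient` are hypothetical.

```python
from typing import Iterable, Set


def is_uniquely_decodable(code: Iterable[str]) -> bool:
    """Sardinas-Patterson style test on a finite set of code words."""
    codewords = set(code)
    if "" in codewords:
        return False  # the empty word can be inserted anywhere

    def quotient(prefixes: Set[str], words: Set[str]) -> Set[str]:
        # Nonempty remainders left after removing a proper prefix.
        return {w[len(p):] for p in prefixes for w in words
                if len(w) > len(p) and w.startswith(p)}

    pending = quotient(codewords, codewords)  # initial dangling suffixes
    seen: Set[str] = set()
    while pending:
        suffix = pending.pop()
        if suffix in codewords:
            return False  # two different decompositions exist
        seen.add(suffix)
        successors = quotient(codewords, {suffix}) | quotient({suffix}, codewords)
        pending |= successors - seen
    return True
```

Every dangling suffix is a suffix of some code word, so only finitely many can occur and the loop terminates. For instance, `{"0", "01", "10"}` is rejected because `"010"` parses both as `0·10` and as `01·0`.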

In computer science, the complexity function of a word or string is the function that counts the number of distinct factors of that string. More generally, the complexity function of a formal language counts the number of distinct words of given length.
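
For a finite word, the count can be computed directly. The sketch below assumes the usual convention that the complexity function takes a length n and returns the number of distinct factors (contiguous substrings) of that length; `factor_complexity` is a hypothetical name.

```python
def factor_complexity(word: str, n: int) -> int:
    """Number of distinct factors of length n in a finite word."""
    if n < 0:
        raise ValueError("factor length must be non-negative")
    # Collect every window of length n; the set removes repeats.
    return len({word[i:i + n] for i in range(len(word) - n + 1)})
```

For the word `"abab"` this gives 2 for n = 1, 2 and 3 (the factors are `a, b`, then `ab, ba`, then `aba, bab`) and 1 for n = 4.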

In mathematics and computer science, a morphic word or substitutive word is an infinite sequence of symbols which is constructed from a particular class of endomorphism of a free monoid.
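
As a concrete illustration, chosen here and not taken from the summary above, the Thue–Morse word arises from the morphism 0 → 01, 1 → 10 applied repeatedly to the seed 0. Each iterate is a prefix of the next, so the iterates converge to an infinite word. The names `iterate_morphism` and `THUE_MORSE` are hypothetical.

```python
from typing import Dict


def iterate_morphism(rules: Dict[str, str], seed: str, steps: int) -> str:
    """Apply a letter-to-word substitution `steps` times, starting from `seed`."""
    word = seed
    for _ in range(steps):
        # A morphism acts letter by letter and concatenates the images.
        word = "".join(rules[letter] for letter in word)
    return word


# Assumed example morphism: 0 -> 01, 1 -> 10 (generates the Thue-Morse word).
THUE_MORSE = {"0": "01", "1": "10"}
```

Three steps from `"0"` produce `0 → 01 → 0110 → 01101001`, the first eight symbols of the infinite word.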
