Related Research Articles

In mathematical analysis, in particular the subfields of convex analysis and optimization, a proper convex function is an extended real-valued convex function with a non-empty domain that never takes on the value −∞ and is not identically equal to +∞.

In mathematics and mathematical optimization, the convex conjugate of a function is a generalization of the Legendre transformation that also applies to non-convex functions. It is also known as the Legendre–Fenchel transformation, Fenchel transformation, or Fenchel conjugate. In particular, it allows for a far-reaching generalization of Lagrangian duality.
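As a rough numerical illustration (not taken from the source article), the conjugate of f is commonly defined by f*(y) = sup over x of (x·y − f(x)). The sketch below approximates that supremum by a maximum over a finite grid for the one-dimensional function f(x) = x²/2, whose conjugate is known in closed form to be f*(y) = y²/2. The names `conjugate_on_grid`, `half_square`, and `GRID`, and the grid bounds, are assumptions made for this example.

```python
def conjugate_on_grid(f, y, xs):
    """Approximate f*(y) = sup_x (x*y - f(x)) by a maximum over the grid xs."""
    return max(x * y - f(x) for x in xs)


def half_square(x):
    """Example function f(x) = x^2 / 2."""
    return 0.5 * x * x


# Hypothetical grid on [-10, 10] with step 0.001; the true supremum must be
# attained inside the grid for the approximation to be meaningful.
GRID = [i / 1000.0 for i in range(-10000, 10001)]

for y in (-2.0, 0.0, 1.5):
    approx = conjugate_on_grid(half_square, y, GRID)
    exact = 0.5 * y * y  # closed form, valid for this particular f only
    print(y, approx, exact)
```

A grid maximum only bounds the supremum from below, so this is a sanity check rather than a general method.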

In convex analysis and the calculus of variations, both branches of mathematics, a pseudoconvex function is a function that behaves like a convex function with respect to finding its local minima, but need not actually be convex. Informally, a differentiable function is pseudoconvex if it is increasing in any direction where it has a positive directional derivative. The property must hold throughout the function's domain, not only for nearby points.
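A minimal sketch of this idea, assuming the usual one-dimensional formulation: a differentiable f is pseudoconvex if f'(x)·(y − x) ≥ 0 implies f(y) ≥ f(x) for all x and y. The example function f(x) = x + x³ and the helper names below are illustrative choices, not taken from the source article. The function passes the implication on a sample grid yet fails a midpoint convexity test.

```python
def f(x):
    """Example function: pseudoconvex on the real line but not convex."""
    return x + x ** 3


def df(x):
    """Derivative of f; strictly positive everywhere."""
    return 1 + 3 * x ** 2


def pseudoconvex_on_grid(func, dfunc, xs):
    """Check: dfunc(x)*(y - x) >= 0 implies func(y) >= func(x), for x, y in xs."""
    return all(
        func(y) >= func(x)
        for x in xs
        for y in xs
        if dfunc(x) * (y - x) >= 0
    )


def midpoint_convex(func, a, b):
    """Check the convexity inequality at the midpoint of a and b."""
    return func(0.5 * (a + b)) <= 0.5 * (func(a) + func(b))


GRID = [i / 10.0 for i in range(-30, 31)]  # hypothetical sample points

print(pseudoconvex_on_grid(f, df, GRID))
print(midpoint_convex(f, -2.0, 0.0))
```

Passing the grid check does not prove pseudoconvexity; it only fails to find a counterexample among the sampled points.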

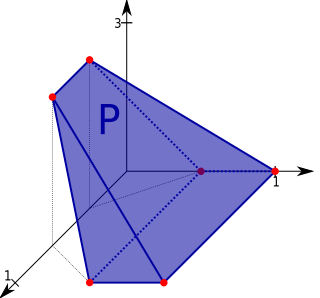

Convex analysis is the branch of mathematics devoted to the study of properties of convex functions and convex sets, often with applications in convex minimization, a subdomain of optimization theory.

In mathematics, the subderivative generalizes the derivative to convex functions that are not necessarily differentiable. The set of subderivatives at a point is called the subdifferential at that point. Subderivatives arise in convex analysis, the study of convex functions, often in connection with convex optimization.
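To make the definition concrete, a number g is a subderivative of a convex function f at x0 when f(x) ≥ f(x0) + g·(x − x0) for every x. The sketch below tests that inequality on a finite grid for f(x) = |x| at x0 = 0, where the subdifferential is known to be the interval [−1, 1]. The helper name `is_subgradient_on_grid` and the grid are assumptions made for this example.

```python
def is_subgradient_on_grid(f, x0, g, xs, tol=1e-12):
    """Check f(x) >= f(x0) + g*(x - x0) at every grid point x in xs."""
    return all(f(x) >= f(x0) + g * (x - x0) - tol for x in xs)


# Hypothetical grid on [-5, 5] with step 0.01.
GRID = [i / 100.0 for i in range(-500, 501)]

# Slopes inside [-1, 1] satisfy the inequality for abs at 0; 1.2 does not.
for g in (-1.0, 0.3, 1.0, 1.2):
    print(g, is_subgradient_on_grid(abs, 0.0, g, GRID))
```

The grid test is a necessary condition only: a slope that fails is certainly not a subderivative, while a slope that passes has merely not been refuted on the sampled points.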

In mathematics, the Caristi fixed-point theorem generalizes the Banach fixed-point theorem for maps of a complete metric space into itself. Caristi's fixed-point theorem modifies the ε-variational principle of Ekeland. The conclusion of Caristi's theorem is equivalent to metric completeness, as proved by Weston (1977). The original result is due to the mathematicians James Caristi and William Arthur Kirk.

A set-valued function is a mathematical function that maps elements from one set, the domain of the function, to subsets of another set. Set-valued functions are used in a variety of mathematical fields, including optimization, control theory and game theory.

In mathematics, the hypograph or subgraph of a function is the set of points lying on or below its graph. A related definition is that of such a function's epigraph, which is the set of points on or above the function's graph.
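The two sets can be written as simple membership tests: a point (x, t) lies in the epigraph of f when t ≥ f(x) and in the hypograph when t ≤ f(x). The sketch below uses f(x) = x² as an illustrative function; the names `in_epigraph`, `in_hypograph`, and `square` are assumptions made for this example.

```python
def in_epigraph(f, x, t):
    """True if the point (x, t) lies on or above the graph of f."""
    return t >= f(x)


def in_hypograph(f, x, t):
    """True if the point (x, t) lies on or below the graph of f."""
    return t <= f(x)


def square(x):
    return x * x


# (1, 2) is above the graph, (1, 0.5) is below it, and (1, 1) is on it,
# so the last point belongs to both sets.
for point in ((1.0, 2.0), (1.0, 0.5), (1.0, 1.0)):
    x, t = point
    print(point, in_epigraph(square, x, t), in_hypograph(square, x, t))
```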

In mathematical analysis, Ekeland's variational principle, discovered by Ivar Ekeland, is a theorem that asserts that there exist nearly optimal solutions to some optimization problems.

Roger Jean-Baptiste Robert Wets is a "pioneer" in stochastic programming and a leader in variational analysis who publishes as Roger J-B Wets. His research, expositions, graduate students, and his collaboration with R. Tyrrell Rockafellar have had a profound influence on optimization theory, computations, and applications. Since 2009, Wets has been a distinguished research professor at the mathematics department of the University of California, Davis.

Ralph Tyrrell Rockafellar is an American mathematician and one of the leading scholars in optimization theory and related fields of analysis and combinatorics. He is the author of four major books including the landmark text "Convex Analysis" (1970), which has been cited more than 27,000 times according to Google Scholar and remains the standard reference on the subject, and "Variational Analysis" for which the authors received the Frederick W. Lanchester Prize from the Institute for Operations Research and the Management Sciences (INFORMS).

The Shapley–Folkman lemma is a result in convex geometry that describes the Minkowski addition of sets in a vector space. It is named after mathematicians Lloyd Shapley and Jon Folkman, but was first published by the economist Ross M. Starr.

Convexity is a geometric property with a variety of applications in economics. Informally, an economic phenomenon is convex when "intermediates are better than extremes". For example, an economic agent with convex preferences prefers combinations of goods over having a lot of any one sort of good; this represents a kind of diminishing marginal utility of having more of the same good.

Robert Ralph Phelps was an American mathematician who was known for his contributions to analysis, particularly to functional analysis and measure theory. He was a professor of mathematics at the University of Washington from 1962 until his death.

Ivar I. Ekeland is a French mathematician of Norwegian descent. Ekeland has written influential monographs and textbooks on nonlinear functional analysis, the calculus of variations, and mathematical economics, as well as popular books on mathematics, which have been published in French, English, and other languages. Ekeland is known as the author of Ekeland's variational principle and for his use of the Shapley–Folkman lemma in optimization theory. He has contributed to the periodic solutions of Hamiltonian systems and particularly to the theory of Kreĭn indices for linear systems. Ekeland is cited in the credits of Steven Spielberg's 1993 movie Jurassic Park as an inspiration of the fictional chaos theory specialist Ian Malcolm appearing in Michael Crichton's 1990 novel Jurassic Park.

In convex analysis, a branch of mathematics, the effective domain is an extension of the domain of a function, defined for functions that take values in the extended real number line [−∞, +∞].
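A minimal sketch, assuming the convention used for convex (minimization) problems, in which the effective domain of f is the set of points where f(x) < +∞. The example function, equal to −log(x) for x > 0 and +∞ elsewhere, and the helper names are illustrative assumptions.

```python
import math


def neg_log(x):
    """Extended real-valued function: -log(x) for x > 0, +infinity otherwise."""
    return -math.log(x) if x > 0 else math.inf


def in_effective_domain(f, x):
    """True if f(x) < +infinity (convention for convex functions)."""
    return f(x) < math.inf


# Only strictly positive points belong to the effective domain of neg_log.
for x in (-1.0, 0.0, 0.5, 2.0):
    print(x, in_effective_domain(neg_log, x))
```

Assigning +∞ outside the set where a function is naturally defined lets it be treated as a function on the whole real line while the effective domain recovers the original set.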

Jean Jacques Moreau was a French mathematician and mechanician. He normally published under the name J. J. Moreau.

Robert Ronald Jensen is an American mathematician, specializing in nonlinear partial differential equations with applications to physics, engineering, game theory, and finance.

Adrian Stephen Lewis is a British-Canadian mathematician, specializing in variational analysis and nonsmooth optimization.

In mathematics, the Clarke generalized derivatives are types of generalized derivatives that allow for the differentiation of nonsmooth functions. The Clarke derivatives were introduced by Francis Clarke in 1975.

References

- Rockafellar, R. Tyrrell; Wets, Roger J.-B. (26 June 2009). Variational Analysis. Grundlehren der mathematischen Wissenschaften. Vol. 317. Berlin New York: Springer Science & Business Media. ISBN 9783642024313. OCLC 883392544. https://doi.org/10.1007/978-3-642-02431-3

- Ekeland, Ivar; Témam, Roger (1999). Convex Analysis and Variational Problems. SIAM. https://doi.org/10.1137/1.9781611971088

- Borwein, Jonathan M.; Zhu, Qiji J. (2005). Techniques of Variational Analysis. Springer. https://doi.org/10.1007/0-387-28271-8